AWS Glue is a serverless data integration service that allows you to process and integrate data coming from different data sources at scale. AWS Glue 5.0, the latest version of AWS Glue for Apache Spark jobs, provides a performance-optimized Apache Spark 3.5 runtime experience for batch and stream processing. With AWS Glue 5.0, you get improved performance, enhanced security, support for the next generation of Amazon SageMaker, and more. AWS Glue 5.0 enables you to develop, run, and scale your data integration workloads and get insights faster.

AWS Glue accommodates various development preferences through multiple job creation approaches. For developers who prefer direct coding, Python or Scala development is available using the AWS Glue ETL library.

Building production-ready data platforms requires robust development processes and continuous integration and delivery (CI/CD) pipelines. To support diverse development needs, whether on local machines, Docker containers on Amazon Elastic Compute Cloud (Amazon EC2), or other environments, AWS provides an official AWS Glue Docker image through the Amazon ECR Public Gallery. The image enables developers to work efficiently in their preferred environment while using the AWS Glue ETL library.

In this post, we show how to develop and test AWS Glue 5.0 jobs locally using a Docker container. This post is an updated version of the post Develop and test AWS Glue version 3.0 and 4.0 jobs locally using a Docker container, and uses AWS Glue 5.0.

Available Docker images

The following Docker images are available on the Amazon ECR Public Gallery:

AWS Glue version 5.0: public.ecr.aws/glue/aws-glue-libs:5

AWS Glue Docker images are compatible with both x86_64 and arm64.

In this post, we use public.ecr.aws/glue/aws-glue-libs:5 and run the container on a local machine (Mac, Windows, or Linux). This container image has been tested for AWS Glue 5.0 Spark jobs. The image contains the following:

To set up your container, you pull the image from the ECR Public Gallery and then run the container. We demonstrate how to run your container with the following methods, depending on your requirements:

spark-submit

REPL shell (pyspark)

pytest

Visual Studio Code

Prerequisites

Before you start, make sure that Docker is installed and the Docker daemon is running. For installation instructions, see the Docker documentation for Mac, Windows, or Linux. Also make sure that you have at least 7 GB of disk space for the image on the host running Docker.
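The prerequisites above can be partly checked from a script. The following is a minimal sketch, not an official tool: the helper names docker_cli_available and has_free_space are hypothetical, and the check assumes the Docker image store lives on the filesystem you pass in. It only confirms that a docker executable is on PATH and that enough disk space is free. It does not verify that the Docker daemon is running.

```python
import shutil


def docker_cli_available() -> bool:
    """Return True if a `docker` executable is found on PATH."""
    return shutil.which("docker") is not None


def has_free_space(path: str, required_gb: float = 7.0) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024 ** 3


if __name__ == "__main__":
    # Assumption: "/" is on the same filesystem that Docker uses for images.
    print("docker CLI found:", docker_cli_available())
    print("at least 7 GB free:", has_free_space("/"))
```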

Configure AWS credentials

To enable AWS API calls from the container, set up your AWS credentials with the following steps:

Create an AWS named profile.

Open cmd on Windows or a terminal on Mac/Linux, and run the following command:

PROFILE_NAME="profile_name"

In the following sections, we use this AWS named profile.
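Before mounting ~/.aws into the container, it can help to confirm that the named profile actually exists. The sketch below is one way to do that with the Python standard library. The function name profile_exists and its aws_dir parameter are illustrative and not part of any AWS tool. It relies on the standard file layout, where the credentials file uses [name] sections and the config file uses [profile name] sections.

```python
import configparser
from pathlib import Path


def profile_exists(profile_name: str, aws_dir: Path = Path.home() / ".aws") -> bool:
    """Return True if `profile_name` is defined in the credentials or config file."""
    # credentials uses [name]; config uses [profile name], except for [default].
    candidates = {
        "credentials": profile_name,
        "config": profile_name if profile_name == "default" else f"profile {profile_name}",
    }
    for filename, section in candidates.items():
        parser = configparser.ConfigParser(interpolation=None)
        parser.read(aws_dir / filename)  # a missing file is silently skipped
        if parser.has_section(section):
            return True
    return False


if __name__ == "__main__":
    print("profile found:", profile_exists("profile_name"))
```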

Pull the image from the ECR Public Gallery

If you're running Docker on Windows, choose the Docker icon (right-click) and choose Switch to Linux containers before pulling the image.

Run the following command to pull the image from the ECR Public Gallery:

docker pull public.ecr.aws/glue/aws-glue-libs:5

Run the container

Now you can run a container using this image. You can choose any of the following methods based on your requirements.

spark-submit

You can run an AWS Glue job script by running the spark-submit command on the container.

Write your job script (sample.py in the following example) and save it under the /local_path_to_workspace/src/ directory using the following commands:

$ WORKSPACE_LOCATION=/local_path_to_workspace

$ SCRIPT_FILE_NAME=sample.py

$ mkdir -p ${WORKSPACE_LOCATION}/src

$ vim ${WORKSPACE_LOCATION}/src/${SCRIPT_FILE_NAME}

These variables are used in the following docker run command. The sample code (sample.py) used in the spark-submit command is included in the appendix at the end of this post.

Run the following command to run the spark-submit command on the container to submit a new Spark application:

$ docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_spark_submit \
    public.ecr.aws/glue/aws-glue-libs:5 \
    spark-submit /home/hadoop/workspace/src/$SCRIPT_FILE_NAME
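If you launch the container from a script (for example, in a CI job), the same command can be assembled as an argument list. This is a minimal sketch under that assumption. The function name build_spark_submit_command is hypothetical, and the arguments mirror the docker run command above rather than any AWS-provided API.

```python
import os
import shlex


def build_spark_submit_command(workspace: str, profile: str, script: str,
                               image: str = "public.ecr.aws/glue/aws-glue-libs:5") -> list[str]:
    """Build the docker run argument list that submits src/<script> with spark-submit."""
    aws_dir = os.path.expanduser("~/.aws")
    return [
        "docker", "run", "-it", "--rm",
        "-v", f"{aws_dir}:/home/hadoop/.aws",           # share AWS credentials
        "-v", f"{workspace}:/home/hadoop/workspace/",   # share the job scripts
        "-e", f"AWS_PROFILE={profile}",
        "--name", "glue5_spark_submit",
        image,
        "spark-submit", f"/home/hadoop/workspace/src/{script}",
    ]


if __name__ == "__main__":
    cmd = build_spark_submit_command("/local_path_to_workspace", "profile_name", "sample.py")
    # Print the command only; pass `cmd` to subprocess.run(cmd, check=True) to start the container.
    print(shlex.join(cmd))
```

Building a list instead of a single string avoids shell quoting problems when the workspace path contains spaces.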

REPL shell (pyspark)

You can run a REPL (read-eval-print loop) shell for interactive development. Run the following command to run the pyspark command on the container to start the REPL shell:

$ docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_pyspark \
    public.ecr.aws/glue/aws-glue-libs:5 \
    pyspark

You will see the following output:

Python 3.11.6 (main, Jan  9 2025, 00:00:00) [GCC 11.4.1 20230605 (Red Hat 11.4.1-2)] on linux
Type "help", "copyright", "credits" or "license" for more information.
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.5.2-amzn-1
      /_/

Using Python version 3.11.6 (main, Jan  9 2025 00:00:00)
Spark context Web UI available at None
Spark context available as 'sc' (master = local[*], app id = local-1740643079929).
SparkSession available as 'spark'.
>>>

With this REPL shell, you can code and test interactively.

pytest

For unit testing, you can use pytest for AWS Glue Spark job scripts.

Run the following commands for preparation:

$ WORKSPACE_LOCATION=/local_path_to_workspace
$ SCRIPT_FILE_NAME=sample.py
$ UNIT_TEST_FILE_NAME=test_sample.py
$ mkdir -p ${WORKSPACE_LOCATION}/tests
$ vim ${WORKSPACE_LOCATION}/tests/${UNIT_TEST_FILE_NAME}

Now let's invoke pytest using docker run:

$ docker run -i --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    --workdir /home/hadoop/workspace \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_pytest \
    public.ecr.aws/glue/aws-glue-libs:5 \
    -c "python3 -m pytest --disable-warnings"

When pytest finishes executing unit tests, your output will look something like the following:

============================= test session starts ==============================
platform linux -- Python 3.11.6, pytest-8.3.4, pluggy-1.5.0
rootdir: /home/hadoop/workspace
plugins: integration-mark-0.2.0
collected 1 item

tests/test_sample.py .                                                   [100%]

======================== 1 passed, 1 warning in 34.28s =========================

Visual Studio Code

To set up the container with Visual Studio Code, complete the following steps:

Install Visual Studio Code.

Install Python.

Install Dev Containers.

Open the workspace folder in Visual Studio Code.

Press Ctrl+Shift+P (Windows/Linux) or Cmd+Shift+P (Mac).

Enter Preferences: Open Workspace Settings (JSON).

Press Enter.

Enter the following JSON and save it:

{
    "python.defaultInterpreterPath": "/usr/bin/python3.11",
    "python.analysis.extraPaths": [
        "/usr/lib/spark/python/lib/py4j-0.10.9.7-src.zip:/usr/lib/spark/python/:/usr/lib/spark/python/lib/"
    ]
}
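Workspace settings opened through Preferences: Open Workspace Settings (JSON) are stored in .vscode/settings.json inside the workspace folder, so the same settings can also be written by a script. The following is a minimal sketch of that idea. The function name write_workspace_settings is hypothetical, and it assumes any existing settings file is plain JSON without comments.

```python
import json
from pathlib import Path

# The same keys and values as the JSON shown in the steps above.
GLUE_VSCODE_SETTINGS = {
    "python.defaultInterpreterPath": "/usr/bin/python3.11",
    "python.analysis.extraPaths": [
        "/usr/lib/spark/python/lib/py4j-0.10.9.7-src.zip:/usr/lib/spark/python/:/usr/lib/spark/python/lib/",
    ],
}


def write_workspace_settings(workspace: Path) -> Path:
    """Merge the Glue settings into <workspace>/.vscode/settings.json and return its path."""
    settings_path = workspace / ".vscode" / "settings.json"
    settings_path.parent.mkdir(parents=True, exist_ok=True)
    existing = {}
    if settings_path.exists():
        # Assumption: the existing file is strict JSON (no comments or trailing commas).
        existing = json.loads(settings_path.read_text())
    existing.update(GLUE_VSCODE_SETTINGS)
    settings_path.write_text(json.dumps(existing, indent=4) + "\n")
    return settings_path
```

Merging instead of overwriting keeps any unrelated workspace settings you already have.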

Now you're ready to set up the container.

Run the Docker container:

$ docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_pyspark \
    public.ecr.aws/glue/aws-glue-libs:5 \
    pyspark

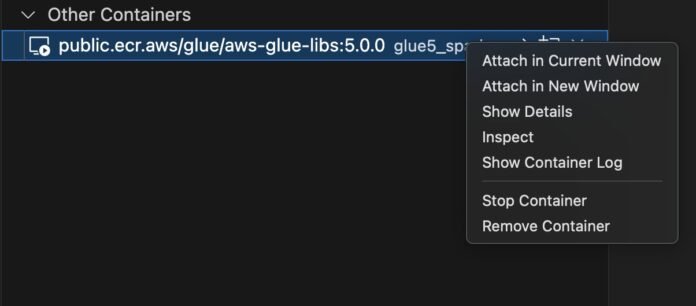

Start Visual Studio Code.

Choose Remote Explorer in the navigation pane.

Choose the container public.ecr.aws/glue/aws-glue-libs:5 (right-click) and choose Attach in Current Window.

If the following dialog appears, choose Got it.

Open /home/hadoop/workspace/.

Create an AWS Glue PySpark script and choose Run.

You should see the successful run of the AWS Glue PySpark script.

Changes between the AWS Glue 4.0 and AWS Glue 5.0 Docker image

The following are major changes between the AWS Glue 4.0 and AWS Glue 5.0 Docker images:

In AWS Glue 5.0, there is a single container image for both batch and streaming jobs. This differs from AWS Glue 4.0, where there was one image for batch and another for streaming.

In AWS Glue 5.0, the default user name of the container is hadoop. In AWS Glue 4.0, the default user name was glue_user.

In AWS Glue 5.0, several additional libraries, including JupyterLab and Livy, have been removed from the image. You can manually install them.

In AWS Glue 5.0, all of the Iceberg, Hudi, and Delta libraries are pre-loaded by default, and the environment variable DATALAKE_FORMATS is no longer needed. Until AWS Glue 4.0, the environment variable DATALAKE_FORMATS was used to specify whether the specific table format is loaded.

The preceding list is specific to the Docker image. To learn more about AWS Glue 5.0 updates, see Introducing AWS Glue 5.0 for Apache Spark and Migrating AWS Glue for Spark jobs to AWS Glue version 5.0.
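If you keep docker run arguments for the AWS Glue 4.0 image in scripts, two of the changes listed above can be applied mechanically. The following is a rough sketch, not a migration tool. The function name migrate_docker_args is hypothetical, and it assumes the 4.0 commands mounted paths under /home/glue_user/ (the home directory of the former default user). It does not change the image name, which you still need to update yourself.

```python
def migrate_docker_args(args: list[str]) -> list[str]:
    """Adapt a Glue 4.0 docker run argument list to the Glue 5.0 image conventions."""
    migrated = []
    skip_next = False
    for i, arg in enumerate(args):
        if skip_next:
            # This is the DATALAKE_FORMATS=... value that followed a dropped "-e".
            skip_next = False
            continue
        # DATALAKE_FORMATS is no longer needed in 5.0, so drop "-e DATALAKE_FORMATS=...".
        if arg == "-e" and i + 1 < len(args) and args[i + 1].startswith("DATALAKE_FORMATS="):
            skip_next = True
            continue
        # Assumption: 4.0 mounts targeted /home/glue_user/; 5.0 uses /home/hadoop/.
        migrated.append(arg.replace("/home/glue_user/", "/home/hadoop/"))
    return migrated
```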

Considerations

Keep in mind that the following features are not supported when using the AWS Glue container image to develop job scripts locally:

Conclusion

In this post, we explored how the AWS Glue 5.0 Docker images provide a flexible foundation for developing and testing AWS Glue job scripts in your preferred environment. These images, readily available in the Amazon ECR Public Gallery, streamline the development process by offering a consistent, portable environment for AWS Glue development.

To learn more about how to build an end-to-end development pipeline, see End-to-end development lifecycle for data engineers to build a data integration pipeline using AWS Glue. We encourage you to explore these capabilities and share your experiences with the AWS community.

Appendix A: AWS Glue job sample codes for testing

This appendix introduces three different scripts as AWS Glue job sample codes for testing purposes. You can use any of them in the tutorial.

The following sample.py code uses the AWS Glue ETL library with an Amazon Simple Storage Service (Amazon S3) API call. The code requires Amazon S3 permissions in AWS Identity and Access Management (IAM). You need to grant the IAM-managed policy arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess or an IAM custom policy that allows you to make ListBucket and GetObject API calls for the S3 path.

import sys
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.job import Job
from awsglue.utils import getResolvedOptions


class GluePythonSampleTest:
    def __init__(self):
        # JOB_NAME is optional so that the script also runs without job arguments
        params = []
        if '--JOB_NAME' in sys.argv:
            params.append('JOB_NAME')
        args = getResolvedOptions(sys.argv, params)

        self.context = GlueContext(SparkContext.getOrCreate())
        self.job = Job(self.context)

        if 'JOB_NAME' in args:
            jobname = args['JOB_NAME']
        else:
            jobname = "test"
        self.job.init(jobname, args)

    def run(self):
        dyf = read_json(self.context, "s3://awsglue-datasets/examples/us-legislators/all/persons.json")
        dyf.printSchema()

        self.job.commit()


def read_json(glue_context, path):
    # Read JSON files under the given S3 path into a DynamicFrame
    dynamicframe = glue_context.create_dynamic_frame.from_options(
        connection_type='s3',
        connection_options={
            'paths': [path],
            'recurse': True
        },
        format='json'
    )
    return dynamicframe


if __name__ == '__main__':
    GluePythonSampleTest().run()
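For the custom policy option mentioned before the sample, a policy document only needs the two actions that the script uses. The following is a minimal sketch that builds such a document for the sample dataset path. The function name build_s3_read_policy is hypothetical, and you should review the resulting policy against your own security requirements before attaching it.

```python
import json


def build_s3_read_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy document allowing ListBucket and GetObject on an S3 path."""
    prefix = prefix.strip("/")
    object_arn = f"arn:aws:s3:::{bucket}/{prefix}/*" if prefix else f"arn:aws:s3:::{bucket}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket itself
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # GetObject applies to the objects under the prefix
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": object_arn,
            },
        ],
    }


if __name__ == "__main__":
    policy = build_s3_read_policy("awsglue-datasets", "examples/us-legislators/all")
    print(json.dumps(policy, indent=2))
```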

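The following test_sample.py code is a sample for a unit test of sample.py:

import pytest
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.job import Job
from awsglue.utils import getResolvedOptions
import sys
from src import sample


@pytest.fixture(scope="module", autouse=True)
def glue_context():
    # Simulate the --JOB_NAME argument that AWS Glue passes to a job
    sys.argv.append('--JOB_NAME')
    sys.argv.append('test_count')

    args = getResolvedOptions(sys.argv, ['JOB_NAME'])
    context = GlueContext(SparkContext.getOrCreate())
    job = Job(context)
    job.init(args['JOB_NAME'], args)

    # Hand the GlueContext to the tests that request this fixture
    yield context


# Assumption: the test body is not shown in this post. This minimal check only
# verifies that sample.read_json returns a non-empty DynamicFrame.
def test_read_json(glue_context):
    dyf = sample.read_json(glue_context, "s3://awsglue-datasets/examples/us-legislators/all/persons.json")
    assert dyf.count() > 0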

Appendix B: Adding JDBC drivers and Java libraries

To add a JDBC driver not currently available in the container, you can create a new directory under your workspace with the JAR files you need and mount the directory to /opt/spark/jars/ in the docker run command. JAR files found under /opt/spark/jars/ within the container are automatically added to the Spark classpath and will be available for use during the job run.

For example, you can use the following docker run command to add JDBC driver jars to a PySpark REPL shell:

$ docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    -v $WORKSPACE_LOCATION/jars/:/opt/spark/jars/ \
    --workdir /home/hadoop/workspace \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_jdbc \
    public.ecr.aws/glue/aws-glue-libs:5 \
    pyspark

As highlighted earlier, the customJdbcDriverS3Path connection option can't be used to import a custom JDBC driver from Amazon S3 in AWS Glue container images.
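Because only the JAR files in the mounted directory are picked up, it can be useful to list them before starting the container. The following is a small sketch of such a check. The function name list_jars is hypothetical, and the example path assumes the jars/ directory under the workspace used in the docker run command above.

```python
from pathlib import Path


def list_jars(jars_dir: Path) -> list[str]:
    """Return the sorted names of *.jar files directly under `jars_dir`."""
    if not jars_dir.is_dir():
        return []
    return sorted(p.name for p in jars_dir.glob("*.jar") if p.is_file())


if __name__ == "__main__":
    # Assumption: the workspace is /local_path_to_workspace, as in the earlier examples.
    for name in list_jars(Path("/local_path_to_workspace/jars")):
        print(name)
```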

Appendix C: Adding Livy and JupyterLab

The AWS Glue 5.0 container image doesn't have Livy installed by default. You can create a new container image extending the AWS Glue 5.0 container image as the base. The following Dockerfile demonstrates how you can extend the Docker image to include additional components you need to enhance your development and testing experience.

To get started, create a directory on your workstation and place the Dockerfile.livy_jupyter file in the directory:

$ mkdir -p $WORKSPACE_LOCATION/jupyterlab/

$ cd $WORKSPACE_LOCATION/jupyterlab/

$ vim Dockerfile.livy_jupyter

The next code is Dockerfile.livy_jupyter:

FROM public.ecr.aws/glue/aws-glue-libs:5 AS glue-base

ENV LIVY_SERVER_JAVA_OPTS="--add-opens java.base/java.lang.invoke=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-opens=java.base/sun.nio.ch=ALL-UNNAMED --add-opens=java.base/sun.nio.cs=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED"

# Download Livy
ADD --chown=hadoop:hadoop https://dlcdn.apache.org/incubator/livy/0.8.0-incubating/apache-livy-0.8.0-incubating_2.12-bin.zip ./

# Install Livy
RUN unzip apache-livy-0.8.0-incubating_2.12-bin.zip && \
    rm apache-livy-0.8.0-incubating_2.12-bin.zip && \
    mv apache-livy-0.8.0-incubating_2.12-bin livy && \
    mkdir -p livy/logs

# Configure Livy (each heredoc has its own RUN so that EOF stays alone on its line)
RUN cat <<EOF >> livy/conf/livy.conf
livy.server.host = 0.0.0.0
livy.server.port = 8998
livy.spark.master = local
livy.repl.enable-hive-context = true
livy.spark.scala-version = 2.12
EOF

RUN cat <<EOF >> livy/conf/log4j.properties
log4j.rootCategory=INFO,console
log4j.appender.console=org.apache.log4j.ConsoleAppender
log4j.appender.console.target=System.err
log4j.appender.console.layout=org.apache.log4j.PatternLayout
log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n
log4j.logger.org.eclipse.jetty=WARN
EOF

# Switching to root user temporarily to install dev dependency packages
USER root
RUN dnf update -y && dnf install -y krb5-devel gcc python3.11-devel
USER hadoop

# Install SparkMagic and JupyterLab
RUN export PATH=$HOME/.local/bin:$HOME/livy/bin/:$PATH && \
    printf "numpy<2\nIPython<=7.14.0\n" > /tmp/constraint.txt && \
    pip3.11 --no-cache-dir install --constraint /tmp/constraint.txt --user pytest boto==2.49.0 jupyterlab==3.6.8 IPython==7.14.0 ipykernel==5.5.6 ipywidgets==7.7.2 sparkmagic==0.21.0 jupyterlab_widgets==1.1.11 && \
    jupyter-kernelspec install --user $(pip3.11 --no-cache-dir show sparkmagic | grep Location | cut -d" " -f2)/sparkmagic/kernels/sparkkernel && \
    jupyter-kernelspec install --user $(pip3.11 --no-cache-dir show sparkmagic | grep Location | cut -d" " -f2)/sparkmagic/kernels/pysparkkernel && \
    jupyter server extension enable --user --py sparkmagic && \
    cat <<EOF >> /home/hadoop/.local/bin/entrypoint.sh
#!/usr/bin/env bash
mkdir -p /home/hadoop/workspace/
livy-server start
sleep 5
jupyter lab --no-browser --ip=0.0.0.0 --allow-root --ServerApp.root_dir=/home/hadoop/workspace/ --ServerApp.token='' --ServerApp.password=''
EOF

# Setup Entrypoint script
RUN chmod +x /home/hadoop/.local/bin/entrypoint.sh

# Add default SparkMagic Config
ADD --chown=hadoop:hadoop https://raw.githubusercontent.com/jupyter-incubator/sparkmagic/refs/heads/master/sparkmagic/example_config.json .sparkmagic/config.json

# Update PATH var
ENV PATH=/home/hadoop/.local/bin:/home/hadoop/livy/bin/:$PATH

ENTRYPOINT ["/home/hadoop/.local/bin/entrypoint.sh"]

Run the docker build command to build the image:

docker build \
    -t glue_v5_livy \
    --file $WORKSPACE_LOCATION/jupyterlab/Dockerfile.livy_jupyter \
    $WORKSPACE_LOCATION/jupyterlab/

When the image build is complete, you can use the following docker run command to start the newly built image:

docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    -p 8998:8998 \
    -p 8888:8888 \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_jupyter \
    glue_v5_livy
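After the container starts, Livy and JupyterLab need a few seconds before they accept connections. The following sketch checks whether the two published ports (8998 for Livy and 8888 for JupyterLab) are reachable from the host. The function name is_port_open is hypothetical. A successful TCP connection only shows that something is listening, not that the service is fully initialized.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for name, port in (("Livy", 8998), ("JupyterLab", 8888)):
        print(f"{name} on localhost:{port} reachable:", is_port_open("localhost", port))
```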

Appendix D: Adding additional Python libraries

In this section, we discuss adding additional Python libraries and installing Python packages using pip.

Local Python libraries

To add local Python libraries, place them under a directory and assign the path to $EXTRA_PYTHON_PACKAGE_LOCATION:

$ docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    -v $EXTRA_PYTHON_PACKAGE_LOCATION:/home/hadoop/workspace/extra_python_path/ \
    --workdir /home/hadoop/workspace \
    -e AWS_PROFILE=$PROFILE_NAME \
    --name glue5_pylib \
    public.ecr.aws/glue/aws-glue-libs:5 \
    -c 'export PYTHONPATH=/home/hadoop/workspace/extra_python_path/:$PYTHONPATH; pyspark'

To validate that the path has been added to PYTHONPATH, you can check for its existence in sys.path:

Python 3.11.6 (main, Jan  9 2025, 00:00:00) [GCC 11.4.1 20230605 (Red Hat 11.4.1-2)] on linux
Type "help", "copyright", "credits" or "license" for more information.
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.5.2-amzn-1
      /_/

Using Python version 3.11.6 (main, Jan  9 2025 00:00:00)
Spark context Web UI available at None
Spark context available as 'sc' (master = local[*], app id = local-1740719582296).
SparkSession available as 'spark'.
>>> import sys
>>> "/home/hadoop/workspace/extra_python_path" in sys.path
True
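A plain membership test like the one above depends on the exact string stored in sys.path, for example whether it carries a trailing slash. If you want a check that tolerates such differences, one option is to normalize both sides first. The following is a minimal sketch; the helper name is_on_sys_path is hypothetical.

```python
import os
import sys


def is_on_sys_path(directory: str) -> bool:
    """Return True if `directory` matches a sys.path entry after path normalization."""
    target = os.path.normpath(directory)
    # Empty entries (the current directory placeholder) are skipped.
    return any(os.path.normpath(entry) == target for entry in sys.path if entry)


if __name__ == "__main__":
    print(is_on_sys_path("/home/hadoop/workspace/extra_python_path"))
```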

Installing Python packages using pip

To install packages from PyPI (or any other artifact repository) using pip, you can use the following approach:

docker run -it --rm \
    -v ~/.aws:/home/hadoop/.aws \
    -v $WORKSPACE_LOCATION:/home/hadoop/workspace/ \
    --workdir /home/hadoop/workspace \
    -e AWS_PROFILE=$PROFILE_NAME \
    -e SCRIPT_FILE_NAME=$SCRIPT_FILE_NAME \
    --name glue5_pylib \
    public.ecr.aws/glue/aws-glue-libs:5 \
    -c 'pip3 install snowflake==1.0.5; spark-submit /home/hadoop/workspace/src/$SCRIPT_FILE_NAME'
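Because the package is installed each time the container starts, your job script may want to confirm which version it actually received. The following sketch uses the standard library's importlib.metadata for that. The helper name installed_version is hypothetical, and the package name in the example is the one from the command above.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("snowflake version:", installed_version("snowflake"))
```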

About the Authors

Subramanya Vajiraya is a Sr. Cloud Engineer (ETL) at AWS Sydney specialized in AWS Glue. He is passionate about helping customers solve issues related to their ETL workload and implementing scalable data processing and analytics pipelines on AWS. Outside of work, he enjoys going on bike rides and taking long walks with his dog Ollie.


Noritaka Sekiyama is a Principal Big Data Architect on the AWS Glue team. He works based in Tokyo, Japan. He is responsible for building software artifacts to help customers. In his spare time, he enjoys cycling with his road bike.
