Google Quantum AI’s mission is to build best-in-class quantum computing for otherwise unsolvable problems. For decades, the quantum and security communities have also known that large-scale quantum computers will, at some point in the future, likely be able to break many of today’s secure public key cryptography algorithms, such as Rivest–Shamir–Adleman (RSA). Google has long worked with the U.S. National Institute of Standards and Technology (NIST) and others in government, industry, and academia to develop and transition to post-quantum cryptography (PQC), which is expected to be resistant to quantum computing attacks. As quantum computing technology continues to advance, ongoing multi-stakeholder collaboration and action on PQC is critical.

To plan for the transition from today’s cryptosystems to an era of PQC, it is important that the size and performance of a future quantum computer that could likely break current cryptography algorithms be carefully characterized. Yesterday, we published a preprint demonstrating that 2048-bit RSA encryption could theoretically be broken by a quantum computer with 1 million noisy qubits running for one week. This is a 20-fold decrease in the number of qubits from our previous estimate, published in 2019. Notably, quantum computers with relevant error rates currently have on the order of only 100 to 1000 qubits, and NIST recently released standard PQC algorithms that are expected to be resistant to future large-scale quantum computers. However, this new result does underscore the importance of migrating to these standards in line with NIST-recommended timelines.

Estimated resources for factoring have been steadily decreasing

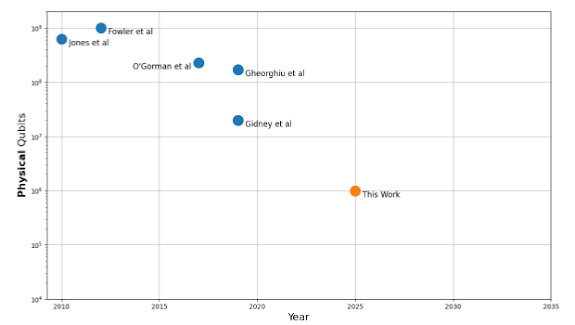

Quantum computers break RSA by factoring numbers, using Shor’s algorithm. Since Peter Shor published this algorithm in 1994, the estimated number of qubits needed to run it has steadily decreased. For example, in 2012, it was estimated that a 2048-bit RSA key could be broken by a quantum computer with a billion physical qubits. In 2019, using the same physical assumptions (which consider qubits with a slightly lower error rate than Google Quantum AI’s current quantum computers), the estimate was lowered to 20 million physical qubits.
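To make the link between factoring and Shor’s algorithm concrete, here is a minimal classical sketch of the reduction the algorithm relies on: once the order r of a number a modulo N is known, a factor of N usually follows from a greatest-common-divisor computation. On a quantum computer, only the order-finding step is quantum. In this sketch the hypothetical helper `find_order_classically` stands in for that step using brute force, which is feasible only for toy inputs. The function names and the example inputs are illustrative assumptions and are not taken from the preprint.

```python
from math import gcd


def find_order_classically(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute-force stand-in for the quantum step).

    Assumes gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order(n, a):
    """Try to split n using the order of a modulo n.

    Returns a pair of factors, or None when this choice of a is unlucky
    and another a should be tried.
    """
    common = gcd(a, n)
    if common != 1:
        return common, n // common  # a already shares a factor with n
    r = find_order_classically(a, n)
    if r % 2 == 1:
        return None  # odd order: no square root of 1 to exploit
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a**(r/2) is -1 mod n: only trivial factors result
    p = gcd(half - 1, n)
    return p, n // p


print(factor_via_order(15, 7))  # (3, 5)
```

For a 2048-bit modulus the brute-force loop is hopeless, which is exactly the step a large quantum computer would replace.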

Historical estimates of the number of physical qubits needed to factor 2048-bit RSA integers.
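As a quick sanity check on the figures quoted in the text, the short sketch below computes the reduction factor between consecutive estimates. The labels and the helper name `fold_reductions` are illustrative. The qubit counts are the rounded values stated in this post, not the precise figures from the underlying papers.

```python
# Physical-qubit estimates for factoring a 2048-bit RSA integer, as quoted in the text.
estimates = [
    ("2012", 1_000_000_000),
    ("2019", 20_000_000),
    ("new result", 1_000_000),
]


def fold_reductions(series):
    """Return (earlier, later, factor) for each consecutive pair of estimates."""
    return [
        (a_label, b_label, a_qubits / b_qubits)
        for (a_label, a_qubits), (b_label, b_qubits) in zip(series, series[1:])
    ]


for earlier, later, factor in fold_reductions(estimates):
    print(f"{earlier} -> {later}: {factor:.0f}x fewer physical qubits")
```

With these rounded inputs, the 2012 to 2019 step is a 50-fold reduction and the 2019 to new-result step is the 20-fold reduction described in the post.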

This result represents a 20-fold decrease compared to our estimate from 2019

The reduction in physical qubit count comes from two sources: better algorithms and better error correction. In error correction, the qubits used by the algorithm (“logical qubits”) are redundantly encoded across many physical qubits, so that errors can be detected and corrected.

On the algorithmic side, the key change is to compute an approximate modular exponentiation rather than an exact one. An algorithm for doing this, while using only small work registers, was discovered in 2024 by Chevignard, Fouque, and Schrottenloher. Their algorithm used 1000x more operations than prior work, but we found ways to reduce that overhead to 2x.

On the error correction side, the key change is tripling the storage density of idle logical qubits by adding a second layer of error correction. Normally, more error correction layers mean more overhead, but a good combination was discovered by the Google Quantum AI team in 2023. Another notable error correction improvement is using “magic state cultivation”, proposed by the Google Quantum AI team in 2024, to reduce the workspace required for certain basic quantum operations. These error correction improvements aren’t specific to factoring and also reduce the required resources for other quantum computations, such as chemistry and materials simulation.
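The idea of redundantly encoding one logical unit of information across several physical carriers, so that errors can be detected and corrected, can be illustrated with a classical toy: a three-bit repetition code with majority-vote decoding. This is only an analogy under simplifying assumptions. Quantum error correction has to handle more kinds of errors and cannot simply copy qubits, and the layered scheme described above is far more involved. All function names below are hypothetical.

```python
def encode(bit, copies=3):
    """Encode one logical bit as several identical physical bits."""
    return [bit] * copies


def flip(codeword, positions):
    """Return a copy of the codeword with the bits at the given positions flipped."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(codeword)]


def detect_error(codeword):
    """An error is detected whenever the physical bits disagree."""
    return len(set(codeword)) > 1


def decode(codeword):
    """Majority vote: recovers the logical bit if fewer than half the bits flipped."""
    return 1 if sum(codeword) * 2 > len(codeword) else 0


noisy = flip(encode(1), {0})       # one physical bit is corrupted
print(detect_error(noisy))         # True
print(decode(noisy))               # 1
print(decode(flip(encode(1), {0, 1})))  # 0
```

The last line shows the limit of this toy code: two flips out of three outvote the original value. That is why lower physical error rates or more redundancy are needed, and why improvements in encoding density translate directly into lower physical qubit counts.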

Security implications

NIST recently concluded a PQC competition that resulted in the first set of PQC standards. These algorithms can already be deployed to defend against quantum computers, well before a working cryptographically relevant quantum computer is built.

To assess the security implications of quantum computers, however, it’s instructive to take a closer look at the affected algorithms (see here for a detailed look): RSA and Elliptic Curve Diffie-Hellman. As asymmetric algorithms, they are used for encryption in transit, including encryption for messaging services, as well as for digital signatures (widely used to prove the authenticity of documents or software, e.g. the identity of websites). For asymmetric encryption, in particular encryption in transit, the motivation to migrate to PQC is made more urgent by the fact that an adversary can collect ciphertexts now and decrypt them later, once a quantum computer is available. This is known as a “store now, decrypt later” attack. Google has therefore been encrypting traffic both in Chrome and internally, switching to the standardized version of ML-KEM once it became available. Notably not affected is symmetric cryptography, which is primarily deployed in encryption at rest and to enable some stateless services.

For signatures, things are more complex. Some signature use cases are similarly urgent, e.g., when public keys are fixed in hardware. In general, what stands out about signatures is the higher complexity of the transition, since signature keys are used in many different places and tend to be longer lived than the usually ephemeral encryption keys. Signature keys are therefore harder to replace and much more attractive targets to attack, especially when compute time on a quantum computer is a limited resource. This complexity likewise motivates moving earlier rather than later. To enable this, we have added PQC signature schemes in public preview in Cloud KMS.

The initial public draft of the NIST internal report on the transition to post-quantum cryptography standards states that vulnerable systems should be deprecated after 2030 and disallowed after 2035. Our work highlights the importance of adhering to this recommended timeline.

More from Google on PQC: https://cloud.google.com/security/resources/post-quantum-cryptography?e=48754805