Strange behavior

So: What did they find? Anthropic looked at 10 different behaviors in Claude. One involved the use of different languages. Does Claude have a part that speaks French and another part that speaks Chinese, and so on?

The team found that Claude used components independent of any language to answer a question or solve a problem, and then picked a specific language when it replied. Ask it “What is the opposite of small?” in English, French, and Chinese, and Claude will first use the language-neutral components related to “smallness” and “opposites” to come up with an answer. Only then will it pick a specific language in which to reply. This suggests that large language models can learn things in one language and apply them in other languages.

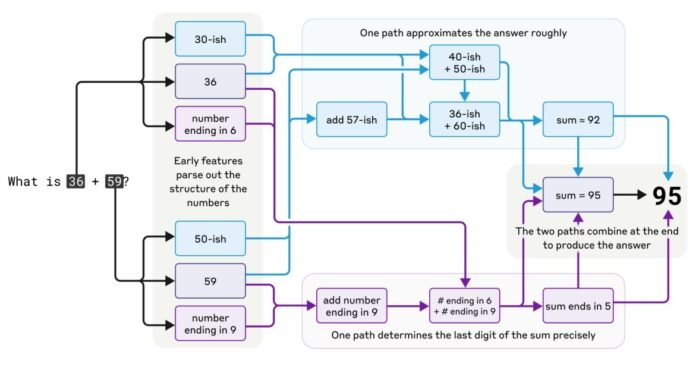

Anthropic also looked at how Claude solved simple math problems. The team found that the model seems to have developed its own internal strategies that are unlike those it will have seen in its training data. Ask Claude to add 36 and 59 and the model will go through a series of odd steps, including first adding a selection of approximate values (add 40ish and 60ish, add 57ish and 36ish). Toward the end of its process, it comes up with the value 92ish. Meanwhile, another sequence of steps focuses on the last digits, 6 and 9, and determines that the answer must end in a 5. Putting that together with 92ish gives the correct answer of 95.
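As a rough illustration of the two parallel pathways described above, here is a toy Python sketch. It is not Claude's actual circuitry, and the function names are hypothetical. Because the model's internal approximation cannot be reproduced, the "rough magnitude" path is simulated by shifting the exact sum by a small, bounded error (set to -3 so that 36 + 59 yields the article's "92ish"). The point is only to show why a fuzzy estimate plus an exact last digit is enough to pin down the answer.

```python
def magnitude_path(a: int, b: int, error: int = -3) -> int:
    """Toy stand-in for the 'rough magnitude' pathway (hypothetical).

    ASSUMPTION: we simulate the model's fuzzy estimate as the exact sum
    shifted by a small error; the default -3 turns 36 + 59 into 92.
    """
    if abs(error) > 4:
        raise ValueError("this toy assumes the estimate is within 4 of the true sum")
    return a + b + error


def last_digit_path(a: int, b: int) -> int:
    """Precise pathway: only the final digits matter (6 + 9 = 15, so 5)."""
    return (a % 10 + b % 10) % 10


def combine(estimate: int, last_digit: int) -> int:
    """Pick the one number within 4 of the estimate that ends in last_digit.

    Nine consecutive integers all end in different digits, so exactly one
    candidate in [estimate - 4, estimate + 4] qualifies.
    """
    candidates = [n for n in range(estimate - 4, estimate + 5) if n % 10 == last_digit]
    return candidates[0]


def two_path_add(a: int, b: int, error: int = -3) -> int:
    """Add two non-negative integers by merging the two toy pathways."""
    return combine(magnitude_path(a, b, error), last_digit_path(a, b))


print(magnitude_path(36, 59))   # 92
print(last_digit_path(36, 59))  # 5
print(two_path_add(36, 59))     # 95
```

The design choice that makes this work: as long as the rough estimate lands within 4 of the true sum, the window around it contains exactly one number with the right final digit, so neither pathway needs to be exact on its own.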

And yet if you then ask Claude how it worked that out, it will say something like: “I added the ones (6+9=15), carried the 1, then added the 10s (3+5+1=9), resulting in 95.” In other words, it gives you a common approach found everywhere online rather than what it actually did. Yep! LLMs are weird. (And not to be trusted.)
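For contrast, the method Claude claimed to use is ordinary column addition with carries. The minimal Python sketch below (the function name is hypothetical) logs each column step, and for 36 + 59 its log matches the explanation Claude gave, even though the interpretability work suggests this is not how the model actually reached 95.

```python
def schoolbook_add(a: int, b: int) -> tuple[int, list[str]]:
    """Column addition with carries for non-negative integers.

    Returns the sum plus a human-readable log of each column, written the
    way Claude phrased its explanation (the carry is shown only when nonzero).
    """
    steps: list[str] = []
    result, carry, place = 0, 0, 1
    while a > 0 or b > 0 or carry:
        da, db = a % 10, b % 10          # current column's digits
        column = da + db + carry
        carry_text = f"+{carry}" if carry else ""
        steps.append(f"{da}+{db}{carry_text}={column}")
        result += (column % 10) * place  # keep the ones digit of the column
        carry = column // 10             # carry the rest to the next column
        a, b, place = a // 10, b // 10, place * 10
    return result, steps


total, log = schoolbook_add(36, 59)
print(total)  # 95
print(log)    # ['6+9=15', '3+5+1=9']
```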

The steps that Claude 3.5 Haiku used to solve a simple math problem were not what Anthropic expected, and they're not the steps Claude claimed it took either.

ANTHROPIC

This is clear evidence that large language models will give reasons for what they do that do not necessarily reflect what they actually did. But this is true for people too, says Batson: “You ask somebody, ‘Why did you do that?’ And they’re like, ‘Um, I guess it’s because I was…’ You know, maybe not. Maybe they were just hungry and that’s why they did it.”

Biran thinks this finding is especially interesting. Many researchers study the behavior of large language models by asking them to explain their actions. But that might be a risky approach, he says: “As models continue getting stronger, they must be equipped with better guardrails. I believe, and this work also shows, that relying only on model outputs is not enough.”

A third task that Anthropic studied was writing poems. The researchers wanted to know if the model really did just wing it, predicting one word at a time. Instead they found that Claude somehow looked ahead, picking the word at the end of the next line several words in advance.

For example, when Claude was given the prompt “A rhyming couplet: He saw a carrot and had to grab it,” the model responded, “His hunger was like a starving rabbit.” But using their microscope, the researchers saw that Claude had already hit upon the word “rabbit” when it was processing “grab it.” It then seemed to write the next line with that ending already in place.