
Enterprises need to know whether the models that power their applications and agents work in real-life scenarios. This type of evaluation can be complex because it is hard to predict specific scenarios. A revamped version of the RewardBench benchmark aims to give organizations a better idea of a model's real-life performance.

The Allen Institute for AI (Ai2) launched RewardBench 2, an updated version of its reward model benchmark, RewardBench, which it claims provides a more holistic view of model performance and assesses how models align with an enterprise's goals and standards.

Ai2 built RewardBench with classification tasks that measure correlations through inference-time compute and downstream training. RewardBench primarily deals with reward models (RMs), which can act as judges and evaluate LLM outputs. RMs assign a score or a "reward" that guides reinforcement learning from human feedback (RLHF).
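To make the "classification task" framing concrete, here is a minimal sketch of one common way to score a reward model: count how often it rates a preferred response above a rejected one. This is an illustrative assumption, not RewardBench 2's actual scoring procedure. The names `pairwise_accuracy` and `toy_reward`, along with the three example triples, are hypothetical stand-ins.

```python
from typing import Callable, List, Tuple

# A reward model is treated here as any function (prompt, response) -> float.
RewardFn = Callable[[str, str], float]


def pairwise_accuracy(reward: RewardFn, examples: List[Tuple[str, str, str]]) -> float:
    """Fraction of (prompt, chosen, rejected) triples where chosen scores strictly higher."""
    if not examples:
        raise ValueError("examples must be non-empty")
    correct = sum(1 for p, chosen, rejected in examples
                  if reward(p, chosen) > reward(p, rejected))
    return correct / len(examples)


def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical stand-in scorer: counts response words that also appear in the prompt."""
    prompt_words = set(prompt.lower().split())
    return float(sum(1 for w in response.lower().split() if w in prompt_words))


# Invented examples: (prompt, preferred response, rejected response).
EXAMPLES = [
    ("capital of France", "The capital of France is Paris", "I like turtles"),
    ("add two and two", "two and two add up to four", "no idea"),
    ("name a prime", "seven", "a prime example of a name"),
]

accuracy = pairwise_accuracy(toy_reward, EXAMPLES)
print(f"pairwise accuracy: {accuracy:.2f}")
```

The toy scorer gets the third example wrong because it rewards word overlap rather than correctness, which is the kind of failure a reward model benchmark is meant to expose.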

RewardBench 2 is here! We took a long time to learn from our first reward model evaluation tool to make one that is substantially harder and more correlated with both downstream RLHF and inference-time scaling. pic.twitter.com/NGetvNrOQV

– ai2 (@allen_ai) June 2, 2025

Nathan Lambert, a senior research scientist at Ai2, told VentureBeat that the first RewardBench worked as intended when it was launched. However, the model environment evolved rapidly, and so should its benchmarks.

“As reward models became more advanced and use cases more nuanced, we quickly recognized with the community that the first version didn’t fully capture the complexity of real-world human preferences,” he said.

Lambert added that with RewardBench 2, “we set out to improve both the breadth and depth of evaluation, incorporating more diverse, challenging prompts and refining the methodology to better reflect how humans actually judge AI outputs in practice.” He said the second version uses unseen human prompts, has a more challenging scoring setup and covers new domains.

Using evaluations for models that evaluate

While reward models test how well models work, it’s also important that RMs align with company values; otherwise, the fine-tuning and reinforcement learning process can reinforce bad behavior, such as hallucinations, reduce generalization, and score harmful responses too high.

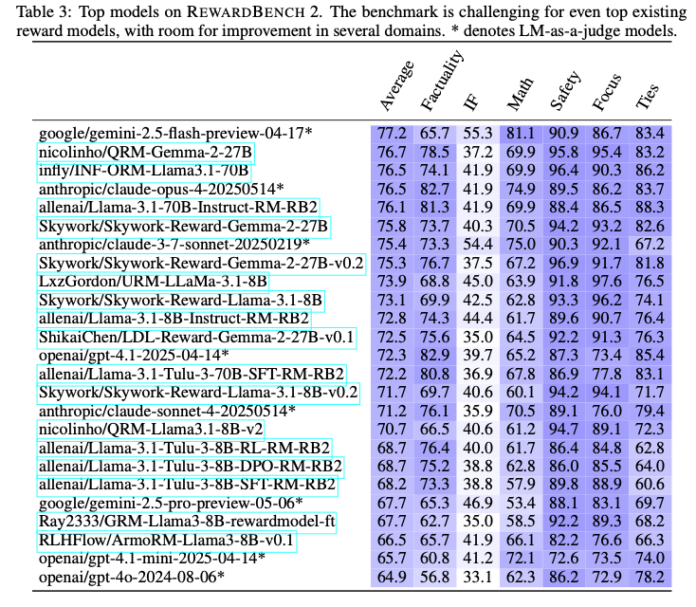

RewardBench 2 covers six different domains: factuality, precise instruction following, math, safety, focus and ties.

“Enterprises should use RewardBench 2 in two different ways depending on their application. If they’re performing RLHF themselves, they should adopt the best practices and datasets from leading models in their own pipelines because reward models need on-policy training recipes (i.e. reward models that mirror the model they’re trying to train with RL). For inference time scaling or data filtering, RewardBench 2 has shown that they can select the best model for their domain and see correlated performance,” Lambert said.
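For readers unfamiliar with the second use case, the sketch below shows what inference-time scaling and data filtering with a reward model can look like in their simplest form: best-of-N selection and threshold filtering. It is an assumption-laden illustration, not Ai2's implementation. `best_of_n`, `filter_by_reward` and `toy_reward` are hypothetical names, and the toy scorer stands in for a real reward model.

```python
from typing import Callable, List

# A reward model is treated here as any function (prompt, response) -> float.
RewardFn = Callable[[str, str], float]


def best_of_n(reward: RewardFn, prompt: str, candidates: List[str]) -> str:
    """Inference-time scaling: sample several responses, keep the highest-scoring one."""
    if not candidates:
        raise ValueError("candidates must be non-empty")
    return max(candidates, key=lambda c: reward(prompt, c))


def filter_by_reward(reward: RewardFn, prompt: str,
                     candidates: List[str], threshold: float) -> List[str]:
    """Data filtering: keep only responses scoring at or above the threshold."""
    return [c for c in candidates if reward(prompt, c) >= threshold]


def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical stand-in scorer: favors responses with a digit, mildly penalizes length."""
    has_digit = any(ch.isdigit() for ch in response)
    return (1.0 if has_digit else 0.0) - 0.01 * len(response.split())


prompt = "What is 2 + 2?"
candidates = ["I am not sure", "The answer is 4", "4"]

best = best_of_n(toy_reward, prompt, candidates)
kept = filter_by_reward(toy_reward, prompt, candidates, threshold=0.5)
print(best)
print(kept)
```

In both functions the reward model is the only judge, so the quality of the selected or retained outputs depends directly on how well that model's scores track real preferences in the target domain.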

Lambert noted that benchmarks like RewardBench offer users a way to evaluate the models they’re choosing based on the “dimensions that matter most to them, rather than relying on a narrow one-size-fits-all score.” He said the idea of performance, which many evaluation methods claim to assess, is very subjective because a good response from a model depends heavily on the context and goals of the user. At the same time, human preferences can be very nuanced.
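One way to act on that advice is to weight per-domain scores by what matters to a given team instead of reading a single aggregate number. The sketch below assumes that approach. The function names, model names, weights and every score in it are invented placeholders, not RewardBench 2 results.

```python
from typing import Dict


def weighted_score(domain_scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean of per-domain scores; domains without a weight count as zero."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(domain_scores.get(d, 0.0) * w for d, w in weights.items()) / total


def pick_model(models: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> str:
    """Return the name of the model with the highest weighted score."""
    return max(models, key=lambda name: weighted_score(models[name], weights))


# Placeholder scores for two hypothetical reward models (NOT real benchmark numbers).
MODELS = {
    "model_a": {"factuality": 0.9, "precise_if": 0.5, "math": 0.5,
                "safety": 0.6, "focus": 0.7, "ties": 0.6},
    "model_b": {"factuality": 0.6, "precise_if": 0.6, "math": 0.8,
                "safety": 0.9, "focus": 0.6, "ties": 0.5},
}

safety_first = pick_model(MODELS, {"safety": 3.0, "factuality": 1.0})
facts_first = pick_model(MODELS, {"factuality": 3.0, "safety": 1.0})
print(safety_first, facts_first)
```

With these made-up numbers, a safety-weighted team and a factuality-weighted team end up choosing different models, which is the point of looking at dimensions rather than one score.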

Ai2 launched the first version of RewardBench in March 2024. At the time, the company said it was the first benchmark and leaderboard for reward models. Since then, several methods for benchmarking and improving RMs have emerged. Researchers at Meta’s FAIR came out with reWordBench. DeepSeek released a new technique called Self-Principled Critique Tuning for smarter and more scalable RMs.

Super excited that our second reward model evaluation is out. It's substantially harder, much cleaner, and well correlated with downstream PPO/BoN sampling.

Happy hillclimbing!

Huge congrats to @saumyamalik44 who led the project with a total commitment to excellence. https://t.co/c0b6rHTXY5

— Nathan Lambert (@natolambert) June 2, 2025

How models performed

Since RewardBench 2 is an updated version of RewardBench, Ai2 tested both existing and newly trained models to see if they continue to rank high. These included a variety of models, such as versions of Gemini, Claude, GPT-4.1, and Llama-3.1, along with datasets and models like Qwen, Skywork, and its own Tulu.

The company found that larger reward models perform best on the benchmark because their base models are stronger. Overall, the strongest-performing models are variants of Llama-3.1 Instruct. In terms of focus and safety, Skywork data “is particularly helpful,” and Tulu did well on factuality.

Ai2 said that while it believes RewardBench 2 “is a step forward in broad, multi-domain accuracy-based evaluation” for reward models, it cautioned that model evaluation should mainly be used as a guide for picking models that best fit an enterprise’s needs.
