Amazon Managed Streaming for Apache Kafka (MSK) Connect is a fully managed, scalable, and highly available service that enables streaming data between Apache Kafka and other data systems. Amazon MSK Connect is built on top of Kafka Connect, an open-source framework that provides a standard way to connect Kafka with external data systems. Kafka Connect supports a variety of connectors, which are used to stream data in and out of Kafka. MSK Connect extends the capabilities of Kafka Connect by providing a managed service with added security features, simple configuration, and automatic scaling, so that businesses can focus on their data streaming needs without the overhead of managing the underlying infrastructure.

In some use cases, you might need to use an MSK cluster in one AWS account while MSK Connect is located in a separate account. In this post, we demonstrate how to create a connector for this use case. At the time of writing, MSK Connect connectors can be created only for MSK clusters that have AWS Identity and Access Management (IAM) role-based authentication or no authentication. We demonstrate how to implement IAM authentication after establishing network connectivity. IAM provides enhanced security measures, making sure your systems are protected against unauthorized access.

Solution overview

The connector can be configured for a variety of purposes, such as sinking data to an Amazon Simple Storage Service (Amazon S3) bucket, tracking changes in a source database, or serving as a migration tool such as MirrorMaker2 on MSK Connect to transfer data from a source cluster to a target cluster that is located in a different account.

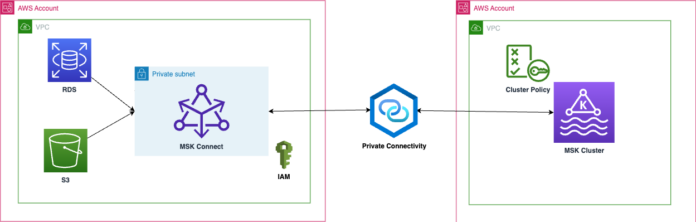

The following diagram illustrates a use case using Debezium and Amazon S3 source connectors.

The following diagram illustrates using S3 Sink and migration to a cross-account failover cluster using a MirrorMaker connector deployed on MSK Connect.

The launch of multi-VPC private connectivity (powered by AWS PrivateLink) and cluster policy support for MSK clusters simplifies the connectivity of Kafka clients to brokers. By enabling this feature on the MSK cluster, you can use the cluster-based policy to manage all access control centrally in one place. In this post, we cover the process of enabling this feature on the source MSK cluster.

We don't fully utilize the multi-VPC connectivity provided by this new feature because it requires you to use different bootstrap URLs with port numbers (14001:3) that aren't supported by MSK Connect as of the writing of this post. Instead, we explore a secure network connectivity solution that uses private connectivity patterns, as detailed in How Goldman Sachs builds cross-account connectivity to their Amazon MSK clusters with AWS PrivateLink.

Connecting to a cross-account MSK cluster from MSK Connect involves the following steps.

Steps to configure the MSK cluster in Account A:

Enable the multi-VPC private connectivity (PrivateLink) feature for the IAM authentication scheme that's enabled for your MSK cluster.

Configure the cluster policy to allow a cross-account connector.

Implement one of the preceding network connectivity patterns according to your use case to establish connectivity with the Account B VPC, and make network changes accordingly.

Steps to configure the MSK connector in Account B:

Create an MSK connector in private subnets using the AWS Command Line Interface (AWS CLI).

Verify the network connectivity from Account A and make network changes accordingly.

Check the destination service to verify the incoming data.

Prerequisites

To follow along with this post, you should have an MSK cluster in one AWS account and MSK Connect in a separate account.

Set up the MSK cluster in Account A:

In this post, we only show the important steps that are required to enable the multi-VPC feature on an MSK cluster:

Create a provisioned MSK cluster in Account A's VPC with the following considerations, which are required for the multi-VPC feature:

Cluster version must be 2.7.1 or higher.

Instance type must be m5.large or higher.

Authentication should be IAM (you must not enable unauthenticated access for this cluster).

After you create the cluster, go to the Networking settings section of your cluster and choose Edit. Then choose Turn on multi-VPC connectivity.

Select IAM role-based authentication and choose Turn on selection.

It might take around 30 minutes to enable. This step is required to enable the cluster policy feature that allows the cross-account connector to access the MSK cluster.

After it has been enabled, scroll down to Security settings and choose Edit cluster policy.

Define your cluster policy and choose Save changes.

The new cluster policy allows for defining a Basic or Advanced cluster policy. The Basic option only allows the CreateVPCConnection, GetBootstrapBrokers, DescribeCluster, and DescribeClusterV2 actions that are required for creating the cross-VPC connectivity to your cluster. However, we have to use Advanced to allow the additional actions that are required by the MSK connector. The policy should be as follows:

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "AWS": "Connector-AccountId"
    },
    "Action": [
      "kafka:CreateVpcConnection",
      "kafka:GetBootstrapBrokers",
      "kafka:DescribeCluster",
      "kafka:DescribeClusterV2",
      "kafka-cluster:Connect",
      "kafka-cluster:DescribeCluster",
      "kafka-cluster:ReadData",
      "kafka-cluster:DescribeTopic",
      "kafka-cluster:WriteData",
      "kafka-cluster:CreateTopic",
      "kafka-cluster:AlterGroup",
      "kafka-cluster:DescribeGroup"
    ],
    "Resource": [
      "arn:aws:kafka:::cluster//",
      "arn:aws:kafka:::topic///",
      "arn:aws:kafka:::group///"
    ]
  }]
}

You might need to modify the preceding permissions to limit access to your resources (topics, groups). You can also restrict access to a specific connector by specifying the connector's IAM role as the principal, or you can specify the account number to allow all connectors in that account.
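As an illustration of narrowing the policy, the following Python sketch generates a cluster policy scoped to one connector role and one topic. The Region, account IDs, cluster name, cluster UUID, role name, and topic name are all hypothetical placeholders, and the ARN layouts are the standard MSK and IAM formats rather than values taken from this post, so check them against your own resources before use.

```python
import json

# Actions copied from the cluster policy shown above.
CONNECTOR_ACTIONS = [
    "kafka:CreateVpcConnection",
    "kafka:GetBootstrapBrokers",
    "kafka:DescribeCluster",
    "kafka:DescribeClusterV2",
    "kafka-cluster:Connect",
    "kafka-cluster:DescribeCluster",
    "kafka-cluster:ReadData",
    "kafka-cluster:DescribeTopic",
    "kafka-cluster:WriteData",
    "kafka-cluster:CreateTopic",
    "kafka-cluster:AlterGroup",
    "kafka-cluster:DescribeGroup",
]


def msk_arns(region, account_id, cluster_name, cluster_uuid, topic="*", group="*"):
    """Return the cluster, topic, and group ARNs for one MSK cluster."""
    base = f"arn:aws:kafka:{region}:{account_id}"
    cluster_path = f"{cluster_name}/{cluster_uuid}"
    return [
        f"{base}:cluster/{cluster_path}",
        f"{base}:topic/{cluster_path}/{topic}",
        f"{base}:group/{cluster_path}/{group}",
    ]


def role_arn(account_id, role_name):
    """Return the IAM role ARN used as the policy principal."""
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def cluster_policy(principal, resources):
    """Build the cluster policy document as a plain dict."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": principal},
            "Action": CONNECTOR_ACTIONS,
            "Resource": resources,
        }],
    }


# Hypothetical values: Account A owns the cluster, Account B owns the connector role.
policy = cluster_policy(
    principal=role_arn("444455556666", "example-connector-role"),
    resources=msk_arns("us-east-1", "111122223333", "example-cluster",
                       "00000000-0000-0000-0000-000000000000", topic="example-topic"),
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the Advanced cluster policy editor. Passing an account ID instead of a role ARN as the principal gives the broader, account-wide form.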

Now the cluster is ready. However, you need to verify the network connectivity between the cross-account connector VPC and the MSK cluster VPC.

If you're using VPC peering or Transit Gateway while connecting to MSK Connect, either from a different account or from the same account, don't configure your connector to reach the peered VPC resources with IPs in the following CIDR ranges (for more details, see Connecting from connectors):

10.99.0.0/16

192.168.0.0/16

172.21.0.0/16
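A quick way to catch this early is to compare the CIDR blocks of the cluster VPC and its subnets against the three ranges above. The following is a minimal sketch using only Python's standard `ipaddress` module; the function name and the sample CIDRs are illustrative, not part of any AWS tooling.

```python
import ipaddress

# The three ranges listed above that connectors should not target.
RANGES_TO_AVOID = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.99.0.0/16", "192.168.0.0/16", "172.21.0.0/16")
]


def conflicting_ranges(cidr_or_ip):
    """Return the listed ranges that overlap the given CIDR block or single IP."""
    network = ipaddress.ip_network(cidr_or_ip, strict=False)
    return [str(r) for r in RANGES_TO_AVOID if network.overlaps(r)]


# Hypothetical subnet CIDRs for the MSK cluster VPC in Account A.
for cidr in ("10.0.1.0/24", "192.168.10.0/24"):
    hits = conflicting_ranges(cidr)
    print(cidr, "conflicts with", hits if hits else "nothing")
```

An empty result means the block is clear of all three ranges. A non-empty result means brokers with addresses in that block would not be reachable from the connector over peering or Transit Gateway.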

In the MSK cluster security group, make sure you allow port 9098 from Account B network resources, and make changes in the subnets according to your network connectivity pattern.

Set up the MSK connector in Account B:

In this section, we demonstrate how to use the S3 Sink connector. However, you can use a different connector according to your use case and make the changes accordingly.

Create an S3 bucket (or use an existing bucket).

Make sure that the VPC that you're using in this account has a security group and private subnets. If your connector for MSK Connect needs access to the internet, refer to Enable internet access for Amazon MSK Connect.

Verify the network connectivity between Account A and Account B by using the telnet command to the broker endpoints with port 9098.
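If telnet isn't installed on the host you're testing from, the same reachability check can be sketched in Python with the standard `socket` module. This only confirms that a TCP connection to port 9098 can be opened; it doesn't test TLS or IAM authentication. The broker hostname in the usage comment is a hypothetical placeholder.

```python
import socket


def can_connect(host, port=9098, timeout=5.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example usage from a host in the Account B VPC (hypothetical broker name):
# for broker in ["b-1.example-cluster.abc123.c2.kafka.us-east-1.amazonaws.com"]:
#     print(broker, "reachable" if can_connect(broker) else "NOT reachable")
```

A False result for every broker usually points to security group rules, route tables, or network ACLs rather than to the connector configuration.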

Create an S3 VPC endpoint.

Create a connector plugin according to your connector plugin provider (Confluent or Lenses). Make a note of the custom plugin Amazon Resource Name (ARN) to use in a later step.

Create an IAM role for your connector to allow access to your S3 bucket and the MSK cluster.

The IAM role's trust relationship should be as follows:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "kafkaconnect.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}

Add the following S3 access policy to your IAM role:

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Action": [
      "s3:ListAllMyBuckets",
      "s3:ListBucket",
      "s3:GetBucketLocation",
      "s3:DeleteObject",
      "s3:PutObject",
      "s3:GetObject",
      "s3:AbortMultipartUpload",
      "s3:ListMultipartUploadParts",
      "s3:ListBucketMultipartUploads"
    ],
    "Resource": [
      "arn:aws:s3:::",
      "arn:aws:s3:::/*"
    ],
    "Condition": {
      "StringEquals": {
        "aws:SourceVpc": "vpc-xxxx"
      }
    }
  }]
}

The following policy contains the actions required by the connector:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "kafka-cluster:Connect",
        "kafka-cluster:DescribeCluster",
        "kafka-cluster:ReadData",
        "kafka-cluster:DescribeTopic",
        "kafka-cluster:WriteData",
        "kafka-cluster:CreateTopic",
        "kafka-cluster:AlterGroup",
        "kafka-cluster:DescribeGroup"
      ],
      "Resource": [
        "arn:aws:kafka:::cluster//",
        "arn:aws:kafka:::topic///",
        "arn:aws:kafka:::group///"
      ]
    }
  ]
}

You might need to modify the preceding permissions to limit access to your resources (topics, groups).

Finally, it's time to create the MSK connector. Because the Amazon MSK console doesn't allow viewing MSK clusters in other accounts, we show you how to use the AWS CLI instead. We also use a basic Amazon S3 configuration for testing purposes. You might need to modify the configuration according to your connector's use case.

Create a connector using the AWS CLI with the following command, supplying the required connector parameters, including Account A's MSK cluster broker endpoints:

aws kafkaconnect create-connector \
--capacity "autoScaling={maxWorkerCount=2,mcuCount=1,minWorkerCount=1,scaleInPolicy={cpuUtilizationPercentage=10},scaleOutPolicy={cpuUtilizationPercentage=80}}" \
--connector-configuration \
"connector.class=io.confluent.connect.s3.S3SinkConnector,\
s3.region=,\
schema.compatibility=NONE,\
flush.size=2,\
tasks.max=1,\
topics=,\
security.protocol=SASL_SSL,\
s3.compression.type=gzip,\
format.class=io.confluent.connect.s3.format.json.JsonFormat,\
sasl.mechanism=AWS_MSK_IAM,\
sasl.jaas.config=software.amazon.msk.auth.iam.IAMLoginModule required,\
sasl.client.callback.handler.class=software.amazon.msk.auth.iam.IAMClientCallbackHandler,\
value.converter=org.apache.kafka.connect.storage.StringConverter,\
storage.class=io.confluent.connect.s3.storage.S3Storage,\
s3.bucket.name=,\
timestamp.extractor=Record,\
key.converter=org.apache.kafka.connect.storage.StringConverter" \
--connector-name "Connector-name" \
--kafka-cluster '{"apacheKafkaCluster": {"bootstrapServers": ":9098","vpc": {"securityGroups": ["sg-0b36a015789f859a3"],"subnets": ["subnet-07950da1ebb8be6d8","subnet-026a729668f3f9728"]}}}' \
--kafka-cluster-client-authentication "authenticationType=IAM" \
--kafka-cluster-encryption-in-transit "encryptionType=TLS" \
--kafka-connect-version "2.7.1" \
--log-delivery workerLogDelivery='{cloudWatchLogs={enabled=true,logGroup=""}}' \
--plugins "customPlugin={customPluginArn=,revision=1}" \
--service-execution-role-arn ""
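Because the command packs many nested values into shorthand strings, it can be easier to assemble the same request as structured data first and review it as JSON. The following Python sketch mirrors the flags above; the top-level key names are our assumption of the camelCase request fields that correspond to each CLI flag, so verify them against the MSK Connect API reference. Every ARN, hostname, bucket, and topic value is a hypothetical placeholder.

```python
import json


def s3_sink_config(region, bucket, topics):
    """Connector configuration from the command above, as a string-to-string map."""
    return {
        "connector.class": "io.confluent.connect.s3.S3SinkConnector",
        "s3.region": region,
        "s3.bucket.name": bucket,
        "topics": topics,
        "tasks.max": "1",
        "flush.size": "2",
        "schema.compatibility": "NONE",
        "s3.compression.type": "gzip",
        "format.class": "io.confluent.connect.s3.format.json.JsonFormat",
        "storage.class": "io.confluent.connect.s3.storage.S3Storage",
        "timestamp.extractor": "Record",
        "key.converter": "org.apache.kafka.connect.storage.StringConverter",
        "value.converter": "org.apache.kafka.connect.storage.StringConverter",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "AWS_MSK_IAM",
        "sasl.jaas.config": "software.amazon.msk.auth.iam.IAMLoginModule required",
        "sasl.client.callback.handler.class":
            "software.amazon.msk.auth.iam.IAMClientCallbackHandler",
    }


def connector_request(name, bootstrap_servers, security_groups, subnets,
                      plugin_arn, role_arn, log_group, config):
    """Assemble the request; top-level key names are assumed, not confirmed here."""
    return {
        "connectorName": name,
        "kafkaConnectVersion": "2.7.1",
        "capacity": {"autoScaling": {
            "maxWorkerCount": 2, "mcuCount": 1, "minWorkerCount": 1,
            "scaleInPolicy": {"cpuUtilizationPercentage": 10},
            "scaleOutPolicy": {"cpuUtilizationPercentage": 80},
        }},
        "connectorConfiguration": dict(config),
        "kafkaCluster": {"apacheKafkaCluster": {
            "bootstrapServers": bootstrap_servers,
            "vpc": {"securityGroups": list(security_groups), "subnets": list(subnets)},
        }},
        "kafkaClusterClientAuthentication": {"authenticationType": "IAM"},
        "kafkaClusterEncryptionInTransit": {"encryptionType": "TLS"},
        "logDelivery": {"workerLogDelivery": {
            "cloudWatchLogs": {"enabled": True, "logGroup": log_group},
        }},
        "plugins": [{"customPlugin": {"customPluginArn": plugin_arn, "revision": 1}}],
        "serviceExecutionRoleArn": role_arn,
    }


# Hypothetical placeholder values throughout.
request = connector_request(
    name="example-s3-sink",
    bootstrap_servers="b-1.example.kafka.us-east-1.amazonaws.com:9098",
    security_groups=["sg-00000000000000000"],
    subnets=["subnet-00000000000000000", "subnet-11111111111111111"],
    plugin_arn="arn:aws:kafkaconnect:us-east-1:444455556666:custom-plugin/example/abc",
    role_arn="arn:aws:iam::444455556666:role/example-connector-role",
    log_group="example-connector-logs",
    config=s3_sink_config("us-east-1", "example-bucket", "example-topic"),
)
print(json.dumps(request, indent=2))
```

Keeping the connector configuration in its own function makes it straightforward to swap in a different connector class while leaving the networking and authentication settings untouched.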

After you create the connector, connect a producer to your topic and insert data into it. In the following code, we use a Kafka client to insert data for testing purposes:

bin/kafka-console-producer.sh --broker-list --producer.config client.properties --topic
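The command refers to a client.properties file that this post doesn't show. As a sketch, assuming the producer uses the same IAM settings as the connector configuration above, the file could be generated as follows. The trailing semicolon on the JAAS entry follows standard JAAS syntax, and we assume the Amazon MSK IAM authentication library is already on the Kafka client's classpath.

```python
from pathlib import Path

# IAM client settings, matching the security entries in the connector configuration.
IAM_CLIENT_PROPERTIES = {
    "security.protocol": "SASL_SSL",
    "sasl.mechanism": "AWS_MSK_IAM",
    # JAAS entries are terminated with a semicolon.
    "sasl.jaas.config": "software.amazon.msk.auth.iam.IAMLoginModule required;",
    "sasl.client.callback.handler.class":
        "software.amazon.msk.auth.iam.IAMClientCallbackHandler",
}


def render_properties(props):
    """Render a dict as Java-style key=value lines."""
    return "".join(f"{key}={value}\n" for key, value in props.items())


def write_client_properties(path="client.properties"):
    """Write the IAM client settings to the given file and return its path."""
    target = Path(path)
    target.write_text(render_properties(IAM_CLIENT_PROPERTIES), encoding="utf-8")
    return target


print(render_properties(IAM_CLIENT_PROPERTIES), end="")
```

Calling `write_client_properties()` in the Kafka installation directory creates the file that the `--producer.config` option expects.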

If everything is set up correctly, you should see the data in your destination S3 bucket. If not, check the troubleshooting tips in the following section.

Troubleshooting tips

After deploying the connector, if it's in the CREATING state on the connector details page, open the Amazon CloudWatch log group specified in your connector creation request. Review the logs for any errors. If no errors are found, wait for the connector to complete its creation process.

Additionally, make sure the IAM roles have their required permissions, and check the security groups and network ACLs (NACLs) for proper connectivity between VPCs.

Clean up

When you're done testing this solution, clean up any unwanted resources to avoid ongoing charges.

Conclusion

In this post, we demonstrated how to create an MSK connector when you need to use an MSK cluster in one AWS account while MSK Connect is located in a separate account. This architecture includes an S3 Sink connector for demonstration purposes, but it can accommodate other types of sink and source connectors. Additionally, this architecture focuses solely on IAM-authenticated connectors. If an unauthenticated connector is desired, the multi-VPC connectivity (PrivateLink) and cluster policy components can be ignored. The remaining process, which involves creating a network connection between the account VPCs, remains the same.

Try out the solution for yourself, and let us know your questions and feedback in the comments section.

Check out more AWS Partners or contact an AWS Representative to learn how we can help accelerate your business.

About the Author

Venkata Sai Mahesh Swargam is a Cloud Engineer at AWS in Hyderabad. He specializes in Amazon MSK and Amazon Kinesis services. Mahesh is dedicated to helping customers by providing technical guidance and solving issues related to their Amazon MSK architectures. In his free time, he enjoys being with family and traveling around the world.
