The Amazon Redshift integration with AWS Lambda provides the ability to create Amazon Redshift Lambda user-defined functions (UDFs). This capability delivers flexibility, enhanced integrations, and security for functions defined in Lambda that can be run from SQL queries. Amazon Redshift Lambda UDFs offer many benefits:

Enhanced integration – You can connect to external services or APIs from within your UDF logic, enabling richer data enrichment and operational workflows.

Multiple Python runtimes – Lambda UDFs benefit from Lambda support for multiple Python runtimes, so you can choose the one that fits your use case. In addition, new versions and security patches are available within a month of their official release.

Independent scaling – Lambda UDFs use Lambda compute resources, so heavy compute or memory-intensive tasks don't impact query performance or resource concurrency within Amazon Redshift.

Isolation and security – You can isolate custom code execution in a separate service boundary. This simplifies maintenance, monitoring, budgeting, and permission management.

Because Lambda UDFs provide these significant advantages in integration, flexibility, scalability, and security, we will be ending support for Python UDFs in Amazon Redshift. We recommend that you migrate your existing Python UDFs to Lambda UDFs by June 30, 2026.

October 30, 2025 – Creation of new Python UDFs will no longer be supported (existing functions can still be invoked)

June 30, 2026 – Execution of existing Python UDFs will be suspended

In this post, we walk you through how to migrate your existing Python UDFs to Lambda UDFs, set up monitoring and cost evaluations, and review key considerations for a smooth transition.

Solution overview

You can create UDFs for tasks such as tokenization, encryption and decryption, or data science functionality like the Levenshtein distance calculation. In this post, we provide examples for customers who have Python UDFs in place, demonstrating how to replace them with Lambda UDFs.

The Levenshtein function, also known as the Levenshtein distance or edit distance, is a string metric that measures the difference between two sequences of characters: the minimum number of single-character insertions, deletions, or substitutions needed to turn one string into the other. Although this functionality was previously implemented as a Python UDF in Amazon Redshift, Lambda provides a more efficient and scalable solution. This post demonstrates how to migrate from Python UDFs to Lambda UDFs for calculating Levenshtein distances.
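To make the metric concrete: the distance between "kitten" and "sitting" is 3 (two substitutions and one insertion). The following Python sketch computes it with the standard dynamic-programming recurrence. Unlike the full table used in the UDF code later in this post, it keeps only two rows of the table. That memory optimization is our own substitution for illustration, and the function name `levenshtein` is hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Return the Levenshtein (edit) distance between a and b.

    Keeps only two rows of the dynamic-programming table, so memory is
    O(len(b)) instead of O(len(a) * len(b)).
    """
    # At the start of row i, prev[j] is the distance between the first
    # i - 1 characters of a and the first j characters of b.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i] + [0] * len(b)
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution
        prev = curr
    return prev[len(b)]


if __name__ == "__main__":
    print(levenshtein("kitten", "sitting"))
    print(levenshtein("flaw", "lawn"))
```

Strings of equal length are not necessarily identical, so an implementation should only short-circuit to 0 when the strings are actually equal.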

Prerequisites

You must have the following:

Prepare the data

To set up our use case, complete the following steps:

On the Amazon Redshift console, choose Query editor v2 under Explorer in the navigation pane.

Connect to your Redshift data warehouse.

Create a table and load data. The following query loads 30,000,000 rows into the customer table:

DROP TABLE IF EXISTS customer;
CREATE TABLE customer
(
  c_customer_sk int4 not null,
  c_customer_id char(16) not null,
  c_current_cdemo_sk int4,
  c_current_hdemo_sk int4,
  c_current_addr_sk int4,
  c_first_shipto_date_sk int4,
  c_first_sales_date_sk int4,
  c_salutation char(10),
  c_first_name char(20),
  c_last_name char(30),
  c_preferred_cust_flag char(1),
  c_birth_day int4,
  c_birth_month int4,
  c_birth_year int4,
  c_birth_country varchar(20),
  c_login char(13),
  c_email_address char(50),
  c_last_review_date_sk int4,
  primary key (c_customer_sk)
) distkey(c_customer_sk);

COPY customer FROM 's3://redshift-downloads/TPC-DS/2.13/3TB/customer/'
IAM_ROLE default GZIP DELIMITER '|' EMPTYASNULL REGION 'us-east-1';

Identify existing Python UDFs

Run the following script to list existing Python UDFs:

SELECT
  p.proname,
  p.pronargs,
  t.typname,
  n.nspname,
  l.lanname,
  pg_get_functiondef(p.oid)
FROM
  pg_proc p,
  pg_language l,
  pg_type t,
  pg_namespace n
WHERE
  p.prolang = l.oid
  AND p.prorettype = t.oid
  AND l.lanname = 'plpythonu'
  AND p.pronamespace = n.oid
  AND nspname NOT IN ('pg_catalog', 'information_schema')
ORDER BY
  proname;

The following is our existing Python UDF definition for Levenshtein distance:

create or replace function fn_levenshtein_distance(a varchar, b varchar) returns integer immutable as
$$
def levenshtein_distance(a, len_a, b, len_b):
    # d[i][j] is the distance between the first i chars of a and the first j chars of b
    d = [[0] * (len_b + 1) for i in range(len_a + 1)]
    for i in range(1, len_a + 1):
        d[i][0] = i
    for j in range(1, len_b + 1):
        d[0][j] = j
    for j in range(1, len_b + 1):
        for i in range(1, len_a + 1):
            if a[i - 1] == b[j - 1]:
                cost = 0
            else:
                cost = 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution
    return d[len_a][len_b]

def distance(a, b):
    len_a, len_b = len(a), len(b)
    # Only identical strings have distance 0; equal length alone is not enough
    if len_a == len_b and a == b:
        return 0
    elif len_a == 0:
        return len_b
    elif len_b == 0:
        return len_a
    else:
        return levenshtein_distance(a, len_a, b, len_b)

return distance(a, b)
$$ language plpythonu;

Convert the Python UDF to a Lambda UDF

You can simplify converting your Python UDF to a Lambda UDF by using Amazon Q Developer, a generative AI-powered assistant. It handles code transformation, packaging, and integration logic, accelerating migration and improving scalability. Integrated with popular developer tools like VS Code, JetBrains, and others, Amazon Q streamlines workflows so teams can modernize analytics using serverless architectures with minimal effort.

Amazon Q Developer code suggestions are based on large language models (LLMs) trained on billions of lines of code, including open source and Amazon code. Always review a code suggestion before accepting it; you might need to edit it to make sure that it does exactly what you intended.

For example, you can give Amazon Q Developer the following prompt:

Convert the @python-udf.py Redshift Python UDF to a Redshift Lambda UDF that batch processes the data in the arguments array in a loop and returns a JSON dump at the end. Use @lambda-context.py as a reference and for additional guidance on Lambda UDFs.

Create a Lambda function

Complete the following steps to create a Lambda function:

On the Lambda console, choose Functions in the navigation pane.

Choose Create function.

Choose Author from scratch.

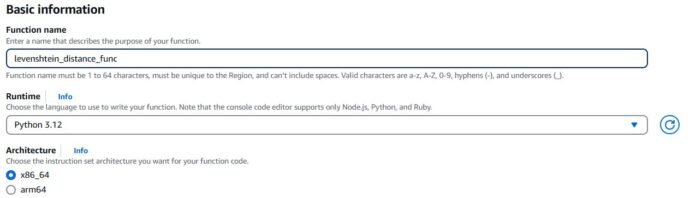

For Function name, enter a custom name (for example, levenshtein_distance_func).

For Runtime, choose your runtime. (The examples in this post are compatible with Python 3.12.)

For Architecture, select your system architecture. (The examples in this post are compatible with x86_64.)

For Execution role, select Create a new role with basic Lambda permissions.

Choose Create function.

Choose Code and add the following code:

import json

def lambda_handler(event, context):
    # Redshift sends one [a, b] argument list per row in event['arguments']
    t1 = event['arguments']
    resp = [None] * len(t1)
    for i, x in enumerate(t1):
        # Rows with a NULL argument return NULL (None)
        if x[0] is not None and x[1] is not None:
            resp[i] = distance(x[0], x[1])
    ret = dict()
    ret['results'] = resp
    return json.dumps(ret)

def levenshtein_distance(a, len_a, b, len_b):
    # d[i][j] is the distance between the first i chars of a and the first j chars of b
    d = [[0] * (len_b + 1) for i in range(len_a + 1)]
    for i in range(1, len_a + 1):
        d[i][0] = i
    for j in range(1, len_b + 1):
        d[0][j] = j
    for j in range(1, len_b + 1):
        for i in range(1, len_a + 1):
            if a[i - 1] == b[j - 1]:
                cost = 0
            else:
                cost = 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution
    return d[len_a][len_b]

def distance(a, b):
    len_a, len_b = len(a), len(b)
    if len_a == len_b and a == b:
        return 0
    elif len_a == 0:
        return len_b
    elif len_b == 0:
        return len_a
    else:
        return levenshtein_distance(a, len_a, b, len_b)

On the Configuration tab, update Timeout to 1 minute.

You can adjust memory to optimize performance. To learn more, see Optimizing Levenshtein User-Defined Function in Amazon Redshift.
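Before connecting the function to Amazon Redshift, you can exercise the handler from the Lambda console with a test event shaped like a Redshift request. The following sketch builds such an event. The helper name `make_redshift_test_event` is hypothetical, and the event is deliberately minimal: it carries `arguments` (one list of argument values per row), which the handler reads, plus `num_records`. A real Redshift request includes additional metadata fields that are omitted here.

```python
import json


def make_redshift_test_event(pairs):
    """Build a minimal test event shaped like a Redshift Lambda UDF request.

    Each (a, b) pair becomes one row; None stands in for a SQL NULL.
    """
    arguments = [[a, b] for a, b in pairs]
    return {"num_records": len(arguments), "arguments": arguments}


if __name__ == "__main__":
    event = make_redshift_test_event([("kitten", "sitting"), ("Jane", None)])
    # Paste this JSON into a Lambda console test event.
    print(json.dumps(event, indent=2))
```

With the handler above, this event should produce a results array of 3 for the first row and null for the row with a missing argument.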

Create an Amazon Redshift IAM role

To allow your Amazon Redshift cluster to invoke the Lambda function, you must set up the proper IAM permissions. Complete the following steps:

Identify the IAM role associated with your Amazon Redshift cluster. If you don't have one, create a new IAM role for Amazon Redshift.

Add the following IAM policy to this role, providing your AWS Region and AWS account number:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "lambda:InvokeFunction",
      "Resource": "arn:aws:lambda:<region>:<account-id>:function:levenshtein_distance_func"
    }
  ]
}
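If you manage this policy as code, a small helper can fill your Region and account number into the function ARN, which follows the format arn:aws:lambda:region:account-id:function:name. The following is a sketch under that assumption; `build_invoke_policy` and its 12-digit account check are illustrative, and 111122223333 is a placeholder account ID.

```python
import json


def build_invoke_policy(region: str, account_id: str, function_name: str) -> dict:
    """Return an IAM policy that allows invoking a single Lambda function."""
    # AWS account IDs are 12-digit numbers; fail early on an obvious typo.
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account ID must be a 12-digit number")
    arn = f"arn:aws:lambda:{region}:{account_id}:function:{function_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "lambda:InvokeFunction", "Resource": arn}
        ],
    }


if __name__ == "__main__":
    # Placeholder Region and account ID; replace with your own values.
    policy = build_invoke_policy("us-east-1", "111122223333", "levenshtein_distance_func")
    print(json.dumps(policy, indent=2))
```

Scoping Resource to a single function ARN, rather than a wildcard, keeps the Redshift role from invoking unrelated Lambda functions.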

Create a Lambda UDF

Run the following script to create your Lambda UDF:

CREATE OR REPLACE EXTERNAL FUNCTION fn_lambda_levenshtein_distance(varchar, varchar)
RETURNS int
STABLE
LAMBDA 'levenshtein_distance_func'
IAM_ROLE default;

Test the solution

To test the solution, run the following script using the Python UDF:

SELECT c_customer_sk, c_customer_id, fn_levenshtein_distance(c_first_name, c_last_name) AS distance
FROM customer
WHERE c_customer_sk IN (1,2,3,4,5,31);

The following table shows our output.

Run the same script using the Lambda UDF:

SELECT c_customer_sk, c_customer_id, fn_lambda_levenshtein_distance(c_first_name, c_last_name) AS distance
FROM customer
WHERE c_customer_sk IN (1,2,3,4,5,31);

The results of both UDFs match.

Replace the Python UDF with the Lambda UDF

You can use the following steps in preproduction for testing:

Revoke access to the Python UDF:

REVOKE EXECUTE ON FUNCTION fn_levenshtein_distance(varchar, varchar) FROM <user_or_role>;

Grant access to the Lambda UDF:

GRANT EXECUTE ON FUNCTION fn_lambda_levenshtein_distance(varchar, varchar) TO <user_or_role>;

After you have fully tested the Lambda UDF, you can drop the Python UDF.

Rename the Lambda UDF fn_lambda_levenshtein_distance to fn_levenshtein_distance so that end-user and application code doesn't need to change:

ALTER FUNCTION fn_lambda_levenshtein_distance(varchar, varchar)
RENAME TO fn_levenshtein_distance;

Validate with the following query:

SELECT c_customer_sk, c_customer_id, fn_levenshtein_distance(c_first_name, c_last_name) AS distance
FROM customer
WHERE c_customer_sk IN (1,2,3,4,5,31);

Cost evaluation

To evaluate the cost of the Lambda UDF, complete the following steps:

Run the following script to create a table from a SELECT query that uses the Lambda UDF:

DROP TABLE IF EXISTS customer_lambda;
CREATE TABLE customer_lambda AS
SELECT c_customer_sk, c_customer_id, fn_levenshtein_distance(c_first_name, c_last_name) AS distance
FROM customer;

You can check the query logs using CloudWatch Logs Insights.

On the CloudWatch console, choose Logs in the navigation pane, then choose Logs Insights.

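Select the log group of your Lambda UDF and use the following query to identify the number of Lambda invocations. Each invocation writes one REPORT line, so the number of matching rows equals the number of invocations.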

fields @timestamp, @message, @logStream, @log
| filter @message like /^REPORT/
| sort @timestamp desc
| limit 10000

Use the following query to find the cost of the Lambda UDF for the time range you selected:

parse @message /Duration:\s*(?<@duration_ms>\d+\.\d+)\s*ms\s*Billed\s*Duration:\s*(?<@billed_duration_ms>\d+)\s*ms\s*Memory\s*Size:\s*(?<@memory_size_mb>\d+)\s*MB/
| filter @message like /REPORT RequestId/
| stats sum(@billed_duration_ms * @memory_size_mb * 1.6279296875e-11 + 2.0e-7) as @cost_dollars_total

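For this example, we used the us-east-1 Region with x86-based functions. In the query, 1.6279296875e-11 is the compute price of $0.00001667 per GB-second converted to dollars per MB-millisecond (divided by 1,024 and by 1,000), and 2.0e-7 is the request charge of $0.20 per million requests. For more details on Lambda pricing by Region and the Free Tier limit, see AWS Lambda pricing.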

Choose Summarize results.

The cost of running this Lambda UDF over 30 million rows was $0.02329.
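To sanity-check the Logs Insights arithmetic offline, the following Python sketch applies the same formula to raw REPORT log lines. The constants are copied from the query above (an x86 compute rate and the per-request charge in us-east-1); if your function runs on arm64 or in another Region, substitute the rates from AWS Lambda pricing. The helper name `lambda_cost` is hypothetical.

```python
import re

# Constants from the Logs Insights query: $0.00001667 per GB-second expressed
# per MB-millisecond (/ 1024 / 1000), plus $0.20 per million requests.
PRICE_PER_MB_MS = 1.6279296875e-11
PRICE_PER_REQUEST = 2.0e-7

REPORT_RE = re.compile(
    r"Billed Duration:\s*(?P<billed_ms>\d+)\s*ms\s*"
    r"Memory Size:\s*(?P<memory_mb>\d+)\s*MB"
)


def lambda_cost(report_lines):
    """Sum the estimated cost of the invocations described by REPORT log lines."""
    total = 0.0
    for line in report_lines:
        match = REPORT_RE.search(line)
        if match is None:
            continue  # skip START/END lines and custom log output
        billed_ms = int(match.group("billed_ms"))
        memory_mb = int(match.group("memory_mb"))
        total += billed_ms * memory_mb * PRICE_PER_MB_MS + PRICE_PER_REQUEST
    return total


if __name__ == "__main__":
    sample = ("REPORT RequestId: example\tDuration: 12.34 ms\tBilled Duration: 13 ms\t"
              "Memory Size: 128 MB\tMax Memory Used: 50 MB")
    print(f"${lambda_cost([sample]):.10f}")
```

Because each invocation is billed separately, larger Redshift batches (fewer invocations for the same rows) reduce the request component of the cost.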

Monitor Lambda UDFs

Monitoring Lambda UDFs involves tracking both the Lambda function's performance and its impact on Redshift query execution. Because UDFs execute outside the warehouse, a dual approach is necessary.

CloudWatch metrics and logs for Lambda functions

CloudWatch provides comprehensive monitoring for Lambda functions, including the following key metrics and features:

Invocations – Tracks the number of times the Lambda function is called, indicating UDF usage frequency

Duration – Measures execution time, helping identify performance bottlenecks

Errors – Counts failed invocations, which is critical for detecting issues in UDF logic

Throttles – Indicates when Lambda limits invocations due to concurrency caps, which can delay query results

Logs – CloudWatch Logs capture detailed execution output, including errors and custom log messages, aiding in debugging

Alarms – Configure alarms for high error rates (for example, Errors > 0) or excessive duration (for example, Duration > 1 second) to receive proactive notifications
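The alarm thresholds above map directly onto CloudWatch alarm definitions for the AWS/Lambda namespace. The following sketch builds keyword arguments for the boto3 CloudWatch `put_metric_alarm` call. The helper name, the one-minute period, and the `TreatMissingData` choice are our own assumptions, and the boto3 call is left commented out so the sketch runs without AWS credentials. Lambda reports Duration in milliseconds, so 1 second becomes a threshold of 1000.

```python
def lambda_alarm_params(function_name, metric_name, threshold, statistic, sns_topic_arn=None):
    """Build keyword arguments for CloudWatch put_metric_alarm on a Lambda metric."""
    params = {
        "AlarmName": f"{function_name}-{metric_name}",
        "Namespace": "AWS/Lambda",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": statistic,
        "Period": 60,                 # evaluate one-minute windows
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no invocations is not an alarm condition
    }
    if sns_topic_arn:
        params["AlarmActions"] = [sns_topic_arn]  # notify, for example, an SNS topic
    return params


if __name__ == "__main__":
    errors_alarm = lambda_alarm_params("levenshtein_distance_func", "Errors", 0.0, "Sum")
    duration_alarm = lambda_alarm_params("levenshtein_distance_func", "Duration", 1000.0, "Maximum")
    # With boto3 installed and credentials configured:
    #   import boto3
    #   boto3.client("cloudwatch").put_metric_alarm(**errors_alarm)
    print(errors_alarm["AlarmName"], duration_alarm["Threshold"])
```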

Redshift query performance

Within Amazon Redshift, system views provide comprehensive insights into Lambda UDF performance and errors:

SYS_QUERY_HISTORY – Identifies queries that have called your Lambda UDFs when you filter on the UDF name in the query_text column. This helps track usage patterns and execution frequency.

SYS_QUERY_DETAIL – Provides granular execution metrics for queries involving Lambda UDFs, helping identify performance bottlenecks at the step level.

Performance aggregation – Generates summary reports of Lambda UDF performance metrics, including execution count, average duration, and maximum duration, to track performance trends over time.

The following table summarizes the available monitoring tools.

| Monitoring tool | Purpose | Key metrics/views |
|---|---|---|
| CloudWatch Metrics | Monitor Lambda function performance | Invocations, Duration, Errors, Throttles |
| CloudWatch Logs | Debug Lambda execution issues | Error messages, custom logs |
| SYS_QUERY_HISTORY | Track Lambda UDF usage patterns | Query execution times, status, user information, query text |
| SYS_QUERY_DETAIL | Analyze Lambda UDF performance | Step-level execution details, resource usage, query plan information |
| Performance summary reports | Track UDF performance trends | Execution count, average/maximum duration, total elapsed time |

Monitoring approach for Lambda UDFs in Amazon Redshift

To analyze individual queries, you can use the following code to track how your Lambda UDFs are being used across your organization:

SELECT * FROM sys_query_history
WHERE query_text LIKE '%your_lambda_udf_name%'
ORDER BY start_time DESC
LIMIT 20;

This helps you do the following:

Identify frequent users

Monitor execution patterns

Track usage trends

Detect unauthorized access

You can also build more comprehensive monitoring by using the query history to track performance metrics at the user level:

SELECT
  usename,
  DATE_TRUNC('day', start_time) AS day,
  COUNT(*) AS query_count,
  AVG(DATEDIFF(microsecond, start_time, end_time))/1000000.0 AS avg_duration_seconds,
  MAX(DATEDIFF(microsecond, start_time, end_time))/1000000.0 AS max_duration_seconds
FROM sys_query_history q
JOIN pg_user u ON q.user_id = u.usesysid
WHERE query_text LIKE '%your_lambda_udf_name%'
  AND user_id > 1
GROUP BY usename, day
ORDER BY usename, query_count DESC;

Additionally, you can generate weekly performance reports using the following aggregation query:

SELECT
  'your_lambda_udf_name' AS function_name,
  COUNT(DISTINCT q.query_id) AS execution_count,
  AVG(DATEDIFF(millisecond, q.start_time, q.end_time)) AS avg_duration_ms,
  MAX(DATEDIFF(millisecond, q.start_time, q.end_time)) AS max_duration_ms,
  SUM(q.elapsed_time) / 1000000 AS total_elapsed_time_sec
FROM
  sys_query_history q
WHERE
  q.query_text LIKE '%your_lambda_udf_name%'
  AND q.start_time >= DATEADD(day, -7, GETDATE())
GROUP BY
  function_name
ORDER BY
  execution_count DESC;

Considerations

To maximize the benefits of Lambda UDFs, consider the following factors to optimize performance, provide reliability, secure data, and manage costs. If you have Python UDFs that don't use Python libraries, consider whether they're candidates for conversion to SQL UDFs.

The following are key performance considerations:

Batching – Amazon Redshift batches multiple rows into a single Lambda invocation to reduce call frequency, improving efficiency. Make sure the Lambda function handles batched inputs efficiently. For more details, see Accessing external components using Amazon Redshift Lambda UDFs.

Parallel invocations – Redshift cluster slices invoke Lambda functions in parallel, improving performance for large datasets. Design functions to support concurrent executions.

Cold starts – Lambda functions might experience cold start delays, particularly if they are used infrequently. Languages like Python or Node.js typically have faster startup times than Java, reducing latency.

Function optimization – Optimize Lambda code for fast execution, minimizing resource usage and latency. For example, avoid unnecessary computations or external API calls.
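One way to apply the batching and function-optimization advice to this workload is to memoize results for repeated input pairs, since many customers share first and last names. The following sketch is an optimization we are suggesting, not part of the original solution: `cached_distance` and `process_batch` are hypothetical names, and `maxsize` is an arbitrary choice. Because the cache lives at module level, it persists across warm invocations of the same execution environment, but a cold start begins with an empty cache.

```python
from functools import lru_cache


@lru_cache(maxsize=100_000)
def cached_distance(a: str, b: str) -> int:
    """Levenshtein distance, memoized for repeated (a, b) pairs."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            # deletion, insertion, substitution (a bool adds as 0 or 1)
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = curr
    return prev[-1]


def process_batch(rows):
    """Compute one result per row of a Redshift batch, passing NULLs through."""
    return [
        None if a is None or b is None else cached_distance(a, b)
        for a, b in rows
    ]
```

A handler can call `process_batch(event['arguments'])` to build its results array; `cached_distance.cache_info()` reports hits and misses if you want to log how effective the cache is.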

Consider the following error handling techniques:

Robust Lambda logic – Implement comprehensive error handling in the Lambda function to manage exceptions gracefully. Return clear error messages in the JSON response, as specified in the Amazon Redshift-Lambda interface. For more details, see Scalar Lambda UDFs.

Error propagation – Lambda errors can cause Redshift query failures. Monitor SYS_QUERY_HISTORY for query-level issues and CloudWatch Logs for detailed Lambda errors.

JSON interface – The Lambda function must return a JSON object with success, error_msg, num_records, and results fields. Use proper formatting to avoid query disruptions.
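The following sketch wraps per-row logic in a handler that always returns the JSON shape described above, setting success to false and filling error_msg when anything raises instead of letting the invocation crash. `make_handler` is a hypothetical helper, and the length-difference row function is only a placeholder for your own UDF logic, such as the distance function shown earlier. Check the Scalar Lambda UDFs documentation for the exact field requirements.

```python
import json


def make_handler(row_fn):
    """Wrap a per-row function as a Redshift Lambda UDF handler."""

    def lambda_handler(event, context):
        try:
            rows = event["arguments"]
            # Pass SQL NULLs through as JSON null instead of calling row_fn.
            results = [None if None in row else row_fn(*row) for row in rows]
            return json.dumps({"success": True,
                               "num_records": len(results),
                               "results": results})
        except Exception as exc:  # report the failure to Redshift in the response body
            return json.dumps({"success": False,
                               "error_msg": f"{type(exc).__name__}: {exc}"})

    return lambda_handler


# Placeholder wiring: replace the lambda with your real per-row function.
lambda_handler = make_handler(lambda a, b: abs(len(a) - len(b)))
```

Returning success false fails the calling query with the error message, which then shows up in SYS_QUERY_HISTORY, while the exception type in error_msg helps you find the matching entry in CloudWatch Logs.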

Clean up

Complete the following steps to clean up your resources:

Delete the Redshift provisioned cluster or serverless endpoint.

Delete the Lambda function.

Delete the IAM roles you created.

Conclusion

Lambda UDFs unlock a new level of flexibility, performance, and maintainability for extending Amazon Redshift. By decoupling custom logic from the warehouse engine, teams can scale independently, adopt modern runtimes, and integrate external systems.

If you're currently using Python UDFs in Amazon Redshift, it's time to explore the benefits of migrating to Lambda UDFs. With the generative AI capabilities of Amazon Q Developer, you can automate much of this transformation and accelerate your modernization journey. To learn more, refer to the Lambda UDF examples GitHub repo and Data Tokenization with Amazon Redshift and Protegrity.

About the authors

Raks Khare is a Senior Analytics Specialist Solutions Architect at AWS based out of Pennsylvania. He helps customers across varying industries and regions architect data analytics solutions at scale on the AWS platform. Outside of work, he likes exploring new travel and food destinations and spending quality time with his family.


Ritesh Kumar Sinha is an Analytics Specialist Solutions Architect based out of San Francisco. He has helped customers build scalable data warehousing and big data solutions for over 16 years. He loves to design and build efficient end-to-end solutions on AWS. In his spare time, he loves reading, walking, and doing yoga.


Yanzhu Ji is a Product Manager on the Amazon Redshift team. She has experience in product vision and strategy in industry-leading data products and platforms. She has outstanding skill in building substantial software products using web development, system design, database, and distributed programming techniques. In her personal life, Yanzhu likes painting, photography, and playing tennis.


Harshida Patel is an Analytics Specialist Principal Solutions Architect with AWS.
