
Everywhere you look, people are talking about AI agents like they’re just a prompt away from replacing entire departments. The dream is seductive: Autonomous systems that can handle anything you throw at them, no guardrails, no constraints, just give them your AWS credentials and they’ll solve all your problems. But the reality is that’s just not how the world works, especially not in the enterprise, where reliability isn’t optional.

Even if an agent is 99% accurate, that’s not always good enough. If it’s optimizing food delivery routes, that means one out of every hundred orders ends up at the wrong address. In a business context, that kind of failure rate isn’t acceptable. It’s expensive, risky and hard to explain to a customer or regulator.
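To put a number on that, here is a small back-of-the-envelope sketch. The daily order volume is a made-up figure for illustration, not data from any real delivery service.

```python
def expected_failures(orders: int, accuracy: float) -> float:
    """Expected number of failed orders at a given per-order accuracy."""
    return orders * (1.0 - accuracy)


# Hypothetical volume, chosen only to make the arithmetic concrete.
daily_orders = 10_000
wrong_addresses = round(expected_failures(daily_orders, 0.99))
print(f"{wrong_addresses} of {daily_orders} orders go to the wrong address")
```

At that assumed volume, a 1% failure rate is not a rounding error; it is a daily queue of customers to apologize to.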

In real-world environments like finance, healthcare and operations, the AI systems that actually deliver value don’t look anything like these frontier fantasies. They aren’t improvising in the open world; they’re solving well-defined problems with clear inputs and predictable outcomes.

If we keep chasing open-world problems with half-ready technology, we’ll burn time, money and trust. But if we focus on the problems right in front of us, the ones with clear ROI and clear boundaries, we can make AI work today.

This article is about cutting through the hype and building AI agents that actually ship, run and help.

The problem with the open world hype

The tech industry loves a moonshot (and for the record, I do too). Right now, the moonshot is open-world AI: agents that can handle anything, adapt to new situations, learn on the fly and operate with incomplete or ambiguous information. It’s the dream of general intelligence: Systems that can not only reason, but improvise.

What makes a problem “open world”?

Open-world problems are defined by what we don’t know.

More formally, drawing from research defining these complex environments, a fully open world is characterized by two core properties:

Time and space are unbounded: An agent’s past experiences may not apply to new, unseen scenarios.

Tasks are unbounded: They aren’t predetermined and can emerge dynamically.

In such environments, the AI operates with incomplete information; it cannot assume that what isn’t known to be true is false, it’s simply unknown. The AI is expected to adapt to these unforeseen changes and novel tasks as it navigates the world. This presents an incredibly difficult set of problems for current AI capabilities.

Most enterprise problems aren’t like this

In contrast, closed-world problems are ones where the scope is known, the rules are clear and the system can assume it has all the relevant data. If something isn’t explicitly true, it can be treated as false. These are the kinds of problems most businesses actually face every day: invoice matching, contract validation, fraud detection, claims processing, inventory forecasting.

| Feature | Open world | Closed world |
| --- | --- | --- |
| Scope | Unbounded | Well-defined |
| Knowledge | Incomplete | Complete (within domain) |
| Assumptions | Unknown ≠ false | Unknown = false |
| Tasks | Emergent, not predefined | Fixed, repetitive |
| Testability | Extremely hard | Well-bounded |
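The difference in assumptions is easy to see in code. This is a minimal sketch with a made-up fact base: the purchase order IDs and both function names are hypothetical, chosen only to show how the same lookup answers differently under each assumption.

```python
from enum import Enum


class Truth(Enum):
    TRUE = "true"
    FALSE = "false"
    UNKNOWN = "unknown"


# Hypothetical fact base: purchase orders known to be approved or rejected.
APPROVED_POS = {"PO-1001", "PO-1002"}
REJECTED_POS = {"PO-2001"}


def closed_world_is_approved(po_id: str) -> Truth:
    """Closed world: anything not recorded as approved is treated as false."""
    return Truth.TRUE if po_id in APPROVED_POS else Truth.FALSE


def open_world_is_approved(po_id: str) -> Truth:
    """Open world: the absence of a fact means unknown, not false."""
    if po_id in APPROVED_POS:
        return Truth.TRUE
    if po_id in REJECTED_POS:
        return Truth.FALSE
    return Truth.UNKNOWN
```

For an ID that appears in neither set, the closed-world check gives a definite answer the rest of the system can act on, while the open-world check leaves a third state that every downstream step has to handle.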

These aren’t the use cases that typically make headlines, but they’re the ones businesses actually care about solving.

The risk of hype and inaction

However, the hype is harmful: By setting the bar at open-world general intelligence, we make enterprise AI feel inaccessible. Leaders hear about agents that can do everything, and they freeze, because they don’t know where to start. The problem feels too big, too vague, too risky.

It’s like trying to design autonomous vehicles before we’ve even built a working combustion engine. The dream is exciting, but skipping the fundamentals guarantees failure.

Solve what’s right in front of you

Open-world problems make for great demos and even better funding rounds. But closed-world problems are where the real value is today. They’re solvable, testable and automatable. And they’re sitting inside every enterprise, just waiting for the right system to tackle them.

The question isn’t whether AI will solve open-world problems eventually. The question is: What can you actually deploy right now that makes your business faster, smarter and more reliable?

What enterprise agents actually look like

When people imagine AI agents today, they tend to picture a chat window. A user types a prompt, and the agent responds with a helpful answer (maybe even triggers a tool or two). That’s fine for demos and consumer apps, but it’s not how enterprise AI will actually work in practice.

In the enterprise, most useful agents aren’t user-initiated, they’re autonomous.

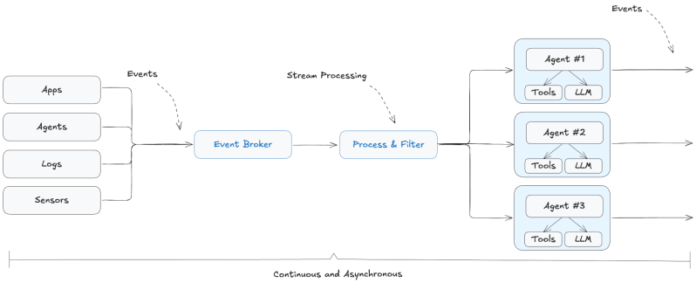

They don’t sit idly waiting for a human to prompt them. They’re long-running processes that react to data as it flows through the business. They make decisions, call services and produce outputs, continuously and asynchronously, without needing to be told when to start.

Imagine an agent that monitors new invoices. Every time an invoice lands, it extracts the relevant fields, checks them against open purchase orders, flags mismatches and routes the invoice for either approval or rejection, without anyone asking it to do so. It just listens for the event (“new invoice received”) and goes to work.
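As a rough sketch of that invoice flow, the handler below assumes the fields have already been extracted into an `Invoice` record. The class names, field names and routing labels are all hypothetical, not taken from any particular system.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    po_number: str
    vendor: str
    amount: Decimal


@dataclass(frozen=True)
class PurchaseOrder:
    po_number: str
    vendor: str
    amount: Decimal


def find_mismatches(invoice: Invoice, open_pos: dict) -> list:
    """Compare an invoice against the open purchase order it references."""
    po = open_pos.get(invoice.po_number)
    if po is None:
        return ["no open purchase order"]
    mismatches = []
    if po.vendor != invoice.vendor:
        mismatches.append("vendor")
    if po.amount != invoice.amount:
        mismatches.append("amount")
    return mismatches


def on_invoice_received(invoice: Invoice, open_pos: dict) -> dict:
    """Handler for a hypothetical 'new invoice received' event."""
    mismatches = find_mismatches(invoice, open_pos)
    route = "rejection" if mismatches else "approval"
    return {"invoice_id": invoice.invoice_id, "route": route, "mismatches": mismatches}
```

Nothing here waits for a prompt. Whatever delivers the event calls `on_invoice_received`, and the result is a routing decision that another part of the system can pick up.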

Or think about customer onboarding. An agent might watch for the moment a new account is created, then kick off a cascade: verify documents, run know-your-customer (KYC) checks, personalize the welcome experience and schedule a follow-up message. The user never knows the agent exists. It just runs. Reliably. In real time.

This is what enterprise agents look like:

They’re event-driven: Triggered by changes in the system, not user prompts.

They’re autonomous: They act without human initiation.

They’re continuous: They don’t spin up for a single task and disappear.

They’re mostly asynchronous: They work in the background, not in blocking workflows.

Agents are microservices that react to and emit events, carry context, use models

You don’t build these agents by fine-tuning a giant model. You build them by wiring together existing models, tools and logic. It’s a software engineering problem, not a modeling one.

At their core, enterprise agents are just modern microservices with intelligence. You give them access to events, give them the right context and let a language model drive the reasoning.

Agent = Event-driven microservice + context data + LLM

Done well, that’s a powerful architectural pattern. It’s also a shift in mindset. Building agents isn’t about chasing artificial general intelligence (AGI). It’s about decomposing real problems into smaller steps, then assembling specialized, reliable components that can handle them, just like we’ve always done in good software systems.
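One way to read that formula in code is sketched below. The `Event` and `Agent` classes are illustrative names rather than any framework’s API, and the language model is passed in as a plain callable so that any client (or a stub) can stand in for it.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Event:
    type: str
    payload: dict


# Any callable that maps a prompt to text; a stand-in for a real model client.
LLM = Callable[[str], str]


class Agent:
    """Event-driven microservice + context data + LLM, in one small class."""

    def __init__(self, listens_for: str, emits: str, context: dict, llm: LLM):
        self.listens_for = listens_for
        self.emits = emits
        self.context = context
        self.llm = llm

    def handle(self, event: Event) -> Optional[Event]:
        # Ignore events this agent is not responsible for.
        if event.type != self.listens_for:
            return None
        prompt = f"Context: {self.context}\nEvent: {event.payload}"
        return Event(self.emits, {"result": self.llm(prompt), "source": event.payload})


def canned_llm(prompt: str) -> str:
    return "welcome-standard"  # fixed reply, used here instead of a real model


onboarding = Agent("account.created", "welcome.personalized",
                   {"tiers": ["standard", "premium"]}, canned_llm)
reply = onboarding.handle(Event("account.created", {"customer_id": "C-1"}))
```

The agent knows which event it reacts to and which one it emits, and everything else is ordinary service code. Swapping the model means changing one constructor argument.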

We’ve solved this kind of problem before

If this sounds familiar, it should. We’ve been here before.

When monoliths couldn’t scale, we broke them into microservices. When synchronous APIs led to bottlenecks and brittle systems, we turned to event-driven architecture. These were hard-won lessons from decades of building real-world systems. They worked because they brought structure and determinism to complex systems.

I worry that we’re starting to forget that history and repeat the same mistakes in how we build AI.

Because this isn’t a new problem. It’s the same engineering challenge, just with new components. And right now, enterprise AI needs the same principles that got us here: clear boundaries, loose coupling and systems designed to be reliable from the start.

AI models are not deterministic, but your systems can be

The problems worth solving in most businesses are closed-world: Problems with known inputs, clear rules and measurable outcomes. But the models we’re using, especially LLMs, are inherently non-deterministic. They’re probabilistic by design. The same input can yield different outputs depending on context, sampling or temperature.

That’s fine when you’re answering a prompt. But when you’re running a business process? That unpredictability is a liability.

So if you want to build production-grade AI systems, your job is simple: Wrap non-deterministic models in deterministic infrastructure.

Build determinism around the model

If you know a particular tool should be used for a task, don’t let the model decide, just call the tool.

If your workflow can be defined statically, don’t rely on dynamic decision-making, use a deterministic call graph.

If the inputs and outputs are predictable, don’t introduce ambiguity by overcomplicating the agent logic.

Too many teams are reinventing runtime orchestration with every agent, letting the LLM decide what to do next, even when the steps are known ahead of time. You’re just making your life harder.
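Here is a minimal sketch of what that can look like for a claims workflow. The categories, queue names and function names are assumptions made up for the example. The point is the shape: the order of steps is fixed in code, and the model is consulted for exactly one judgment whose output is checked against a known set.

```python
from typing import Callable

# Hypothetical claim categories and the queues they map to.
ALLOWED_CATEGORIES = ("auto", "home", "health")
QUEUES = {"auto": "auto-team", "home": "property-team", "health": "health-team"}


def validate(claim: dict) -> dict:
    """Plain rule check; no model involved."""
    if not claim.get("policy_id"):
        raise ValueError("claim is missing policy_id")
    return claim


def classify_claim(text: str, llm: Callable[[str], str]) -> str:
    """The only step that uses the model; anything off-list goes to a human."""
    answer = llm(f"Classify this claim as one of {ALLOWED_CATEGORIES}: {text}")
    answer = answer.strip().lower()
    return answer if answer in ALLOWED_CATEGORIES else "manual_review"


def route(category: str) -> str:
    """Fixed lookup: routing is a known rule, so it stays a table."""
    return QUEUES.get(category, "human-queue")


def process_claim(claim: dict, llm: Callable[[str], str]) -> dict:
    """Static call graph: validate, then classify, then route, always in that order."""
    claim = validate(claim)
    category = classify_claim(claim["description"], llm)
    return {"policy_id": claim["policy_id"], "category": category, "queue": route(category)}
```

If the model returns something unexpected, the damage is contained to one field and the claim lands in a human queue. The model never gets to choose which step runs next.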

Where event-driven multi-agent systems shine

Event-driven multi-agent systems break the problem into smaller steps. When you assign each one to a purpose-built agent and trigger them with structured events, you end up with a loosely coupled, fully traceable system that works the way enterprise systems are supposed to work: With reliability, accountability and clear control.

And because it’s event-driven:

Agents don’t need to know about each other. They just respond to events.

Work can happen in parallel, speeding up complex flows.

Failures are isolated and recoverable via event logs or retries.

You can observe, debug and test each component in isolation.
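The properties above can be sketched with a toy in-memory event bus. This is an illustration, not a production design: a real deployment would sit on a durable event streaming platform and run handlers in parallel, whereas this version is single-threaded, and the `EventBus` class and event names are invented for the example.

```python
from collections import defaultdict, deque


class EventBus:
    """Toy bus: handlers subscribe to event types and return events to emit."""

    def __init__(self, max_retries: int = 2):
        self.handlers = defaultdict(list)
        self.log = []           # append-only record of every event, for tracing
        self.dead_letters = []  # events whose handler failed on every attempt
        self.max_retries = max_retries

    def subscribe(self, event_type, handler):
        self.handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        queue = deque([(event_type, payload)])
        while queue:
            etype, data = queue.popleft()
            self.log.append((etype, data))
            for handler in self.handlers[etype]:
                queue.extend(self._run(handler, etype, data))

    def _run(self, handler, etype, data):
        # One initial attempt plus up to max_retries retries.
        for _ in range(self.max_retries + 1):
            try:
                return handler(data)
            except Exception:
                continue
        self.dead_letters.append((etype, data))
        return []


def extract(invoice):
    # Stand-in for field extraction; the amount is hard-coded for the example.
    return [("invoice.extracted", {**invoice, "amount": 100})]


def approve_or_flag(invoice):
    outcome = "invoice.approved" if invoice["amount"] <= 500 else "invoice.flagged"
    return [(outcome, invoice)]


bus = EventBus()
bus.subscribe("invoice.received", extract)
bus.subscribe("invoice.extracted", approve_or_flag)
bus.publish("invoice.received", {"id": "INV-1"})
trace = [etype for etype, _ in bus.log]
```

Neither handler references the other; they only agree on event names. The log gives a full trace of what happened, and a handler that keeps failing ends up in `dead_letters` instead of taking the rest of the flow down with it.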

Don’t chase magic

Closed-world problems don’t require magic. They need solid engineering. And that means combining the flexibility of LLMs with the structure of good software engineering. If something can be made deterministic, make it deterministic. Save the model for the parts that actually require judgment.

That’s how you build agents that don’t just look good in demos but actually run, scale and deliver in production.

Why testing is so much harder in an open world

One of the most overlooked challenges in building agents is testing, but it’s absolutely essential for the enterprise.

In an open-world context, it’s nearly impossible to do well. The problem space is unbounded so the inputs can be anything, the desired outputs are often ambiguous and even the criteria for success might shift depending on context.

How do you write a test suite for a system that can be asked to do almost anything? You can’t.

That’s why open-world agents are so hard to validate in practice. You can measure isolated behaviors or benchmark narrow tasks, but you can’t trust the system end-to-end unless you’ve somehow seen it perform across a combinatorially large space of situations, which no one has.

In contrast, closed-world problems make testing tractable. The inputs are constrained. The expected outputs are definable. You can write assertions. You can simulate edge cases. You can know what “correct” looks like.

And if you go one step further, decomposing your agent’s logic into smaller, well-scoped components using an event-driven architecture, it gets even more tractable. Each agent in the system has a narrow responsibility. Its behavior can be tested independently, its inputs and outputs mocked or replayed, and its performance evaluated in isolation.

When the system is modular, and the scope of each module is closed-world, you can build test sets that actually give you confidence.
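A small sketch of what such a test set might look like follows. The ticket-triage agent, the recorded cases and the `run_replay` helper are all hypothetical; the model is replaced by a stub that returns a recorded reply, so the test exercises only the agent’s own logic.

```python
def triage_agent(event: dict, llm) -> dict:
    """Hypothetical agent with one narrow job: label a ticket's urgency."""
    label = llm(event["text"]).strip().lower()
    if label not in {"low", "high"}:
        label = "needs_review"  # contain unexpected model output
    return {"ticket_id": event["ticket_id"], "urgency": label}


# Recorded (event, model reply, expected urgency) triples, standing in for
# cases replayed from an event log.
REPLAYED_CASES = [
    ({"ticket_id": "T-1", "text": "site is down"}, "HIGH", "high"),
    ({"ticket_id": "T-2", "text": "typo on pricing page"}, "low", "low"),
    ({"ticket_id": "T-3", "text": "???"}, "maybe", "needs_review"),
]


def run_replay(agent, cases) -> list:
    """Replay each case with a stubbed model; return the IDs that failed."""
    failures = []
    for event, model_reply, expected in cases:
        result = agent(event, llm=lambda _text, reply=model_reply: reply)
        if result["urgency"] != expected:
            failures.append(event["ticket_id"])
    return failures


failed = run_replay(triage_agent, REPLAYED_CASES)
print("failed cases:", failed)
```

The same cases could be wrapped in whatever test runner a team already uses. What matters is that the inputs are enumerable and “correct” is written down next to each one.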

This is the foundation for trust in production AI.

Building the right foundation

The future of AI in the enterprise doesn’t start with AGI. It starts with automation that works. That means focusing on closed-world problems that are structured, bounded and rich with opportunity for real impact.

You don’t need an agent that can do everything. You need a system that can reliably do something:

A claim routed correctly.

A document parsed accurately.

A customer followed up with on time.

These wins add up. They reduce costs, free up time and build trust in AI as a dependable part of the stack.

And getting there doesn’t require breakthroughs in prompt engineering or betting on the next model to magically generalize. It requires doing what good engineers have always done: Breaking problems down, building composable systems and wiring components together in ways that are testable and observable.

Event-driven multi-agent systems aren’t a silver bullet, they’re just a practical architecture for working with imperfect tools in a structured way. They let you isolate where intelligence is needed, contain where it’s not and build systems that behave predictably even when individual parts don’t.

This isn’t about chasing the frontier. It’s about applying basic software engineering to a new class of problems.

Sean Falconer is Confluent’s AI entrepreneur in residence.
