
The rise of Deep Research features and other AI-powered analysis has spawned more models and services aiming to simplify that process and read more of the documents businesses actually use.

Canadian AI company Cohere is banking on its models, including a newly released visual model, to make the case that Deep Research features should also be optimized for enterprise use cases.

The company has released Command A Vision, a visual model specifically targeting enterprise use cases, built on top of its Command A model. The 112 billion parameter model can “unlock valuable insights from visual data, and make highly accurate, data-driven decisions through document optical character recognition (OCR) and image analysis,” the company says.

“Whether it’s interpreting product manuals with complex diagrams or analyzing photographs of real-world scenes for risk detection, Command A Vision excels at tackling the most demanding enterprise vision challenges,” the company said in a blog post.


This means Command A Vision can read and analyze the most common types of images enterprises need: graphs, charts, diagrams, scanned documents and PDFs.

@cohere just dropped Command A Vision on @huggingface

Designed for enterprise multimodal use cases: interpreting product manuals, analyzing photos, asking about charts… ❓

A 112B dense vision-language model with SOTA performance – check out the benchmark metrics in… pic.twitter.com/ORMfM5f8cF

– Jeff Boudier (@jeffboudier) July 31, 2025

Because it’s built on Command A’s architecture, Command A Vision requires two or fewer GPUs, just like the text model. The vision model also retains Command A’s text capabilities, so it can read words in images and understands at least 23 languages. Cohere said that, unlike other models, Command A Vision reduces the total cost of ownership for enterprises and is fully optimized for business retrieval use cases.
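Whether a model of this size fits on two GPUs depends largely on numeric precision. The rough sketch below counts only the memory needed to hold 112 billion parameters at a few precisions. The 80 GB per-GPU budget and the precisions tried are assumptions, not figures from Cohere, and a real deployment also needs headroom for the KV cache and activations.

```python
# Assumptions (not from Cohere): weights only, no KV cache or activations,
# and a hypothetical 80 GB of usable memory per GPU.
PARAMS = 112_000_000_000   # parameter count cited for Command A Vision
GPU_BYTES = 80 * 10**9     # hypothetical per-GPU memory budget


def weight_bytes(params: int, bits_per_param: int) -> int:
    """Bytes needed to store the weights at a given numeric precision."""
    return params * bits_per_param // 8


def gpus_needed(params: int, bits_per_param: int, gpu_bytes: int = GPU_BYTES) -> int:
    """Minimum number of GPUs whose combined memory holds the weights (ceiling division)."""
    return -(-weight_bytes(params, bits_per_param) // gpu_bytes)


for label, bits in [("bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    gb = weight_bytes(PARAMS, bits) / 10**9
    print(f"{label}: {gb:.0f} GB of weights -> {gpus_needed(PARAMS, bits)} GPU(s)")
```

Under these assumptions, 16-bit weights (224 GB) would need three GPUs, 8-bit weights two, and 4-bit weights one; the article does not say which precision Cohere's GPU figure assumes.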

How Cohere is architecting Command A

Cohere said it followed a LLaVA architecture to build its Command A models, including the visual model. This architecture turns visual features into soft vision tokens, which can be divided into different tiles.

These tiles are passed into the Command A text tower, “a dense, 111B parameters textual LLM,” the company said. “In this way, a single image consumes up to 3,328 tokens.”
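The 3,328-token cap factors as 13 × 256, which is consistent with a common LLaVA-style tiling scheme: up to 12 local tiles plus one downscaled thumbnail, each encoded into 256 soft tokens. The sketch below estimates per-image token cost under that assumption. `TILE_PX`, `MAX_TILES` and `TOKENS_PER_TILE` are hypothetical values chosen to reproduce the cap, not confirmed Cohere settings.

```python
import math

# Hypothetical parameters chosen so the cap matches Cohere's figure of
# 3,328 tokens per image (13 x 256); Cohere's actual values may differ.
TILE_PX = 512          # assumed tile edge length in pixels
MAX_TILES = 12         # assumed cap on local tiles per image
TOKENS_PER_TILE = 256  # assumed soft vision tokens emitted per tile


def count_tiles(width: int, height: int) -> int:
    """Local tiles needed to cover the image, capped at MAX_TILES.

    Images that would need more tiles are assumed to be downscaled
    until they fit within the cap.
    """
    grid = math.ceil(width / TILE_PX) * math.ceil(height / TILE_PX)
    return min(grid, MAX_TILES)


def vision_tokens(width: int, height: int) -> int:
    """Soft vision tokens one image contributes to the text tower's context."""
    tiles = count_tiles(width, height)
    if tiles > 1:
        tiles += 1  # assumed global thumbnail added to multi-tile images
    return tiles * TOKENS_PER_TILE


print(vision_tokens(400, 300))    # 256: one tile, no thumbnail
print(vision_tokens(1024, 768))   # 1280: 2 x 2 grid plus thumbnail
print(vision_tokens(4000, 3000))  # 3328: capped at 12 tiles plus thumbnail
```

The practical takeaway is that large, detailed pages such as dense charts or scanned forms hit the per-image ceiling, so a multi-page document can consume tens of thousands of context tokens before any text is added.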

Cohere said it trained the visual model in three stages: vision-language alignment, supervised fine-tuning (SFT) and post-training reinforcement learning with human feedback (RLHF).

“This approach enables the mapping of image encoder features to the language model embedding space,” the company said of the alignment stage. “In contrast, during the SFT stage, we simultaneously trained the vision encoder, the vision adapter and the language model on a diverse set of instruction-following multimodal tasks.”
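One way to picture the difference between the first two stages is as a schedule of which components receive weight updates. In this hypothetical sketch, the SFT stage trains all three components, as Cohere describes. Treating the alignment stage as adapter-only is an assumption drawn from the usual LLaVA recipe, and RLHF is left out because the article does not say which components it updates.

```python
from dataclasses import dataclass

COMPONENTS = ("vision_encoder", "vision_adapter", "language_model")


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: frozenset  # components whose weights are updated in this stage


# SFT entry follows Cohere's description. The alignment entry is an
# assumption (typical LLaVA practice: train only the adapter that maps
# encoder features into the LLM embedding space). RLHF is omitted.
SCHEDULE = [
    Stage("vision_language_alignment", frozenset({"vision_adapter"})),
    Stage("supervised_fine_tuning", frozenset(COMPONENTS)),
]


def frozen_components(stage: Stage) -> list:
    """Components held fixed during a stage, in a stable order."""
    return [c for c in COMPONENTS if c not in stage.trainable]


for stage in SCHEDULE:
    print(stage.name, "freezes:", frozen_components(stage) or "nothing")
```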

Visualizing enterprise AI

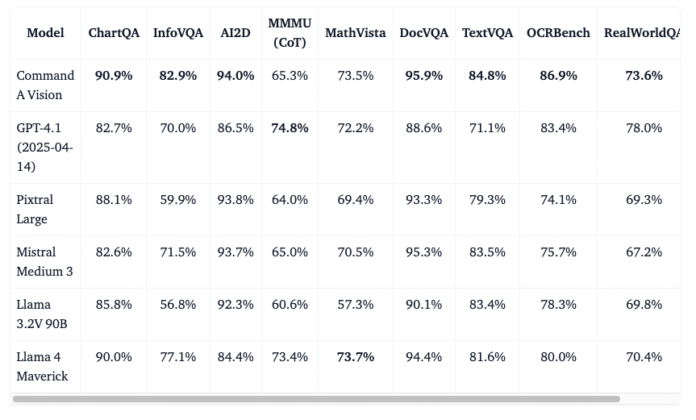

Benchmark tests showed Command A Vision outperforming other models with similar visual capabilities.

Cohere pitted Command A Vision against OpenAI’s GPT-4.1, Meta’s Llama 4 Maverick, Mistral’s Pixtral Large and Mistral Medium 3 in nine benchmark tests. The company did not say whether it tested the model against Mistral’s OCR-focused API, Mistral OCR.

It enables agents to securely see inside your organization’s visual data, unlocking the automation of tedious tasks involving slides, diagrams, PDFs, and photos. pic.twitter.com/iHZnUWekrk

– cohere (@cohere) July 31, 2025

Command A Vision outscored the other models on tests such as ChartQA, OCRBench, AI2D and TextVQA. Overall, Command A Vision had an average score of 83.1%, compared with 78.6% for GPT-4.1, 80.5% for Llama 4 Maverick and 78.3% for Mistral Medium 3.
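Put as margins, the reported averages work out as follows. The scores are the ones quoted above; Pixtral Large is omitted because its average is not given, and the `margins` helper is purely illustrative.

```python
# Average benchmark scores (%) as quoted in the article.
AVERAGES = {
    "Command A Vision": 83.1,
    "Llama 4 Maverick": 80.5,
    "GPT-4.1": 78.6,
    "Mistral Medium 3": 78.3,
}


def margins(scores: dict) -> dict:
    """Percentage-point gap between the top scorer and every other model."""
    leader = max(scores, key=scores.get)
    return {
        model: round(scores[leader] - score, 1)
        for model, score in scores.items()
        if model != leader
    }


leader = max(AVERAGES, key=AVERAGES.get)
for model, gap in margins(AVERAGES).items():
    print(f"{leader} leads {model} by {gap} points")
```

By these figures the lead is 2.6 points over Llama 4 Maverick, 4.5 over GPT-4.1 and 4.8 over Mistral Medium 3.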

Most large language models (LLMs) these days are multimodal, meaning they can generate or understand visual media like photos or videos. However, enterprises generally work with more graphical documents such as charts and PDFs, and extracting information from these unstructured data sources often proves difficult.

With Deep Research on the rise, models capable of reading, analyzing and even downloading unstructured data have become more important.

Cohere also said it’s offering Command A Vision as an open weights model, in hopes that enterprises looking to move away from closed or proprietary models will start using its products. So far, it has drawn some interest from developers.

Very impressed at its accuracy extracting handwritten notes from a picture!

– Adam Sardo (@sardo_adam) July 31, 2025

Finally, an AI that won’t judge my terrible doodles.

– Martha Wisener (@MartWisener) August 1, 2025
