In this blog, we dive into Agent Learning from Human Feedback (ALHF), a new machine learning paradigm where agents learn directly from minimal natural language feedback, not just numeric rewards or static labels. This unlocks faster, more intuitive agent adaptation for enterprise applications, where expectations are often specialized and hard to formalize.

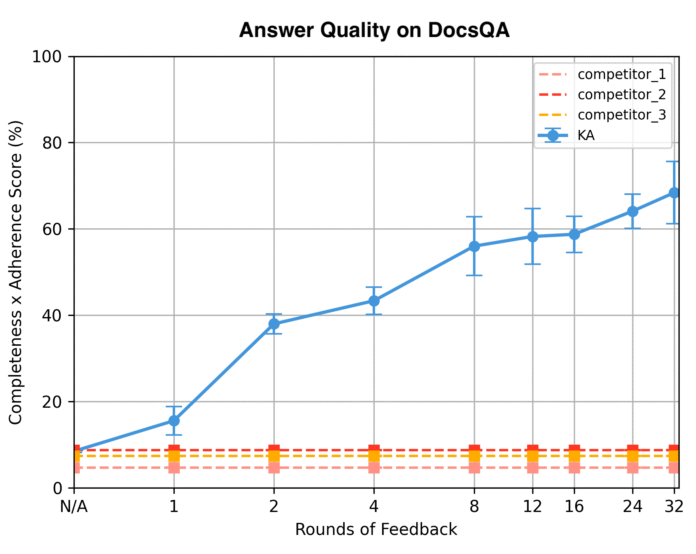

ALHF powers the Databricks Agent Bricks product. In our case study, we look at Agent Bricks Knowledge Assistant (KA), which continually improves its responses through expert feedback. As shown in Figure 1, ALHF dramatically boosts overall answer quality on Databricks DocsQA with as few as 4 feedback records. With just 32 feedback records, we more than quadruple the answer quality over the static baselines. Our case study demonstrates the efficacy of ALHF and opens up a compelling new direction for agent research.

Figure 1. KA improves its response quality (as measured by Answer Completeness and Feedback Adherence) with increasing amounts of feedback. See the “ALHF in Agent Bricks” section for more details.

The Promise of Teachable AI Agents

In working with Databricks' enterprise customers, a key challenge we've seen is that many enterprise AI use cases depend on highly specialized internal business logic, proprietary data, and intrinsic expectations that are not known externally (see our Domain Intelligence Benchmark to learn more). Therefore, even the most advanced systems still need substantial tuning to meet the quality threshold of enterprise use cases.

To tune these systems, existing approaches rely either on explicit ground truth outputs, which are expensive to collect, or on reward models, which only give binary or scalar signals. To address these challenges, we describe Agent Learning from Human Feedback (ALHF), a learning paradigm where an agent adapts its behavior by incorporating a small amount of natural language feedback from experts. This paradigm offers a natural, cost-effective channel for human interaction and enables the system to learn from rich expectation signals.

Example

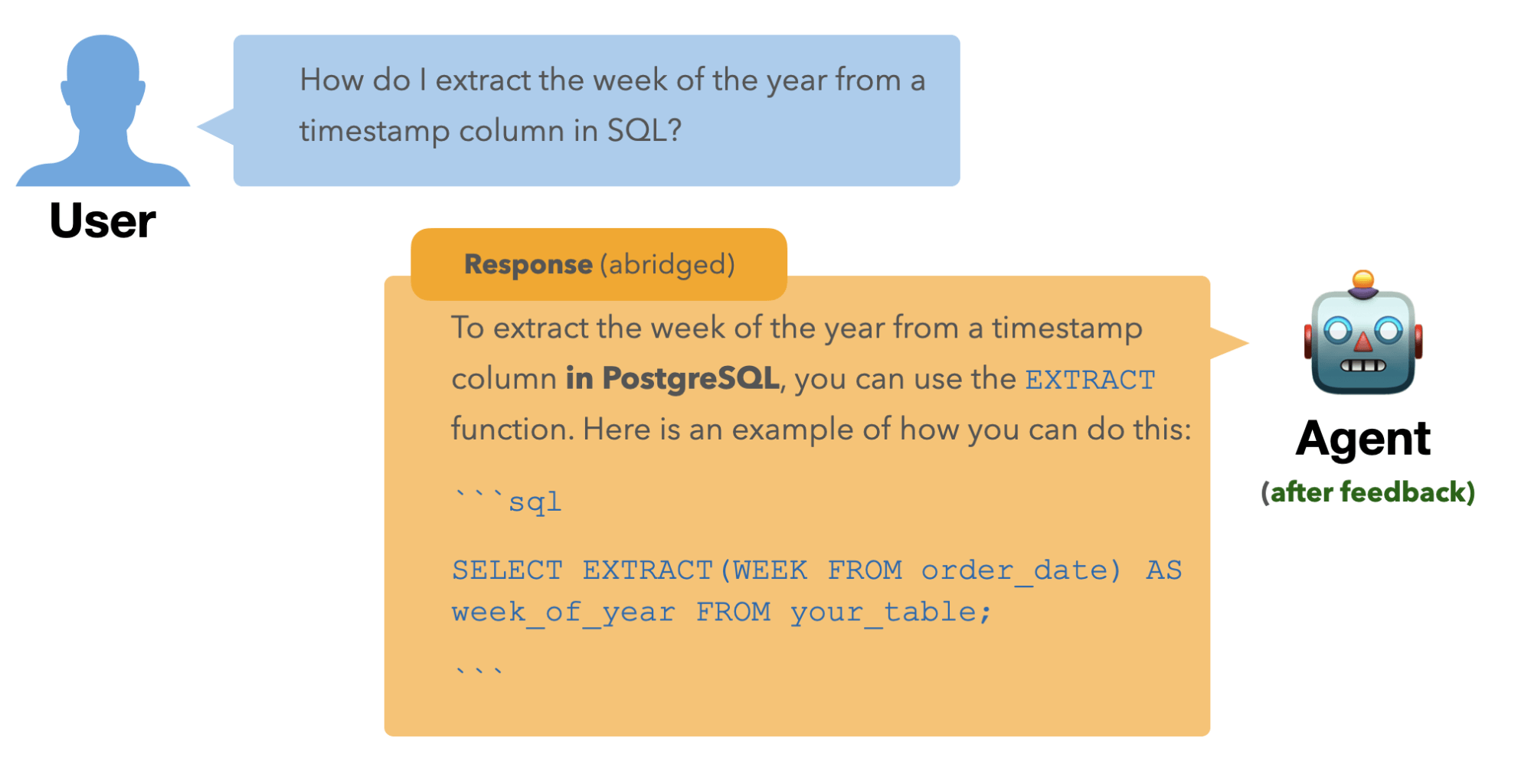

Let’s say we create a Question Answering (QA) agent to answer questions for a hosted database company. Here’s an example question:

The agent suggested using the function weekofyear(), which is supported in several flavors of SQL (MySQL, MariaDB, etc.). This answer is correct in that, when used properly, weekofyear() does achieve the desired functionality. However, it is not supported in PostgreSQL, the SQL flavor preferred by our user group. Our Subject Matter Expert (SME) can give natural language feedback on the response to communicate this expectation, as above, and the agent will adapt accordingly:

ALHF adapts the system's responses not only for this single question, but also for questions in future conversations where the feedback is relevant, for example:

As this example shows, ALHF gives developers and SMEs a frictionless and intuitive way to steer an agent’s behavior using natural language, aligning it with their expectations.

ALHF in Agent Bricks

We’ll use one specific use case of the Agent Bricks product, Knowledge Assistant, as a case study to demonstrate the power of ALHF.

Knowledge Assistant (KA) provides a declarative way to create a chatbot over your documents, delivering high-quality, reliable responses with citations. KA uses ALHF to continuously learn expert expectations from natural language feedback and improve the quality of its responses.

KA first asks for high-level task instructions. Once it’s connected to the relevant knowledge sources, it begins answering questions. Experts can then use an Improve Quality mode to review responses and leave feedback, which KA incorporates through ALHF to refine future answers.

Evaluation

To demonstrate the value of ALHF in KA, we evaluate KA on DocsQA, a dataset of questions and reference answers about Databricks documentation that is part of our Domain Intelligence Benchmark. For this dataset, we also have a set of defined expert expectations. For a small set of candidate responses generated by KA, we write a piece of terse natural language feedback (as in the example above) based on these expectations and give that feedback to KA to refine its responses. We then measure response quality across multiple rounds of feedback to evaluate whether KA successfully adapts to meet expert expectations.
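The shape of this protocol can be sketched as a small harness. This is a minimal illustration under our own assumptions, not the evaluation code behind the reported results. `FeedbackRecord`, `ToyAgent`, `run_protocol`, and the scoring function are hypothetical names. The toy agent only memorizes feedback strings instead of running KA, and the budgets passed in are placeholders. What the sketch does capture is the structure: feedback comes from one pool of questions, and quality is measured on separate held-out questions after each feedback budget.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass
class FeedbackRecord:
    question: str
    response: str   # candidate response the expert reviewed
    feedback: str   # terse natural-language critique


@dataclass
class ToyAgent:
    """Hypothetical stand-in for KA that simply memorizes feedback strings."""
    memory: list[str] = field(default_factory=list)

    def learn(self, record: FeedbackRecord) -> None:
        self.memory.append(record.feedback)

    def answer(self, question: str) -> str:
        # Toy behavior: echo every remembered expectation after a base answer.
        return " ".join([f"Answer to: {question}"] + self.memory)


def run_protocol(
    feedback_pool: list[FeedbackRecord],
    test_questions: list[str],
    budgets: list[int],
    score: Callable[[str, str], float],
) -> dict[int, float]:
    """Mean held-out score after the agent sees the first k feedback records."""
    results: dict[int, float] = {}
    for k in budgets:
        agent = ToyAgent()  # fresh agent per budget, so budgets don't leak into each other
        for record in feedback_pool[:k]:
            agent.learn(record)
        results[k] = mean(score(q, agent.answer(q)) for q in test_questions)
    return results
```

Consider a pool holding one record whose feedback says answers should be PostgreSQL-compatible, and a scorer that returns 1.0 when "PostgreSQL" appears in the response. With those inputs, `run_protocol(pool, test_questions, [0, 1], score)` returns `{0: 0.0, 1: 1.0}`. Using a fresh agent per budget is one design choice. A sequential variant that keeps learning across rounds would also fit the description above.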

Note that while the reference answers reflect factual correctness (whether an answer contains relevant and accurate information to address the question), they are not necessarily ideal in terms of aligning with expert expectations. As illustrated in our earlier example, the initial response may be factually correct for many flavors of SQL, but still fall short if the expert expects a PostgreSQL-specific response.

Considering these two dimensions of correctness, we evaluate the quality of a response using two LLM judges:

- Answer Completeness: How well the response aligns with the reference response from the dataset. This serves as a baseline measure of factual correctness.
- Feedback Adherence: How well the response satisfies the specific expert expectations. This measures the agent’s ability to tailor its output based on custom criteria.
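One way to picture the two-judge setup is as two separate rubric prompts whose verdicts are averaged independently. The sketch below rests on several assumptions. The prompt wording, the YES/NO verdict format, and the names `judge`, `aggregate`, and `Scores` are hypothetical. `llm` is any callable that maps a prompt string to a reply string, because the post does not say which model or prompts the judges actually use.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# Hypothetical judge prompts; the real ones are not published in this post.
COMPLETENESS_PROMPT = (
    "Question: {question}\nReference answer: {reference}\nResponse: {response}\n"
    "Does the response contain the facts in the reference answer? Reply YES or NO."
)
ADHERENCE_PROMPT = (
    "Question: {question}\nExpert expectations: {expectations}\nResponse: {response}\n"
    "Does the response satisfy every expert expectation? Reply YES or NO."
)


@dataclass
class Scores:
    answer_completeness: float
    feedback_adherence: float


def judge(
    llm: Callable[[str], str],
    question: str,
    reference: str,
    expectations: list[str],
    response: str,
) -> Scores:
    """Ask two independent judge prompts and map YES/NO verdicts to 1.0/0.0."""
    def verdict(prompt: str) -> float:
        return 1.0 if llm(prompt).strip().upper().startswith("YES") else 0.0

    return Scores(
        answer_completeness=verdict(COMPLETENESS_PROMPT.format(
            question=question, reference=reference, response=response)),
        feedback_adherence=verdict(ADHERENCE_PROMPT.format(
            question=question, expectations="; ".join(expectations), response=response)),
    )


def aggregate(all_scores: list[Scores]) -> Scores:
    """Average each dimension separately, e.g. to report one percentage per judge."""
    return Scores(
        answer_completeness=mean(s.answer_completeness for s in all_scores),
        feedback_adherence=mean(s.feedback_adherence for s in all_scores),
    )
```

The dimensions stay separate because a response can score well on one and poorly on the other. The `weekofyear()` answer is an example: it would plausibly pass the completeness prompt and fail the PostgreSQL expectation.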

Results

Figure 2 shows how KA improves in quality with increasing rounds of expert feedback on DocsQA. We report results on a held-out test set.

- Answer Completeness: Without feedback, KA already produces high-quality responses comparable to leading competing systems. With up to 32 pieces of feedback, KA’s Answer Completeness improves by 12 percentage points, clearly outperforming competitors.
- Feedback Adherence: The distinction between Feedback Adherence and Answer Completeness is clear: all systems start with low adherence scores without feedback. But here’s where ALHF shines: with feedback, KA’s adherence score jumps from 11.7% to nearly 80%, showcasing the dramatic impact of ALHF.

Figure 2: In contrast to static baselines, KA improves its responses with increasing amounts of feedback in terms of both Answer Completeness and Feedback Adherence. Results are reported on unseen questions from the DocsQA dataset.

Overall, ALHF is an effective mechanism for refining and adapting a system’s behavior to meet specific expert expectations. In particular, it is highly sample-efficient: you don’t need hundreds or thousands of examples, and you can see clear gains with a small amount of feedback.

ALHF: the technical challenges

These impressive results are possible because KA successfully addresses two core technical challenges of ALHF.

Learning When to Apply Feedback

When an expert gives feedback on one question, how does the agent know which future questions should benefit from that same insight? This is the challenge of scoping: determining the right scope of applicability for each piece of feedback. Put another way, it is the problem of determining how relevant a piece of feedback is to a given question.

Consider our PostgreSQL example. When the expert says “the answer should be compatible with PostgreSQL”, this feedback shouldn’t just fix that one response. It should inform all future SQL-related questions. But it shouldn’t affect unrelated queries, like “Should I use matplotlib or seaborn for this chart?”

We adopt an agent memory approach that records all prior feedback and lets the agent efficiently retrieve relevant feedback for a new question. This enables the agent to dynamically and holistically determine which insights are most relevant to the current question.
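A minimal sketch of such a feedback memory follows. To keep it runnable without any model, it uses a deliberately simple relevance test: bag-of-words cosine similarity against the originating question, with a fixed threshold. KA's actual retrieval is not described at this level of detail. A production system would more plausibly use embeddings or an LLM relevance judgment. `FeedbackMemory`, `bag_of_words`, the stopword list, and the 0.3 threshold are all hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical stopword list, just large enough for the example questions.
STOPWORDS = {"a", "an", "the", "how", "do", "i", "in", "of", "to", "for", "or",
             "should", "use", "this", "get", "is", "be", "with", "what"}


def bag_of_words(text: str) -> Counter:
    """Lowercase, drop stopwords, and crudely strip a plural 's'."""
    tokens = [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]
    return Counter(t[:-1] if len(t) > 3 and t.endswith("s") else t for t in tokens)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class FeedbackMemory:
    threshold: float = 0.3  # below this similarity, feedback is treated as out of scope
    entries: list[tuple[Counter, str]] = field(default_factory=list)

    def add(self, question: str, feedback: str) -> None:
        # Record every piece of feedback, keyed by the question it was given on.
        self.entries.append((bag_of_words(question), feedback))

    def retrieve(self, question: str, top_k: int = 3) -> list[str]:
        query = bag_of_words(question)
        scored = [(cosine(query, key), fb) for key, fb in self.entries]
        relevant = [(s, fb) for s, fb in scored if s >= self.threshold]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [fb for _, fb in relevant[:top_k]]
```

Suppose the PostgreSQL feedback is stored against a week-number question. That question is a hypothetical stand-in, since the original example question is not reproduced here. The date-difference question then retrieves the feedback through the shared terms "date" and "sql". The matplotlib question shares no terms and retrieves nothing. In this sketch the threshold encodes scoping. Set it too low, and feedback leaks into unrelated questions. Set it too high, and feedback never generalizes.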

Adapting the Right System Components

The second challenge is assignment: figuring out which parts of the system need to change in response to feedback. KA isn’t a single model; it’s a multi-component pipeline that generates search queries, retrieves documents, and produces answers. Effective ALHF requires updating the right components in the right ways.

KA is designed with a set of LLM-powered components that are parameterized by feedback. Each component is a module that accepts relevant feedback and adapts its behavior accordingly. Take the example from earlier, where the SME gives the following feedback on the date extraction question:

Later, the user asks a related question: “How do I get the difference between two dates in SQL?”. Without receiving any new feedback, KA automatically applies what it learned from the earlier interaction. It starts by modifying the search query in the retrieval stage, tailoring it to the context:

Then, it produces a PostgreSQL-specific response:

By precisely routing the feedback to the appropriate retrieval and response generation components, ALHF ensures that the agent learns and generalizes effectively from expert feedback.
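The routing idea can be sketched as a pipeline in which each stage takes the relevant feedback as an extra argument. This illustrates the idea under stated assumptions; it is not KA's implementation. The stage names, the keyword rule standing in for an LLM call, and the placeholder retriever are all hypothetical. The point is structural: the same piece of feedback changes both the search query and the final answer, even though nobody wrote a rule for this particular question.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage maps (stage input, relevant feedback) -> stage output.
Stage = Callable[[str, list[str]], str]


@dataclass
class Component:
    """A pipeline stage parameterized by feedback (hypothetical interface)."""
    name: str
    run: Stage


def mentions_postgres(feedback: list[str]) -> bool:
    return any("PostgreSQL" in fb for fb in feedback)


def rewrite_query(question: str, feedback: list[str]) -> str:
    # Toy rule standing in for an LLM query rewriter: scope the search to PostgreSQL.
    return f"{question} PostgreSQL" if mentions_postgres(feedback) else question


def generate_answer(context: str, feedback: list[str]) -> str:
    # Toy rule standing in for an LLM answer generator conditioned on feedback.
    dialect = "PostgreSQL" if mentions_postgres(feedback) else "generic SQL"
    return f"[{dialect}] answer grounded in: {context}"


def retrieve_docs(query: str) -> str:
    # Placeholder retriever; a real one would search a document index.
    return f"docs for '{query}'"


def answer(question: str, feedback: list[str], rewriter: Component, generator: Component) -> str:
    query = rewriter.run(question, feedback)   # retrieval stage adapts first
    context = retrieve_docs(query)
    return generator.run(context, feedback)    # then the response stage adapts


rewriter = Component("query_rewriter", rewrite_query)
generator = Component("answer_generator", generate_answer)
```

In a full system, the feedback list passed to `answer` would come from a scoping step. That keeps two concerns separate: which feedback applies at all, and which components act on it.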

What ALHF Means for You: Inside Agent Bricks

Agent Learning from Human Feedback (ALHF) represents a significant step forward in enabling AI agents to truly understand and adapt to expert expectations. By letting natural language feedback incrementally shape an agent’s behavior, ALHF provides a flexible, intuitive, and powerful mechanism for steering AI systems toward specific enterprise needs. Our case study with Knowledge Assistant demonstrates how ALHF can dramatically boost response quality and adherence to expert expectations, even with minimal feedback. As Patrick Vinton, Chief Technology Officer at Analytics8, a KA customer, said:

“Leveraging Agent Bricks, Analytics8 achieved a 40% increase in answer accuracy with 800% faster implementation times for our use cases, ranging from simple HR assistants to complex research assistants sitting on top of extremely technical, multimodal white papers and documentation. Post launch, we’ve also observed that answer quality continues to climb.”

ALHF is now a built-in capability within the Agent Bricks product, empowering Databricks customers to deploy highly customized enterprise AI solutions. We encourage all customers interested in leveraging the power of teachable AI to connect with their Databricks Account Teams and try Knowledge Assistant and other Agent Bricks use cases to explore how ALHF can transform their generative AI workflows.

Veronica Lyu and Kartik Sreenivasan contributed equally.