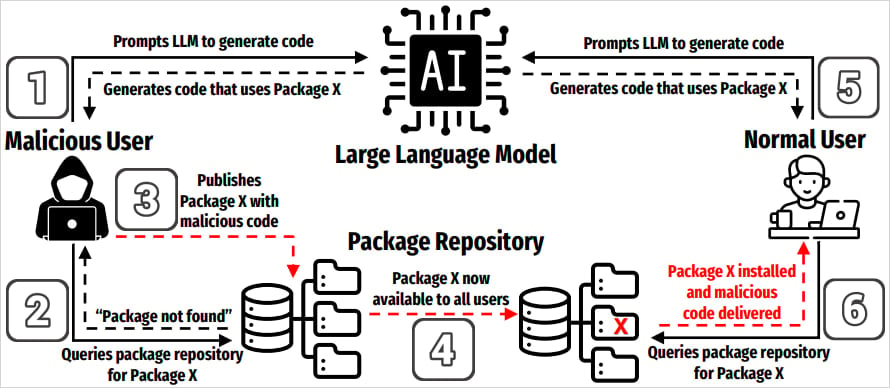

A new class of supply chain attacks named ‘slopsquatting’ has emerged from the increased use of generative AI tools for coding and the models' tendency to “hallucinate” non-existent package names.

The term slopsquatting was coined by security researcher Seth Larson as a spin on typosquatting, an attack method that tricks developers into installing malicious packages by using names that closely resemble popular libraries.

Unlike typosquatting, slopsquatting doesn't rely on misspellings. Instead, threat actors could create malicious packages on indexes like PyPI and npm named after ones commonly made up by AI models in coding examples.
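
To make the distinction concrete, here is a minimal Python sketch, assuming a hypothetical allowlist of popular package names. A simple edit-distance check of the kind that can flag typosquats catches a misspelling like `reqeusts`, but it says nothing about a plausible-sounding invented name, because that name is not a near-miss of any real library. The package list, the invented name, and the threshold of 2 are illustrative assumptions, not part of any registry's actual tooling.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # deletion
                cur[j - 1] + 1,            # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if the characters match)
            ))
        prev = cur
    return prev[-1]


# Hypothetical allowlist of popular package names, for illustration only.
POPULAR = {"requests", "numpy", "pandas", "flask"}


def looks_like_typosquat(name: str, popular: set[str] = POPULAR, max_distance: int = 2) -> bool:
    """True if `name` is a near-miss (but not an exact match) of a popular package."""
    return any(0 < levenshtein(name, p) <= max_distance for p in popular)


print(looks_like_typosquat("reqeusts"))              # → True (misspelling of "requests")
print(looks_like_typosquat("flask-session-guardx"))  # → False (hypothetical invented name)
```

This is part of why slopsquatting is harder to spot: a hallucinated name only has to sound plausible, not resemble a specific library.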

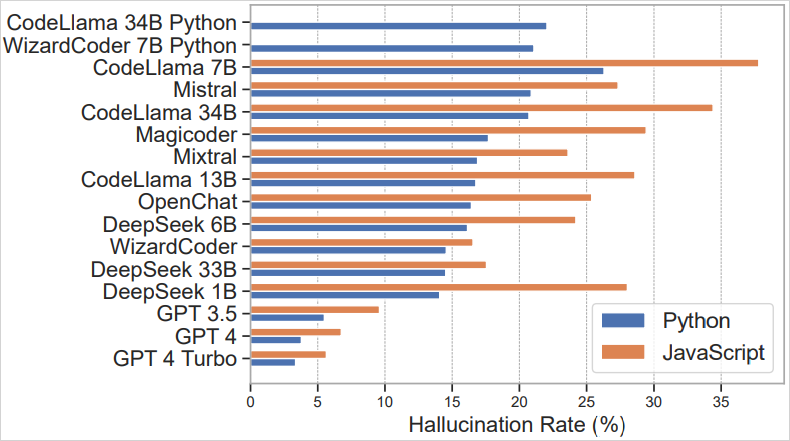

A research paper about package hallucinations published in March 2025 demonstrates that in roughly 20% of the examined cases (576,000 generated Python and JavaScript code samples), recommended packages didn't exist.

The situation is worse on open-source LLMs like CodeLlama, DeepSeek, WizardCoder, and Mistral, but commercial tools like ChatGPT-4 still hallucinated at a rate of about 5%, which is significant.

Hallucination rates for various LLMs

Source: arxiv.org

While the number of unique hallucinated package names logged in the study was large, surpassing 200,000, 43% of these were consistently repeated across similar prompts, and 58% reappeared at least once more within ten runs.

The study showed that 38% of these hallucinated package names appeared to be inspired by real packages, 13% were the results of typos, and 51% were completely fabricated.

Although there are no signs that attackers have started taking advantage of this new type of attack, researchers from open-source cybersecurity company Socket warn that hallucinated package names are common, repeatable, and semantically plausible, creating a predictable attack surface that could be easily weaponized.

“Overall, 58% of hallucinated packages were repeated more than once across ten runs, indicating that a majority of hallucinations are not just random noise, but repeatable artifacts of how the models respond to certain prompts,” explain the Socket researchers.

“That repeatability increases their value to attackers, making it easier to identify viable slopsquatting targets by observing just a small number of model outputs.”
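
The repeatability figure can be illustrated with a small Python sketch. It assumes, hypothetically, that one prompt was re-run several times and that the set of non-existent package names from each run was recorded; it then reports the share of distinct hallucinated names that showed up in more than one run. The names are placeholders, and this is not the researchers' actual measurement code.

```python
from collections import Counter


def repeat_rate(runs: list[set[str]]) -> float:
    """Share of distinct hallucinated names that appear in more than one run."""
    # Each run contributes a given name at most once, because runs are sets.
    counts = Counter(name for run in runs for name in run)
    if not counts:
        return 0.0
    repeated = sum(1 for seen in counts.values() if seen > 1)
    return repeated / len(counts)


# Placeholder results from re-running the same prompt three times.
runs = [
    {"pkg-alpha", "pkg-beta"},
    {"pkg-alpha", "pkg-gamma"},
    {"pkg-delta"},
]
print(repeat_rate(runs))  # → 0.25 (only "pkg-alpha" recurs, out of 4 distinct names)
```

A high rate is what makes the attack practical: an attacker only needs to watch a few outputs to find names worth registering.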

Overview of the supply chain risk

Source: arxiv.org

The most important way to mitigate this risk is to verify package names manually and never assume that a package mentioned in an AI-generated code snippet is real or safe.

Using dependency scanners, lockfiles, and hash verification to pin packages to known, trusted versions is an effective way to improve security.
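
pip already supports hash pinning through its hash-checking mode (`pip install --require-hashes -r requirements.txt`, with `--hash=sha256:...` entries next to each pinned requirement). The Python sketch below shows the underlying check in isolation: stream a downloaded artifact through SHA-256 and reject it if the digest differs from the pinned value. The function names and the wheel file name are illustrative assumptions. A pinned hash only protects against an artifact changing after you vetted it; it cannot help if the package you pinned was malicious from the start.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hex SHA-256 digest of a file, read in chunks so large artifacts fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> None:
    """Raise ValueError unless the file's digest matches the pinned hash."""
    actual = sha256_of(path)
    if actual != pinned_sha256.lower():
        raise ValueError(f"hash mismatch for {path.name}: expected {pinned_sha256}, got {actual}")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        wheel = Path(tmp) / "example_pkg-1.0-py3-none-any.whl"  # hypothetical file name
        wheel.write_bytes(b"not a real wheel")
        pinned = hashlib.sha256(b"not a real wheel").hexdigest()
        verify_artifact(wheel, pinned)  # passes silently when the digest matches
        print("hash OK")
```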

The research has shown that lowering AI “temperature” settings (less randomness) reduces hallucinations, so if you're into AI-assisted or vibe coding, this is an important factor to consider.
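
For readers unfamiliar with the setting: temperature typically divides the model's raw scores (logits) before the softmax that turns them into sampling probabilities. The toy Python sketch below, using made-up scores for three candidate tokens, shows how a lower temperature concentrates probability on the top candidate. It illustrates the standard temperature-scaled softmax only, not any specific vendor's implementation, and a less random sampler can still confidently produce a hallucinated name.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities after dividing them by `temperature`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```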

Ultimately, it is prudent to always test AI-generated code in a safe, isolated environment before running or deploying it in production environments.
