
Another week in the summer of 2025 has begun. Continuing last week's trend, it brings more powerful Chinese open source AI models.

Chinese startup Z.ai, little-known at least to us here in the West, has released two new open source LLMs: GLM-4.5 and GLM-4.5-Air. The company positions them as go-to solutions for AI reasoning, agentic behavior, and coding.

According to Z.ai's blog post, the models perform near the top of the pack alongside leading proprietary LLMs from the U.S.

For instance, the flagship GLM-4.5 matches or outperforms leading proprietary models like Claude 4 Sonnet, Claude 4 Opus, and Gemini 2.5 Pro on evaluations such as BrowseComp, AIME24, and SWE-bench Verified. It also ranks third overall across a dozen competitive tests.

Its lighter-weight sibling, GLM-4.5-Air, also places in the top six, delivering strong results for its smaller size.

Both models have two operating modes. A thinking mode handles complex reasoning and tool use, and a non-thinking mode handles scenarios that need an instant response. The models can automatically generate complete PowerPoint presentations from a single title or prompt, which makes them useful for meeting preparation, education, and internal reporting.

They also offer creative writing, emotionally aware copywriting, and script generation for creating branded content for social media and the web. Z.ai says they also support virtual character development and turn-based dialogue systems for customer support, roleplaying, fan engagement, and digital persona storytelling.

Both models support reasoning, coding, and agentic capabilities. GLM-4.5-Air is designed for teams that want a lighter-weight, more cost-efficient alternative with faster inference and lower resource requirements.

Z.ai also lists several specialized models in the GLM-4.5 family on its API. These include GLM-4.5-X and GLM-4.5-AirX for ultra-fast inference, and GLM-4.5-Flash, a free variant optimized for coding and reasoning tasks.

The models are available now for direct use on Z.ai. Developers can also reach them through the Z.ai application programming interface (API) to connect third-party apps, and the code is available on Hugging Face and ModelScope. The company also provides several integration routes, including support for inference via vLLM and SGLang.

Licensing and API pricing

GLM-4.5 and GLM-4.5-Air are released under the Apache 2.0 license, a permissive and commercially friendly open-source license.

This allows developers and organizations to freely use, modify, self-host, fine-tune, and redistribute the models for both research and commercial purposes.

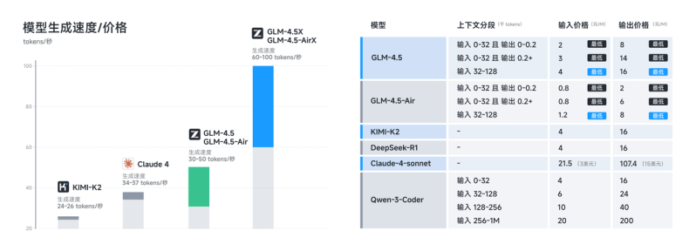

Some users won't want to download the model code or weights and self-host or deploy the models themselves. For them, Z.ai's cloud-based API offers the models at the following prices.

GLM-4.5:

$0.60 / $2.20 per 1 million input/output tokens

GLM-4.5-Air:

$0.20 / $1.10 per 1 million input/output tokens

A CNBC article on the models reported that Z.ai would charge only $0.11 / $0.28 per million input/output tokens. A Chinese-language graphic that the company posted in its API documentation for the "Air model" also supports this figure.

However, this rate appears to apply only to requests with up to 32,000 input tokens and 200 output tokens at a time. (Recall that tokens are the numerical units an LLM uses to represent different semantic concepts and word components. They are the LLM's native language, and each token corresponds to a word or part of a word.)
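A short Python sketch makes the per-million-token arithmetic concrete by estimating the cost of a single request from the list prices above. The `PRICES` table copies the article's figures. The discounted Air tier follows our reading of the CNBC report and the Chinese graphic (up to 32,000 input and 200 output tokens per request), so `discount_tier_applies` is a hypothetical rule, not Z.ai's actual billing logic.

```python
from decimal import Decimal

# Per-million-token list prices in USD (input, output), copied from the article.
PRICES = {
    "glm-4.5": (Decimal("0.60"), Decimal("2.20")),
    "glm-4.5-air": (Decimal("0.20"), Decimal("1.10")),
}
# Hypothetical discounted Air tier, based on the CNBC-reported $0.11 / $0.28 rates.
AIR_DISCOUNT = (Decimal("0.11"), Decimal("0.28"))
MILLION = Decimal(1_000_000)


def discount_tier_applies(model: str, input_tokens: int, output_tokens: int) -> bool:
    """Assumed rule: the cheap Air tier covers <=32K input and <=200 output tokens."""
    return model == "glm-4.5-air" and input_tokens <= 32_000 and output_tokens <= 200


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimated USD cost of one request; Decimal avoids float rounding noise."""
    if discount_tier_applies(model, input_tokens, output_tokens):
        in_rate, out_rate = AIR_DISCOUNT
    else:
        in_rate, out_rate = PRICES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / MILLION


if __name__ == "__main__":
    print(request_cost("glm-4.5", 100_000, 20_000))      # 0.104
    print(request_cost("glm-4.5-air", 100_000, 20_000))  # 0.042
    print(request_cost("glm-4.5-air", 32_000, 200))      # 0.003576 (assumed tier)
```

Using `Decimal` keeps sums like $0.60 + $2.20 exact, which floats cannot guarantee.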

In fact, the Chinese-language graphic shows even more detailed pricing for both models, broken down by tiers of input and output tokens. I've tried to translate it below:

One more note: Z.ai is based in China, and the API may be subject to Chinese content restrictions. Users in the West who are focused on data sovereignty should do due diligence against their internal policies before adopting it.

Competitive performance on third-party benchmarks, approaching that of leading closed/proprietary LLMs

GLM-4.5 ranks third across 12 industry benchmarks measuring agentic, reasoning, and coding performance, trailing only OpenAI's GPT-4 and xAI's Grok 4. GLM-4.5-Air, its more compact sibling, lands in sixth place.

In agentic evaluations, GLM-4.5 performs on par with Claude 4 Sonnet and beats Claude 4 Opus on web-based tasks. It reaches 26.4% accuracy on the BrowseComp benchmark, compared with 18.8% for Claude 4 Opus. In the reasoning category, it scores competitively on tasks such as MATH 500 (98.2%), AIME24 (91.0%), and GPQA (79.1%).

For coding, GLM-4.5 posts a 64.2% success rate on SWE-bench Verified and 37.5% on Terminal-Bench. In pairwise comparisons, it beats Qwen3-Coder with an 80.8% win rate and beats Kimi K2 in 53.9% of tasks. Integration with tools such as Claude Code, Roo Code, and CodeGeeX further strengthens its agentic coding abilities.

The model also leads in tool-calling reliability. Its success rate of 90.6% edges out Claude 4 Sonnet and the new-ish Kimi K2.

Part of the wave of open source Chinese LLMs

GLM-4.5 arrives amid a surge of competitive open-source model launches in China, most notably from Alibaba's Qwen Team.

In a single week, Qwen released four new open-source LLMs. These include the reasoning-focused Qwen3-235B-A22B-Thinking-2507, which now tops or matches leading models such as OpenAI's o4-mini and Google's Gemini 2.5 Pro on reasoning benchmarks like AIME25, LiveCodeBench, and GPQA.

This week, Alibaba continued the trend by releasing Wan 2.2, a powerful new open source video model.

Like Z.ai's models, Alibaba's new models are licensed under Apache 2.0, which permits commercial use, self-hosting, and integration into proprietary systems.

Alibaba's offerings are broadly available and permissively licensed, as was Chinese startup Moonshot's earlier Kimi K2 model. Together they reflect an ongoing strategic effort by Chinese AI companies to position open-source infrastructure as a viable alternative to closed U.S.-based models.

This also puts pressure on U.S.-based model providers to compete in open source. Meta has been on a hiring spree since its Llama 4 model family debuted earlier this year to a mixed reception from the AI community. That reception included a healthy dose of criticism over what some AI power users saw as benchmark gaming and inconsistent performance.

Meanwhile, OpenAI co-founder and CEO Sam Altman recently announced a delay to OpenAI's long-awaited and much-hyped frontier open source LLM, its first since before ChatGPT launched in late 2022. Originally planned for July, the release has been pushed to an as-yet unspecified later date.

Architecture and training lessons revealed

GLM-4.5 has 355 billion total parameters, of which 32 billion are active. Its counterpart, GLM-4.5-Air, is lighter, with 106 billion total and 12 billion active parameters.
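The gap between total and active parameters is what makes a Mixture-of-Experts model cheap to run per token. The quick check below uses the article's parameter counts. It relies on the common rule of thumb that a forward pass costs roughly 2 FLOPs per active parameter per token, an approximation that ignores attention cost over long contexts.

```python
# Parameter counts from the article, in billions.
MODELS = {
    "GLM-4.5": {"total": 355, "active": 32},
    "GLM-4.5-Air": {"total": 106, "active": 12},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the weights that participate in computing any single token."""
    return active_b / total_b


def approx_forward_gflops_per_token(active_b: float) -> float:
    """Rule of thumb: ~2 FLOPs (one multiply, one add) per active parameter."""
    return 2 * active_b  # billions of parameters * 2 -> GFLOPs


for name, p in MODELS.items():
    frac = active_fraction(p["total"], p["active"])
    gflops = approx_forward_gflops_per_token(p["active"])
    print(f"{name}: {frac:.1%} of weights active, ~{gflops:.0f} GFLOPs/token")
# GLM-4.5: 9.0% of weights active, ~64 GFLOPs/token
# GLM-4.5-Air: 11.3% of weights active, ~24 GFLOPs/token
```

Per-token compute tracks the active count, but memory still has to hold all of the total parameters.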

Both use a Mixture-of-Experts (MoE) architecture. It is optimized with loss-free balance routing, sigmoid gating, and added depth for stronger reasoning.
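The article does not detail Z.ai's routing code. However, "sigmoid gating" plus "loss-free balance routing" matches the auxiliary-loss-free scheme popularized by DeepSeek-V3, which works in three steps:

- Each expert receives an independent sigmoid score.
- A per-expert bias, used only to decide which experts are selected, is nudged up for underused experts and down for overloaded ones.
- The mixing weights are computed from the unbiased scores.

The pure-Python sketch below illustrates that idea. The class name, `bias_lr`, and the sign-based update rule are assumptions, not GLM-4.5's implementation.

```python
import math
from typing import Dict, List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class SigmoidTopKRouter:
    """Top-k MoE router with sigmoid scores and bias-based load balancing."""

    def __init__(self, num_experts: int, k: int, bias_lr: float = 0.01):
        self.k = k
        self.bias_lr = bias_lr
        # Per-expert bias: shifts expert selection only, never the mixing weights.
        self.bias = [0.0] * num_experts

    def route(self, logits: List[float]) -> Dict[int, float]:
        """Pick k experts for one token; return {expert_index: mixing_weight}."""
        scores = [sigmoid(z) for z in logits]  # independent per-expert affinities
        ranked = sorted(range(len(scores)),
                        key=lambda e: scores[e] + self.bias[e], reverse=True)
        chosen = ranked[: self.k]
        total = sum(scores[e] for e in chosen)
        # Normalize the unbiased scores of the chosen experts.
        return {e: scores[e] / total for e in chosen}

    def update_bias(self, load: List[int]) -> None:
        """After a batch, lower the bias of overloaded experts and raise underloaded ones."""
        mean = sum(load) / len(load)
        for e, count in enumerate(load):
            if count > mean:
                self.bias[e] -= self.bias_lr
            elif count < mean:
                self.bias[e] += self.bias_lr


router = SigmoidTopKRouter(num_experts=4, k=2)
print(router.route([2.0, 1.0, 0.0, -1.0]))  # experts 0 and 1 are chosen
router.update_bias([10, 10, 0, 0])          # experts 0 and 1 were overloaded
print(sorted(router.route([0.0, 0.0, 0.0, 0.0])))  # [2, 3]: ties now favor idle experts
```

Balancing happens without an auxiliary loss term. The bias steers traffic while the gradient-carrying weights stay untouched.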

The self-attention block uses Grouped-Query Attention with more attention heads. A Multi-Token Prediction (MTP) layer enables speculative decoding during inference.
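In speculative decoding, a cheap drafter proposes several tokens, and the full model then checks them and keeps the longest prefix it agrees with. Here the drafter stands in for the role the MTP layer is assumed to play. The minimal greedy-verification sketch below uses toy next-token functions in place of real models. A real server would score all drafted positions in one batched forward pass of the target model rather than in the loop shown here.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # greedy next-token function: context -> token


def speculative_step(prefix: List[int], draft: NextToken,
                     target: NextToken, k: int) -> List[int]:
    """One round of draft-then-verify decoding with greedy acceptance."""
    # 1. The cheap drafter proposes k tokens autoregressively.
    proposal, ctx = [], list(prefix)
    for _ in range(k):
        tok = draft(ctx)
        proposal.append(tok)
        ctx.append(tok)

    # 2. The target model checks each proposed position in order.
    accepted, ctx = [], list(prefix)
    for tok in proposal:
        expected = target(ctx)
        if expected != tok:
            accepted.append(expected)  # first mismatch: keep the target's token, stop
            return accepted
        accepted.append(tok)
        ctx.append(tok)

    # 3. Every drafted token matched, so the target contributes one bonus token.
    accepted.append(target(ctx))
    return accepted


def target_model(ctx: List[int]) -> int:
    """Toy target: emits len(context) % 5."""
    return len(ctx) % 5


def draft_model(ctx: List[int]) -> int:
    """Toy drafter: agrees with the target only for short contexts."""
    return len(ctx) % 5 if len(ctx) < 3 else 0


print(speculative_step([9], draft_model, target_model, k=4))   # [1, 2, 3]
print(speculative_step([9], target_model, target_model, k=3))  # [1, 2, 3, 4]
```

Each round emits at least one token. When the drafter is usually right, one round can emit up to k + 1 tokens.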

Pre-training covers 22 trillion tokens, split between general-purpose and code/reasoning corpora. Mid-training adds 1.1 trillion tokens from repo-level code data, synthetic reasoning inputs, and long-context/agentic sources.

Z.ai's post-training for GLM-4.5 relied on a reinforcement learning phase powered by slime, the company's in-house RL infrastructure. Slime separates data generation from model training to maximize throughput on agentic tasks.
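Separating rollout generation from training is essentially a producer-consumer pattern. Rollout workers keep generating trajectories while the trainer pulls batches from a shared buffer, so neither side sits idle waiting for the other. The sketch below illustrates the pattern with Python threads and a bounded `queue.Queue`. It is not slime's API, and `rollout_worker` and `train_on_stream` are hypothetical.

```python
import queue
import threading
from typing import Dict, List


def rollout_worker(worker_id: int, num_rollouts: int, buffer: queue.Queue) -> None:
    """Hypothetical generator: pushes finished trajectories into the shared buffer."""
    for i in range(num_rollouts):
        # A real worker would run the policy in an agentic environment here.
        buffer.put({"worker": worker_id, "trajectory": f"traj-{worker_id}-{i}"})


def train_on_stream(buffer: queue.Queue, total: int, batch_size: int) -> List[List[Dict]]:
    """Hypothetical trainer: consumes trajectories as they arrive, in fixed-size batches."""
    batches, batch = [], []
    for _ in range(total):
        batch.append(buffer.get())  # blocks until some worker has produced data
        if len(batch) == batch_size:
            batches.append(batch)   # a real trainer would take a gradient step here
            batch = []
    if batch:
        batches.append(batch)
    return batches


buffer: queue.Queue = queue.Queue(maxsize=8)  # bounded, so generators can't run far ahead
workers = [threading.Thread(target=rollout_worker, args=(w, 5, buffer)) for w in range(3)]
for t in workers:
    t.start()
batches = train_on_stream(buffer, total=15, batch_size=4)
for t in workers:
    t.join()
print([len(b) for b in batches])  # [4, 4, 4, 3]
```

The bounded buffer caps how stale a trajectory can get relative to the current policy. A real RL system has to manage that trade-off explicitly.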

Two of the techniques they used were mixed-precision rollouts and adaptive curriculum learning.

Mixed-precision rollouts let the model train faster and more efficiently, because generating data with lower-precision math costs little accuracy.
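Comparing 32-bit and 16-bit storage shows why lower-precision rollouts cost little accuracy. Python's standard library has no FP8 or BF16 type, so this sketch uses IEEE half precision (`struct` format `'e'`) as a stand-in. The article does not say which formats Z.ai uses for rollouts, and the sample values are arbitrary.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def relative_error(x: float) -> float:
    """Relative rounding error introduced by storing x in half precision."""
    return abs(to_fp16(x) - x) / abs(x)


values = [3.14159, -0.5772, 12.345, 0.001234, -7.389]  # arbitrary sample activations
worst = max(relative_error(v) for v in values)
print(f"bytes per value: fp32={struct.calcsize('<f')}, fp16={struct.calcsize('<e')}")
print(f"worst relative error: {worst:.2e}")
```

Half precision halves memory and bandwidth per value. For normal-range numbers, rounding error stays below 2^-11 (about 0.05%).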

With adaptive curriculum learning, the model starts with easier tasks and gradually moves to harder ones, which helps it learn more complex tasks over time.
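One common way to make a curriculum adaptive is to track a rolling success rate and raise the difficulty once that rate crosses a threshold. The article does not say how Z.ai schedules task difficulty, so the threshold, window size, and promotion rule in this minimal sketch are assumptions.

```python
from collections import deque


class AdaptiveCurriculum:
    """Promote to a harder difficulty level once recent success is high enough."""

    def __init__(self, num_levels: int, promote_at: float = 0.8, window: int = 10):
        self.num_levels = num_levels
        self.promote_at = promote_at
        self.level = 0                       # 0 = easiest tasks
        self.recent = deque(maxlen=window)   # rolling record of task outcomes

    def record(self, success: bool) -> int:
        """Log one task outcome and return the (possibly updated) level."""
        self.recent.append(success)
        window_full = len(self.recent) == self.recent.maxlen
        rate = sum(self.recent) / len(self.recent)
        if window_full and rate >= self.promote_at and self.level < self.num_levels - 1:
            self.level += 1
            self.recent.clear()  # measure afresh at the new difficulty
        return self.level


curriculum = AdaptiveCurriculum(num_levels=3, promote_at=0.8, window=5)
outcomes = [True, True, False, True, True,    # 4/5 = 0.8 -> promote to level 1
            False, False, True, False, True]  # 2/5 -> stay at level 1
for ok in outcomes:
    level = curriculum.record(ok)
print(level)  # 1
```

Clearing the window after each promotion keeps old successes on easy tasks from triggering a second, premature promotion.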

GLM-4.5's architecture prioritizes computational efficiency. According to CNBC, Z.ai CEO Zhang Peng said the model runs on just eight Nvidia H20 GPUs, custom silicon designed for the Chinese market to comply with U.S. export controls. That is roughly half the hardware that DeepSeek's comparable models require.
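A rough memory budget shows why eight GPUs can be enough. The sketch assumes 96 GB of memory per H20 and counts only the weights, leaving out the KV cache and activations. Under those assumptions, the 355B-parameter model fits comfortably at 8-bit precision and only barely at 16-bit. The article does not say which precision Z.ai's deployment uses.

```python
GPU_MEM_GB = 96                  # assumed memory capacity per Nvidia H20
NUM_GPUS = 8
TOTAL_PARAMS = 355_000_000_000   # GLM-4.5 total parameters (all experts must be resident)


def weight_gb(params: int, bytes_per_param: int) -> int:
    """Weights-only footprint in decimal gigabytes (1 GB = 10**9 bytes)."""
    return params * bytes_per_param // 10**9


budget = GPU_MEM_GB * NUM_GPUS   # 768 GB across the node
for label, nbytes in [("BF16", 2), ("FP8", 1)]:
    need = weight_gb(TOTAL_PARAMS, nbytes)
    print(f"{label}: {need} GB of weights, {budget - need} GB left for KV cache etc.")
# BF16: 710 GB of weights, 58 GB left for KV cache etc.
# FP8: 355 GB of weights, 413 GB left for KV cache etc.
```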

Interactive demos

In its blog post, Z.ai highlights full-stack development, slide creation, and interactive artifact generation as demonstration areas.

Examples include a Flappy Bird clone, a Pokémon Pokédex web app, and slide decks built from structured documents or web queries.

Users can try these features on the Z.ai chat platform or through API integration.

Company background and market position

According to CNBC, Z.ai was founded in 2019 under the name Zhipu and has since grown into one of China's most prominent AI startups.

The company has raised over $1.5 billion from investors including Alibaba, Tencent, Qiming Venture Partners, and municipal funds from Hangzhou and Chengdu. It has additional backing from Aramco-linked Prosperity7 Ventures.

The GLM-4.5 launch coincides with the World Artificial Intelligence Conference in Shanghai, where several Chinese firms showcased their advances. Z.ai was also named in a June OpenAI report on Chinese progress in AI. It has since been added to a U.S. entity list that restricts business with American firms.

What it means for enterprise technical decision-makers

Some readers are senior AI engineers, data engineers, or AI orchestration leads responsible for building, deploying, or scaling language models in production. For them, the release of the GLM-4.5 family under the Apache 2.0 license meaningfully expands the available options.

The models deliver performance that rivals top proprietary systems on reasoning, coding, and agentic benchmarks. They also come with full weight access, commercial usage rights, and flexible deployment across cloud, private, or on-prem environments.

GLM-4.5 and GLM-4.5-Air lower the barriers to testing and scaling for teams that manage LLM lifecycles. That includes leading model fine-tuning, orchestrating multi-stage pipelines, and integrating models with internal tools.

The models support standard OpenAI-style interfaces and tool-calling formats. This makes them easier to evaluate in sandboxed environments or to drop into existing agent frameworks.
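Because the models accept OpenAI-style chat requests, a tool-calling request is just a JSON body with `messages` and `tools` fields. The sketch below only builds the payload and parses a mocked response, so it runs offline. The model id `glm-4.5`, the `get_weather` tool, and the mocked reply are illustrative assumptions. Take the actual endpoint URL and authentication details from Z.ai's API documentation.

```python
import json


def build_chat_request(model: str, user_text: str) -> dict:
    """OpenAI-style chat completion body with one function tool."""
    return {
        "model": model,  # assumed model id
        "messages": [{"role": "user", "content": user_text}],
        "tools": [{
            "type": "function",
            "function": {
                "name": "get_weather",  # hypothetical tool
                "description": "Look up current weather for a city.",
                "parameters": {
                    "type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"],
                },
            },
        }],
    }


def extract_tool_calls(response: dict) -> list:
    """Return (name, parsed_arguments) pairs from an OpenAI-style response."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    # In the OpenAI format, function arguments arrive as a JSON-encoded string.
    return [(c["function"]["name"], json.loads(c["function"]["arguments"])) for c in calls]


body = build_chat_request("glm-4.5", "What's the weather in Beijing?")
payload = json.dumps(body)  # what would be POSTed to the chat completions endpoint

mock_response = {  # hand-written example of the OpenAI response shape, not real output
    "choices": [{"message": {"role": "assistant", "tool_calls": [{
        "id": "call_1", "type": "function",
        "function": {"name": "get_weather", "arguments": "{\"city\": \"Beijing\"}"},
    }]}}]
}
print(extract_tool_calls(mock_response))  # [('get_weather', {'city': 'Beijing'})]
```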

GLM-4.5 also supports streaming output, context caching, and structured JSON responses, which smooths integration with enterprise systems and real-time interfaces. For teams building autonomous tools, its deep thinking mode gives more precise control over multi-step reasoning.
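OpenAI-compatible APIs typically deliver streaming output as server-sent events. Each `data:` line carries a JSON chunk whose `choices[0].delta.content` holds the next text fragment, and `data: [DONE]` ends the stream. This sketch assumes GLM-4.5's stream follows that convention, which is worth confirming in Z.ai's docs. It reassembles the fragments and then parses the result as a structured JSON response. The sample lines are invented for illustration.

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[str]) -> str:
    """Concatenate content deltas from OpenAI-style SSE lines."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        delta = chunk["choices"][0].get("delta", {})
        parts.append(delta.get("content") or "")
    return "".join(parts)


sample = [
    'data: {"choices": [{"delta": {"role": "assistant", "content": ""}}]}',
    'data: {"choices": [{"delta": {"content": "{\\"status\\": "}}]}',
    'data: {"choices": [{"delta": {"content": "\\"ok\\"}"}}]}',
    "",
    "data: [DONE]",
]
text = collect_stream(sample)
result = json.loads(text)  # structured JSON output, parsed once the stream completes
print(result)  # {'status': 'ok'}
```

Parse the JSON only after the stream ends, because individual fragments are usually not valid JSON on their own.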

For teams under budget constraints or trying to avoid vendor lock-in, the pricing undercuts major competitors like DeepSeek and Kimi K2. This matters for organizations where usage volume, long-context tasks, or data sensitivity make open deployment a strategic necessity.

Many AI infrastructure and orchestration professionals implement CI/CD pipelines, monitor models in production, or manage GPU clusters. For them, GLM-4.5's support for vLLM, SGLang, and mixed-precision inference fits current best practices for efficient, scalable model serving. The model also comes with open-source RL infrastructure (slime) and a modular training stack, which make it flexible to tune or extend for domain-specific environments.

In short, GLM-4.5 gives enterprise teams a viable, high-performing foundation model that they can control, adapt, and scale without being tied to proprietary APIs or pricing structures. It is a compelling option for teams balancing innovation, performance, and operational constraints.
