Retrieval Augmented Generation (RAG) is a well-known approach to building generative AI applications. RAG combines large language models (LLMs) with external knowledge retrieval and is increasingly popular for adding accuracy and personalization to AI. It retrieves relevant information from external sources, augments the input with this data, and generates responses based on both. This approach reduces hallucinations, improves factual accuracy, and enables up-to-date, efficient, and explainable AI systems. RAG's ability to overcome the limitations of classical language models has made it applicable to a broad range of AI use cases.
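To make the retrieve, augment, and generate loop concrete, here is a minimal, self-contained sketch. It is not how OpenSearch implements RAG: retrieval is a naive word-overlap ranking standing in for vector search, and `generate` is a hypothetical stub in place of a real LLM call.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase word tokens; a crude stand-in for embeddings.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    # Toy retriever: rank documents by how many question words they share.
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(question: str, context: list[str]) -> str:
    # Put the retrieved passages in front of the question.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"


def generate(prompt: str) -> str:
    # Hypothetical stub: a real system would send `prompt` to an LLM here.
    return f"[LLM response to a {len(prompt)}-character prompt]"


docs = [
    "Brian Lara scored 400 not out in a Test innings.",
    "Adam Gilchrist was an Australian wicketkeeper-batsman.",
    "OpenSearch supports k-NN vector search.",
]
question = "What was Brian Lara's highest Test score?"
context = retrieve(question, docs, k=1)
print(context)
print(generate(augment(question, context)))
```

In the solution described in this post, OpenSearch vector search replaces the toy retriever and an Amazon Bedrock model replaces the stub.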

Amazon OpenSearch Service is a versatile search and analytics tool. It can perform security analytics, search data, analyze logs, and handle many other tasks. It can also work with vector data through the k-nearest neighbors (k-NN) plugin, which makes it useful for more complex search strategies. Because of this feature, OpenSearch Service can serve as a knowledge base for generative AI applications that integrate language generation with search results.

Conversational search enhances RAG by preserving context over multiple exchanges, refining responses, and providing a more seamless user experience. It helps with complex information needs, resolves ambiguities, and manages multi-turn reasoning. Although standard RAG performs well for single queries, conversational search provides a more natural and personalized interaction that yields more accurate and pertinent results.

In this post, we explore conversational search, its architecture, and various ways to implement it.

Solution overview

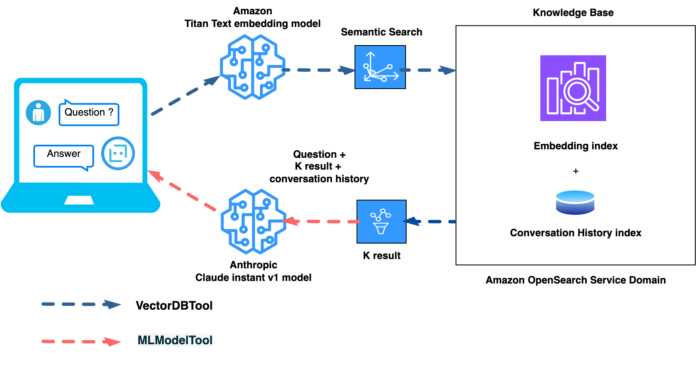

Let’s stroll by means of the answer to construct conversational search. The next diagram illustrates the answer structure.

Conversational search is built with a new OpenSearch feature known as agents and tools. Agents coordinate a variety of machine learning (ML) tasks to build sophisticated AI applications. Each agent can use several tools, each intended for a specific function. Agents and tools require OpenSearch version 2.13 or later.
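Conceptually, a flow-style agent runs its tools in order and makes each tool's output available to the tools that come after it. The toy sketch below illustrates only that idea; `FlowAgent` and the two tool functions are hypothetical and are not the ML Commons API. The `<tool name>.output` key mirrors the `${parameters.cricket_knowledge_base.output}` placeholder used later in this post.

```python
from typing import Callable, Dict, List, Tuple

# A tool takes the shared parameter dict and returns a string result.
Tool = Callable[[Dict[str, str]], str]


class FlowAgent:
    """Hypothetical toy agent: runs tools sequentially over shared parameters."""

    def __init__(self, tools: List[Tuple[str, Tool]]):
        self.tools = tools

    def execute(self, params: Dict[str, str]) -> str:
        params = dict(params)  # don't mutate the caller's dict
        output = ""
        for name, tool in self.tools:
            output = tool(params)
            # Expose the result to later tools, e.g. "retriever.output".
            params[f"{name}.output"] = output
        return output  # the last tool's output is the agent's answer


def retriever(p: Dict[str, str]) -> str:
    # Hypothetical retrieval step.
    return f"docs about {p['question']}"


def answerer(p: Dict[str, str]) -> str:
    # Hypothetical generation step that reads the retriever's output.
    return f"Answer based on: {p['retriever.output']}"


agent = FlowAgent([("retriever", retriever), ("answerer", answerer)])
print(agent.execute({"question": "Lara"}))
```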

Prerequisites

To implement this solution, you need an AWS account. If you don't have one, you can create an account. You also need an OpenSearch Service domain with OpenSearch version 2.13 or later. You can use an existing domain or create a new domain.

To use the Amazon Titan Text Embeddings and Anthropic Claude Instant v1 models in Amazon Bedrock, you need to enable access to these foundation models (FMs). For instructions, refer to Add or remove access to Amazon Bedrock foundation models.

Configure IAM permissions

Complete the following steps to set up an AWS Identity and Access Management (IAM) role and user with appropriate permissions:

Create an IAM role with the following policy, which allows the OpenSearch Service domain to invoke the Amazon Bedrock API:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Statement1",
      "Effect": "Allow",
      "Action": [
        "bedrock:InvokeAgent",
        "bedrock:InvokeModel"
      ],
      "Resource": [
        "arn:aws:bedrock:${Region}::foundation-model/amazon.titan-embed-text-v1",
        "arn:aws:bedrock:${Region}::foundation-model/anthropic.claude-instant-v1"
      ]
    }
  ]
}
```

Specify the AWS Region and models you use in the Resource section.

Add opensearchservice.amazonaws.com as a trusted entity.

Make a note of the IAM role's Amazon Resource Name (ARN).

Assign the preceding policy to the IAM user that will create the connector.

Create a passRole policy for the role you created in the first step and assign it to the IAM user that will create the connector using Python:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": "arn:aws:iam::${AccountId}:role/OpenSearchBedrock"
    }
  ]
}
```

Map the IAM role you created to the OpenSearch Service domain role using the following steps:

Log in to OpenSearch Dashboards and open the Security page from the navigation menu.

Choose Roles and select ml_all_access.

Choose Mapped Users and Manage Mapping.

Under Users, add the ARN of the IAM user you created.
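If you manage these policies as code, a small helper can fill in the Region and model IDs so the Resource ARNs stay consistent. This is a sketch with hypothetical function names. The trust policy uses the standard `sts:AssumeRole` form with `opensearchservice.amazonaws.com` as the service principal, which matches the trusted entity named in the steps above.

```python
import json


def bedrock_invoke_policy(region: str, model_ids: list[str]) -> dict:
    # Permissions policy that lets the role invoke the listed Bedrock models.
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "Statement1",
            "Effect": "Allow",
            "Action": ["bedrock:InvokeAgent", "bedrock:InvokeModel"],
            "Resource": [
                f"arn:aws:bedrock:{region}::foundation-model/{m}" for m in model_ids
            ],
        }],
    }


def opensearch_trust_policy() -> dict:
    # Trust policy so that OpenSearch Service can assume the role.
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "opensearchservice.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


policy = bedrock_invoke_policy(
    "us-east-1", ["amazon.titan-embed-text-v1", "anthropic.claude-instant-v1"]
)
print(json.dumps(policy, indent=2))
print(json.dumps(opensearch_trust_policy(), indent=2))
```

You could paste the printed JSON into the IAM console or pass it to your infrastructure tooling.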

Establish a connection to the Amazon Bedrock model using the MLCommons plugin

To identify patterns and relationships, an embedding model transforms input data, such as words or images, into numerical vectors in a continuous space. Similar items end up close together in that space, which makes it easier for AI systems to understand and respond to intricate user inquiries.

Semantic search focuses on the intent and meaning of a query. OpenSearch uses text embedding models to transform data into dense vectors (lists of numbers) and stores them in a vector index for retrieval. We're using amazon.titan-embed-text-v1 hosted on Amazon Bedrock, but you should evaluate and choose the right model for your use case. The amazon.titan-embed-text-v1 model maps sentences and paragraphs to a 1,536-dimensional dense vector space and is optimized for semantic search.
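To see why dense vectors enable semantic search, the following sketch ranks documents by cosine similarity to a query vector. The 3-dimensional vectors are made up for illustration. Real Titan embeddings have 1,536 dimensions and come from the model, and OpenSearch runs approximate k-NN search rather than the brute-force loop shown here.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of the norms.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def knn(query: list[float], corpus: dict[str, list[float]], k: int) -> list[str]:
    # Brute-force k-nearest neighbors, most similar first.
    return sorted(corpus, key=lambda doc: cosine(query, corpus[doc]), reverse=True)[:k]


# Made-up vectors: the two batting records point in similar directions.
corpus = {
    "Tendulkar batting record": [0.9, 0.1, 0.0],
    "Lara batting record": [0.8, 0.2, 0.1],
    "OpenSearch log analytics": [0.0, 0.1, 0.9],
}
query = [0.85, 0.15, 0.05]  # imagine this encodes a question about Test runs
print(knn(query, corpus, k=2))
```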

Complete the following steps to establish a connection to the Amazon Bedrock model using the MLCommons plugin:

Establish a connection by using the Python client with the connection blueprint.

Modify the values of the host and region parameters in the provided code block. For this example, we're running the program in Visual Studio Code with Python version 3.9.6, but newer versions should also work.

For the role ARN, use the ARN you created earlier, and run the following script using the credentials of the IAM user you created:

```python
import boto3
import requests
from requests_aws4auth import AWS4Auth

# Replace with your domain endpoint and Region.
host = "https://search-test.us-east-1.es.amazonaws.com/"
region = "us-east-1"
service = "es"

# Sign requests (SigV4) with the current IAM user's credentials.
credentials = boto3.Session().get_credentials()
awsauth = AWS4Auth(credentials.access_key, credentials.secret_key, region, service,
                   session_token=credentials.token)

path = "_plugins/_ml/connectors/_create"
url = host + path

payload = {
    "name": "Amazon Bedrock Connector: embedding",
    "description": "The connector to bedrock Titan embedding model",
    "version": 1,
    "protocol": "aws_sigv4",
    "parameters": {
        "region": "us-east-1",
        "service_name": "bedrock",
        "model": "amazon.titan-embed-text-v1"
    },
    "credential": {
        # Replace accountId: this is the role OpenSearch Service assumes to call Bedrock.
        "roleArn": "arn:aws:iam::accountId:role/opensearch_bedrock_external"
    },
    "actions": [
        {
            "action_type": "predict",
            "method": "POST",
            "url": "https://bedrock-runtime.${parameters.region}.amazonaws.com/model/${parameters.model}/invoke",
            "headers": {
                "content-type": "application/json",
                "x-amz-content-sha256": "required"
            },
            # ${parameters.*} placeholders are filled in by OpenSearch, not by Python.
            "request_body": "{ \"inputText\": \"${parameters.inputText}\" }",
            "pre_process_function": "connector.pre_process.bedrock.embedding",
            "post_process_function": "connector.post_process.bedrock.embedding"
        }
    ]
}

headers = {"Content-Type": "application/json"}
r = requests.post(url, auth=awsauth, json=payload, headers=headers, timeout=15)
print(r.status_code)
print(r.text)
```

Run the Python program. It returns a connector_id:

```
python3 connect_bedrocktitanembedding.py
200
{"connector_id":"nbBe65EByVCe3QrFhrQ2"}
```

Create a model group against which this model will be registered in the OpenSearch Service domain:

```json
POST /_plugins/_ml/model_groups/_register
{
  "name": "embedding_model_group",
  "description": "A model group for bedrock embedding models"
}
```

You get the following output:

```json
{
  "model_group_id": "1rBv65EByVCe3QrFXL6O",
  "status": "CREATED"
}
```

Register a model using the connector_id and model_group_id:

```json
POST /_plugins/_ml/models/_register
{
  "name": "titan_text_embedding_bedrock",
  "function_name": "remote",
  "model_group_id": "1rBv65EByVCe3QrFXL6O",
  "description": "test model",
  "connector_id": "nbBe65EByVCe3QrFhrQ2",
  "interface": {}
}
```

You get the following output:

```json
{
  "task_id": "2LB265EByVCe3QrFAb6R",
  "status": "CREATED",
  "model_id": "2bB265EByVCe3QrFAb60"
}
```

Deploy the model using the model ID:

```
POST /_plugins/_ml/models/2bB265EByVCe3QrFAb60/_deploy
```

You get the following output:

```json
{
  "task_id": "bLB665EByVCe3QrF-slA",
  "task_type": "DEPLOY_MODEL",
  "status": "COMPLETED"
}
```

The model is now deployed, and you can see it in OpenSearch Dashboards on the OpenSearch Plugins page.
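The three calls above depend on each other. Registering the model needs the model group ID, and deploying needs the model ID returned by registration. The following sketch chains them through a caller-supplied `send(method, path, body)` function, which is a hypothetical hook. The response field names (`model_group_id`, `model_id`, `status`) come from the outputs shown above, and `fake_send` simply replays those sample outputs so the sketch runs offline.

```python
from typing import Callable, Optional

# Hypothetical transport hook: send(method, path, body) -> parsed JSON response.
Send = Callable[[str, str, Optional[dict]], dict]


def register_and_deploy(send: Send, connector_id: str,
                        group_name: str, model_name: str) -> tuple[str, str]:
    # 1. Create the model group and capture its ID.
    group = send("POST", "/_plugins/_ml/model_groups/_register",
                 {"name": group_name, "description": f"Model group for {model_name}"})
    # 2. Register the remote model against the connector and the new group.
    model = send("POST", "/_plugins/_ml/models/_register", {
        "name": model_name,
        "function_name": "remote",
        "model_group_id": group["model_group_id"],
        "connector_id": connector_id,
    })
    model_id = model["model_id"]
    # 3. Deploy it. The task may not be COMPLETED yet, so return the status.
    deployed = send("POST", f"/_plugins/_ml/models/{model_id}/_deploy", None)
    return model_id, deployed.get("status", "UNKNOWN")


def fake_send(method: str, path: str, body: Optional[dict]) -> dict:
    # Offline stand-in that replays the sample outputs from this post.
    if path.endswith("/model_groups/_register"):
        return {"model_group_id": "1rBv65EByVCe3QrFXL6O", "status": "CREATED"}
    if path.endswith("/models/_register"):
        return {"task_id": "2LB265EByVCe3QrFAb6R", "status": "CREATED",
                "model_id": "2bB265EByVCe3QrFAb60"}
    return {"task_id": "bLB665EByVCe3QrF-slA", "task_type": "DEPLOY_MODEL",
            "status": "COMPLETED"}


print(register_and_deploy(fake_send, "nbBe65EByVCe3QrFhrQ2",
                          "embedding_model_group", "titan_text_embedding_bedrock"))
```

Against a real domain, `send` could wrap `requests.request(method, host + path.lstrip("/"), auth=awsauth, json=body, timeout=15).json()` using the signed session from the connector script.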

Create an ingest pipeline for data indexing

Use the following code to create an ingest pipeline for data indexing. The pipeline calls the embedding model, retrieves the embedding, and then stores it in the index. Set model_id to the ID of the embedding model you deployed.

```json
PUT /_ingest/pipeline/cricket_data_pipeline
{
  "description": "batting score summary embedding pipeline",
  "processors": [
    {
      "text_embedding": {
        "model_id": "GQOsUJEByVCe3QrFfUNq",
        "field_map": {
          "cricket_score": "cricket_score_embedding"
        }
      }
    }
  ]
}
```
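Conceptually, the `text_embedding` processor reads each source field listed in `field_map`, sends its text to the model, and writes the resulting vector to the target field. The following sketch mimics that data flow with a hypothetical `fake_embed` function in place of the Titan model. It is not the processor's real implementation.

```python
from typing import Callable


def apply_text_embedding(doc: dict, field_map: dict[str, str],
                         embed: Callable[[str], list[float]]) -> dict:
    # Copy the document and add one embedding per mapped source field.
    out = dict(doc)
    for source, target in field_map.items():
        if source in doc:  # in this sketch, absent source fields are skipped
            out[target] = embed(doc[source])
    return out


def fake_embed(text: str) -> list[float]:
    # Hypothetical 3-dimensional "embedding"; Titan returns 1,536 floats.
    return [float(len(text)), float(text.count(" ")), 1.0]


doc = {"cricket_score": "Brian Lara scored 400 not out"}
print(apply_text_embedding(doc, {"cricket_score": "cricket_score_embedding"}, fake_embed))
```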

Create an index for storing data

Create an index to store the data (for this example, the cricket achievements of batsmen). The index stores the raw text and a 1,536-dimension embedding of the summary text. The embeddings come from the ingest pipeline created in the previous step, which is specified when ingesting the documents.

```json
PUT cricket_data
{
  "mappings": {
    "properties": {
      "cricket_score": {
        "type": "text"
      },
      "cricket_score_embedding": {
        "type": "knn_vector",
        "dimension": 1536,
        "space_type": "l2",
        "method": {
          "name": "hnsw",
          "engine": "faiss"
        }
      }
    }
  },
  "settings": {
    "index": {
      "knn": "true"
    }
  }
}
```
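The mapping uses `space_type: l2`. As we understand the k-NN plugin documentation, for `l2` the plugin computes the squared Euclidean distance d between vectors and converts it to a relevance score of 1 / (1 + d), so closer vectors score higher. The following sketch reproduces that conversion for small made-up vectors. It is an illustration, not the plugin's code. Its dimension check reflects the same constraint the index enforces, where every stored vector must have exactly 1,536 values.

```python
def l2_squared(a: list[float], b: list[float]) -> float:
    # Sum of squared per-dimension differences (squared Euclidean distance).
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def l2_score(a: list[float], b: list[float]) -> float:
    # Assumed score conversion for space_type "l2": identical vectors score 1.0.
    return 1.0 / (1.0 + l2_squared(a, b))


print(l2_score([1.0, 2.0], [1.0, 2.0]))  # identical vectors
print(l2_score([0.0, 0.0], [3.0, 4.0]))  # squared distance 25
```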

Ingest sample data

Use the following code to ingest the sample data for four batsmen:

```json
POST _bulk?pipeline=cricket_data_pipeline
{"index": {"_index": "cricket_data"}}
{"cricket_score": "Sachin Tendulkar, often hailed as the 'God of Cricket,' amassed an extraordinary batting record throughout his 24-year international career. In Test cricket, he played 200 matches, scoring a staggering 15,921 runs at an average of 53.78, including 51 centuries and 68 half-centuries, with a highest score of 248 not out. His One Day International (ODI) career was equally impressive, spanning 463 matches in which he scored 18,426 runs at an average of 44.83, notching up 49 centuries and 96 half-centuries, with a top score of 200 not out – the first double century in ODI history. Although he played just one T20 International, scoring 10 runs, his overall batting statistics across formats solidified his status as one of cricket's all-time greats, setting numerous records that stand to this day."}
{"index": {"_index": "cricket_data"}}
{"cricket_score": "Virat Kohli, widely regarded as one of the finest batsmen of his generation, has amassed impressive statistics across all formats of international cricket. As of April 2024, in Test cricket, he has scored over 8,000 runs with an average exceeding 50, including numerous centuries. His One Day International (ODI) record is particularly stellar, with more than 12,000 runs at an average well above 50, featuring over 40 centuries. In T20 Internationals, Kohli has maintained a high average and scored over 3,000 runs. Known for his exceptional ability to chase down targets in limited-overs cricket, Kohli has consistently ranked among the top batsmen in ICC rankings and has broken several batting records throughout his career, cementing his status as a modern cricket legend."}
{"index": {"_index": "cricket_data"}}
{"cricket_score": "Adam Gilchrist, the legendary Australian wicketkeeper-batsman, had an exceptional batting record across formats during his international career from 1996 to 2008. In Test cricket, Gilchrist scored 5,570 runs in 96 matches at an impressive average of 47.60, including 17 centuries and 26 half-centuries, with a highest score of 204 not out. His One Day International (ODI) record was equally remarkable, amassing 9,619 runs in 287 matches at an average of 35.89, with 16 centuries and 55 half-centuries, and a top score of 172. Gilchrist's aggressive batting style and ability to change the course of a game quickly made him one of the most feared batsmen of his era. Although his T20 International career was brief, his overall batting statistics, combined with his wicketkeeping skills, established him as one of cricket's greatest wicketkeeper-batsmen."}
{"index": {"_index": "cricket_data"}}
{"cricket_score": "Brian Lara, the legendary West Indian batsman, had an extraordinary batting record in international cricket during his career from 1990 to 2007. In Test cricket, Lara amassed 11,953 runs in 131 matches at an impressive average of 52.88, including 34 centuries and 48 half-centuries. He holds the record for the highest individual score in a Test innings with 400 not out, as well as the highest first-class score of 501 not out. In One Day Internationals (ODIs), Lara scored 10,405 runs in 299 matches at an average of 40.48, with 19 centuries and 63 half-centuries. His highest ODI score was 169. Known for his elegant batting style and ability to play long innings, Lara's exceptional performances, particularly in Test cricket, cemented his status as one of the greatest batsmen in the history of the game."}
```
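For more than a few documents, you might build the `_bulk` body programmatically. The bulk API expects newline-delimited JSON: an action line followed by a source line for each document, with a trailing newline at the end. This sketch only builds the body. Sending it (for example, to `_bulk?pipeline=cricket_data_pipeline` with the signed `requests` session from earlier) is left out, and the function name is hypothetical.

```python
import json


def bulk_body(index: str, texts: list[str], field: str = "cricket_score") -> str:
    # One action line plus one source line per document.
    lines = []
    for text in texts:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps({field: text}))
    return "\n".join(lines) + "\n"  # the bulk API requires a final newline


body = bulk_body("cricket_data", [
    "Sachin Tendulkar played 200 Test matches.",
    "Brian Lara scored 400 not out in a Test innings.",
])
print(body)
```

Using `json.dumps` for each line escapes quotes and apostrophes in the summaries, which is easy to get wrong when writing the body by hand.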

Deploy the LLM for response generation

Use the following code to deploy the LLM for response generation. Modify the values of host, region, and roleArn in the provided code block.

Create a connector by running the following Python program. Run the script using the credentials of the IAM user created earlier:

```python
import boto3
import requests
from requests_aws4auth import AWS4Auth

# Replace with your domain endpoint and Region.
host = "https://search-test.us-east-1.es.amazonaws.com/"
region = "us-east-1"
service = "es"

# Sign requests (SigV4) with the current IAM user's credentials.
credentials = boto3.Session().get_credentials()
awsauth = AWS4Auth(credentials.access_key, credentials.secret_key, region, service,
                   session_token=credentials.token)

path = "_plugins/_ml/connectors/_create"
url = host + path

payload = {
    "name": "BedRock Claude instant-v1 Connector",
    "description": "The connector to BedRock service for claude model",
    "version": 1,
    "protocol": "aws_sigv4",
    "parameters": {
        "region": "us-east-1",
        "service_name": "bedrock",
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens_to_sample": 8000,
        "temperature": 0.0001,
        # Extract only the generated text from the Bedrock response.
        "response_filter": "$.completion"
    },
    "credential": {
        # Replace accountId with your AWS account ID.
        "roleArn": "arn:aws:iam::accountId:role/opensearch_bedrock_external"
    },
    "actions": [
        {
            "action_type": "predict",
            "method": "POST",
            "url": "https://bedrock-runtime.${parameters.region}.amazonaws.com/model/anthropic.claude-instant-v1/invoke",
            "headers": {
                "content-type": "application/json",
                "x-amz-content-sha256": "required"
            },
            "request_body": "{\"prompt\":\"${parameters.prompt}\", \"max_tokens_to_sample\":${parameters.max_tokens_to_sample}, \"temperature\":${parameters.temperature}, \"anthropic_version\":\"${parameters.anthropic_version}\" }"
        }
    ]
}

headers = {"Content-Type": "application/json"}
r = requests.post(url, auth=awsauth, json=payload, headers=headers, timeout=15)
print(r.status_code)
print(r.text)
```

If it runs successfully, it returns a connector_id and a 200 response code:

```
200
{"connector_id":"LhLSZ5MBLD0avmh1El6Q"}
```
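The connector's `request_body` is a JSON template whose `${parameters.*}` placeholders are, as we understand it, substituted as text before the request is sent to Bedrock. The following simplified sketch uses a hypothetical `fill_template` helper, not the ML Commons implementation, to show why values placed inside a JSON string need escaping. A prompt that contains a double quote otherwise produces invalid JSON.

```python
import json
import re


def fill_template(template: str, params: dict, escape: bool) -> str:
    # Replace ${parameters.name} with the value, optionally JSON-escaped.
    def repl(match: re.Match) -> str:
        value = str(params[match.group(1)])
        # json.dumps adds surrounding quotes and escapes; strip the outer quotes.
        return json.dumps(value)[1:-1] if escape else value
    return re.sub(r"\$\{parameters\.(\w+)\}", repl, template)


template = '{"prompt":"${parameters.prompt}", "temperature":${parameters.temperature}}'
params = {"prompt": 'Say "hi"', "temperature": 0.0001}

for escape in (False, True):
    body = fill_template(template, params, escape)
    try:
        json.loads(body)
        print("valid JSON:  ", body)
    except json.JSONDecodeError:
        print("invalid JSON:", body)
```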

Create a model group for this model:

```json
POST /_plugins/_ml/model_groups/_register
{
  "name": "claude_model_group",
  "description": "This is an example description"
}
```

This returns a model_group_id; make a note of it:

```json
{
  "model_group_id": "LxLTZ5MBLD0avmh1wV4L",
  "status": "CREATED"
}
```

Register a model using the connector_id and model_group_id:

```json
POST /_plugins/_ml/models/_register
{
  "name": "anthropic.claude-v1",
  "function_name": "remote",
  "model_group_id": "LxLTZ5MBLD0avmh1wV4L",
  "description": "LLM model",
  "connector_id": "LhLSZ5MBLD0avmh1El6Q",
  "interface": {}
}
```

This returns a model_id and a task_id:

```json
{
  "task_id": "YvbVZ5MBtVAPFbeA7ou7",
  "status": "CREATED",
  "model_id": "Y_bVZ5MBtVAPFbeA7ovb"
}
```

Finally, deploy the model using the following API:

```
POST /_plugins/_ml/models/Y_bVZ5MBtVAPFbeA7ovb/_deploy
```

The status shows as COMPLETED, which means the model was successfully deployed:

```json
{
  "task_id": "efbvZ5MBtVAPFbeA7otB",
  "task_type": "DEPLOY_MODEL",
  "status": "COMPLETED"
}
```

Create an agent in OpenSearch Service

An agent orchestrates and runs ML models and tools. A tool performs a specific set of tasks. For this post, we use the following tools:

VectorDBTool – The agent uses this tool to retrieve OpenSearch documents relevant to the user's question

MLModelTool – This tool generates user responses based on prompts and OpenSearch documents

Use the embedding model_id in VectorDBTool and the LLM model_id in MLModelTool, replacing the sample IDs with the ones from your own deployment:

```json
POST /_plugins/_ml/agents/_register
{
  "name": "cricket score data analysis agent",
  "type": "conversational_flow",
  "description": "This is a demo agent for cricket data analysis",
  "app_type": "rag",
  "memory": {
    "type": "conversation_index"
  },
  "tools": [
    {
      "type": "VectorDBTool",
      "name": "cricket_knowledge_base",
      "parameters": {
        "model_id": "2bB265EByVCe3QrFAb60",
        "index": "cricket_data",
        "embedding_field": "cricket_score_embedding",
        "source_field": [
          "cricket_score"
        ],
        "input": "${parameters.question}"
      }
    },
    {
      "type": "MLModelTool",
      "name": "bedrock_claude_model",
      "description": "A general tool to answer any question",
      "parameters": {
        "model_id": "gbcfIpEByVCe3QrFClUp",
        "prompt": "\n\nHuman:You are a professional data analyst. You will always answer questions based on the given context first. If the answer is not directly shown in the context, you will analyze the data and find the answer. If you don't know the answer, just say you don't know. \n\nContext:\n${parameters.cricket_knowledge_base.output:-}\n\n${parameters.chat_history:-}\n\nHuman:${parameters.question}\n\nAssistant:"
      }
    }
  ]
}
```

This returns an agent ID. Make a note of it, because it goes in the path of the _execute calls that follow.
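In the MLModelTool prompt, `${parameters.cricket_knowledge_base.output:-}` refers to the VectorDBTool's output. As we understand it, the `:-` suffix supplies an empty default when a value is missing, for example `chat_history` on the first turn. The following sketch resolves such placeholders with a hypothetical `render` helper to show what the final Claude prompt could look like. It approximates the substitution behavior and is not ML Commons code.

```python
import re

# Matches ${parameters.key} and ${parameters.key:-default}; keys may contain dots.
PLACEHOLDER = re.compile(r"\$\{parameters\.([\w.]+?)(:-([^}]*))?\}")


def render(template: str, params: dict[str, str]) -> str:
    """Hypothetical approximation of the agent's prompt substitution."""
    def repl(m: re.Match) -> str:
        key, default_part, default = m.group(1), m.group(2), m.group(3)
        if key in params:
            return params[key]
        if default_part is not None:
            return default  # ":-" supplies a fallback (empty in this prompt)
        return m.group(0)  # no value and no default: leave the placeholder
    return PLACEHOLDER.sub(repl, template)


prompt = ("\n\nHuman:Answer from the context.\n\nContext:\n"
          "${parameters.cricket_knowledge_base.output:-}\n\n"
          "${parameters.chat_history:-}\n\nHuman:${parameters.question}\n\nAssistant:")
params = {
    "question": "What is the batting score of Sachin Tendulkar?",
    # In the real agent, the VectorDBTool's output fills this key.
    "cricket_knowledge_base.output": "Sachin Tendulkar scored 15,921 runs in Test cricket.",
}
print(render(prompt, params))
```

The rendered text keeps the `\n\nHuman: ... \n\nAssistant:` framing that Claude's text-completion prompt format expects.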

Query the index

We have the batting scores of four batsmen in the index. For the first question, let's specify the player name:

```json
POST /_plugins/_ml/agents//_execute
{
  "parameters": {
    "question": "What is the batting score of Sachin Tendulkar?"
  }
}
```

Based on the context and available information, it returns the batting score of Sachin Tendulkar. Note the memory_id in the response; you need it for the follow-up questions in the next steps.

We can now ask a follow-up question. This time, we don't specify the player name and expect the agent to answer based on the earlier question:

```json
POST /_plugins/_ml/agents//_execute
{
  "parameters": {
    "question": "How many T20 international matches did he play?",
    "next_action": "then compare with Virat Kohli's score",
    "memory_id": "so-vAJMByVCe3QrFYO7j",
    "message_history_limit": 5,
    "prompt": "\n\nHuman:You are a professional data analyst. You will always answer questions based on the given context first. If the answer is not directly shown in the context, you will analyze the data and find the answer. If you don't know the answer, just say you don't know. \n\nContext:\n${parameters.cricket_knowledge_base.output:-}\n\n${parameters.chat_history:-}\n\nHuman:always learn useful information from chat history\nHuman:${parameters.question}, ${parameters.next_action}\n\nAssistant:"
  }
}
```

In the preceding API, we use the following parameters:

question and next_action – We pass the follow-up question together with a next action that compares Sachin's score with Virat's score.

memory_id – The memory assigned to this conversation. Use the same memory_id for subsequent questions.

message_history_limit – The maximum number of previous messages from this conversation's memory to include as chat history.

prompt – The prompt you give to the LLM. It includes the user's question and the next action, and it instructs the LLM to answer only from the data indexed in OpenSearch rather than inventing information, which helps reduce hallucination.

Refer to ML Model tool for more details about setting up these parameters, and to the GitHub repo for blueprints for remote inference.

The agent's conversation_index memory stores the history of questions and answers in an OpenSearch index, which is used to refine the answers to follow-up questions.

In real-world scenarios, you can map memory_id to the user's profile to preserve context and keep each user's conversation history isolated.
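One way to implement that mapping is to keep a per-user lookup of memory IDs in your application and pass the right one with each request. The following sketch is hypothetical application-side code: the `SessionStore` class and its methods are not an OpenSearch API. It reuses a user's `memory_id` when one exists and builds the `_execute` request body for each turn.

```python
from typing import Optional


class SessionStore:
    """Hypothetical app-side map from user ID to an OpenSearch memory_id."""

    def __init__(self) -> None:
        self._memory_ids: dict[str, str] = {}

    def remember(self, user_id: str, memory_id: str) -> None:
        # Call after the user's first _execute response returns a memory_id.
        self._memory_ids.setdefault(user_id, memory_id)

    def execute_body(self, user_id: str, question: str, history_limit: int = 5) -> dict:
        params: dict = {"question": question}
        memory_id: Optional[str] = self._memory_ids.get(user_id)
        if memory_id is not None:
            # Follow-up turn: continue only this user's conversation.
            params["memory_id"] = memory_id
            params["message_history_limit"] = history_limit
        return {"parameters": params}


store = SessionStore()
print(store.execute_body("alice", "What is the batting score of Sachin Tendulkar?"))
store.remember("alice", "so-vAJMByVCe3QrFYO7j")
print(store.execute_body("alice", "How many T20 international matches did he play?"))
print(store.execute_body("bob", "Who is Brian Lara?"))
```

In production, the mapping would live in a durable store tied to your authentication system rather than in process memory.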

We have now demonstrated how to create a conversational search application using the built-in features of OpenSearch Service.

Clean up

To avoid incurring future charges, delete the resources created while building this solution:

Delete the OpenSearch Service domain.

Delete the connector.

Delete the index.
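If you keep the domain and remove only the resources from this walkthrough, the ML Commons REST API also lets you undeploy and delete models and delete agents and connectors. The following sketch lists those calls in a plausible order, with each model removed before the connector it uses, since a connector still referenced by a model may not be deletable. The IDs are the sample values from this post, `YOUR_AGENT_ID` is a hypothetical placeholder, and model groups are not covered.

```python
from typing import Optional


def cleanup_calls(agent_id: Optional[str], models: list[tuple[str, str]],
                  index: str, pipeline: str) -> list[tuple[str, str]]:
    """Return (method, path) pairs; models holds (model_id, connector_id) pairs."""
    calls: list[tuple[str, str]] = []
    if agent_id:
        calls.append(("DELETE", f"/_plugins/_ml/agents/{agent_id}"))
    for model_id, connector_id in models:
        # Undeploy and delete each model before deleting its connector.
        calls.append(("POST", f"/_plugins/_ml/models/{model_id}/_undeploy"))
        calls.append(("DELETE", f"/_plugins/_ml/models/{model_id}"))
        calls.append(("DELETE", f"/_plugins/_ml/connectors/{connector_id}"))
    calls.append(("DELETE", f"/{index}"))
    calls.append(("DELETE", f"/_ingest/pipeline/{pipeline}"))
    return calls


calls = cleanup_calls(
    agent_id="YOUR_AGENT_ID",  # hypothetical placeholder: use your registered agent's ID
    models=[("2bB265EByVCe3QrFAb60", "nbBe65EByVCe3QrFhrQ2"),
            ("Y_bVZ5MBtVAPFbeA7ovb", "LhLSZ5MBLD0avmh1El6Q")],
    index="cricket_data",
    pipeline="cricket_data_pipeline",
)
for method, path in calls:
    print(method, path)
```

You can run the printed requests in OpenSearch Dashboards Dev Tools or send them with the signed `requests` session used earlier.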

Conclusion

In this post, we demonstrated how to use OpenSearch agents and tools to create a RAG pipeline with conversational search. By integrating with ML models, vectorizing questions, and interacting with LLMs to augment prompts, this configuration handles the entire process. This approach lets you quickly develop production-ready AI assistants without starting from scratch.

If you're building a RAG pipeline with conversational history to let users ask follow-up questions for more refined answers, give it a try and share your feedback or questions in the comments!

About the author

Bharav Patel is a Specialist Solutions Architect, Analytics at Amazon Web Services. He primarily works on Amazon OpenSearch Service and helps customers with key concepts and design principles for running OpenSearch workloads in the cloud. Bharav likes to explore new places and try out different cuisines.
