As the lines between analytics and AI continue to blur, organizations find themselves dealing with converging workloads and data needs. Historical analytics data is now being used to train machine learning models and power generative AI applications. This shift requires shorter time to value and tighter collaboration among data analysts, data scientists, machine learning (ML) engineers, and application developers. However, data is scattered across many systems, from data lakes to data warehouses and applications, which makes it difficult to access and use efficiently. Moreover, organizations trying to consolidate disparate data sources into a data lakehouse have historically relied on extract, transform, and load (ETL) processes, which have become a significant bottleneck in their data analytics and machine learning initiatives. Traditional ETL processes are often complex and require significant time and resources to build and maintain. As data volumes grow, so do the costs associated with ETL, leading to delayed insights and increased operational overhead. Many organizations struggle to efficiently onboard transactional data into their data lakes and warehouses, which hinders their ability to derive timely insights and make data-driven decisions. In this post, we address these challenges with a two-pronged approach:

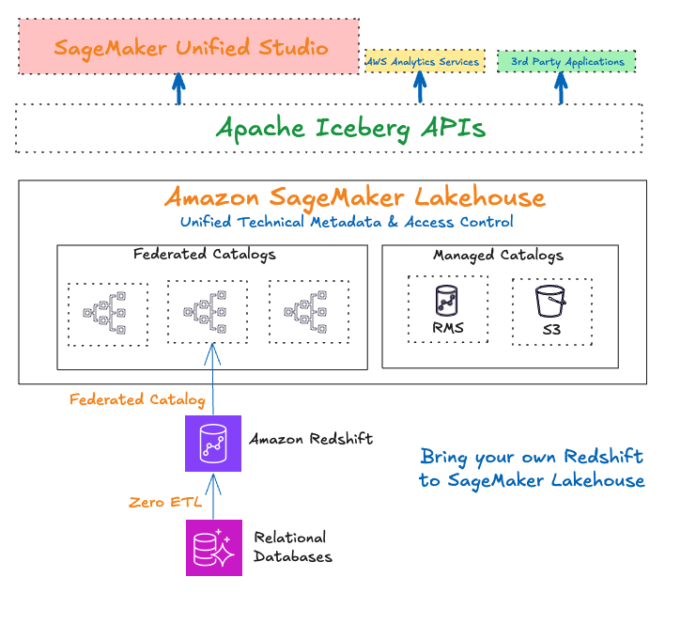

Unified data management: Use Amazon SageMaker Lakehouse to get unified access to all your data across multiple sources for analytics and AI initiatives with a single copy of data, regardless of how and where the data is stored. SageMaker Lakehouse is powered by AWS Glue Data Catalog and AWS Lake Formation, and brings together your existing data across Amazon Simple Storage Service (Amazon S3) data lakes and Amazon Redshift data warehouses with integrated access controls. In addition, you can ingest data from operational databases and enterprise applications into the lakehouse in near real time using zero-ETL, a set of fully managed integrations by AWS that eliminates or minimizes the need to build ETL data pipelines.

Unified development experience: Use Amazon SageMaker Unified Studio to discover your data and put it to work with familiar AWS tools for complete development workflows, including model development, generative AI application development, data processing, and SQL analytics, in a single governed environment.

In this post, we demonstrate how you can take transactional data that flows from AWS OLTP data stores, such as Amazon Relational Database Service (Amazon RDS) and Amazon Aurora, into Amazon Redshift through zero-ETL integrations, and bring it into the SageMaker Lakehouse federated catalog (Bring your own Amazon Redshift into SageMaker Lakehouse). With this integration, you can seamlessly onboard changed data from OLTP systems into a unified lakehouse and expose it to analytical applications for consumption using Apache Iceberg APIs from the new SageMaker Unified Studio. Through this integrated environment, data analysts, data scientists, and ML engineers can use SageMaker Unified Studio to perform advanced SQL analytics on the transactional data.

Architecture patterns for unified data management and a unified development experience

In this architecture pattern, we show you how to use zero-ETL integrations to seamlessly replicate transactional data from Amazon Aurora MySQL-Compatible Edition, an operational database, into the Redshift Managed Storage layer. This zero-ETL approach eliminates the need for complex data extraction, transformation, and loading processes, enabling near real-time access to operational data for analytics. The transferred data is then cataloged using a federated catalog in the SageMaker Lakehouse Catalog and exposed through the Iceberg REST Catalog API, so that consumer applications can perform comprehensive data analysis.
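To make the Iceberg REST Catalog API more concrete, the following minimal sketch builds connection properties for PyIceberg's REST catalog pointed at the AWS Glue Iceberg REST endpoint (`https://glue.<region>.amazonaws.com/iceberg`) with SigV4 signing. The `warehouse` value that selects a Redshift federated catalog is an assumption here: take it from your own catalog details rather than from the placeholder. The function name is hypothetical.

```python
def glue_iceberg_rest_properties(region: str, warehouse: str) -> dict[str, str]:
    """Build PyIceberg REST catalog properties for the AWS Glue Iceberg REST endpoint.

    `warehouse` identifies the catalog to open. It is supplied by the caller
    (for example, copied from your catalog details) and is not derived here.
    """
    if not region or not warehouse:
        raise ValueError("region and warehouse are required")
    return {
        "type": "rest",
        # Glue serves the Iceberg REST Catalog API under the /iceberg path.
        "uri": f"https://glue.{region}.amazonaws.com/iceberg",
        "warehouse": warehouse,
        # Sign requests with SigV4 for the "glue" service in the given Region.
        "rest.sigv4-enabled": "true",
        "rest.signing-name": "glue",
        "rest.signing-region": region,
    }


# Usage (requires pyiceberg, boto3, and AWS credentials):
# from pyiceberg.catalog import load_catalog
# props = glue_iceberg_rest_properties("us-east-1", "<your-catalog-id>")
# catalog = load_catalog("lakehouse", **props)
# print(catalog.list_namespaces())
```

Keeping the properties in a plain dictionary makes it easy to inspect or log the configuration (there are no secrets in it) before handing it to `load_catalog`.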

You then use SageMaker Unified Studio to perform advanced analytics on the transactional data, bridging the gap between operational databases and advanced analytics capabilities.

Prerequisites

Make sure that you have the following prerequisites:

Deployment steps

In this section, we share the steps for deploying the resources needed for the zero-ETL integration using AWS CloudFormation.

Set up resources with CloudFormation

This post provides a CloudFormation template as a general guide. You can review and customize it to suit your needs. Some of the resources that this stack deploys incur costs when in use. The CloudFormation template provisions the following components:

An Aurora MySQL provisioned cluster (source).

An Amazon Redshift Serverless data warehouse (target).

A zero-ETL integration between the source (Aurora MySQL) and the target (Amazon Redshift Serverless). See Aurora zero-ETL integrations with Amazon Redshift for more information.

Create your resources

To create resources using AWS CloudFormation, follow these steps:

Sign in to the AWS Management Console.

Select the us-east-1 AWS Region in which to create the stack.

Open the AWS CloudFormation console.

Choose Launch Stack.

Choose Next.

This automatically launches CloudFormation in your AWS account with a template. It prompts you to sign in as needed. You can view the CloudFormation template from within the console.

For Stack name, enter a stack name, for example UnifiedLHBlogpost.

Keep the default values for the rest of the Parameters and choose Next.

On the next screen, choose Next.

Review the details on the final screen and select I acknowledge that AWS CloudFormation might create IAM resources.

Choose Submit.

Stack creation can take up to 30 minutes.
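If you prefer to script the launch instead of clicking through the console, the following sketch builds the CreateStack request for a boto3 CloudFormation client and then waits for completion. It assumes you substitute the template URL behind the Launch Stack button (not reproduced here) and that `CAPABILITY_IAM` is the acknowledgment the template needs. If the template creates IAM resources with custom names, CloudFormation requires `CAPABILITY_NAMED_IAM` instead. Both helper names are hypothetical.

```python
from typing import Any


def create_stack_request(stack_name: str, template_url: str) -> dict[str, Any]:
    """Keyword arguments for CloudFormation CreateStack, keeping the template's default parameters."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        # Programmatic equivalent of acknowledging that the stack might create IAM resources.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def launch_stack(cfn: Any, stack_name: str, template_url: str) -> str:
    """Create the stack with a boto3 CloudFormation client and block until CREATE_COMPLETE."""
    stack_id = cfn.create_stack(**create_stack_request(stack_name, template_url))["StackId"]
    # The waiter polls DescribeStacks; creation can take around 30 minutes.
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return stack_id


# Usage (requires boto3 and credentials; use the template URL behind Launch Stack):
# import boto3
# cfn = boto3.client("cloudformation", region_name="us-east-1")
# launch_stack(cfn, "UnifiedLHBlogpost", "<template-url>")
```

Omitting `Parameters` mirrors the console step of keeping every default value.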

After the stack creation is complete, go to the Outputs tab of the stack and record the values of the keys for the following components, which you will use in a later step:

NamespaceName

PortNumber

RDSPassword

RDSUsername

RedshiftClusterSecurityGroupName

RedshiftPassword

RedshiftUsername

VPC

Workgroupname

ZeroETLServicesRoleNameArn
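Rather than copying the outputs by hand, you can read them from the DescribeStacks response. This sketch flattens the `Outputs` list into a dictionary and fails early if any of the keys listed above is missing. `stack_outputs` is a hypothetical helper, and it assumes the stack exposes exactly these output key names.

```python
REQUIRED_OUTPUT_KEYS = (
    "NamespaceName",
    "PortNumber",
    "RDSPassword",
    "RDSUsername",
    "RedshiftClusterSecurityGroupName",
    "RedshiftPassword",
    "RedshiftUsername",
    "VPC",
    "Workgroupname",
    "ZeroETLServicesRoleNameArn",
)


def stack_outputs(describe_stacks_response: dict) -> dict[str, str]:
    """Flatten a DescribeStacks response into {OutputKey: OutputValue} and check required keys."""
    stack = describe_stacks_response["Stacks"][0]
    outputs = {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}
    missing = [key for key in REQUIRED_OUTPUT_KEYS if key not in outputs]
    if missing:
        raise KeyError(f"stack outputs missing: {', '.join(missing)}")
    return outputs


# Usage (requires boto3); avoid printing the password outputs:
# import boto3
# resp = boto3.client("cloudformation").describe_stacks(StackName="UnifiedLHBlogpost")
# outputs = stack_outputs(resp)
# print(outputs["Workgroupname"], outputs["NamespaceName"])
```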

Implementation steps

To implement this solution, follow these steps:

Setting up the zero-ETL integration

A zero-ETL integration is already created as part of the provided CloudFormation template. To finish setting up the integration, use the following steps from the zero-ETL integration post:

Create a database from the integration in Amazon Redshift

Populate source data in Aurora MySQL

Validate the source data in your Amazon Redshift data warehouse
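The referenced post walks through these steps in the console and query editor. As a rough sketch of the Redshift SQL involved, the helpers below assume the integration database is named `aurora_zeroetl_integration` (as later in this post) and use a hypothetical source table `orders` in the MySQL database `demodb`. They build the statement that creates the database from the integration, queries that inspect integration and table state through the `SVV_INTEGRATION` and `SVV_INTEGRATION_TABLE_STATE` system views, and a sample query using a three-part name. Run the resulting SQL in the Redshift query editor. The helper names are hypothetical.

```python
import re

# Plain (unquoted) Redshift identifier: a letter or underscore, then letters, digits, _ or $.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def _ident(name: str) -> str:
    """Reject names that would need quoting, so they can be interpolated safely."""
    if not _IDENTIFIER.fullmatch(name):
        raise ValueError(f"not a plain identifier: {name!r}")
    return name


def create_database_from_integration_sql(database: str, integration_id: str) -> str:
    """Statement that creates the destination database for a zero-ETL integration."""
    if "'" in integration_id:
        raise ValueError("integration ID must not contain quotes")
    return f"CREATE DATABASE {_ident(database)} FROM INTEGRATION '{integration_id}';"


# Find the integration ID, then check per-table replication state.
FIND_INTEGRATION_SQL = "SELECT * FROM svv_integration;"
TABLE_STATE_SQL = "SELECT * FROM svv_integration_table_state;"


def sample_rows_sql(database: str, schema: str, table: str, limit: int = 10) -> str:
    """Query replicated rows by three-part name; the MySQL database maps to a Redshift schema."""
    return (
        f"SELECT * FROM {_ident(database)}.{_ident(schema)}.{_ident(table)} "
        f"LIMIT {int(limit)};"
    )
```

For example, `sample_rows_sql("aurora_zeroetl_integration", "demodb", "orders")` produces a query you can compare against the row count in Aurora MySQL.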

Bring Amazon Redshift metadata to the SageMaker Lakehouse catalog

Now that transactional data from Aurora MySQL is replicating into Redshift tables through the zero-ETL integration, you next bring the data into SageMaker Lakehouse, so that operational data can coexist with other data sources in the data lake and be accessed and governed together with them. You do this by registering an existing Amazon Redshift Serverless namespace that has zero-ETL tables as a federated catalog in SageMaker Lakehouse.

Before starting the next steps, you need to configure data lake administrators in AWS Lake Formation.

Go to the Lake Formation console and, in the navigation pane, choose Administrative roles and tasks under Administration. Under Data lake administrators, choose Add.

On the Add administrators page, under Access type, select Data lake administrator.

Under IAM users and roles, select Admin. Choose Confirm.

On the Add administrators page, for Access type, select Read-only administrators. Under IAM users and roles, select AWSServiceRoleForRedshift and choose Confirm. This step enables Amazon Redshift to discover and access catalog objects in the AWS Glue Data Catalog.
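The same administrator setup can be scripted with the Lake Formation `GetDataLakeSettings` and `PutDataLakeSettings` APIs. Because `PutDataLakeSettings` overwrites the whole settings object, this sketch merges the new principals into the current settings instead of sending them alone. It assumes your admin role is named `Admin` (matching the console step) and that the Redshift service-linked role uses its standard path. Both helper names are hypothetical.

```python
import copy


def redshift_service_role_arn(account_id: str) -> str:
    """ARN of the Amazon Redshift service-linked role in the given account."""
    return (
        f"arn:aws:iam::{account_id}:role/aws-service-role/"
        "redshift.amazonaws.com/AWSServiceRoleForRedshift"
    )


def with_lake_admins(settings: dict, admin_arn: str, read_only_arn: str) -> dict:
    """Return a copy of DataLakeSettings with one admin and one read-only admin added.

    PutDataLakeSettings replaces the whole settings object, so start from the
    current settings instead of sending only the new principals.
    """
    merged = copy.deepcopy(settings)  # leave the caller's settings untouched

    def add(key: str, arn: str) -> None:
        principals = merged.setdefault(key, [])
        if all(p.get("DataLakePrincipalIdentifier") != arn for p in principals):
            principals.append({"DataLakePrincipalIdentifier": arn})

    add("DataLakeAdmins", admin_arn)
    add("ReadOnlyAdmins", read_only_arn)
    return merged


# Usage (requires boto3 and Lake Formation admin rights; "Admin" is the example role name):
# import boto3
# lf = boto3.client("lakeformation", region_name="us-east-1")
# current = lf.get_data_lake_settings()["DataLakeSettings"]
# lf.put_data_lake_settings(DataLakeSettings=with_lake_admins(
#     current, "arn:aws:iam::<account-id>:role/Admin", redshift_service_role_arn("<account-id>")))
```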

With the data lake administrators configured, you're ready to bring your existing Amazon Redshift metadata to the SageMaker Lakehouse catalog:

From the Amazon Redshift Serverless console, choose Namespace configuration in the navigation pane.

Under Actions, choose Register with AWS Glue Data Catalog. You can find more details on registering a federated Amazon Redshift catalog in Registering namespaces to the AWS Glue Data Catalog.

Choose Register. This registers the namespace to the AWS Glue Data Catalog.

After registration is complete, the Namespace register status changes to Registered to AWS Glue Data Catalog.

Navigate to the Lake Formation console and choose Catalogs under Data Catalog in the navigation pane. Here you can see a pending catalog invitation for the Amazon Redshift namespace registered in the Data Catalog.

Select the pending invitation and choose Approve and create catalog. For more information, see Creating Amazon Redshift federated catalogs.

Enter the Name, Description, and IAM role (created by the CloudFormation template). Choose Next.

Grant permissions using a principal that is eligible to provide all permissions (an admin user).

Select IAM users and roles and choose Admin.

Under Catalog permissions, select Super user to grant super user permissions.

Assigning super user permissions grants the user unrestricted permissions on the resources (databases, tables, views) within this catalog. As a security best practice, follow the principle of least privilege wherever applicable and grant users only the permissions required to perform a task.

As a final step, review all settings and choose Create catalog.

After the catalog is created, you will see two objects under Catalogs: dev refers to the local dev database inside Amazon Redshift, and aurora_zeroetl_integration is the database created for the Aurora to Amazon Redshift zero-ETL tables.

Fine-grained access control

To set up fine-grained access control, follow these steps:

To grant permissions on individual objects, choose Actions and then select Grant.

On the Principals page, grant access to one or more objects under the federated catalog to different principals.
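Grants made on the Principals page correspond to the Lake Formation `GrantPermissions` API. The sketch below builds a least-privilege request for one table, optionally narrowed to specific columns with a `TableWithColumns` resource. Copy the catalog ID for the federated catalog from the Lake Formation console; we don't assume its format, and the principal, table, and column names in the example are hypothetical. Confirm that column-level grants are supported for your federated catalog before relying on them.

```python
from typing import Any, Sequence


def table_grant_request(
    principal_arn: str,
    catalog_id: str,
    database: str,
    table: str,
    columns: Sequence[str] = (),
    permissions: Sequence[str] = ("SELECT",),
) -> dict[str, Any]:
    """Keyword arguments for Lake Formation GrantPermissions on a single table.

    With `columns`, the grant is limited to those columns (TableWithColumns);
    otherwise it covers the whole table. Defaults to read-only SELECT.
    """
    target = {"CatalogId": catalog_id, "DatabaseName": database, "Name": table}
    if columns:
        resource = {"TableWithColumns": {**target, "ColumnNames": list(columns)}}
    else:
        resource = {"Table": target}
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": resource,
        "Permissions": list(permissions),
    }


# Usage (requires boto3; all identifiers are placeholders):
# import boto3
# lf = boto3.client("lakeformation", region_name="us-east-1")
# lf.grant_permissions(**table_grant_request(
#     "arn:aws:iam::<account-id>:role/<analyst-role>", "<federated-catalog-id>",
#     "demodb", "<table>", columns=["<column-a>", "<column-b>"]))
```

Defaulting to `SELECT` keeps each grant read-only unless you explicitly ask for more, in line with the least-privilege guidance above.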

Access lakehouse data using SageMaker Unified Studio

SageMaker Unified Studio provides an integrated experience outside the console for using all your data for analytics and AI applications. In this post, we show you how to use the new experience through the Amazon SageMaker management console to create a SageMaker platform domain using the quick setup method. To do this, you set up IAM Identity Center and a SageMaker Unified Studio domain, and then access data through SageMaker Unified Studio.

Set up IAM Identity Center

Before creating the domain, make sure that your data admins and data workers are ready to use the Unified Studio experience by enabling IAM Identity Center for single sign-on, following the steps in Setting up Amazon SageMaker Unified Studio. You can use Identity Center to set up single sign-on for individual accounts and for accounts managed through AWS Organizations. Add users or groups to the IAM Identity Center instance as appropriate. The following screenshot shows an example email sent to a user, through which they can activate their account in IAM Identity Center.

Set up a SageMaker Unified Studio domain

Follow the steps in Create an Amazon SageMaker Unified Studio domain – quick setup to set up a SageMaker Unified Studio domain. You must choose the VPC that was created by the CloudFormation stack earlier.

The quick setup method also has a Create VPC option that sets up a new VPC, subnets, NAT gateway, VPC endpoints, and so on, and is meant for testing purposes. There are charges associated with these resources, so delete the domain after testing.

If you see No models available, you can use the Grant model access button to grant access to Amazon Bedrock serverless models for use in SageMaker Unified Studio for AI/ML use cases.

For Domain name, enter a name, for example MyOLTPDomain. In the VPC section, select the VPC that was provisioned by the CloudFormation stack, for example UnifiedLHBlogpost-VPC. Select subnets and choose Continue.

In the IAM Identity Center User section, look up the newly created user (for example, Data User1) and add them to the domain. Choose Create domain. You should see the new domain along with a link to open Unified Studio.

Access data using SageMaker Unified Studio

To access and analyze your data in SageMaker Unified Studio, follow these steps:

Select the URL for SageMaker Unified Studio. Choose Sign in with SSO and sign in using the IAM Identity Center user, for example datauser1. You will be prompted to select a multi-factor authentication (MFA) method.

Select Authenticator App and proceed with the next steps. For more information about SSO setup, see Managing users in Amazon SageMaker Unified Studio.

After you have signed in to the Unified Studio domain, you need to set up a new project. For this illustration, we created a new sample project called MyOLTPDataProject using the project profile for SQL Analytics, as shown here. A project profile is a template for a project that defines which blueprints are applied to the project, including the underlying AWS compute and data resources.

Wait for the new project to be set up, and when its status is Active, open the project in Unified Studio.

By default, the project has access to the default Data Catalog (AWSDataCatalog). For the federated Redshift catalog redshift-consumer-catalog to be visible, you need to grant permissions to the project IAM role using Lake Formation. For this example, we used the Lake Formation console to grant the following access on the demodb database, which is part of the zero-ETL catalog, to the Unified Studio project IAM role. Follow the steps in Adding existing databases and catalogs using AWS Lake Formation permissions.

In the Data section of your SageMaker Unified Studio project, connect to the Lakehouse federated catalog that you created and registered earlier (for example redshift-zetl-auroramysql-catalog/aurora_zeroetl_integration). Select the objects that you want to query and run the queries using the Redshift Query Editor integrated with SageMaker Unified Studio. When you choose Redshift, you are taken to the Query Editor, where you can run the SQL and see the results, as shown in the following figure.
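The Lake Formation grant to the project IAM role described above can also be expressed as `GrantPermissions` requests. The screenshot with the exact permissions isn't reproduced here, so this sketch assumes read-only access: `DESCRIBE` on the `demodb` database, plus `SELECT` and `DESCRIBE` on all of its tables through a `TableWildcard`. The project role ARN and catalog ID are placeholders you take from your own project and catalog, and the helper is hypothetical.

```python
from typing import Any


def project_role_grants(
    project_role_arn: str, catalog_id: str, database: str = "demodb"
) -> list[dict[str, Any]]:
    """GrantPermissions requests giving a project role read-only access to one database."""
    return [
        # DESCRIBE on the database lets the project see it.
        {
            "Principal": {"DataLakePrincipalIdentifier": project_role_arn},
            "Resource": {"Database": {"CatalogId": catalog_id, "Name": database}},
            "Permissions": ["DESCRIBE"],
        },
        # SELECT and DESCRIBE on all tables in the database through a table wildcard.
        {
            "Principal": {"DataLakePrincipalIdentifier": project_role_arn},
            "Resource": {
                "Table": {"CatalogId": catalog_id, "DatabaseName": database, "TableWildcard": {}}
            },
            "Permissions": ["SELECT", "DESCRIBE"],
        },
    ]


# Usage (requires boto3; role ARN and catalog ID come from your project and catalog):
# import boto3
# lf = boto3.client("lakeformation", region_name="us-east-1")
# for request in project_role_grants("<project-role-arn>", "<federated-catalog-id>"):
#     lf.grant_permissions(**request)
```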

By integrating Amazon Redshift metadata with the SageMaker Lakehouse federated catalog, you can access your existing Redshift data warehouse objects in your organization's centralized catalog, managed by the SageMaker Lakehouse catalog, and seamlessly join the existing Redshift data with the data stored in your Amazon S3 data lake. This solution helps you avoid unnecessary ETL processes that copy data between the data lake and the data warehouse, and minimizes data redundancy.

You can further integrate additional data sources that serve transactional workloads, such as Amazon DynamoDB, and enterprise applications, such as Salesforce and ServiceNow. The architecture shared in this post for accelerated analytical processing using zero-ETL and SageMaker Lakehouse can be expanded by adding zero-ETL integrations for DynamoDB using DynamoDB zero-ETL integration with Amazon SageMaker Lakehouse, and for enterprise applications by following the instructions in Simplify data integration with AWS Glue and zero-ETL to Amazon SageMaker Lakehouse.

Clean up

When you're finished, delete the CloudFormation stack to avoid incurring further costs, because some of the AWS resources used in this walkthrough incur charges. Complete the following steps:

On the CloudFormation console, choose Stacks.

Choose the stack you launched in this walkthrough. The stack must be currently running.

In the stack details pane, choose Delete.

Choose Delete stack.

On the SageMaker console, choose Domains and delete the domain created for testing.
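To script the stack deletion, the following sketch calls `delete_stack` on a boto3 CloudFormation client and blocks on the `stack_delete_complete` waiter. The helper name is hypothetical. Because the SageMaker Unified Studio domain was created in the stack's VPC, stack deletion may fail on dependent network resources while the domain still exists; in that case, deleting the domain first and then retrying the stack deletion is a reasonable order to try.

```python
from typing import Any


def delete_stack_and_wait(cfn: Any, stack_name: str) -> None:
    """Delete the walkthrough stack with a boto3 CloudFormation client and wait for completion."""
    cfn.delete_stack(StackName=stack_name)
    # Polls until DELETE_COMPLETE; raises botocore's WaiterError if deletion fails or times out.
    cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name)


# Usage (requires boto3 and credentials):
# import boto3
# delete_stack_and_wait(boto3.client("cloudformation", region_name="us-east-1"), "UnifiedLHBlogpost")
```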

Summary

In this post, you learned how to bring data from operational databases and applications into your lakehouse in near real time through zero-ETL integrations. You also learned how a unified development experience lets you create a project, bring the operational data into the lakehouse where it is accessible through SageMaker Unified Studio, and query the data using the integrated Amazon Redshift Query Editor. You can use the following resources in addition to this post to quickly start your journey to make your transactional data available for analytical processing.

AWS zero-ETL

SageMaker Unified Studio

SageMaker Lakehouse

Getting began with Amazon SageMaker Lakehouse

About the authors

Avijit Goswami is a Principal Data Solutions Architect at AWS specializing in data and analytics. He supports AWS strategic customers in building high-performing, secure, and scalable data lake solutions on AWS using AWS managed services and open source solutions. Outside of work, Avijit likes to travel, hike the San Francisco Bay Area trails, watch sports, and listen to music.

Saman Irfan is a Senior Specialist Solutions Architect focusing on Data Analytics at Amazon Web Services. She focuses on helping customers across various industries build scalable and high-performing analytics solutions. Outside of work, she enjoys spending time with her family, watching TV series, and learning new technologies.

Sudarshan Narasimhan is a Principal Solutions Architect at AWS specializing in data, analytics, and databases. With over 19 years of experience in data roles, he is currently helping AWS Partners and customers build modern data architectures. As a specialist and trusted advisor, he helps partners build and go to market (GTM) with scalable, secure, and high-performing data solutions on AWS. In his spare time, he enjoys spending time with his family, travelling, avidly consuming podcasts, and being heartbroken about Man United's current state.
