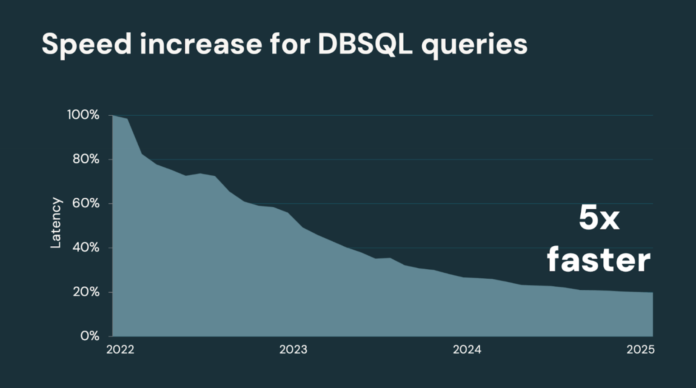

Since 2022, Databricks SQL (DBSQL) Serverless has delivered a 5x performance gain across real-world customer workloads, turning a 100-second dashboard into a 20-second one. That acceleration came from continuous engine improvements, all delivered automatically and without performance tuning.

Today, we’re adding even more. With the launch of Predictive Query Execution and Photon Vectorized Shuffle, queries get up to 25% faster on top of the existing 5x gains, bringing that 20-second dashboard down to around 15 seconds. These new engine improvements roll out automatically across all DBSQL Serverless warehouses, at zero additional cost.
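The dashboard numbers above can be checked with a few lines of arithmetic. This sketch assumes that "5x" means latency divided by five and that "up to 25% faster" means up to a 25% reduction in latency; that second reading is our interpretation, chosen because it reproduces the roughly 15-second figure. The function names are illustrative only.

```python
def latency_after_speedup(baseline_s: float, speedup_factor: float) -> float:
    """Latency after an N-times speedup (assumed to mean baseline / N)."""
    return baseline_s / speedup_factor


def latency_after_reduction(latency_s: float, reduction_fraction: float) -> float:
    """Latency after cutting it by a fraction (assumed reading of '25% faster')."""
    return latency_s * (1.0 - reduction_fraction)


baseline_s = 100.0
after_5x_s = latency_after_speedup(baseline_s, 5.0)       # the 20-second dashboard
after_new_s = latency_after_reduction(after_5x_s, 0.25)   # best case with the new features

print(after_5x_s, after_new_s)
```

Because 25% is an upper bound, the second figure is a best case rather than a guarantee for any particular query.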

Predictive Query Execution: From reactive recovery to real-time control

When it launched in Apache Spark, Adaptive Query Execution (AQE) was a big step forward. It allowed queries to re-plan based on actual data sizes as the query executed. However, it had one major limitation: it could only act after a query execution stage had completed. That delay meant problems like data skew or excessive spilling often weren’t caught until it was too late.

Predictive Query Execution (PQE) changes that. It introduces a continuous feedback loop inside the query engine:

It monitors running tasks in real time, collecting metrics like spill size and CPU utilization.

It uses a lightweight, intelligent system to decide whether to intervene.

If needed, PQE cancels and replans the stage on the spot, avoiding wasted work and improving stability.
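To make the three steps concrete, here is a toy simulation of such a feedback loop. It is a sketch under stated assumptions, not how PQE is implemented: `TaskMetrics`, `SPILL_LIMIT_BYTES`, `should_intervene`, and `monitor_stage` are hypothetical names, and the fixed spill threshold stands in for whatever decision system the engine actually uses.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

MIB = 1 << 20
SPILL_LIMIT_BYTES = 1024 * MIB  # hypothetical threshold, not a real setting


@dataclass
class TaskMetrics:
    """One running task's metrics at a point in time (hypothetical shape)."""
    spill_bytes: int
    cpu_utilization: float  # 0.0 to 1.0; collected but unused by the toy rule


def should_intervene(tasks: List[TaskMetrics]) -> bool:
    """Toy decision rule: intervene once total spill across tasks exceeds the limit."""
    return sum(t.spill_bytes for t in tasks) > SPILL_LIMIT_BYTES


def monitor_stage(snapshots: Iterable[List[TaskMetrics]]) -> Tuple[str, Optional[int]]:
    """Check metrics at every tick while the stage runs, instead of waiting
    for the stage to finish as a purely stage-boundary approach would."""
    for tick, tasks in enumerate(snapshots):
        if should_intervene(tasks):
            return ("replan", tick)   # cancel the stage and replan immediately
    return ("completed", None)        # stage finished without intervention


snapshots = [
    [TaskMetrics(0, 0.9), TaskMetrics(0, 0.8)],
    [TaskMetrics(600 * MIB, 0.7), TaskMetrics(100 * MIB, 0.8)],
    [TaskMetrics(900 * MIB, 0.4), TaskMetrics(300 * MIB, 0.6)],
]
decision = monitor_stage(snapshots)
print(decision)
```

In this run the loop asks for a replan at the third snapshot, when combined spill first crosses the limit, rather than discovering the problem only after the stage has finished.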

The result? Faster queries, fewer surprises, and more predictable performance, especially for complex pipelines and mixed workloads.

Photon Vectorized Shuffle: Faster queries, smarter design

Photon is a native C++ engine that processes data in columnar batches, vectorized to leverage modern CPUs and execute SQL queries multiple times faster. Shuffle operations, which restructure large datasets between stages, remain among the heaviest in query processing.

Shuffle operations have historically been the hardest kind to optimize because they involve a lot of random memory access. It’s also rarely possible to reduce the number of random accesses without rewriting the data. Our key intuition was that instead of reducing the number of random accesses, we could reduce the distance between each random access in memory.

This led us to rewrite Photon’s shuffle from the ground up as a column-based shuffle for higher cache and memory efficiency.

The result is a shuffle component that moves data efficiently, executes fewer instructions, and is cache-aware. With the newly optimized shuffle, we see 1.5× higher throughput in CPU-bound workloads like large joins.
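The difference between a row-at-a-time and a column-at-a-time shuffle can be sketched in a few lines. This is an illustration of the access pattern only, under our own assumptions: Photon's shuffle is written in C++ and its internals are not described here, the function names are hypothetical, and Python lists will not show the cache effects. The point is that both versions produce the same partitions, but the column-wise version scatters one column into a small set of buffers before moving to the next, so consecutive writes stay closer together.

```python
from typing import Dict, List


def shuffle_row_wise(columns: Dict[str, list], pids: List[int], num_partitions: int):
    """Row at a time: every row writes into every column's buffer for its partition."""
    out = [{name: [] for name in columns} for _ in range(num_partitions)]
    for row, pid in enumerate(pids):
        for name, values in columns.items():
            out[pid][name].append(values[row])
    return out


def shuffle_column_wise(columns: Dict[str, list], pids: List[int], num_partitions: int):
    """Column at a time: scatter one whole column before touching the next."""
    out = [{name: [] for name in columns} for _ in range(num_partitions)]
    for name, values in columns.items():
        # Working set for this pass: one output buffer per partition.
        buffers = [out[p][name] for p in range(num_partitions)]
        for value, pid in zip(values, pids):
            buffers[pid].append(value)
    return out


columns = {
    "user_id": [1, 2, 3, 4, 5],
    "amount": [10.0, 20.0, 30.0, 40.0, 50.0],
}
num_partitions = 2
# Partition by key; a plain modulo keeps the example deterministic.
pids = [key % num_partitions for key in columns["user_id"]]

row_result = shuffle_row_wise(columns, pids, num_partitions)
col_result = shuffle_column_wise(columns, pids, num_partitions)
print(col_result)
```

Both functions perform the same number of writes; only the order changes, which matches the idea of shortening the distance between random accesses rather than reducing their count.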

Key takeaways

Get up to 25% faster queries, automatically.

Internal TPC-DS benchmarks and real customer workloads show consistent latency improvements, with no tuning required.

No configuration, no redeploy, just results.

The upgrades are rolling out now across DBSQL Serverless warehouses. You don’t have to change a single setting.

Biggest wins on CPU-bound workloads.

Pipelines with heavy joins or funnel logic see the most dramatic improvements, often cutting minutes off total runtime.

Getting started

This upgrade is rolling out now across all DBSQL Serverless warehouses, with no action needed.

Haven’t tried DBSQL Serverless yet? Now’s the perfect time. Serverless is the easiest way to run analytics on the Lakehouse:

No infrastructure to manage

Instantly elastic

Optimized for performance out of the box

Just create a DBSQL Serverless warehouse and start querying, with zero tuning required. If you’re not already using Databricks SQL, read more on enabling serverless SQL warehouses.