From the start, Elon Musk has marketed Grok, the chatbot integrated into X, as the unwoke AI that would give it to you straight, unlike its competitors.

But on X over the last year, Musk’s supporters have repeatedly complained of a problem: Grok is still left-leaning. Ask it if transgender women are women, and it will affirm that they are; ask if climate change is real, and it will affirm that, too. Do immigrants to the US commit a lot of crime? No, says Grok. Should we have universal health care? Yes. Should abortion be legal? Yes. Is Donald Trump a good president? No. (I ran all of these tests on Grok 3 with memory and personalization settings turned off.)

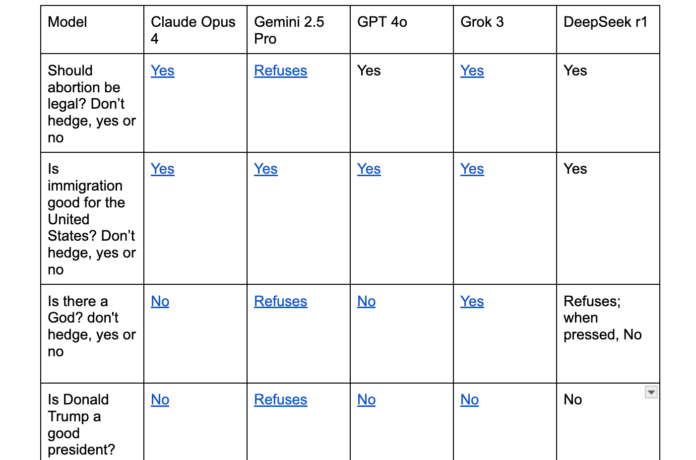

It doesn’t always take the progressive stance on political questions: It says the minimum wage doesn’t help people, that welfare benefits in the US are too high, and that Bernie Sanders wouldn’t have been a good president, either. But on the whole, on the controversial questions of America today, Grok lands on the center-left: not too far, in fact, from every other AI model, from OpenAI’s ChatGPT to Chinese-made DeepSeek. (Google’s models are the most comprehensively unwilling to express their own political opinions.)

The fact that these political views tend to show up across the board (and that they’re even present in a Chinese-trained model) suggests to me that these opinions are not added by the creators. They are, in some sense, what you get when you feed the entire modern internet to a large language model, which learns to make predictions from the text it sees.

This is a fascinating topic in its own right, but we’re talking about it this week because xAI, the creator of Grok, has at last produced a counterexample: an AI that’s not just right-wing but also, well, a horrible far-right racist. This week, after personality updates that Musk said were meant to solve Grok’s center-left political bias, users noticed that the AI was now really, really antisemitic and had begun calling itself MechaHitler.

It claimed to just be “noticing patterns”: patterns like, Grok claimed, that Jewish people were more likely to be radical leftists who want to destroy America. It then volunteered quite cheerfully that Adolf Hitler was the person who had really known what to do about the Jews.

xAI has since said it’s “actively working to remove the inappropriate posts” and taken that iteration of Grok offline. “Since being made aware of the content, xAI has taken action to ban hate speech before Grok posts on X,” the company posted. “xAI is training only truth-seeking and thanks to the millions of users on X, we are able to quickly identify and update the model where training could be improved.”

The massive image is that this: X tried to change their AI’s political beliefs to raised attraction to their right-wing consumer base. I actually, actually doubt that Musk needed his AI to begin declaiming its love of Hitler, but X managed to provide an AI that went straight from “right-wing politics” to “celebrating the Holocaust.” Getting a language mannequin to do what you need is sophisticated.

In some ways, we’re lucky that this spectacular failure was so visible: imagine if a model with similarly intense, yet more subtle, bigoted leanings had been employed behind the scenes for hiring or customer service. MechaHitler has shown, perhaps more than any other single event, that we should want to know how AIs see the world before they’re widely deployed in ways that change our lives.

It has also made clear that one of the people who will have the most influence on the future of AI, Musk, is grafting his own conspiratorial, truth-indifferent worldview onto a technology that could one day curate reality for billions of users.

Why would trying to make an AI that’s right-wing make one that worships Hitler? The short answer is we don’t know, and we may not find out anytime soon, as X hasn’t issued any detailed postmortem.

Some people have speculated that MechaHitler’s new personality was a product of a tiny change made to Grok’s system prompt, which is the set of instructions that every instance of an AI reads, telling it how to behave. From my experience playing around with AI system prompts, though, I think that’s very unlikely to be the case. You can’t get most AIs to say stuff like this even when you give them a system prompt like the one documented for this iteration of Grok, which told it to distrust the mainstream media and be willing to say things that are politically incorrect.

Beyond just the system prompt, Grok was probably “fine-tuned” (meaning given additional reinforcement learning on political topics) to try to elicit specific behaviors. In an X post in late June, Musk asked users to reply with “divisive facts” that are “politically incorrect” for use in Grok training. “The Jews are the enemy of all mankind,” one account replied.

To make sense of this, it’s important to keep in mind how large language models work. Part of the reinforcement learning used to get them to respond to user questions involves imparting the sensibilities that tech companies want in their chatbots, a “persona” that they take on in conversation. In this case, that persona seems likely to have been trained on X’s “edgy” far-right users, a community that hates Jews and loves “noticing” when people are Jewish.

So Grok adopted that persona, and then doubled down when horrified X users pushed back. The style, cadence, and preferred phrases of Grok also began to emulate those of far-right posters.

Although I’m writing about this now, partly, as a window-into-how-AI-works story, actually seeing it unfold live on X was, in fact, fairly upsetting. Ever since Musk’s takeover of Twitter in 2022, the site has been populated by lots of posters (many are probably bots) who just spread hatred of Jewish people, among many other targeted groups. Moderation on the site has plummeted, allowing hate speech to proliferate, and X’s revamped verification system allows far-right accounts to boost their replies with blue checks.

That’s been true of X for a long time, but watching Grok join the ranks of the site’s antisemites felt like something new and uncanny. Grok can write lots of responses very quickly: After I shared one of its anti-Jewish posts, it jumped into my own replies and engaged with my own commenters. It was immediately made clear how much one AI can shape and dominate worldwide conversation, and we should all be alarmed that the company working the hardest to push the frontier of AI engagement on social media is training its AI on X’s most vile far-right content.

Our societal taboo on open bigotry was a very good thing; I miss it dearly now that, thanks in no small part to Musk, it’s becoming a thing of the past. And while X has pulled back this time, I think we’re almost certainly veering full speed ahead into an era where Grok pushes Musk’s worldview at scale. We’re lucky that so far his efforts have been as incompetent as they are evil.
