Just a year ago, the narrative around Google and enterprise AI felt stuck. Despite inventing core technologies like the Transformer, the tech giant seemed perpetually on the back foot, overshadowed by OpenAI’s viral success, Anthropic’s coding prowess and Microsoft’s aggressive enterprise push.

But witness the scene at Google Cloud Next 2025 in Las Vegas last week: A confident Google, armed with benchmark-topping models, formidable infrastructure and a cohesive enterprise strategy, declaring a stunning turnaround. In a closed-door analyst meeting with senior Google executives, one analyst summed it up. This feels like the moment, he said, when Google went from “catch up, to catch us.”

This sentiment that Google has not only caught up with but even surged ahead of OpenAI and Microsoft in the enterprise AI race prevailed throughout the event. And it’s more than just Google’s marketing spin. Evidence suggests Google has leveraged the past year for intense, focused execution, translating its technological assets into a performant, integrated platform that’s rapidly winning over enterprise decision-makers. From boasting the world’s most powerful AI models running on hyper-efficient custom silicon, to a burgeoning ecosystem of AI agents designed for real-world business problems, Google is making a compelling case that it was never actually lost – but that its stumbles masked a period of deep, foundational development.

Now, with its integrated stack firing on all cylinders, Google appears positioned to lead the next phase of the enterprise AI revolution. And in my interviews with several Google executives at Next, they said Google wields advantages in infrastructure and model integration that competitors like OpenAI, Microsoft or AWS will struggle to replicate.

The shadow of doubt: acknowledging the recent past

It’s impossible to appreciate the current momentum without acknowledging the recent past. Google was the birthplace of the Transformer architecture, which sparked the modern revolution in large language models (LLMs). A decade ago, Google also started investing in specialized AI hardware (TPUs), which are now driving industry-leading efficiency. And yet, two and a half years ago, it inexplicably found itself playing defense.

OpenAI’s ChatGPT captured the public imagination and enterprise interest at breathtaking speed and became the fastest-growing app in history. Competitors like Anthropic carved out niches in areas like coding.

Google’s own public steps sometimes seemed tentative or flawed. The infamous Bard demo fumbles in 2023 and the later controversy over its image generator producing historically inaccurate depictions fed a narrative of a company potentially hampered by internal bureaucracy or overcorrection on alignment. It felt like Google was lost: The AI stumbles seemed to fit a pattern, first shown by Google’s initial slowness in the cloud competition, where it remained a distant third in market share behind Amazon and Microsoft. Google Cloud CTO Will Grannis acknowledged the early questions about whether Google would stand behind its cloud business in the long run. “Is it even a real thing?” he recalled people asking him. The question lingered: Could Google translate its undeniable research brilliance and infrastructure scale into enterprise AI dominance?

The pivot: a conscious decision to lead

Behind the scenes, however, a shift was underway, catalyzed by a conscious decision at the highest levels to reclaim leadership. Mat Velloso, VP of product for Google DeepMind’s AI Developer Platform, described sensing a pivotal moment upon joining Google in Feb. 2024, after leaving Microsoft. “When I came to Google, I spoke with Sundar (Pichai), I spoke with several leaders here, and I felt like that was the moment where they were deciding, okay, this (generative AI) is a thing the industry clearly cares about. Let’s make it happen,” Velloso shared in an interview with VentureBeat during Next last week.

This renewed push wasn’t hampered by a feared “brain drain” that some outsiders felt was depleting Google. In fact, the company quietly doubled down on execution in early 2024 – a year marked by aggressive hiring, internal unification and customer traction. While competitors made splashy hires, Google retained its core AI leadership, including DeepMind CEO Demis Hassabis and Google Cloud CEO Thomas Kurian, providing stability and deep expertise.

Moreover, talent began flowing towards Google’s focused mission. Logan Kilpatrick, for instance, returned to Google from OpenAI, drawn by the opportunity to build foundational AI within the company creating it. He joined Velloso in what he described as a “zero to one experience,” tasked with building developer traction for Gemini from the ground up. “It was like the team was me on day one… we actually have no users on this platform, we have no revenue. No one is interested in Gemini at this moment,” Kilpatrick recalled of the starting point. People familiar with the internal dynamics also credit leaders like Josh Woodward, who helped start AI Studio and now leads the Gemini App and Labs. More recently, Noam Shazeer, a key co-author of the original “Attention Is All You Need” Transformer paper during his first tenure at Google, returned to the company in late 2024 as a technical co-lead for the crucial Gemini project.

This concerted effort, combining these hires, research breakthroughs, refinements to its database technology and a sharpened enterprise focus overall, began yielding results. These cumulative advances, combined with what CTO Will Grannis termed “hundreds of fine-grain” platform elements, set the stage for the announcements at Next ’25, and cemented Google’s comeback narrative.

Pillar 1: Gemini 2.5 and the era of thinking models

It’s true that a leading enterprise mantra has become “it’s not just about the model.” After all, the performance gap between leading models has narrowed dramatically, and tech insiders acknowledge that true intelligence is coming from technology packaged around the model, not just the model itself – for example, agentic technologies that allow a model to use tools and explore the web around it.

Despite this, possessing the demonstrably best-performing LLM is an important feat – and a powerful validator, a sign that the model-owning company has things like superior research and the most efficient underlying technology architecture. With the release of Gemini 2.5 Pro just weeks before Next ’25, Google definitively seized that mantle. It quickly topped the independent Chatbot Arena leaderboard, significantly outperforming even OpenAI’s latest GPT-4o variant, and aced notoriously difficult reasoning benchmarks like Humanity’s Last Exam. As Pichai stated in the keynote, “It’s our most intelligent AI model ever. And it is the best model in the world.” The model had driven an 80 percent increase in Gemini usage within a month, he tweeted separately.

For the first time, Google’s Gemini demand was on fire. As I detailed previously, aside from Gemini 2.5 Pro’s raw intelligence, what impressed me is its demonstrable reasoning. Google has engineered a “thinking” capability, allowing the model to perform multi-step reasoning, planning, and even self-reflection before finalizing a response. The structured, coherent chain-of-thought (CoT) – using numbered steps and sub-bullets – avoids the rambling or opaque nature of outputs from other models from DeepSeek or OpenAI. For technical teams evaluating outputs for critical tasks, this transparency allows validation, correction, and redirection with unprecedented confidence.

But more importantly for enterprise users, Gemini 2.5 Pro also dramatically closed the gap in coding, which is one of the biggest application areas for generative AI. In an interview with VentureBeat, Fiona Tan, the CTO of leading retailer Wayfair, said that after initial tests, the company found it “stepped up quite a bit” and was now “pretty comparable” to Anthropic’s Claude 3.7 Sonnet, previously the preferred choice for many developers.

Google also added a massive 1 million token context window to the model, enabling reasoning across entire codebases or lengthy documentation, far exceeding the capabilities of the models of OpenAI or Anthropic. (OpenAI responded this week with models featuring similarly large context windows, though benchmarks suggest Gemini 2.5 Pro retains an edge in overall reasoning.) This advantage allows for complex, multi-file software engineering tasks.

Complementing Pro is Gemini 2.5 Flash, announced at Next ’25 and released just yesterday. Also a “thinking” model, Flash is optimized for low latency and cost-efficiency. You can control how much the model reasons and balance performance with your budget. This tiered approach further reflects the “intelligence per dollar” strategy championed by Google executives.

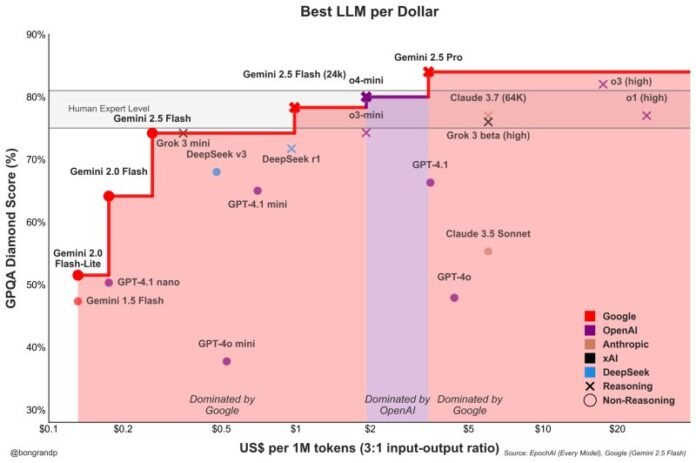

Velloso showed a chart revealing that across the intelligence spectrum, Google models offer the best value. “If we had this conversation one year ago… I would have nothing to show,” Velloso admitted, highlighting the rapid turnaround. “And now, like, across the board, we are, if you’re looking for whatever model, whatever size, like, if you’re not Google, you’re losing money.” Similar charts have been updated to account for OpenAI’s latest model releases this week, all showing the same thing: Google’s models offer the best intelligence per dollar. See below:

For any given price, Google’s models offer more intelligence than other models, about 90 percent of the time. Source: Pierre Bongrand.

Wayfair’s Tan said she also observed promising latency improvements with 2.5 Pro: “Gemini 2.5 came back faster,” making it viable for “more customer-facing sort of capabilities,” she said, something she said hasn’t been the case before with other models. Gemini could become the first model Wayfair uses for these customer interactions, she said.

The Gemini family’s capabilities extend to multimodality, integrating seamlessly with Google’s other leading models like Imagen 3 (image generation), Veo 2 (video generation), Chirp 3 (audio), and the newly announced Lyria (text-to-music), all accessible via Vertex, Google’s platform for enterprise users. Google is the only company that offers its own generative media models across all modalities on its platform. Microsoft, AWS and OpenAI have to partner with other companies to do this.

Pillar 2: Infrastructure prowess – the engine under the hood

The ability to rapidly iterate and efficiently serve these powerful models stems from Google’s arguably unparalleled infrastructure, honed over decades of running planet-scale services. Central to this is the Tensor Processing Unit (TPU).

At Next ’25, Google unveiled Ironwood, its seventh-generation TPU, explicitly designed for the demands of inference and “thinking models.” The scale is immense, tailored for demanding AI workloads: Ironwood pods pack over 9,000 liquid-cooled chips, delivering a claimed 42.5 exaflops of compute power. Google’s VP of ML Systems Amin Vahdat said on stage at Next that this is “more than 24 times” the compute power of the world’s current #1 supercomputer.
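As a rough sanity check on these figures, the short Python sketch below works out what they imply per chip and for the supercomputer being compared. It assumes exactly 9,000 chips per pod (the stated figure is "over 9,000") and treats "more than 24 times" as exactly 24x, so both results are upper bounds rather than official specifications. The function names are illustrative only.

```python
# Back-of-the-envelope arithmetic on the Ironwood pod figures quoted above.
# Assumptions (not Google specifications): exactly 9,000 chips per pod and a
# ratio of exactly 24x versus the #1 supercomputer.

POD_EXAFLOPS = 42.5       # claimed compute per pod, as quoted
CHIPS_PER_POD = 9_000     # assumed lower bound ("over 9,000" chips)
SUPERCOMPUTER_RATIO = 24  # assumed lower bound ("more than 24 times")


def per_chip_petaflops(pod_exaflops: float, chips: int) -> float:
    """Average compute per chip in petaflops (1 exaflop = 1,000 petaflops)."""
    return pod_exaflops * 1_000 / chips


def implied_supercomputer_exaflops(pod_exaflops: float, ratio: float) -> float:
    """Compute of the comparison supercomputer implied by the quoted ratio."""
    return pod_exaflops / ratio


if __name__ == "__main__":
    chip = per_chip_petaflops(POD_EXAFLOPS, CHIPS_PER_POD)
    rival = implied_supercomputer_exaflops(POD_EXAFLOPS, SUPERCOMPUTER_RATIO)
    print(f"At most about {chip:.2f} petaflops per chip")
    print(f"Comparison supercomputer: at most about {rival:.2f} exaflops")
```

Because the chip count and the ratio are both stated as lower bounds, the true per-chip and supercomputer figures would be somewhat smaller than what this prints.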

Google stated that Ironwood offers 2x perf/watt relative to Trillium, the previous generation of TPU. This is significant since enterprise customers increasingly say energy costs and availability constrain large-scale AI deployments.

Google Cloud CTO Will Grannis emphasized the consistency of this progress. Year over year, Google is making 10x, 8x, 9x, 10x improvements in its processors, he told VentureBeat in an interview, creating what he called a “hyper Moore’s law” for AI accelerators. He said customers are buying Google’s roadmap, not just its technology.

Google’s position fueled this sustained TPU investment. It needs to efficiently power massive services like Search, YouTube, and Gmail for more than 2 billion users. This necessitated developing custom, optimized hardware long before the current generative AI boom. While Meta operates at a similar consumer scale, other competitors lacked this specific internal driver for decade-long, vertically integrated AI hardware development.

Now these TPU investments are paying off: they drive efficiency not only for Google’s own apps but also let Google offer Gemini to other users at a better intelligence per dollar, all else being equal.

Why can’t Google’s competitors buy efficient processors from Nvidia, you ask? It’s true that Nvidia’s GPU processors dominate the pre-training of LLMs. But market demand has pushed up the price of these GPUs, and Nvidia takes a healthy cut for itself as profit. This passes significant costs along to users of its chips. And also, while pre-training has dominated the usage of AI chips so far, this is changing now that enterprises are actually deploying these applications. This is where “inference” comes in, and here TPUs are considered more efficient than GPUs for workloads at scale.

When you ask Google executives where their main technology advantage in AI comes from, they usually fall back to the TPU as the most important. Mark Lohmeyer, the VP who runs Google’s computing infrastructure, was unequivocal: TPUs are “actually a highly differentiated part of what we do… OpenAI, they don’t have these capabilities.”

Significantly, Google presents TPUs not in isolation, but as part of the broader, more complex enterprise AI architecture. For technical insiders, it’s understood that top-tier performance hinges on integrating increasingly specialized technology breakthroughs. Many updates were detailed at Next. Vahdat described this as a “supercomputing system,” integrating hardware (TPUs, the latest Nvidia GPUs like Blackwell and upcoming Vera Rubin, advanced storage like Hyperdisk Exapools, Anywhere Cache, and Rapid Storage) with a unified software stack. This software includes Cluster Director for managing accelerators, Pathways (Gemini’s distributed runtime, now available to customers), and optimizations like vLLM brought to TPUs, allowing easier workload migration for those previously on Nvidia/PyTorch stacks. This integrated system, Vahdat argued, is why Gemini 2.0 Flash achieves 24 times higher intelligence per dollar, compared to GPT-4o.

Google is also extending its physical infrastructure reach. Cloud WAN makes Google’s low-latency 2-million-mile private fiber network available to enterprises, promising up to 40% faster performance and 40% lower total cost of ownership (TCO) compared to customer-managed networks.

Furthermore, Google Distributed Cloud (GDC) allows Gemini and Nvidia hardware (via a Dell partnership) to run in sovereign, on-premises, and even air-gapped environments – a capability Nvidia CEO Jensen Huang lauded as “completely gigantic” for bringing state-of-the-art AI to regulated industries and nations. At Next, Huang called Google’s infrastructure the best in the world: “No company is better at every single layer of computing than Google and Google Cloud,” he said.

Pillar 3: The integrated full stack – connecting the dots

Google’s strategic advantage grows when considering how these models and infrastructure components are woven into a cohesive platform. Unlike competitors, which often rely on partnerships to bridge gaps, Google controls nearly every layer, enabling tighter integration and faster innovation cycles.

So why does this integration matter, if a competitor like Microsoft can simply partner with OpenAI to match infrastructure breadth with LLM prowess? The Googlers I talked with said it makes a huge difference, and they came up with anecdotes to back it up.

Take the significant improvement of Google’s enterprise database BigQuery. The database now offers a knowledge graph that allows LLMs to search over data much more efficiently, and it now boasts more than five times the customers of competitors like Snowflake and Databricks, VentureBeat reported yesterday. Yasmeen Ahmad, Head of Product for Data Analytics at Google Cloud, said the vast improvements were only possible because Google’s data teams were working closely with the DeepMind team. They worked through use cases that were hard to solve, and this led to the database being 50 percent more accurate than its closest competitors at getting to the right data on common queries, at least according to Google’s internal testing, Ahmad told VentureBeat in an interview. Ahmad said this sort of deep integration across the stack is how Google has “leapfrogged” the industry.

This internal cohesion contrasts sharply with the “frenemies” dynamic at Microsoft. While Microsoft partners with OpenAI to distribute its models on the Azure cloud, Microsoft is also building its own models. Mat Velloso, the Google executive who now leads the AI developer program, left Microsoft after getting frustrated trying to align Windows Copilot plans with OpenAI’s model offerings. “How do you share your product plans with another company that’s actually competing with you… The whole thing is a contradiction,” he recalled. “Here I sit side by side with the people who are building the models.”

This integration speaks to what Google leaders see as their core advantage: its unique ability to connect deep expertise across the full spectrum, from foundational research and model building to “planet-scale” application deployment and infrastructure design.

Vertex AI serves as the central nervous system for Google’s enterprise AI efforts. And the integration goes beyond just Google’s own offerings. Vertex’s Model Garden offers over 200 curated models, including Google’s, Meta’s Llama 4, and numerous open-source options. Vertex provides tools for tuning, evaluation (including AI-powered Evals, which Grannis highlighted as a key accelerator), deployment, and monitoring. Its grounding capabilities leverage internal AI-ready databases alongside compatibility with external vector databases. Add to that Google’s new offerings to ground models with Google Search, the world’s best search engine.

Integration extends to Google Workspace. New features announced at Next ’25, like “Help Me Analyze” in Sheets (yes, Sheets now has an “=AI” formula), Audio Overviews in Docs and Workspace Flows, further embed Gemini’s capabilities into daily workflows, creating a powerful feedback loop for Google to use to improve the experience.

While driving its integrated stack, Google also champions openness where it serves the ecosystem. Having driven Kubernetes adoption, it’s now promoting JAX for AI frameworks and open protocols for agent communication (A2A) alongside support for existing standards (MCP). Google is also offering hundreds of connectors to external platforms from within Agentspace, which is Google’s new unified interface for employees to find and use agents. This hub concept is compelling. The keynote demonstration of Agentspace (starting at 51:40) illustrates this. Google offers users pre-built agents, or employees or developers can build their own using no-code AI capabilities. Or they can pull in agents from the outside via A2A connectors. It integrates into the Chrome browser for seamless access.

Pillar 4: Focus on enterprise value and the agent ecosystem

Perhaps the most significant shift is Google’s sharpened focus on solving concrete enterprise problems, particularly through the lens of AI agents. Thomas Kurian, Google Cloud CEO, outlined three reasons customers choose Google: the AI-optimized platform, the open multi-cloud approach allowing connection to existing IT, and the enterprise-ready focus on security, sovereignty, and compliance.

Agents are key to this strategy. Aside from Agentspace, this also includes:

Building Blocks: The open-source Agent Development Kit (ADK), announced at Next, has already seen significant interest from developers. The ADK simplifies creating multi-agent systems, while the proposed Agent2Agent (A2A) protocol aims to ensure interoperability, allowing agents built with different tools (Gemini ADK, LangGraph, CrewAI, etc.) to collaborate. Google’s Grannis said that A2A anticipates the scale and security challenges of a future with potentially hundreds of thousands of interacting agents.

This A2A protocol is really important. In a background interview with VentureBeat this week, the CISO of a major US retailer, who requested anonymity because of the sensitivity around security issues, said the A2A protocol was helpful because the retailer is looking for a solution to distinguish between real people and bots that are using agents to buy products. This retailer wants to avoid selling to scalper bots, and with A2A, it’s easier to negotiate with agents to verify their owner identities.

Purpose-built Agents: Google showcased expert agents integrated into Agentspace (like NotebookLM, Idea Generation, Deep Research) and highlighted five key categories gaining traction: Customer Agents (powering tools like Reddit Answers, Verizon’s support assistant, Wendy’s drive-thru), Creative Agents (used by WPP, Brandtech, Sphere), Data Agents (driving insights at Mattel, Spotify, Bayer), Coding Agents (Gemini Code Assist), and Security Agents (integrated into the new Google Unified Security platform).

This comprehensive agent strategy appears to be resonating. Conversations with executives at three other large enterprises this past week, also speaking anonymously due to competitive sensitivities, echoed this enthusiasm for Google’s agent strategy. Google Cloud COO Francis deSouza confirmed in an interview: “Every conversation includes AI. Specifically, every conversation includes agents.”

Kevin Laughridge, an executive at Deloitte, a big user of Google’s AI products and a distributor of them to other companies, described the agent market as a “land grab” where Google’s early moves with protocols and its integrated platform offer significant advantages. “Whoever is getting out first and getting the most agents that actually deliver value – is who is going to win in this race,” Laughridge said in an interview. He said Google’s progress was “astonishing,” noting that custom agents Deloitte built just a year ago could now be replicated “out of the box” using Agentspace. Deloitte itself is building 100 agents on the platform, targeting mid-office functions like finance, risk and engineering, he said.

The customer proof points are mounting. At Next, Google cited “500 plus customers in production” with generative AI, up from just “dozens of prototypes” a year ago. If Microsoft was perceived as way ahead a year ago, that doesn’t seem so obviously the case anymore. Given the PR war from all sides, it’s difficult to say definitively who is really winning right now. Metrics vary. Google’s 500 number isn’t directly comparable to the 400 case studies Microsoft promotes (and Microsoft, in response, told VentureBeat at press time that it plans to update this public count to 600 shortly, underscoring the intense marketing). And if Google’s distribution of AI through its apps is significant, Microsoft’s Copilot distribution through its 365 offering is equally impressive. Both are now hitting millions of developers through APIs.

(Editor’s note: Understanding how enterprises are navigating this ‘agent land grab,’ and successfully deploying these complex AI solutions, will be central to the discussions at VentureBeat’s Transform event this June 24-25 in San Francisco.)

But examples abound of Google’s traction:

Wendy’s: Deployed an AI drive-thru system to thousands of locations in just one year, improving employee experience and order accuracy. Google Cloud CTO Will Grannis noted that the AI system is capable of understanding slang and filtering out background noise, significantly reducing the stress of live customer interactions. That frees up staff to focus on food prep and quality, a shift Grannis called “a great example of AI streamlining real-world operations.”

Salesforce: Announced a major expansion, enabling its platform to run on Google Cloud for the first time (beyond AWS), citing Google’s ability to help them “innovate and optimize.”

Honeywell & Intuit: Companies previously strongly associated with Microsoft and AWS, respectively, now partnering with Google Cloud on AI initiatives.

Major Banks (Deutsche Bank, Wells Fargo): Leveraging agents and Gemini for research, analysis, and modernizing customer service.

Retailers (Walmart, Mercado Libre, Lowe’s): Using search, agents, and data platforms.

This enterprise traction fuels Google Cloud’s overall growth, which has outpaced AWS and Azure for the last three quarters. Google Cloud reached a $44 billion annualized run rate in 2024, up from just $5 billion in 2018.
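To put that run-rate jump in perspective, the minimal Python sketch below converts the two quoted figures into a growth multiple and a compound annual growth rate. It assumes the 2018 and 2024 numbers are comparable annualized run rates measured six years apart, which the figures as quoted do not spell out. The helper name `cagr` is illustrative.

```python
# Rough growth arithmetic for the Google Cloud run-rate figures quoted above.
# Assumption: both figures are comparable annualized run rates, six years apart.

START_RUN_RATE_B = 5.0   # 2018 run rate, in billions of dollars (as quoted)
END_RUN_RATE_B = 44.0    # 2024 run rate, in billions of dollars (as quoted)
YEARS = 2024 - 2018      # assumed six-year span


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    multiple = END_RUN_RATE_B / START_RUN_RATE_B
    rate = cagr(START_RUN_RATE_B, END_RUN_RATE_B, YEARS)
    print(f"Growth multiple: {multiple:.1f}x")
    print(f"Implied compound annual growth: {rate:.1%}")
```

Under these assumptions the run rate grew nearly ninefold, which works out to compound growth in the low-to-mid 40 percent range per year.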

Navigating the competitive waters

Google’s ascent doesn’t mean competitors are standing still. OpenAI’s rapid releases this week of GPT-4.1 (focused on coding and long context) and the o-series (multimodal reasoning, tool use) demonstrate OpenAI’s continued innovation. Moreover, OpenAI’s new image generation feature update in GPT-4o fueled massive growth over just the last month, helping ChatGPT reach 800 million users. Microsoft continues to leverage its vast enterprise footprint and OpenAI partnership, while Anthropic remains a strong contender, particularly in coding and safety-conscious applications.

However, it’s indisputable that Google’s narrative has improved remarkably. Just a year ago, Google was viewed as a stodgy, halting, blundering competitor that perhaps was about to blow its chance at leading AI at all. Instead, its unique, integrated stack and corporate steadfastness have revealed something else: Google possesses world-class capabilities across the entire spectrum – from chip design (TPUs) and global infrastructure to foundational model research (DeepMind), application development (Workspace, Search, YouTube), and enterprise cloud services (Vertex AI, BigQuery, Agentspace). “We’re the only hyperscaler that’s in the foundational model conversation,” deSouza stated flatly. This end-to-end ownership allows for optimizations (like “intelligence per dollar”) and integration depth that partnership-reliant models struggle to match. Competitors often need to stitch together disparate pieces, potentially creating friction or limiting innovation speed.

Google’s moment is now

While the AI race remains dynamic, Google has assembled all these pieces at the precise moment the market demands them. As Deloitte’s Laughridge put it, Google hit a point where its capabilities aligned perfectly “where the market demanded it.” If you were waiting for Google to prove itself in enterprise AI, you may have missed the moment: it already has. The company that invented many of the core technologies powering this revolution appears to have finally caught up – and more than that, it is now setting the pace that competitors need to match.

In the video below, recorded right after Next, AI expert Sam Witteveen and I break down the current landscape and emerging trends, and why Google’s AI ecosystem feels so strong:
