Effective data governance has long been a critical priority for organizations seeking to maximize the value of their data assets. It encompasses the processes, policies, and practices an organization uses to manage its data resources. The key goals of data governance are to make data discoverable and usable by those who need it, accurate and consistent, secure and protected from unauthorized access or misuse, and compliant with relevant regulations and standards. Data governance involves establishing clear ownership and accountability for data, including defining roles, responsibilities, and decision-making authority related to data management.

Traditionally, data governance frameworks have been designed to manage data at rest: the structured and unstructured information stored in databases, data warehouses, and data lakes. Amazon DataZone is a data governance and catalog service from Amazon Web Services (AWS) that allows organizations to centrally discover, control, and evolve schemas for data at rest, including AWS Glue tables on Amazon Simple Storage Service (Amazon S3), Amazon Redshift tables, and Amazon SageMaker models.

However, the rise of real-time data streams and streaming data applications impacts data governance, necessitating changes to existing frameworks and practices to effectively manage the new data dynamics. Governing these rapid, decentralized data streams presents a new set of challenges that extend beyond the capabilities of many conventional data governance approaches. Factors such as the ephemeral nature of streaming data, the need for real-time responsiveness, and the technical complexity of distributed data sources require a reimagining of how we think about data oversight and control.

In this post, we explore how AWS customers can extend Amazon DataZone to support streaming data such as Amazon Managed Streaming for Apache Kafka (Amazon MSK) topics. Developers and DevOps managers can use Amazon MSK, a popular streaming data service, to run Kafka applications and Kafka Connect connectors on AWS without becoming experts in operating it. We explain how they can use Amazon DataZone custom asset types and custom authorizers to: 1) catalog Amazon MSK topics, 2) provide useful metadata such as schema and lineage, and 3) securely share Amazon MSK topics across the organization. To accelerate the implementation of Amazon MSK governance in Amazon DataZone, we use the Data Solutions Framework on AWS (DSF), an opinionated open source framework that we announced earlier this year. DSF relies on AWS Cloud Development Kit (AWS CDK) and provides several AWS CDK L3 constructs that accelerate building data solutions on AWS, including streaming governance.

High-level approach for governing streaming data in Amazon DataZone

To anchor the discussion on supporting streaming data in Amazon DataZone, we use Amazon MSK as an integration example, but the approach and the architectural patterns remain the same for other streaming services (such as Amazon Kinesis Data Streams). At a high level, to integrate streaming data, you need the following capabilities:

A mechanism for the Kafka topic to be represented in the Amazon DataZone catalog for discoverability (including the schema of the data flowing inside the topic), for tracking lineage and other metadata, and for consumers to request access against.

A mechanism to handle the custom authorization flow when a consumer triggers the subscription grant to an environment. This flow consists of the following high-level steps:

Collect metadata of the target Amazon MSK cluster or topic that’s being subscribed to by the consumer

Update the producer Amazon MSK cluster’s resource policy to allow access from the consumer role

Provide Kafka topic-level AWS Identity and Access Management (IAM) permissions to the consumer roles (more on this later) so that they have access to the target Amazon MSK cluster

Finally, update the internal metadata of Amazon DataZone so that it’s aware of the existing subscription between producer and consumer

Amazon DataZone catalog

Before you can represent the Kafka topic as an entry in the Amazon DataZone catalog, you need to define:

A custom asset type that describes the metadata that’s needed to describe a Kafka topic. To describe the schema as part of the metadata, use the built-in form type amazon.datazone.RelationalTableFormType and create two more custom form types:

MskSourceReferenceFormType, which contains the cluster_ARN and the cluster_type. The type is used to determine whether the Amazon MSK cluster is provisioned or serverless, given that there’s a different process to grant consume permissions.

KafkaSchemaFormType, which contains various metadata on the schema, including the kafka_topic, the schema_version, schema_arn, registry_arn, compatibility_mode (for example, backward-compatible or forward-compatible) and data_format (for example, Avro or JSON), which is helpful if you plan to integrate with the AWS Glue Schema registry.

After the custom asset type has been defined, you can create an asset based on the custom asset type. The asset describes the schema, the Amazon MSK cluster, and the topic that you want to be made discoverable and accessible to consumers.
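To make the two custom form types more concrete, the following is a minimal Python sketch that assembles their content for a single topic. The field names come from the form types described above. The function name, the form names, and the cluster type spellings are hypothetical, and the `formName`, `typeIdentifier`, and `content` keys assume the shape that the Amazon DataZone CreateAsset API expects for form inputs.

```python
import json

# Assumed spellings for the two cluster types; the post only says
# "provisioned or serverless".
VALID_CLUSTER_TYPES = {"PROVISIONED", "SERVERLESS"}


def build_msk_forms_input(cluster_arn, cluster_type, kafka_topic, schema_version,
                          schema_arn, registry_arn, compatibility_mode, data_format):
    """Return a list of form inputs describing one Kafka topic (illustrative helper)."""
    if cluster_type not in VALID_CLUSTER_TYPES:
        raise ValueError(f"unknown cluster type: {cluster_type}")

    source_reference = {"cluster_ARN": cluster_arn, "cluster_type": cluster_type}
    kafka_schema = {
        "kafka_topic": kafka_topic,
        "schema_version": schema_version,
        "schema_arn": schema_arn,
        "registry_arn": registry_arn,
        "compatibility_mode": compatibility_mode,
        "data_format": data_format,
    }
    # Form content is serialized to a JSON string; form names are hypothetical.
    return [
        {
            "formName": "MskSourceReferenceForm",
            "typeIdentifier": "MskSourceReferenceFormType",
            "content": json.dumps(source_reference),
        },
        {
            "formName": "KafkaSchemaForm",
            "typeIdentifier": "KafkaSchemaFormType",
            "content": json.dumps(kafka_schema),
        },
    ]
```

The returned list could then be passed along with the asset name and the custom asset type when creating the asset. The schema columns themselves would go in a third form based on amazon.datazone.RelationalTableFormType, which is omitted here.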

Data source for Amazon MSK clusters with AWS Glue Schema registry

In Amazon DataZone, you can create data sources for AWS Glue Data Catalog to import technical metadata of database tables from AWS Glue and have the assets registered in the Amazon DataZone project. For importing metadata related to Amazon MSK, you need to use a custom data source, which can be an AWS Lambda function, using the Amazon DataZone APIs.

As part of the solution, we provide a custom Amazon MSK data source with the AWS Glue Schema registry, for automating the creation, update, and deletion of custom Amazon MSK assets. It uses AWS Lambda to extract schema definitions from a Schema registry and metadata from the Amazon MSK clusters and then creates or updates the corresponding assets in Amazon DataZone.

Before explaining how the data source works, you need to know that every custom asset in Amazon DataZone has a unique identifier. When the data source creates an asset, it stores the asset’s unique identifier in Parameter Store, a capability of AWS Systems Manager.

The steps for how the data source works are as follows:

1. The Amazon MSK AWS Glue Schema registry data source can be scheduled to be triggered on a given interval or by listening to AWS Glue Schema events such as Create, Update or Delete Schema. It can also be invoked manually through the AWS Lambda console.

2. When triggered, it retrieves all the existing unique identifiers from Parameter Store. These parameters serve as a reference to identify if an Amazon MSK asset already exists in Amazon DataZone.

3. The function lists the Amazon MSK clusters and retrieves the Amazon Resource Name (ARN) for the given Amazon MSK name and additional metadata related to the Amazon MSK cluster type (serverless or provisioned). This metadata will be used later by the custom authorization flow.

4. Then the function lists all the schemas in the Schema registry for a given registry name. For each schema, it retrieves the latest version and schema definition. The schema definition is what allows you to add schema information when creating the asset in Amazon DataZone.

5. For each schema retrieved in the Schema registry, the Lambda function checks if the asset already exists by looking into the Systems Manager parameters retrieved in the second step.

a. If the asset exists, the Lambda function updates the asset in Amazon DataZone, creating a new revision with the updated schema or forms.

b. If the asset doesn’t exist, the Lambda function creates the asset in Amazon DataZone and stores its unique identifier in Systems Manager for future reference.

6. If there are schemas registered in Parameter Store that are no longer in the Schema registry, the data source deletes the corresponding Amazon DataZone assets and removes the associated parameters from Systems Manager.
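The create, update, and delete decisions in the steps above amount to a comparison between two sets of schema names. The following Python sketch shows only that comparison, assuming the registry schemas and the stored identifiers have already been fetched into dictionaries. The function name and the input shapes are illustrative and are not taken from the DSF implementation.

```python
def plan_asset_sync(registry_schemas, stored_asset_ids):
    """Decide which DataZone assets to create, update, or delete.

    registry_schemas: dict of schema name -> latest schema definition
                      (as read from the Schema registry).
    stored_asset_ids: dict of schema name -> asset identifier
                      (as read from Parameter Store).
    """
    in_registry = set(registry_schemas)
    already_known = set(stored_asset_ids)
    return {
        # Schema exists but no identifier is stored: create a new asset.
        "create": sorted(in_registry - already_known),
        # Schema exists and an identifier is stored: publish a new revision.
        "update": sorted(in_registry & already_known),
        # Identifier is stored but the schema is gone: delete asset and parameter.
        "delete": sorted(already_known - in_registry),
    }


plan = plan_asset_sync(
    registry_schemas={"orders": "{...}", "payments": "{...}"},
    stored_asset_ids={"orders": "asset-1", "refunds": "asset-2"},
)
print(plan)
```

Keeping the planning step free of AWS calls makes it straightforward to unit test; the Lambda function would then apply each entry of the plan using the Amazon DataZone and Systems Manager APIs.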

The Amazon MSK AWS Glue Schema registry data source for Amazon DataZone enables seamless registration of Kafka topics as custom assets in Amazon DataZone. It does require that the topics in the Amazon MSK cluster use the Schema registry for schema management.

Custom authorization flow

For managed assets such as AWS Glue Data Catalog and Amazon Redshift assets, the process to grant access to the consumer is managed by Amazon DataZone. Custom asset types are considered unmanaged assets, and the process to grant access needs to be implemented outside of Amazon DataZone.

The high-level steps for the end-to-end flow are as follows:

1. (Conditional) If the consumer environment doesn’t have a subscription target, create it through the CreateSubscriptionTarget API call. The subscription target tells Amazon DataZone which environments are compatible with an asset type.

2. The consumer triggers a subscription request by subscribing to the relevant streaming data asset through the Amazon DataZone portal.

3. The producer receives the subscription request and approves (or denies) the request.

4. After the subscription request has been approved by the producer, the consumer can see the streaming data asset in their project under the Subscribed data section.

5. The consumer can opt to trigger a subscription grant to a target environment directly from the Amazon DataZone portal, and this action triggers the custom authorization flow.

For steps 2–4, you rely on the default behavior of Amazon DataZone and no change is needed. The focus of this section is therefore step 1 (subscription target) and step 5 (subscription grant process).

Subscription target

Amazon DataZone has a concept called environments within a project, which indicates where the resources are located and the associated access configuration (for example, the IAM role) that’s used to access those resources. To allow an environment to have access to the custom asset type, consumers have to use the Amazon DataZone CreateSubscriptionTarget API prior to the subscription grants. The creation of the subscription target is a one-time operation per custom asset type per environment. In addition, the authorizedPrincipals parameter inside the CreateSubscriptionTarget API lists the various IAM principals given access to the Amazon MSK topic as part of the grant authorization flow. Finally, when calling CreateSubscriptionTarget, the underlying principal used to call the API must belong to the target environment’s AWS account ID.

After the subscription target has been created for a custom asset type and environment, the environment is eligible as a target for subscription grants.
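As an illustration, the request for CreateSubscriptionTarget can be assembled as a plain dictionary before it is sent. The sketch below assumes the parameter names of the boto3 DataZone client's `create_subscription_target` call. The helper name and the default target name are hypothetical, the target type is left for the caller to supply, and the empty `subscriptionTargetConfig` is an assumption for a custom asset type with no extra configuration.

```python
def build_subscription_target_request(domain_id, environment_id, asset_type_name,
                                      authorized_principals, manage_access_role_arn,
                                      target_type, name="MskTopicsTarget"):
    """Assemble keyword arguments for CreateSubscriptionTarget (illustrative helper)."""
    if not authorized_principals:
        raise ValueError("at least one authorized IAM principal is required")
    return {
        "domainIdentifier": domain_id,
        "environmentIdentifier": environment_id,
        "name": name,                              # hypothetical default name
        "type": target_type,                       # supplied by the caller
        "applicableAssetTypes": [asset_type_name],
        "authorizedPrincipals": list(authorized_principals),
        "manageAccessRole": manage_access_role_arn,
        "subscriptionTargetConfig": [],            # assumption: no extra forms
    }


# Sending the request requires credentials from the target environment's
# AWS account, so the call is shown here only as a comment:
#   import boto3
#   boto3.client("datazone").create_subscription_target(**request)
```

Because the subscription target is created once per custom asset type and environment, a helper like this would typically run during environment setup and not on every subscription.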

Subscription grant process

Amazon DataZone emits events based on user actions, and you use this mechanism to trigger the custom authorization process when a subscription grant has been triggered for Amazon MSK topics. Specifically, you use the Subscription grant requested event. These are the steps of the authorization flow:

A Lambda function collects metadata on the following:

The producer Amazon MSK cluster or Kinesis data stream that the consumer is requesting access to. Metadata is collected using the GetListing API.

Metadata about the target environment, using a call to the GetEnvironment API.

Metadata about the subscription target, using a call to the GetSubscriptionTarget API to collect the consumer roles to grant.

In parallel, Amazon DataZone internal metadata about the status of the subscription grant needs to be updated, and this happens in this step. Depending on the type of action that’s being performed (such as GRANT or REVOKE), the status of the subscription grant is updated accordingly (for example, GRANT_IN_PROGRESS or REVOKE_IN_PROGRESS).

After the metadata has been collected, it’s passed downstream as part of the AWS Step Functions state.

Update the resource policy of the target resource (for example, Amazon MSK cluster or Kinesis data stream) in the producer account. The update allows authorized principals from the consumer to access or read the target resource. An example of the policy is as follows:

{
  "Effect": "Allow",
  "Principal": {
    "AWS": [
      ""
    ]
  },
  "Action": [
    "kafka-cluster:Connect",
    "kafka-cluster:DescribeTopic",
    "kafka-cluster:DescribeGroup",
    "kafka-cluster:AlterGroup",
    "kafka-cluster:ReadData",
    "kafka:CreateVpcConnection",
    "kafka:GetBootstrapBrokers",
    "kafka:DescribeCluster",
    "kafka:DescribeClusterV2"
  ],
  "Resource": [
    "",
    "",
    ""
  ]
}

Update the configured authorized principals by attaching additional IAM permissions depending on specific scenarios. The following examples illustrate what’s being added.

The base access or read permissions are as follows:

{
  "Effect": "Allow",
  "Action": [
    "kafka-cluster:Connect",
    "kafka-cluster:DescribeTopic",
    "kafka-cluster:DescribeGroup",
    "kafka-cluster:AlterGroup",
    "kafka-cluster:ReadData"
  ],
  "Resource": [
    "",
    "",
    ""
  ]
}

If there’s an AWS Glue Schema registry ARN provided as part of the AWS CDK construct parameter, then additional permissions are added to allow access to both the registry and the specific schema:

{
  "Effect": "Allow",
  "Action": [
    "glue:GetRegistry",
    "glue:ListRegistries",
    "glue:GetSchema",
    "glue:ListSchemas",
    "glue:GetSchemaByDefinition",
    "glue:GetSchemaVersion",
    "glue:ListSchemaVersions",
    "glue:GetSchemaVersionsDiff",
    "glue:CheckSchemaVersionValidity",
    "glue:QuerySchemaVersionMetadata",
    "glue:GetTags"
  ],
  "Resource": [
    "",
    ""
  ]
}

If this grant is for a consumer in a different account, the following permissions are also added to allow managed VPC connections to be created by the consumer:

{
  "Effect": "Allow",
  "Action": [
    "kafka:CreateVpcConnection",
    "ec2:CreateTags",
    "ec2:CreateVPCEndpoint"
  ],
  "Resource": "*"
}
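The base read statement shown earlier can also be generated programmatically. The following Python sketch is one way to do that, under two assumptions: that the three resources in the statement are the cluster, topic, and consumer group ARNs, and that topic and group ARNs follow the Amazon MSK IAM access control format in which `cluster/` in the cluster ARN is replaced by `topic/` or `group/` and the topic or group name is appended. The function names are illustrative.

```python
READ_ACTIONS = [
    "kafka-cluster:Connect",
    "kafka-cluster:DescribeTopic",
    "kafka-cluster:DescribeGroup",
    "kafka-cluster:AlterGroup",
    "kafka-cluster:ReadData",
]


def msk_resource_arns(cluster_arn, topic, group="*"):
    """Derive topic and consumer group ARNs from a cluster ARN."""
    prefix, separator, cluster_path = cluster_arn.partition(":cluster/")
    if not separator or not cluster_path:
        raise ValueError(f"not an MSK cluster ARN: {cluster_arn}")
    return [
        cluster_arn,
        f"{prefix}:topic/{cluster_path}/{topic}",
        f"{prefix}:group/{cluster_path}/{group}",
    ]


def consumer_read_statement(cluster_arn, topic, group="*"):
    """Build the base read statement for one topic (illustrative helper)."""
    return {
        "Effect": "Allow",
        "Action": list(READ_ACTIONS),
        "Resource": msk_resource_arns(cluster_arn, topic, group),
    }
```

Scoping the statement to a single topic ARN keeps the grant limited to the asset that was subscribed to. The wildcard default for the consumer group is a convenience in this sketch and can be narrowed to a specific group name.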

Update the Amazon DataZone internal metadata on the progress of the subscription grant (for example, GRANTED or REVOKED). If there’s an exception in a step, it’s handled within Step Functions and the subscription grant metadata is updated with a failed state (for example, GRANT_FAILED or REVOKE_FAILED).
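The status values mentioned in this flow form a small lookup keyed by the action and by how far the workflow has progressed. The following Python sketch captures that mapping. The status strings are the ones named in this post, while the function name and the phase labels are illustrative.

```python
# Status recorded for each action at each phase of the workflow.
STATUS_BY_PHASE = {
    "in_progress": {"GRANT": "GRANT_IN_PROGRESS", "REVOKE": "REVOKE_IN_PROGRESS"},
    "succeeded": {"GRANT": "GRANTED", "REVOKE": "REVOKED"},
    "failed": {"GRANT": "GRANT_FAILED", "REVOKE": "REVOKE_FAILED"},
}


def subscription_grant_status(action, phase):
    """Return the grant status to record for an action at a given phase."""
    try:
        return STATUS_BY_PHASE[phase][action]
    except KeyError:
        raise ValueError(f"unsupported action/phase: {action}/{phase}") from None


print(subscription_grant_status("GRANT", "in_progress"))
print(subscription_grant_status("REVOKE", "failed"))
```

In a Step Functions workflow, the failed phase would typically be recorded from a catch handler so that the subscription grant never stays in an in-progress state after an error.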

Because Amazon DataZone supports multi-account architecture, the subscription grant process is a distributed workflow that needs to perform actions across different accounts, and it’s orchestrated from the Amazon DataZone domain account where all the events are received.

Implement streaming governance in Amazon DataZone with DSF

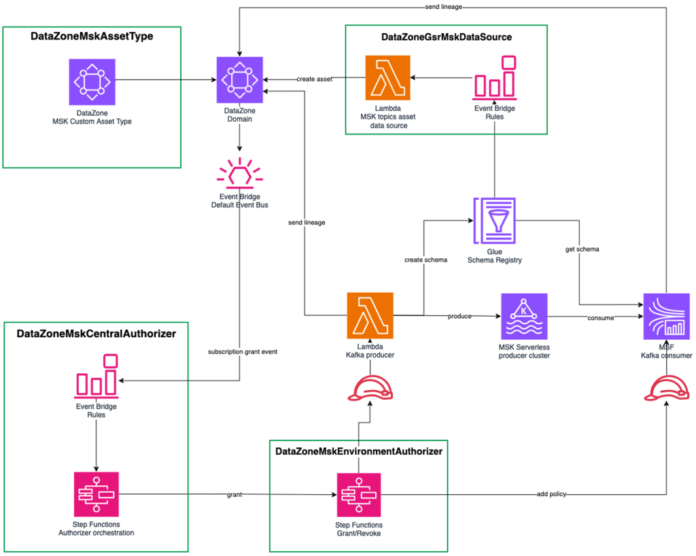

In this section, we deploy an example to illustrate the solution using DSF on AWS, which provides all the required components to accelerate the implementation of the solution. We use the following CDK L3 constructs from DSF:

DataZoneMskAssetType creates the custom asset type representing an Amazon MSK topic in Amazon DataZone

DataZoneGsrMskDataSource automatically creates Amazon MSK topic assets in Amazon DataZone based on schema definitions in the Schema registry

DataZoneMskCentralAuthorizer and DataZoneMskEnvironmentAuthorizer implement the subscription grant process for Amazon MSK topics and IAM authentication

The following diagram is the architecture for the solution.

In this example, we use Python for the example code. DSF also supports TypeScript.

Deployment steps

Follow the steps in the data-solutions-framework-on-aws README to deploy the solution. You need to deploy the CDK stack first, then create the custom environment and redeploy the stack with additional information.

Verify the example is working

To verify the example is working, produce sample data using the Lambda function StreamingGovernanceStack-ProducerLambda. Follow these steps:

Use the AWS Lambda console to test the Lambda function by running a sample test event. The event JSON should be empty. Save your test event and choose Test.

Producing test events generates a new schema, producer-data-product, in the Schema registry. Check that the schema is created from the AWS Glue console by using the Data Catalog menu on the left and selecting Stream schema registries.

New data assets should be in the Amazon DataZone portal, under the PRODUCER project

On the DATA tab, in the left navigation pane, select Inventory data, as shown in the following screenshot

Select producer-data-product

Select the BUSINESS METADATA tab to view the business metadata, as shown in the following screenshot.

To view the schema, select the SCHEMA tab, as shown in the following screenshot

To view the lineage, select the LINEAGE tab

To publish the asset, select PUBLISH ASSET, as shown in the following screenshot

Subscribe

To subscribe, follow these steps:

Switch to the consumer project by selecting CONSUMER in the top left of the screen

Select Browse Catalog

Choose producer-data-product and choose SUBSCRIBE, as shown in the following screenshot

Return to the PRODUCER project and choose producer-data-product, as shown in the following screenshot

Choose APPROVE, as shown in the following screenshot

Go to the AWS Identity and Access Management (IAM) console and search for the consumer role. In the role definition, you should see an IAM inline policy with permissions on the Amazon MSK cluster, the Kafka topic, the Kafka consumer group, the AWS Glue schema registry and the schema from the producer.

Now let’s switch to the consumer’s environment in the Amazon Managed Service for Apache Flink console and run the Flink application called flink-consumer using the Run button.

Return to the Amazon DataZone portal, and confirm that the lineage under the CONSUMER project was updated and the new Flink job run node was added to the lineage graph, as shown in the following screenshot

Clean up

To clean up the resources you created as part of this walkthrough, follow these steps:

Stop the Amazon Managed Service for Apache Flink job.

Revoke the subscription grant from the Amazon DataZone console.

Run cdk destroy in your local terminal to delete the stack. Because you marked the constructs with a RemovalPolicy.DESTROY and configured DSF to remove data on destroy, running cdk destroy or deleting the stack from the AWS CloudFormation console will clean up the provisioned resources.

Conclusion

In this post, we shared how you can integrate streaming data from Amazon MSK within Amazon DataZone to create a unified data governance framework that spans the entire data lifecycle, from the ingestion of streaming data to its storage and eventual consumption by diverse producers and consumers.

We also demonstrated how to use the AWS CDK and the DSF on AWS to quickly implement this solution using built-in best practices. In addition to the Amazon DataZone streaming governance, DSF supports other patterns, such as Spark data processing and Amazon Redshift data warehousing. Our roadmap is publicly available, and we look forward to your feature requests, contributions, and feedback. You can get started using DSF by following our Quick start guide.

About the Authors

Vincent Gromakowski is a Principal Analytics Solutions Architect at AWS where he enjoys solving customers’ data challenges. He uses his strong expertise in analytics, distributed systems, and resource orchestration platforms to be a trusted technical advisor for AWS customers.

Francisco Morillo is a Sr. Streaming Solutions Architect at AWS, specializing in real-time analytics architectures. With over 5 years in the streaming data space, Francisco has worked as a data analyst for startups and as a big data engineer for consultancies, building streaming data pipelines. He has deep expertise in Amazon Managed Streaming for Apache Kafka (Amazon MSK) and Amazon Managed Service for Apache Flink. Francisco collaborates closely with AWS customers to build scalable streaming data solutions and advanced streaming data lakes, ensuring seamless data processing and real-time insights.

Jan Michael Go Tan is a Principal Solutions Architect for Amazon Web Services. He helps customers design scalable and innovative solutions with the AWS Cloud.

Sofia Zilberman is a Sr. Analytics Specialist Solutions Architect at Amazon Web Services. She has a track record of 15 years of creating large-scale, distributed processing systems. She remains passionate about big data technologies and architecture trends, and is constantly on the lookout for functional and technological innovations.
