Businesses increasingly require scalable, cost-efficient architectures to process and transform massive datasets. At the BMW Group, our Cloud Efficiency Analytics (CLEA) team has developed a FinOps solution to optimize costs across over 10,000 cloud accounts. While enabling organization-wide efficiency, the team also applied these principles to the data architecture, making sure that CLEA itself operates frugally. After evaluating various tools, we built a serverless data transformation pipeline using Amazon Athena and dbt.

This post explores our journey, from the initial challenges to our current architecture, and details the steps we took to achieve a highly efficient, serverless data transformation setup.

Challenges: Starting from a rigid and costly setup

In our early stages, we encountered several inefficiencies that made scaling difficult. We were managing complex schemas with wide tables that required significant maintenance effort. Initially, we used Terraform to create tables and views in Athena, which allowed us to manage our data infrastructure as code (IaC) and automate deployments through continuous integration and delivery (CI/CD) pipelines. However, this approach slowed us down whenever we changed data models or dealt with schema changes, and therefore required high development effort.

As our solution grew, we faced challenges with query performance and costs. Each query scanned large amounts of raw data, resulting in longer processing times and higher Athena costs. We used views to provide a clean abstraction layer, but this masked the underlying complexity: seemingly simple queries against these views scanned large volumes of raw data, and our partitioning strategy wasn’t optimized for these access patterns. As our datasets grew, the lack of modularity in our data design increased complexity, making scalability and maintenance increasingly difficult. We needed a solution for pre-aggregating, computing, and storing the results of computationally intensive transformations. The absence of robust testing and lineage features also made it difficult to identify the root causes of data inconsistencies when they occurred.

As part of our business intelligence (BI) solution, we used Amazon QuickSight to build our dashboards, providing visual insights into our cloud cost data. However, our initial data architecture led to challenges. We were building dashboards on top of large, wide datasets, some of which hit the QuickSight per-dataset SPICE limit of 1 TB. Additionally, during SPICE ingestion, our largest datasets required 4–5 hours of processing time because every ingestion performed a full scan, often over more than a terabyte of data. This architecture wasn’t helping us become more agile and fast while scaling up. The long processing times and storage limitations hindered our ability to provide timely insights and expand our analytics capabilities.

To address these issues, we enhanced the data architecture with AWS Lambda, AWS Step Functions, AWS Glue, and dbt. This tool stack significantly increased our development agility, empowering us to quickly modify and introduce new data models. At the same time, we improved our overall data processing efficiency with incremental loads and better schema management.

Solution overview

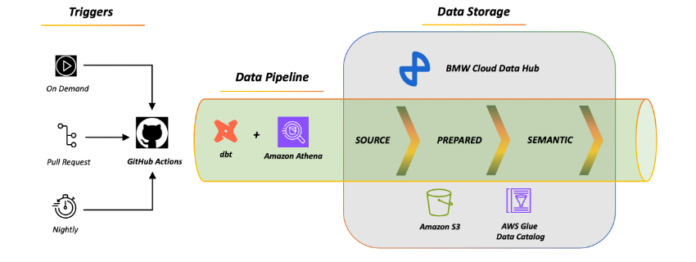

Our present structure consists of a serverless and modular pipeline coordinated by GitHub Actions workflows. We selected Athena as our major question engine for a number of strategic causes: it aligns completely with our staff’s SQL experience, excels at querying Parquet knowledge immediately in our knowledge lake, and alleviates the necessity for devoted compute assets. This makes Athena a super match for CLEA’s structure, the place we course of round 300 GB each day from an information lake of 15 TB, with our largest dataset containing 50 billion rows throughout as much as 400 columns. The potential of Athena to effectively question large-scale Parquet knowledge, mixed with its serverless nature, allows us to deal with writing environment friendly transformations somewhat than managing infrastructure.

The next diagram illustrates the answer structure.

Using this architecture, we’ve streamlined our data transformation process with dbt. In dbt, a data model represents a single SQL transformation that creates either a table or a view: essentially a building block of our data transformation pipeline. Our implementation includes around 400 such models, 50 data sources, and around 100 data tests. This setup enables seamless updates, whether we are creating new models, updating schemas, or modifying views: each change is triggered simply by creating a pull request in our source code repository, and the rest is handled automatically.
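To make the idea of models as building blocks concrete, the following Python sketch mimics how dbt turns `ref()` dependencies into a directed acyclic graph and runs each model only after everything upstream of it. The model names and stage prefixes are hypothetical, and the standard-library `graphlib` module stands in for dbt’s own graph handling; it illustrates the concept, not dbt internals.

```python
from graphlib import TopologicalSorter

# Hypothetical models mapped to the models they ref() (their upstream dependencies).
MODEL_DEPENDENCIES: dict[str, set[str]] = {
    "src_cost_usage": set(),                    # Source stage: raw data as delivered
    "src_accounts": set(),
    "prep_cost_usage": {"src_cost_usage"},      # Prepared stage: cleansed and deduplicated
    "prep_accounts": {"src_accounts"},
    "sem_daily_cost_by_account": {"prep_cost_usage", "prep_accounts"},  # Semantic stage
}


def build_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every model comes after all of its upstream models."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for model in build_order(MODEL_DEPENDENCIES):
        print(model)
```

Because the order is derived from declared dependencies rather than maintained by hand, adding a model only requires referencing its inputs; the graph takes care of where it runs.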

Our workflow automation includes the following features:

Pull request – When we create a pull request, the changes are deployed to our test environment first. After they pass validation and the pull request is approved and merged, they are deployed to production using GitHub workflows.

Cron scheduler – For nightly runs, or multiple daily runs to reduce data latency, we use scheduled GitHub workflows. This setup allows us to configure individual models with different update strategies based on data needs. We can set models to update incrementally (processing only new or changed data), as views (querying without materializing data), or as full loads (completely refreshing the data). This flexibility optimizes processing time and resource usage. We can also target only specific folders, such as the source, prepared, or semantic layers, and run dbt test afterward to validate model quality.

On demand – When adding new columns or changing business logic, we need to update historical data to maintain consistency. For this, we use a backfill process, which is a custom GitHub workflow created by our team. The workflow allows us to select specific models, include their upstream dependencies, and set parameters such as start and end dates. This makes sure that changes are applied accurately across the entire historical dataset, maintaining data consistency and integrity.
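The scheduled and on-demand triggers above ultimately translate into dbt CLI invocations. The following Python sketch builds such commands. `dbt run`, `dbt test`, the `path:` selector method, the `+` upstream graph operator, `--full-refresh`, and `--vars` are standard dbt CLI features; the folder layout, model name, `start_date`/`end_date` variables, and 30-day chunking are assumptions for illustration, not the team’s actual workflow.

```python
import json
import shlex
from datetime import date, timedelta

# Hypothetical folder layout: one dbt models/ subfolder per pipeline stage.
LAYER_PATHS = {
    "source": "models/source",
    "prepared": "models/prepared",
    "semantic": "models/semantic",
}


def scheduled_commands(layers, full_refresh=False):
    """Cron trigger: run the selected layers, then test the same selection."""
    # Space-separated selectors inside one --select value form a union in dbt.
    selection = " ".join(f"path:{LAYER_PATHS[layer]}" for layer in layers)
    run_cmd = ["dbt", "run", "--select", selection]
    if full_refresh:
        # Rebuild incremental models from scratch instead of processing only new data.
        run_cmd.append("--full-refresh")
    return [run_cmd, ["dbt", "test", "--select", selection]]


def backfill_commands(models, start, end, chunk_days=30, include_upstream=True):
    """On-demand trigger: rebuild models (optionally with upstream models) over a date range."""
    if chunk_days < 1:
        raise ValueError("chunk_days must be at least 1")
    # "+model" selects the model together with everything upstream of it.
    selection = " ".join(f"+{m}" if include_upstream else m for m in models)
    commands = []
    chunk_start = start
    while chunk_start <= end:
        chunk_end = min(chunk_start + timedelta(days=chunk_days - 1), end)
        # Hypothetical variable names; the models would have to read them with var().
        dbt_vars = {"start_date": chunk_start.isoformat(), "end_date": chunk_end.isoformat()}
        commands.append(["dbt", "run", "--select", selection, "--vars", json.dumps(dbt_vars)])
        chunk_start = chunk_end + timedelta(days=1)
    return commands


if __name__ == "__main__":
    for cmd in scheduled_commands(["prepared", "semantic"]):
        print(shlex.join(cmd))
    for cmd in backfill_commands(["sem_daily_cost_by_account"], date(2024, 1, 1), date(2024, 3, 1)):
        print(shlex.join(cmd))
```

In a GitHub Actions job, each returned list could be passed to `subprocess.run(cmd, check=True)`. Splitting a long backfill into chunks is a design choice: each Athena query stays smaller, and a failed chunk is cheaper to retry.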

Our pipeline is organized into three main stages (Source, Prepared, and Semantic), each serving a specific purpose in our data transformation journey. The Source stage keeps raw data in its original form. The Prepared stage cleanses and standardizes this data, handling tasks such as deduplication and data type conversions. The Semantic stage transforms the prepared data into business-ready models aligned with our analytical needs. An additional QuickSight step handles visualization requirements. To achieve low cost and high performance, we use dbt models and SQL code to manage all transformations and schema changes. By implementing incremental processing strategies, our models process only new or changed data rather than reprocessing the entire dataset on every run.
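The stage responsibilities and the incremental strategy can be illustrated with plain Python over in-memory rows. In this sketch, the Prepared step deduplicates and casts types, and the Semantic step aggregates daily cost per account while processing only rows newer than the latest day already in the target, which is the idea behind filtering on `is_incremental()` in a dbt incremental model. All field names and values are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical raw rows in the Source stage: strings as delivered, duplicates included.
SOURCE_ROWS = [
    {"usage_date": "2024-05-01", "account_id": "111", "cost": "10.50"},
    {"usage_date": "2024-05-01", "account_id": "111", "cost": "10.50"},  # exact duplicate
    {"usage_date": "2024-05-02", "account_id": "111", "cost": "4.25"},
    {"usage_date": "2024-05-02", "account_id": "222", "cost": "7.00"},
]


def prepare(rows):
    """Prepared stage: drop exact duplicates and convert strings to proper types."""
    seen, prepared = set(), []
    for row in rows:
        key = (row["usage_date"], row["account_id"], row["cost"])
        if key in seen:
            continue
        seen.add(key)
        prepared.append({
            "usage_date": date.fromisoformat(row["usage_date"]),
            "account_id": row["account_id"],
            "cost": float(row["cost"]),
        })
    return prepared


def semantic_incremental(prepared, target):
    """Semantic stage: aggregate daily cost per account into `target`.

    Only rows newer than the latest day already in `target` are processed. A production
    model would usually also reprocess a short lookback window for late-arriving data.
    """
    watermark = max((day for day, _ in target), default=None)  # None on the first run
    new_rows = [r for r in prepared if watermark is None or r["usage_date"] > watermark]
    totals = defaultdict(float)
    for r in new_rows:
        totals[(r["usage_date"], r["account_id"])] += r["cost"]
    target.update(totals)
    return len(new_rows)


if __name__ == "__main__":
    daily_cost = {}
    print(semantic_incremental(prepare(SOURCE_ROWS), daily_cost))  # first run: every row
    print(semantic_incremental(prepare(SOURCE_ROWS), daily_cost))  # rerun: nothing new
```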

The Semantic stage (not to be confused with dbt’s semantic layer feature) introduces business logic, transforming data into aggregated datasets that are directly consumable by BMW’s Cloud Data Hub, internal CLEA dashboards, data APIs, or the In-Console Cloud Assistant (ICCA) chatbot. The QuickSight step further optimizes the data by selecting only the necessary columns, using a column-level lineage solution, and by applying a dynamic date filter with a sliding window so that only relevant hot data is ingested into SPICE, avoiding unused data in dashboards or reports.
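The column selection step amounts to a set operation: given the columns each dashboard actually references, as reported by a column-level lineage tool, keep only those columns of the semantic model. The following Python sketch assumes the lineage output is available as a simple mapping; the dashboard, model, and column names are hypothetical.

```python
# Hypothetical lineage output: dashboard -> columns it references in one semantic model.
DASHBOARD_COLUMNS = {
    "cost_overview": {"usage_date", "account_id", "cost"},
    "service_breakdown": {"usage_date", "service", "cost"},
}

# Hypothetical full column list of a wide semantic model.
MODEL_COLUMNS = ["usage_date", "account_id", "service", "region", "cost", "usage_amount", "tags"]


def columns_for_spice(model_columns, dashboard_columns):
    """Keep only columns used by at least one dashboard, preserving the model's column order."""
    used = set().union(*dashboard_columns.values())
    return [column for column in model_columns if column in used]


if __name__ == "__main__":
    print(columns_for_spice(MODEL_COLUMNS, DASHBOARD_COLUMNS))
```

Preserving the model’s column order keeps the generated dataset definition stable between runs, so an unchanged lineage result does not produce a spurious diff.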

This approach aligns with BMW Group’s broader data strategy, which includes streamlining data access using AWS Lake Formation for fine-grained access control.

Overall, at a high level, we’ve fully automated schema changes, data updates, and testing through GitHub pull requests and dbt commands. This approach enables controlled deployments with robust version control and change management. Continuous testing and monitoring workflows uphold data accuracy, reliability, and quality across transformations, supporting efficient, collaborative model iteration.

Key benefits of the dbt-Athena architecture

To design and manage dbt models effectively, we use a multi-layered approach combined with cost and performance optimizations. In this section, we discuss how our approach has yielded significant benefits in five key areas.

SQL-based, developer-friendly environment

Our team already had strong SQL skills, so dbt’s SQL-centric approach was a natural fit. Instead of learning a new language or framework, developers could immediately start writing transformations in familiar SQL syntax with dbt. This familiarity aligns well with Athena’s SQL interface and, combined with dbt’s added functionality, has increased our team’s productivity.

Behind the scenes, dbt automatically handles synchronization between Amazon Simple Storage Service (Amazon S3), the AWS Glue Data Catalog, and our models. When we need to change a model’s materialization type (for example, from a view to a table), it’s as simple as updating a configuration parameter rather than rewriting code. This flexibility has dramatically reduced our development time and allowed us to focus on building better data models rather than managing infrastructure.
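Switching materialization changes the DDL that runs in Athena, not the model’s SELECT statement. The following Python sketch illustrates this by wrapping the same query either in `CREATE OR REPLACE VIEW` or in an Athena CTAS (`CREATE TABLE ... WITH (...) AS SELECT`) statement that writes Parquet files to Amazon S3. It is a simplified illustration, not the SQL that the dbt-athena adapter actually generates; the model name and S3 location are hypothetical.

```python
def materialize(name, select_sql, materialized, s3_location=None):
    """Return simplified Athena DDL for a model; only the materialization setting differs."""
    if materialized == "view":
        # Nothing is stored: every query against the view re-runs the SELECT on its inputs.
        return f"CREATE OR REPLACE VIEW {name} AS\n{select_sql}"
    if materialized == "table":
        if not s3_location:
            raise ValueError("a table materialization needs an S3 location")
        # CTAS: results are written once as Parquet files in S3 and registered as a table.
        return (
            f"CREATE TABLE {name}\n"
            f"WITH (format = 'PARQUET', external_location = '{s3_location}')\n"
            f"AS\n{select_sql}"
        )
    raise ValueError(f"unsupported materialization in this sketch: {materialized}")


if __name__ == "__main__":
    query = "SELECT usage_date, account_id, sum(cost) AS cost\nFROM prep_cost_usage\nGROUP BY 1, 2"
    print(materialize("sem_daily_cost", query, "view"))
    print(materialize("sem_daily_cost", query, "table", "s3://example-bucket/sem_daily_cost/"))
```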

Agility in modeling and deployment

Documentation is crucial for any data platform’s success. We use dbt’s built-in documentation capabilities and publish the results to GitHub Pages, which creates an accessible, searchable repository of our data models. This documentation includes table schemas, relationships between models, and usage examples, helping team members understand how models interconnect and use them effectively.

We use dbt’s built-in testing capabilities to implement comprehensive data quality checks. These include schema tests that verify column uniqueness, referential integrity, and null constraints, as well as custom SQL tests that validate business logic and data consistency. The testing framework runs automatically on every pull request, validating data transformations at each step of our pipeline. Additionally, dbt’s dependency graph provides a visual representation of how our models interconnect, helping us understand the upstream and downstream impacts of any change before we implement it. When stakeholders want to modify models, they can submit changes through pull requests, which, once approved and merged, automatically trigger the necessary data transformations through our CI/CD pipeline. This streamlined process enabled us to create new data products within days instead of weeks, and it reduced ongoing maintenance work by catching issues early in the development cycle.
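In dbt, the `unique`, `not_null`, and `relationships` generic tests are declared in YAML and compiled into SQL queries that return failing rows; a test passes when that query returns nothing. To show what each check verifies, the Python sketch below mirrors the three tests over in-memory rows. It illustrates the semantics only, not how dbt executes tests, and the model and column names are hypothetical.

```python
# Hypothetical rows from two models: a prepared cost table and the accounts it refers to.
ACCOUNTS = [{"account_id": "111"}, {"account_id": "222"}]
COST_ROWS = [
    {"line_id": 1, "account_id": "111", "cost": 10.5},
    {"line_id": 2, "account_id": "333", "cost": 4.0},   # no matching account
    {"line_id": 2, "account_id": "222", "cost": None},  # duplicate id and missing cost
]


def unique_violations(rows, column):
    """Like dbt's `unique` test: non-null values that occur more than once."""
    counts = {}
    for row in rows:
        value = row[column]
        if value is not None:
            counts[value] = counts.get(value, 0) + 1
    return sorted(value for value, n in counts.items() if n > 1)


def not_null_violations(rows, column):
    """Like dbt's `not_null` test: rows where the column is null."""
    return [row for row in rows if row.get(column) is None]


def relationship_violations(rows, column, parent_rows, parent_column):
    """Like dbt's `relationships` test: non-null values missing from the parent model."""
    parent_values = {row[parent_column] for row in parent_rows}
    return sorted({row[column] for row in rows
                   if row[column] is not None and row[column] not in parent_values})


if __name__ == "__main__":
    print("unique line_id:", unique_violations(COST_ROWS, "line_id"))
    print("not_null cost:", not_null_violations(COST_ROWS, "cost"))
    print("relationships account_id:",
          relationship_violations(COST_ROWS, "account_id", ACCOUNTS, "account_id"))
```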

Athena workgroup separation

We use Athena workgroups to isolate different query patterns based on their execution triggers and purposes. Each workgroup has its own configuration and metric reporting, allowing us to monitor and optimize each one separately. The dbt workgroup handles our scheduled nightly transformations and the on-demand updates triggered by pull requests across our Source, Prepared, and Semantic stages. The dbt-test workgroup executes automated data quality checks during pull request validation and nightly builds. The QuickSight workgroup handles SPICE data ingestion queries, and the Ad-hoc workgroup supports interactive data exploration by our team.

Each workgroup can be configured with specific data usage quotas, enabling teams to implement granular governance policies. This separation provides several benefits: it enables clear cost allocation, provides isolated monitoring of query patterns across different use cases, and helps enforce data governance through custom workgroup settings. Per-workgroup Amazon CloudWatch monitoring helps us track usage patterns, identify query performance issues, and adjust configurations based on actual needs.
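Workgroups like these can be created from code. The Python sketch below builds keyword arguments for the Athena `CreateWorkGroup` API (`boto3.client("athena").create_work_group`) for four workgroups matching the ones described above. `BytesScannedCutoffPerQuery` cancels any single query that scans more than the limit, and `PublishCloudWatchMetricsEnabled` turns on per-workgroup CloudWatch metrics. The workgroup identifiers, byte limits, tag, and S3 results bucket are hypothetical.

```python
GIB = 1024 ** 3
MIN_CUTOFF_BYTES = 10_000_000  # Athena's minimum per-query cutoff (10 MB)

# Hypothetical per-query scan limits for each workload.
WORKGROUP_LIMITS = {
    "dbt": 2048 * GIB,
    "dbt-test": 256 * GIB,
    "quicksight": 1024 * GIB,
    "adhoc": 100 * GIB,
}


def workgroup_request(name, cutoff_bytes, results_bucket):
    """Build keyword arguments for boto3's athena.create_work_group()."""
    if cutoff_bytes < MIN_CUTOFF_BYTES:
        raise ValueError("the per-query cutoff must be at least 10 MB")
    return {
        "Name": name,
        "Description": f"Queries from the {name} workload",
        "Configuration": {
            "ResultConfiguration": {"OutputLocation": f"s3://{results_bucket}/{name}/"},
            "EnforceWorkGroupConfiguration": True,     # clients cannot override these settings
            "PublishCloudWatchMetricsEnabled": True,   # per-workgroup metrics in CloudWatch
            "BytesScannedCutoffPerQuery": cutoff_bytes,
        },
        # A tag per workload can support cost allocation reporting.
        "Tags": [{"Key": "workload", "Value": name}],
    }


if __name__ == "__main__":
    for name, limit in WORKGROUP_LIMITS.items():
        print(workgroup_request(name, limit, "example-athena-results"))
```

Passing each dictionary to `boto3.client("athena").create_work_group(**request)` would create the workgroup. The sketch stops short of calling AWS so that it runs without credentials.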

Using QuickSight SPICE

QuickSight SPICE (Super-fast, Parallel, In-memory Calculation Engine) provides powerful in-memory processing capabilities that we’ve optimized for our specific use cases. Rather than loading entire tables into SPICE, we create specialized views on top of our materialized semantic models. These views are carefully crafted to include only the necessary columns, relevant metadata joins, and appropriate time filtering, so that only recent data is available in dashboards.
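A SPICE-facing view of this kind could be generated as in the following Python sketch. It selects a fixed column list from a semantic model and keeps only a sliding window of recent days using Athena’s `date_add` and `current_date` functions; because the filter is evaluated at query time, each ingestion automatically sees the latest window. The view, model, and column names and the 90-day default are hypothetical, and the metadata joins mentioned above are omitted.

```python
def spice_view_sql(view_name, source_model, columns, date_column, window_days=90):
    """Build a view over a semantic model that exposes only needed columns and recent days."""
    if not columns:
        raise ValueError("at least one column is required")
    if window_days < 1:
        raise ValueError("window_days must be at least 1")
    column_list = ",\n    ".join(columns)
    # The date filter is evaluated when the view is queried, so the window slides forward.
    return (
        f"CREATE OR REPLACE VIEW {view_name} AS\n"
        f"SELECT\n    {column_list}\n"
        f"FROM {source_model}\n"
        f"WHERE {date_column} >= date_add('day', -{window_days}, current_date)"
    )


if __name__ == "__main__":
    print(spice_view_sql("qs_cost_overview", "sem_daily_cost_by_account",
                         ["usage_date", "account_id", "cost"], "usage_date"))
```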

We’ve implemented a hybrid refresh strategy for these SPICE datasets: daily incremental updates keep the data fresh, and weekly full refreshes maintain data consistency. This approach strikes a balance between data freshness and processing efficiency. The result is responsive dashboards that maintain high performance while keeping processing costs under control.
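The hybrid schedule reduces to a simple rule, sketched below in Python: an incremental refresh on most days and a full refresh once a week. `FULL_REFRESH` and `INCREMENTAL_REFRESH` are the refresh types used by the QuickSight API; choosing Sunday as the full-refresh day is an assumption for illustration.

```python
from datetime import date, timedelta

# Hypothetical full-refresh day; date.weekday() counts Monday as 0 and Sunday as 6.
FULL_REFRESH_WEEKDAY = 6


def refresh_type(day):
    """Return the SPICE refresh type to run on a given day."""
    return "FULL_REFRESH" if day.weekday() == FULL_REFRESH_WEEKDAY else "INCREMENTAL_REFRESH"


def plan(start, days=7):
    """Refresh plan for `days` consecutive days beginning at `start`."""
    return [(start + timedelta(days=i), refresh_type(start + timedelta(days=i))) for i in range(days)]


if __name__ == "__main__":
    for day, kind in plan(date.today()):
        print(day.isoformat(), kind)
```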

Scalability and cost-efficiency

Athena’s serverless architecture eliminates manual infrastructure management and scales automatically with query demand. Because costs are based on the amount of data scanned by queries, optimizing queries to scan as little data as possible directly reduces our costs. Our dbt model structure makes use of Athena’s distributed query execution, enabling parallel processing across data partitions. By implementing effective partitioning strategies and using the Parquet file format, we minimize the amount of data scanned while maximizing query performance.
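Because per-query pricing is driven by bytes scanned, the effect of partition pruning and columnar Parquet reads can be estimated with simple arithmetic. The Python sketch below assumes USD 5 per TB scanned, billed in whole megabytes with a 10 MB minimum per query, which matches Athena’s published on-demand pricing in many Regions (verify against the current pricing page). The table size, partition count, and column counts are illustrative, not CLEA measurements.

```python
# Assumed on-demand pricing; verify the rate and minimum against current Athena pricing.
PRICE_PER_TB_USD = 5.0
BYTES_PER_TB = 10 ** 12   # assumption: decimal terabytes
BYTES_PER_MB = 10 ** 6
MIN_BILLED_MB = 10


def query_cost_usd(bytes_scanned):
    """Cost of one query: scanned bytes rounded up to whole MB, with a 10 MB minimum."""
    billed_mb = max(-(-bytes_scanned // BYTES_PER_MB), MIN_BILLED_MB)  # ceiling division
    return billed_mb * BYTES_PER_MB / BYTES_PER_TB * PRICE_PER_TB_USD


def pruned_bytes(total_bytes, partitions_total, partitions_read, columns_total, columns_read):
    """Rough scan size when only some partitions and some Parquet columns are read.

    Assumes bytes are spread evenly across partitions and columns, which real tables rarely are.
    """
    return int(total_bytes * (partitions_read / partitions_total) * (columns_read / columns_total))


if __name__ == "__main__":
    table_bytes = 15 * BYTES_PER_TB  # illustrative table size
    full_scan = query_cost_usd(table_bytes)
    one_day_20_columns = query_cost_usd(pruned_bytes(table_bytes, 365, 1, 400, 20))
    print(f"full scan: ${full_scan:.2f}, pruned: ${one_day_20_columns:.4f}")
```

The uniform-distribution assumption makes this an order-of-magnitude estimate; the point is that partition filters and column selection multiply together, so combining both cuts scanned bytes far more than either alone.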

Our architecture offers flexibility in how we materialize data: as views, full tables, or incremental tables. With dbt’s incremental models and our partitioning strategy, we process only new or changed data instead of entire datasets. This approach has proven highly effective: we’ve observed significant reductions in both the volume of data processed and the volume of data scanned, particularly in our QuickSight workgroup.

The effectiveness of these optimizations, implemented at the end of 2023, is visible in the following diagram, which shows costs by Athena workgroup.

The workgroups are shown as follows:

Green (QuickSight): Shows reduced data scanning after the optimization.

Light blue (Ad-hoc): Varies based on analysis needs.

Dark blue (dbt): Maintains consistent processing patterns.

Orange (dbt-test): Shows regular, efficient test execution.

The increase in dbt workload costs correlates directly with the decrease in QuickSight costs. This reflects our architectural shift from complex views queried by the QuickSight workgroup (which masked query complexity but led to repeated computations) to materializing these transformations with dbt. Although this increased the dbt workload, overall cost-efficiency improved significantly because the materialized tables eliminated redundant computations in QuickSight. This demonstrates how our optimization strategies handle growing data volumes while achieving a net cost reduction through efficient data materialization patterns.
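The trade-off described above can be made concrete with a back-of-the-envelope model: a view re-scans its raw inputs on every read, whereas a materialized table pays the raw scan once per build and then serves reads from a smaller result. The Python sketch below compares bytes scanned for both options and computes the break-even number of reads. All sizes and read counts are hypothetical, and the model ignores differences in compute per byte.

```python
def bytes_scanned_view(raw_bytes, reads):
    """A view re-runs its query against the raw data on every read."""
    return raw_bytes * reads


def bytes_scanned_materialized(raw_bytes, result_bytes, reads, builds=1):
    """Each build scans the raw data once; each read scans only the materialized result."""
    return raw_bytes * builds + result_bytes * reads


def break_even_reads(raw_bytes, result_bytes, builds=1):
    """Smallest number of reads for which materializing scans fewer bytes than the view."""
    if result_bytes >= raw_bytes:
        raise ValueError("materializing only pays off when the result is smaller than its input")
    # Solve raw*builds + result*reads < raw*reads for the smallest integer `reads`.
    return raw_bytes * builds // (raw_bytes - result_bytes) + 1


if __name__ == "__main__":
    raw, result, reads_per_day = 10 ** 12, 2 * 10 ** 10, 50  # hypothetical daily figures
    print("view bytes/day:", bytes_scanned_view(raw, reads_per_day))
    print("materialized bytes/day:", bytes_scanned_materialized(raw, result, reads_per_day))
    print("break-even reads/day:", break_even_reads(raw, result))
```

When the materialized result is much smaller than its inputs, materialization pays off after only a couple of reads per build, which is why shifting scans from the QuickSight workgroup to the dbt workgroup can lower the total.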

Conclusion

Our data architecture uses dbt and Athena to provide a scalable, cost-efficient, and flexible framework for building and managing data transformation pipelines. Athena’s ability to query data directly in Amazon S3 removes the need to move or copy data into a separate data warehouse, and its serverless model, together with dbt’s incremental processing, minimizes both operational overhead and processing costs. Given our team’s strong SQL expertise, expressing these transformations in SQL through dbt and Athena was a natural choice, enabling rapid model development and deployment. With dbt’s automatic documentation and lineage, troubleshooting and identifying data issues is simpler, and the system’s modularity allows quick adjustments to meet evolving business needs.

Getting started with this architecture is quick and easy: all that’s needed is the dbt-core and dbt-athena libraries, and Athena itself requires no infrastructure to provision, because it’s a fully serverless service with seamless integration with Amazon S3. This architecture is ideal for teams looking to rapidly prototype, test, and deploy data models while optimizing resource usage, accelerating deployment, and providing high-quality, accurate data processing.

For those interested in a managed solution from dbt, see From data lakes to insights: dbt adapter for Amazon Athena now supported in dbt Cloud.

About the Authors

Philipp Karg is a Lead FinOps Engineer at BMW Group with a strong background in data engineering, AI, and FinOps. He focuses on driving cloud efficiency initiatives and fostering a cost-aware culture within the company to use the cloud sustainably.

Selman Ay is a Data Architect specializing in end-to-end data solutions, architecture, and AI on AWS. Outside of work, he enjoys playing tennis and engaging in outdoor activities.

Cizer Pereira is a Senior DevOps Architect at AWS Professional Services. He works closely with AWS customers to accelerate their journey to the cloud. He has a deep passion for cloud-based and DevOps solutions, and in his free time, he also enjoys contributing to open source projects.
