The oil and gas industry relies heavily on seismic data to discover and extract hydrocarbons safely and efficiently. However, processing and analyzing large volumes of seismic data can be a daunting task, requiring significant computational resources and expertise.

Equinor, a leading energy company, has used the Databricks Data Intelligence Platform to optimize one of its exploratory seismic data transformation workflows, achieving significant time and cost savings while improving data observability.

Equinor’s goal was to enhance one of its 4D seismic interpretation workflows, focusing on automating and optimizing the detection and classification of reservoir changes over time. This process supports identifying drilling targets, reducing the risk of costly dry wells, and promoting environmentally responsible drilling practices. Key business expectations included:

Optimal drilling targets: Improve target identification for the large number of new wells to be drilled in the coming decades.

Faster, cost-effective analysis: Reduce the time and cost of 4D seismic analysis through automation.

Deeper reservoir insights: Integrate more subsurface data to unlock improved interpretations and decision-making.

Understanding Seismic Data

Seismic Cube: 3D models of the subsurface

Seismic data acquisition involves deploying air guns to generate sound waves, which reflect off subsurface structures and are captured by hydrophones. These sensors, located on streamers towed by seismic vessels or placed on the seafloor, collect raw data that is later processed to create detailed 3D images of the subsurface geology.

File Format: SEG-Y (Society of Exploration Geophysicists) – an industry-specific file format for storing seismic data, developed in the 1970s and optimized for tape storage.

Data Representation: The processed data is stored as 3D cubes, offering a comprehensive view of subsurface structures.
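To make the cube representation concrete, here is a minimal sketch of a 3D cube held as a flat buffer of traces, which mirrors the trace-after-trace layout that SEG-Y inherited from tape. The `SeismicCube` class, its field names, and the inline-major ordering are assumptions chosen for illustration; they are not taken from Equinor's pipeline or from any library.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SeismicCube:
    """A toy seismic cube: amplitudes indexed by inline, crossline, and sample."""
    n_inlines: int
    n_crosslines: int
    n_samples: int
    amplitudes: List[float]  # flat buffer, stored trace after trace

    def _offset(self, inline: int, crossline: int) -> int:
        # Assumed layout: inline-major, so all crosslines of inline 0 come first.
        return (inline * self.n_crosslines + crossline) * self.n_samples

    def trace(self, inline: int, crossline: int) -> List[float]:
        """Return the vertical trace recorded at one surface position."""
        start = self._offset(inline, crossline)
        return self.amplitudes[start:start + self.n_samples]

    def time_slice(self, sample: int) -> List[List[float]]:
        """Return a horizontal 2D slice (inline x crossline) at one sample index."""
        return [
            [self.amplitudes[self._offset(il, xl) + sample]
             for xl in range(self.n_crosslines)]
            for il in range(self.n_inlines)
        ]


# Synthetic data: 2 inlines x 3 crosslines x 4 samples, values 0..23.
cube = SeismicCube(2, 3, 4, [float(v) for v in range(24)])
print(cube.trace(1, 2))     # the last trace in the cube
print(cube.time_slice(0))   # first sample of every trace
```

Reading one trace touches a contiguous run of the buffer, while a horizontal slice has to visit every trace. That asymmetry is one reason a trace-oriented format can be awkward for cube-wide analytics.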

Fig. 1: Seismic survey – acquiring seismic data. Raw data is then processed into 3D cubes. Retrieved 15-06-2015. Source: “Specificity of Geotechnical Measurements and Practice of Polish Offshore Operations”, Krzysztof Wróbel, Bogumił Łączyński, The International Journal on Marine Navigation and Safety of Sea Transportation, volume 9, number 4, December 2015

Seismic Horizons: Mapping Geological Boundaries

Seismic horizons are interpretations of seismic data, representing continuous surfaces within the subsurface. These horizons indicate geological boundaries, tied to changes in rock properties or even fluid content. By analyzing the reflections of seismic waves at these boundaries, geologists can identify key subsurface features.

File Format: CSV – commonly used for storing interpreted seismic horizon data.

Data Representation: Horizons are stored as 2D surfaces.
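As an illustration of a horizon as a 2D surface, the sketch below parses a small CSV into a mapping from grid position to a vertical value. The column names (`inline`, `crossline`, `z`) and the sample rows are hypothetical; real horizon exports vary by interpretation tool, and `z` may be a depth or a travel time depending on the survey.

```python
import csv
import io
from typing import Dict, Tuple

# Hypothetical horizon export: one row per (inline, crossline) position.
HORIZON_CSV = """inline,crossline,z
100,200,1502.5
100,201,1503.0
101,200,1498.0
101,201,1499.5
"""


def load_horizon(text: str) -> Dict[Tuple[int, int], float]:
    """Parse horizon rows into a mapping from (inline, crossline) to z."""
    reader = csv.DictReader(io.StringIO(text))
    return {
        (int(row["inline"]), int(row["crossline"])): float(row["z"])
        for row in reader
    }


horizon = load_horizon(HORIZON_CSV)
print(horizon[(101, 200)])  # z value of the surface at one grid position
```

Each grid position carries exactly one value, which is what makes a horizon a surface rather than a volume.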

Fig. 2: An example of two seismic horizons. From Open Inventor Toolkit/Seismic Horizon (Height Field)

Challenges with the Existing Pipeline

The existing seismic data pipeline processes data to generate the following key outputs:

4D Seismic Difference Cube: Tracks changes over time by comparing two seismic cubes of the same physical area, typically acquired months or years apart.

4D Seismic Difference Maps: These maps contain attributes or features from the 4D seismic cubes to highlight specific changes in the seismic data, aiding reservoir analysis.
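The two outputs can be sketched in a few lines, assuming both surveys are already aligned on the same grid (in practice that alignment is a substantial step of its own). The function names are illustrative, and RMS amplitude over a sample window is only one common choice of map attribute; the source does not say which attributes Equinor computes.

```python
import math
from typing import List

Cube = List[List[List[float]]]  # indexed as [inline][crossline][sample]


def difference_cube(base: Cube, monitor: Cube) -> Cube:
    """Subtract the base survey from the monitor survey, sample by sample."""
    return [
        [[m - b for b, m in zip(base_trace, mon_trace)]
         for base_trace, mon_trace in zip(base_line, mon_line)]
        for base_line, mon_line in zip(base, monitor)
    ]


def rms_map(cube: Cube, start: int, stop: int) -> List[List[float]]:
    """Collapse samples [start, stop) of each trace into one RMS amplitude."""
    return [
        [math.sqrt(sum(v * v for v in trace[start:stop]) / (stop - start))
         for trace in line]
        for line in cube
    ]


# Two synthetic 1 x 2 x 4 surveys; only the second trace changes over time.
base = [[[1.0, 2.0, 3.0, 4.0], [1.0, 1.0, 1.0, 1.0]]]
monitor = [[[1.0, 2.0, 3.0, 4.0], [1.0, 4.0, 5.0, 1.0]]]

diff = difference_cube(base, monitor)
amplitude_map = rms_map(diff, 1, 3)
print(amplitude_map)  # the unchanged trace maps to 0.0, the changed one does not
```

The cube keeps the full vertical detail of the change, while the map reduces each trace to a single number that can be displayed as a 2D image.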

However, several challenges limit the efficiency and scalability of the existing pipeline:

Suboptimal Distributed Processing: Relies on multiple standalone Python jobs running in parallel on single-node clusters, leading to inefficiencies.

Limited Resilience: Prone to failures and lacks mechanisms for error tolerance or automated recovery.

Lack of Horizontal Scalability: Requires high-configuration nodes with substantial memory (e.g., 112 GB), driving up costs.

High Development and Maintenance Effort: Managing and troubleshooting the pipeline demands significant engineering resources.

Proposed Solution Architecture

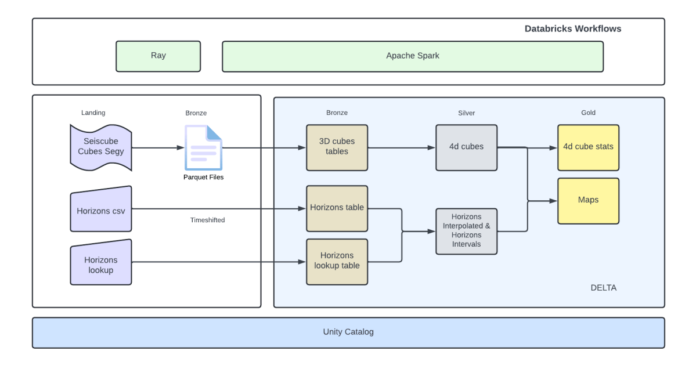

To address these challenges, we re-architected the pipeline as a distributed solution using Ray and Apache Spark™ governed by Unity Catalog on the Databricks Platform. This approach significantly improved scalability, resilience, and cost efficiency.

Fig. 3: Proposed Architecture Diagram

We used the following technologies on the Databricks Platform to implement the solution:

Apache Spark™: An open source framework for large-scale data processing and analytics, ensuring efficient and scalable computation.

Databricks Workflows: For orchestrating data engineering, data science, and analytics tasks.

Delta Lake: An open source storage layer that ensures reliability through ACID transactions, scalable metadata handling, and unified batch and streaming data processing. It serves as the default storage format on Databricks.

Ray: A high-performance distributed computing framework, used to scale Python applications and enable distributed processing of SEG-Y files by leveraging SegyIO and existing processing logic.

SegyIO: A Python library for processing SEG-Y files, enabling seamless handling of seismic data.
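The fan-out pattern behind distributed processing of a large cube can be sketched as follows: split the cube into inline ranges, process each range independently, then collect the results. This is a simplified stand-in and not the pipeline's code. It uses Python's standard `ThreadPoolExecutor` in place of Ray so the example runs anywhere, and `split_inlines` and `process_chunk` are hypothetical helpers.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def split_inlines(n_inlines: int, chunk_size: int) -> List[Tuple[int, int]]:
    """Split [0, n_inlines) into half-open inline ranges of at most chunk_size."""
    return [
        (start, min(start + chunk_size, n_inlines))
        for start in range(0, n_inlines, chunk_size)
    ]


def process_chunk(inline_range: Tuple[int, int]) -> Tuple[int, int, int]:
    """Hypothetical per-chunk work; here it only counts the inlines it was given."""
    start, stop = inline_range
    return start, stop, stop - start


chunks = split_inlines(n_inlines=10, chunk_size=4)

# ThreadPoolExecutor stands in for a cluster scheduler in this sketch.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(process_chunk, chunks))

print(results)  # one (start, stop, count) tuple per chunk, in submission order
```

Because each chunk needs only its own slice of the data, no single worker has to hold the whole cube in memory, which is the property that lets smaller nodes replace one large-memory machine.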

Key Benefits:

This re-architected seismic data pipeline addressed inefficiencies in the existing pipeline while introducing scalability, resilience, and cost optimization. The following are the key benefits realized:

Significant Time Savings: Eliminated duplicate data processing by persisting intermediate results (e.g., 3D and 4D cubes), enabling reprocessing of only the necessary datasets.

Cost Efficiency: Reduced costs by up to 96% on specific calculation steps, such as map generation.

Failure-Resilient Design: Leveraged Apache Spark’s distributed processing framework to introduce fault tolerance and automated task recovery.

Horizontal Scalability: Achieved horizontal scalability to overcome the limitations of the existing solution, ensuring efficient scaling as data volume grows.

Standardized Data Format: Adopted an open, standardized data format to streamline downstream processing, simplify analytics, improve data sharing, and enhance governance and quality.
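The time savings from persisting intermediate results come from a simple idea: key each result by the step and its inputs, and recompute only when no stored result exists for that key. The sketch below illustrates the idea with JSON files in a temporary directory as a stand-in for a persistent table store; `cache_key`, `run_cached`, and the survey names are hypothetical and do not describe the actual implementation.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List


def cache_key(step: str, params: Dict[str, object]) -> str:
    """Derive a stable key from the step name and its parameters."""
    payload = json.dumps({"step": step, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_cached(store: Path, step: str, params: Dict[str, object],
               compute: Callable[[], List[float]]) -> List[float]:
    """Return the persisted result for this step if present, else compute and persist it."""
    path = store / f"{cache_key(step, params)}.json"
    if path.exists():
        return json.loads(path.read_text())
    result = compute()
    path.write_text(json.dumps(result))
    return result


calls = []  # records how often the expensive step actually runs


def build_difference() -> List[float]:
    calls.append("run")
    return [0.0, 3.0, 4.0, 0.0]


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp)
    params = {"base": "survey_a", "monitor": "survey_b"}  # hypothetical names
    first = run_cached(store, "difference_cube", params, build_difference)
    second = run_cached(store, "difference_cube", params, build_difference)

print(len(calls))  # the expensive step ran once; the second call read the stored result
```

Changing any parameter changes the key, so only the datasets whose inputs actually changed are reprocessed.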

Conclusion

This project highlights the huge potential of modern data platforms like Databricks in transforming traditional seismic data processing workflows. By integrating tools such as Ray, Apache Spark, and Delta Lake, and leveraging Databricks’ platform, we achieved a solution that delivers measurable benefits:

Efficiency Gains: Faster data processing and fault tolerance.

Cost Reductions: A more economical approach to seismic data analysis.

Improved Maintainability: Simplified pipeline architecture and standardized technology stacks reduced code complexity and development overhead.

The redesigned pipeline not only optimized seismic workflows but also set a scalable and robust foundation for future enhancements. This serves as a valuable model for other organizations aiming to modernize their seismic data processing while driving similar business outcomes.

Acknowledgments

Special thanks to the Equinor Data Engineering, Data Science and Analytics Communities, and Equinor Research and Development Teams for their contributions to this initiative.

“Excellent experience working with Professional Services – very high technical competence and communication skills. Significant achievements in quite a short time”

– Anton Eskov
