This visitor publish was co-authored with Kostas Diamantis from Skroutz.

At Skroutz, we’re keen about our product, and it’s at all times our prime precedence. We’re consistently working to enhance and evolve it, supported by a big and proficient crew of software program engineers. Our product’s steady innovation and evolution result in frequent updates, usually necessitating modifications and additions to the schemas of our operational databases.

Once we determined to construct our personal information platform to satisfy our information wants, akin to supporting reporting, enterprise intelligence (BI), and decision-making, the principle problem—and in addition a strict requirement—was to verify it wouldn’t block or delay our product improvement.

We selected Amazon Redshift to advertise information democratization, empowering groups throughout the group with seamless entry to information, enabling quicker insights and extra knowledgeable decision-making. This alternative helps a tradition of transparency and collaboration, as information turns into available for evaluation and innovation throughout all departments.

Nonetheless, maintaining with schema modifications from our operational databases, whereas updating the information warehouse with out consistently coordinating with improvement groups, delaying releases, or risking information loss, grew to become a brand new problem for us.

On this publish, we share how we dealt with real-time schema evolution in Amazon Redshift with Debezium.

Answer overview

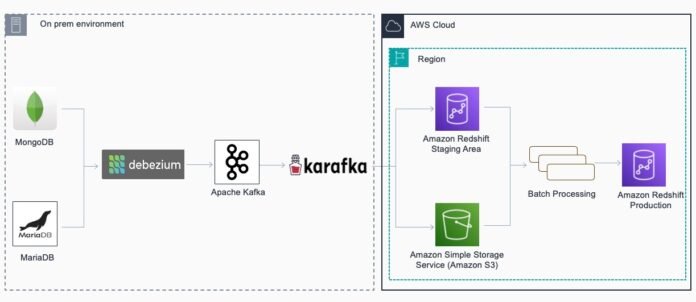

Most of our information resides in our operational databases, akin to MariaDB and MongoDB. Our strategy entails utilizing the change information seize (CDC) approach, which routinely handles the schema evolution of the information shops being captured. For this, we used Debezium together with a Kafka cluster. This resolution allows schema modifications to be propagated with out disrupting the Kafka shoppers.

Nonetheless, dealing with schema evolution in Amazon Redshift grew to become a bottleneck, prompting us to develop a technique to handle this problem. It’s essential to notice that, in our case, modifications in our operational databases primarily contain including new columns slightly than breaking modifications like altering information varieties. Due to this fact, we’ve got applied a semi-manual course of to resolve this subject, together with a compulsory alerting mechanism to inform us of any schema modifications. This two-step course of consists of dealing with schema evolution in actual time and dealing with information updates in an asynchronous guide step. The next architectural diagram illustrates a hybrid deployment mannequin, integrating each on-premises and cloud-based elements.

The information movement begins with information from MariaDB and MongoDB, captured utilizing Debezium for CDC in close to real-time mode. The captured information is streamed to a Kafka cluster, the place Kafka shoppers (constructed on the Ruby Karafka framework) learn and write them to the staging space, both in Amazon Redshift or Amazon Easy Storage Service (Amazon S3). From the staging space, DataLoaders promote the information to manufacturing tables in Amazon Redshift. At this stage, we apply the slowly altering dimension (SCD) idea to those tables, utilizing Sort 7 for many of them.

In information warehousing, an SCD is a dimension that shops information, and although it’s typically steady, it would change over time. Numerous methodologies deal with the complexities of SCD administration. SCD Sort 7 locations each the surrogate key and the pure key into the actual fact desk. This enables the person to pick out the suitable dimension data based mostly on:

The first efficient date on the actual fact document

The latest or present info

Different dates related to the actual fact document

Afterwards, analytical jobs are run to create reporting tables, enabling BI and reporting processes. The next diagram gives an instance of the information modeling course of from a staging desk to a manufacturing desk.

The structure depicted within the diagram reveals solely our CDC pipeline, which fetches information from our operational databases and doesn’t embrace different pipelines, akin to these for fetching information by way of APIs, scheduled batch processes, and lots of extra. Additionally notice that our conference is that dw_* columns are used to catch SCD metadata info and different metadata generally. Within the following sections, we focus on the important thing elements of the answer in additional element.

Actual-time workflow

For the schema evolution half, we give attention to the column dw_md_missing_data, which captures schema evolution modifications in close to actual time that happen within the supply databases. When a brand new change is produced to the Kafka cluster, the Kafka shopper is accountable for writing this transformation to the staging desk in Amazon Redshift. For instance, a message produced by Debezium to the Kafka cluster could have the next construction when a brand new store entity is created:

{

“earlier than”: null,

“after”: {

“id”: 1,

“identify”: “shop1”,

“state”: “hidden”

},

“supply”: {

…

“ts_ms”: “1704114000000”,

…

},

“op”: “c”,

…

}

The Kafka shopper is accountable for making ready and executing the SQL INSERT assertion:

INSERT INTO staging.outlets (

id,

“identify”,

state,

dw_md_changed_at,

dw_md_operation,

dw_md_missing_data

)

VALUES

(

1,

‘shop1’,

‘hidden’,

‘2024-01-01 13:00:00’,

‘create’,

NULL

)

;

After that, let’s say a brand new column is added to the supply desk known as new_column, with the worth new_value.

The brand new message produced to the Kafka cluster could have the next format:

{

“earlier than”: { … },

“after”: {

“id”: 1,

“identify”: “shop1”,

“state”: “hidden”,

“new_column”: “new_value”

},

“supply”: {

…

“ts_ms”: “1704121200000”

…

},

“op”: “u”

…

}

Now the SQL INSERT assertion executed by the Kafka shopper might be as follows:

INSERT INTO staging.outlets (

id,

“identify”,

state,

dw_md_changed_at,

dw_md_operation,

dw_md_missing_data

)

VALUES

(

1,

‘shop1’,

‘hidden’,

‘2024-01-01 15:00:00’,

‘replace’,

JSON_PARSE(‘{“new_column”: “new_value”}’) /* <– test this */

)

;

The patron performs an INSERT as it will for the identified schema, and something new is added to the dw_md_missing_data column as key-value JSON. After the information is promoted from the staging desk to the manufacturing desk, it’s going to have the next construction.

At this level, the information movement continues working with none information loss or the necessity for communication with groups accountable for sustaining the schema within the operational databases. Nonetheless, this information won’t be simply accessible for the information shoppers, analysts, or different personas. It’s price noting that dw_md_missing_data is outlined as a column of the SUPER information sort, which was launched in Amazon Redshift to retailer semistructured information or paperwork as values.

Monitoring mechanism

To trace new columns added to a desk, we’ve got a scheduled course of that runs weekly. This course of checks for tables in Amazon Redshift with values within the dw_md_missing_data column and generates a listing of tables requiring guide motion to make this information out there by way of a structured schema. A notification is then despatched to the crew.

Guide remediation steps

Within the aforementioned instance, the guide steps to make this column out there could be:

Add the brand new columns to each staging and manufacturing tables:

ALTER TABLE staging.outlets ADD COLUMN new_column varchar(255);

ALTER TABLE manufacturing.outlets ADD COLUMN new_column varchar(255);

Replace the Kafka shopper’s identified schema. On this step, we simply want so as to add the brand new column identify to a easy array record. For instance:

class ShopsConsumer < ApplicationConsumer

SOURCE_COLUMNS = (

‘id’,

‘identify’,

‘state’,

‘new_column’ # this one is the brand new column

)

def eat

# Ruby code for:

# 1. information cleansing

# 2. information transformation

# 3. preparation of the SQL INSERT assertion

RedshiftClient.conn.exec <<~SQL

/*

generated SQL INSERT assertion

*/

SQL

finish

finish

Replace the DataLoader’s SQL logic for the brand new column. A DataLoader is accountable for selling the information from the staging space to the manufacturing desk.

class DataLoader::ShopsTable < DataLoader::Base

class << self

def load

RedshiftClient.conn.exec <<~SQL

CREATE TABLE staging.shops_new (LIKE staging.outlets);

SQL

RedshiftClient.conn.exec <<~SQL

/*

We transfer the information to a brand new desk as a result of in staging.outlets

the Kafka shopper will proceed add new rows

*/

ALTER TABLE staging.shops_new APPEND FROM staging.outlets;

SQL

RedshiftClient.conn.exec <<~SQL

BEGIN;

/*

SQL to deal with

* information deduplications and many others

* extra transformations

* all the mandatory operations with a purpose to apply the information modeling we’d like for this desk

*/

INSERT INTO manufacturing.outlets (

id,

identify,

state,

new_column, /* –> this one is the brand new column <– */

dw_start_date,

dw_end_date,

dw_current,

dw_md_changed_at,

dw_md_operation,

dw_md_missing_data

)

SELECT

id,

identify,

state,

new_column, /* –> this one is the brand new column <– */

/*

right here is the logic to use the information modeling (sort 1,2,3,4…7)

*/

FROM

staging.shops_new

;

DROP TABLE staging.shops_new;

END TRANSACTION;

SQL

finish

finish

finish

Switch the information that has been loaded within the meantime from the dw_md_missing_data SUPER column to the newly added column after which clear up. On this step, we simply must run an information migration like the next:

BEGIN;

/*

Switch the information from the `dw_md_missing_data` to the corresponding column

*/

UPDATE manufacturing.outlets

SET new_column = dw_md_missing_data.new_column::varchar(255)

WHERE dw_md_missing_data.new_column IS NOT NULL;

/*

Clear up dw_md_missing_data column

*/

UPDATE manufacturing.outlets

SET dw_md_missing_data = NULL

WHERE dw_md_missing_data IS NOT NULL;

END TRANSACTION;

To carry out the previous operations, we be sure that nobody else performs modifications to the manufacturing.outlets desk as a result of we would like no new information to be added to the dw_md_missing_data column.

Conclusion

The answer mentioned on this publish enabled Skroutz to handle schema evolution in operational databases whereas seamlessly updating the information warehouse. This alleviated the necessity for fixed improvement crew coordination and eliminated dangers of information loss throughout releases, finally fostering innovation slightly than stifling it.

Because the migration of Skroutz to the AWS Cloud approaches, discussions are underway on how the present structure may be tailored to align extra carefully with AWS-centered rules. To that finish, one of many modifications being thought of is Amazon Redshift streaming ingestion from Amazon Managed Streaming for Apache Kafka (Amazon MSK) or open supply Kafka, which can make it doable for Skroutz to course of massive volumes of streaming information from a number of sources with low latency and excessive throughput to derive insights in seconds.

Should you face comparable challenges, focus on with an AWS consultant and work backward out of your use case to supply essentially the most appropriate resolution.

Concerning the authors

Konstantina Mavrodimitraki is a Senior Options Architect at Amazon Internet Providers, the place she assists clients in designing scalable, strong, and safe programs in international markets. With deep experience in information technique, information warehousing, and massive information programs, she helps organizations remodel their information landscapes. A passionate technologist and folks individual, Konstantina loves exploring rising applied sciences and helps the native tech communities. Moreover, she enjoys studying books and taking part in together with her canine.

Konstantina Mavrodimitraki is a Senior Options Architect at Amazon Internet Providers, the place she assists clients in designing scalable, strong, and safe programs in international markets. With deep experience in information technique, information warehousing, and massive information programs, she helps organizations remodel their information landscapes. A passionate technologist and folks individual, Konstantina loves exploring rising applied sciences and helps the native tech communities. Moreover, she enjoys studying books and taking part in together with her canine.

Kostas Diamantis is the Head of the Knowledge Warehouse at Skroutz firm. With a background in software program engineering, he transitioned into information engineering, utilizing his technical experience to construct scalable information options. Obsessed with data-driven decision-making, he focuses on optimizing information pipelines, enhancing analytics capabilities, and driving enterprise insights.

Kostas Diamantis is the Head of the Knowledge Warehouse at Skroutz firm. With a background in software program engineering, he transitioned into information engineering, utilizing his technical experience to construct scalable information options. Obsessed with data-driven decision-making, he focuses on optimizing information pipelines, enhancing analytics capabilities, and driving enterprise insights.