AWS Glue 5.0 now supports Full-Table Access (FTA) control in Apache Spark based on your policies defined in AWS Lake Formation. This new feature enables read and write operations from your AWS Glue 5.0 Spark jobs on Lake Formation registered tables when the job role has full table access. This level of control is ideal for use cases that need to comply with security regulations at the table level. In addition, you can now use Spark capabilities including Resilient Distributed Datasets (RDDs), custom libraries, and user-defined functions (UDFs) with Lake Formation tables. This capability enables data definition and data manipulation (DDL and DML) operations, including CREATE, ALTER, DELETE, UPDATE, and MERGE INTO statements, on Apache Hive and Iceberg tables from within the same Apache Spark application. Data teams can run complex, interactive Spark applications through Amazon SageMaker Unified Studio in compatibility mode while maintaining the table-level security boundaries provided by Lake Formation. This simplifies security and governance of your data lakes.

In this post, we show you how to enforce FTA control on AWS Glue 5.0 through Lake Formation permissions.

How access control works on AWS Glue

AWS Glue 5.0 supports two features that achieve access control through Lake Formation:

Full-Table Access (FTA) control

Fine-grained access control (FGAC)

At a high level, FTA supports access control at the table level, whereas FGAC can support access control at the table, row, column, and cell levels. To support more granular access control, FGAC uses a tight security model based on user/system space isolation. To maintain this extra level of security, only a subset of Spark core classes are allowlisted. Additionally, FGAC requires extra setup, such as passing the --enable-lakeformation-fine-grained-access parameter to the job. For more information about FGAC, see Enforce fine-grained access control on data lake tables using AWS Glue 5.0 integrated with AWS Lake Formation.

This level of granular control is essential for organizations that need to comply with data governance or security regulations, or that handle sensitive data. However, it's excessive for organizations that only need table-level access control. AWS Glue introduced FTA to give customers a way to enforce table-level access without the performance, cost, and setup overhead of the tighter FGAC security model. Let's dive into FTA, the main topic of this post.

How Full-Table Access (FTA) works in AWS Glue

Up to AWS Glue 4.0, Lake Formation-based data access worked through the GlueContext class, the utility class provided by AWS Glue. With the launch of AWS Glue 5.0, Lake Formation-based data access is available through native Spark SQL and Spark DataFrames.

With this launch, when you have full table access to your tables through Lake Formation permissions, you don't need to enable fine-grained access mode for your AWS Glue jobs or sessions. This removes the need to spin up a system driver and system executors, which exist only to support fine-grained access, so you get lower performance overhead and lower cost. In addition, Lake Formation fine-grained access mode supports only read operations, whereas FTA supports both reads and writes through CREATE, ALTER, DELETE, UPDATE, and MERGE INTO commands.

To use FTA mode, you need to allow third-party query engines to access data without the AWS Identity and Access Management (IAM) session tag validation in Lake Formation. To do this, follow the steps in Application integration for full table access.
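If you prefer to script this setting instead of using the console, the following is a minimal sketch of a read-modify-write update of the Lake Formation data lake settings. It assumes that the console option corresponds to the `AllowFullTableExternalDataAccess` field of `DataLakeSettings`, so verify the field name against the current Lake Formation API reference. `PutDataLakeSettings` writes the whole settings object, so the sketch copies the current settings and changes only that one flag. This keeps existing values such as data lake administrators intact.

```python
import copy


def enable_full_table_external_access(settings: dict) -> dict:
    """Return a copy of a Lake Formation DataLakeSettings dict with
    full-table external data access switched on.

    Assumption: the console's "Application integration for full table access"
    option maps to the AllowFullTableExternalDataAccess field.
    """
    updated = copy.deepcopy(settings)  # leave the caller's dict untouched
    updated["AllowFullTableExternalDataAccess"] = True
    return updated


# Usage with boto3 (requires credentials; not executed here):
#   import boto3
#   lf = boto3.client("lakeformation", region_name="us-east-1")
#   current = lf.get_data_lake_settings()["DataLakeSettings"]
#   lf.put_data_lake_settings(
#       DataLakeSettings=enable_full_table_external_access(current))
```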

Migrate an AWS Glue 4.0 GlueContext FTA job to AWS Glue 5.0 native Spark FTA

The high-level steps to enable the Spark native FTA feature are documented in Using AWS Glue with AWS Lake Formation for Full Table Access. In this section, we walk through an end-to-end migration example. The starting point is an AWS Glue 4.0 job that uses FTA through GlueContext to read an Iceberg table. The end result is an AWS Glue 5.0 job that uses Spark native FTA.

Prerequisites

Before you get started, make sure that you have the following prerequisites:

An AWS account with AWS Identity and Access Management (IAM) roles as needed:

A Lake Formation data access IAM role that isn't a service-linked role.

An AWS Glue job execution role with the AWS managed policy AWSGlueServiceRole attached and the lakeformation:GetDataAccess permission. Make sure you include the AWS Glue service in the trust policy.

The required permissions to perform the following actions:

Lake Formation set up in the account, and a Lake Formation administrator role or a similar role to follow along with the instructions in this post. To learn more about setting up permissions for a data lake administrator role, see Create a data lake administrator.
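The job execution role requirements above can be sketched as two IAM policy documents. The first is a trust policy that lets AWS Glue assume the role. The second is an inline policy that grants lakeformation:GetDataAccess. The role and policy names in the usage comments are hypothetical. The AWSGlueServiceRole ARN is the standard AWS managed policy path.

```python
import json

GLUE_SERVICE_ROLE_POLICY_ARN = "arn:aws:iam::aws:policy/service-role/AWSGlueServiceRole"


def glue_job_role_documents() -> tuple[str, str]:
    """Return (trust_policy_json, inline_policy_json) for the Glue job role."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            # Allow the AWS Glue service to assume this role
            "Principal": {"Service": "glue.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }
    inline_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            # Lets the job request temporary data access credentials from Lake Formation
            "Action": "lakeformation:GetDataAccess",
            "Resource": "*",
        }],
    }
    return json.dumps(trust_policy), json.dumps(inline_policy)


# Usage with boto3 (role and policy names are hypothetical):
#   iam = boto3.client("iam")
#   trust, inline = glue_job_role_documents()
#   iam.create_role(RoleName="GlueFtaDemoJobRole", AssumeRolePolicyDocument=trust)
#   iam.attach_role_policy(RoleName="GlueFtaDemoJobRole",
#                          PolicyArn=GLUE_SERVICE_ROLE_POLICY_ARN)
#   iam.put_role_policy(RoleName="GlueFtaDemoJobRole",
#                       PolicyName="LakeFormationDataAccess", PolicyDocument=inline)
```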

For this post, we use the us-east-1 AWS Region, but you can follow along in your preferred Region if the AWS services used in the architecture are available there.

You'll walk through setting up test data and an example AWS Glue 4.0 job using GlueContext. If you already have these and are only interested in how to migrate, proceed to Migrate an AWS Glue 4.0 GlueContext FTA job to AWS Glue 5.0 native Spark FTA. With the prerequisites in place, you're ready to start the implementation steps.

Create an S3 bucket and upload a sample data file

To create an S3 bucket for the raw input datasets and Iceberg table, complete the following steps:

On the AWS Management Console for Amazon S3, choose Buckets in the navigation pane.

Choose Create bucket.

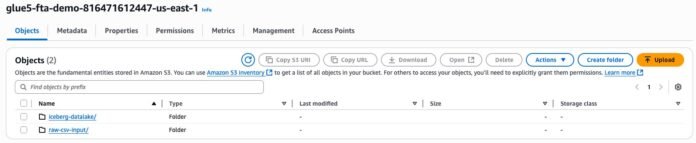

Enter the bucket name (for example, glue5-fta-demo-${AWS_ACCOUNT_ID}-${AWS_REGION_CODE}), and leave the remaining fields as default.

Choose Create bucket.

On the bucket details page, choose Create folder.

Create two subfolders: raw-csv-input and iceberg-datalake.

Upload the LOAD00000001.csv file into the raw-csv-input folder of the bucket.
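If you script this setup instead of using the console, the following sketch builds the example bucket name and the two folder URIs. It also checks the name against a simplified form of the S3 general purpose bucket naming rules. The account ID in the usage comments is a placeholder. S3 folders are just key prefixes, so uploading the file with a raw-csv-input/ key creates that folder implicitly.

```python
import re

# Simplified S3 general purpose bucket naming rules: 3-63 characters,
# lowercase letters, digits, dots, and hyphens, starting and ending
# with a letter or digit.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def demo_bucket_name(account_id: str, region: str) -> str:
    """Build the example bucket name glue5-fta-demo-<account>-<region>."""
    name = f"glue5-fta-demo-{account_id}-{region}"
    if not _BUCKET_RE.match(name):
        raise ValueError(f"invalid S3 bucket name: {name!r}")
    return name


def demo_prefixes(bucket: str) -> dict:
    """S3 URIs for the two folders used in this walkthrough."""
    return {
        "raw_csv_input": f"s3://{bucket}/raw-csv-input/",
        "iceberg_datalake": f"s3://{bucket}/iceberg-datalake/",
    }


# Usage with boto3 (not executed here):
#   bucket = demo_bucket_name("123456789012", "us-east-1")
#   s3 = boto3.client("s3", region_name="us-east-1")
#   s3.create_bucket(Bucket=bucket)  # us-east-1 needs no CreateBucketConfiguration
#   s3.upload_file("LOAD00000001.csv", bucket, "raw-csv-input/LOAD00000001.csv")
```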

Create an AWS Glue database and AWS Glue tables

To create input and output sample tables in the Data Catalog, complete the following steps:

On the Athena console, navigate to the query editor.

Run the following queries in sequence (provide your S3 bucket name):

-- Create database for the demo
CREATE DATABASE glue5_fta_demo;

-- Create external table on the input CSV data. Replace the S3 path with your bucket name
CREATE EXTERNAL TABLE glue5_fta_demo.raw_csv_input(
  op string,
  product_id bigint,
  category string,
  product_name string,
  quantity_available bigint,
  last_update_time string)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
LOCATION 's3:///raw-csv-input/'
TBLPROPERTIES (
  'areColumnsQuoted'='false',
  'classification'='csv',
  'columnsOrdered'='true',
  'compressionType'='none',
  'delimiter'=',',
  'typeOfData'='file');

-- Create output Iceberg table with partitioning. Replace the S3 bucket name with your bucket name
CREATE TABLE glue5_fta_demo.iceberg_datalake WITH (
  table_type='ICEBERG',
  format='parquet',
  write_compression='SNAPPY',
  is_external=false,
  partitioning=ARRAY['category', 'bucket(product_id, 16)'],
  location='s3:///iceberg-datalake/'
) AS SELECT * FROM glue5_fta_demo.raw_csv_input;

Run the following query to validate the raw CSV input data:

SELECT * FROM glue5_fta_demo.raw_csv_input;

The following screenshot shows the query result:

Run the following query to validate the Iceberg table data:

SELECT * FROM glue5_fta_demo.iceberg_datalake;

The following screenshot shows the query result:

This step used DDL to create table definitions. Alternatively, you can use a Data Catalog API, the AWS Glue console, the Lake Formation console, or an AWS Glue crawler.
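The bucket(product_id, 16) partition transform in the Iceberg table above spreads rows across 16 buckets by hashing product_id. The following pure-Python sketch shows how a bigint value maps to a bucket according to the Apache Iceberg table specification. The value is hashed with 32-bit Murmur3 (x86 variant, seed 0) over its 8-byte little-endian encoding, masked to a non-negative 31-bit integer, and taken modulo the bucket count. It's for illustration only, because Athena and Spark compute buckets for you. The spec lists 34 as hashing to 2017239379, which the test below checks.

```python
import struct

_C1, _C2, _MASK32 = 0xCC9E2D51, 0x1B873593, 0xFFFFFFFF


def _rotl32(x: int, r: int) -> int:
    """Rotate a 32-bit value left by r bits."""
    return ((x << r) | (x >> (32 - r))) & _MASK32


def murmur3_32(data: bytes, seed: int = 0) -> int:
    """Unsigned 32-bit Murmur3 (x86 variant) hash of data."""
    h = seed & _MASK32
    n = len(data)
    tail_start = n - (n % 4)
    for i in range(0, tail_start, 4):  # body: 4-byte little-endian blocks
        k = int.from_bytes(data[i:i + 4], "little")
        k = _rotl32((k * _C1) & _MASK32, 15)
        h ^= (k * _C2) & _MASK32
        h = (_rotl32(h, 13) * 5 + 0xE6546B64) & _MASK32
    k = 0
    for j, byte in enumerate(data[tail_start:]):  # tail: 1-3 leftover bytes
        k |= byte << (8 * j)
    if n % 4:
        k = _rotl32((k * _C1) & _MASK32, 15)
        h ^= (k * _C2) & _MASK32
    h ^= n  # finalization mix
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & _MASK32
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & _MASK32
    h ^= h >> 16
    return h


def iceberg_bucket_bigint(value: int, num_buckets: int) -> int:
    """Iceberg bucket for a bigint: hash the 8-byte LE value, keep 31 bits, mod N."""
    h = murmur3_32(struct.pack("<q", value))
    return (h & 0x7FFFFFFF) % num_buckets


# Example: a row with product_id 34 lands in bucket 3 of 16.
#   iceberg_bucket_bigint(34, 16)  # returns 3
```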

The next step is to configure Lake Formation permissions on the iceberg_datalake table.

Configure Lake Formation permissions

To validate the capability, you need to define FTA permissions for the iceberg_datalake Data Catalog table you created. To start, enable read access to iceberg_datalake.

To configure Lake Formation permissions for the iceberg_datalake table, complete the following steps:

On the Lake Formation console, choose Data lake locations under Administration in the navigation pane.

Choose Register location.

For Amazon S3 path, enter the path of your S3 bucket to register the location.

For IAM role, choose your Lake Formation data access IAM role, which isn't a service-linked role.

For Permission mode, choose Lake Formation.

Choose Register location.
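The console steps above correspond to the Lake Formation RegisterResource API. The following sketch builds its keyword arguments for the demo bucket. It assumes that selecting the Lake Formation permission mode corresponds to HybridAccessEnabled=False. The role ARN in the usage comments is hypothetical.

```python
def register_location_request(bucket: str, data_access_role_arn: str) -> dict:
    """Keyword arguments for Lake Formation RegisterResource for the demo bucket."""
    return {
        "ResourceArn": f"arn:aws:s3:::{bucket}",  # S3 ARNs carry no Region or account
        "UseServiceLinkedRole": False,  # use the custom data access role instead
        "RoleArn": data_access_role_arn,
        # Assumption: "Lake Formation" permission mode means hybrid access is off
        "HybridAccessEnabled": False,
    }


# Usage with boto3 (role ARN is hypothetical; not executed here):
#   lf = boto3.client("lakeformation", region_name="us-east-1")
#   lf.register_resource(**register_location_request(
#       "glue5-fta-demo-123456789012-us-east-1",
#       "arn:aws:iam::123456789012:role/LakeFormationDataAccessRole"))
```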

Grant permissions on the Iceberg table

The next step is to grant table permissions on the iceberg_datalake table to the AWS Glue job role.

On the Lake Formation console, choose Data permissions under Permissions in the navigation pane.

Choose Grant.

For Principals, choose IAM users and roles.

For IAM users and roles, choose the IAM role that your AWS Glue job will use.

For LF-Tags or catalog resources, choose Named Data Catalog resources.

For Catalogs, choose your account ID (the default catalog).

For Databases, choose glue5_fta_demo.

For Tables, choose iceberg_datalake.

For Table permissions, choose Select and Describe.

For Data permissions, choose All data access.

Choose Grant.
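The same grant can be expressed as a Lake Formation GrantPermissions request. In this sketch, "All data access" is represented by granting on a plain Table resource rather than on specific columns or a data cell filter. The role ARN and account ID in the usage comments are hypothetical.

```python
def grant_table_request(role_arn: str, account_id: str,
                        database: str = "glue5_fta_demo",
                        table: str = "iceberg_datalake") -> dict:
    """Keyword arguments for Lake Formation GrantPermissions (full table access)."""
    return {
        "CatalogId": account_id,  # the default catalog is the account ID
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        # A Table resource (not TableWithColumns) covers every column and row
        "Resource": {"Table": {"CatalogId": account_id,
                               "DatabaseName": database,
                               "Name": table}},
        "Permissions": ["SELECT", "DESCRIBE"],
    }


# Usage with boto3 (role ARN is hypothetical; not executed here):
#   lf = boto3.client("lakeformation", region_name="us-east-1")
#   lf.grant_permissions(**grant_table_request(
#       "arn:aws:iam::123456789012:role/GlueFtaDemoJobRole", "123456789012"))
```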

Next, create the AWS Glue PySpark job to process the input data.

Query the Iceberg table through an AWS Glue 4.0 job using GlueContext and DataFrames

Next, create a sample AWS Glue 4.0 job to load data from the iceberg_datalake table. You'll use this sample job as the source for the migration. Complete the following steps:

On the AWS Glue console, choose ETL jobs in the navigation pane.

For Create job, choose Script Editor.

For Engine, choose Spark.

For Options, choose Start fresh.

Choose Create script.

For Script, replace the following parameters:

Replace aws_region with your Region.

Replace aws_account_id with your AWS account ID.

Replace warehouse_path with your Amazon S3 warehouse path for the Iceberg table.

For more information about how to use Iceberg in AWS Glue 4.0 jobs, see Using the Iceberg framework in AWS Glue.

from awsglue.context import GlueContext
from pyspark.sql import SparkSession

catalog_name = "glue_catalog"
aws_region = "us-east-1"
aws_account_id = "123456789012"
warehouse_path = "s3:///warehouse/"

# Initialize Spark with an Iceberg catalog backed by the AWS Glue Data Catalog,
# with Lake Formation enforcement enabled
spark = (
    SparkSession.builder
    .config(f"spark.sql.catalog.{catalog_name}", "org.apache.iceberg.spark.SparkCatalog")
    .config(f"spark.sql.catalog.{catalog_name}.warehouse", warehouse_path)
    .config(f"spark.sql.catalog.{catalog_name}.catalog-impl", "org.apache.iceberg.aws.glue.GlueCatalog")
    .config(f"spark.sql.catalog.{catalog_name}.io-impl", "org.apache.iceberg.aws.s3.S3FileIO")
    .config(f"spark.sql.catalog.{catalog_name}.glue.lakeformation-enabled", "true")
    .config(f"spark.sql.catalog.{catalog_name}.client.region", aws_region)
    .config(f"spark.sql.catalog.{catalog_name}.glue.id", aws_account_id)
    .getOrCreate()
)
glueContext = GlueContext(spark.sparkContext)

database_name = "glue5_fta_demo"
table_name = "iceberg_datalake"

# Read the Iceberg table through GlueContext (FTA is enforced by Lake Formation)
df = glueContext.create_data_frame.from_catalog(
    database=database_name,
    table_name=table_name,
)
df.show()

On the Job details tab, for Name, enter glue-fta-demo-iceberg.

For IAM Role, assign an IAM role that has the required permissions to run an AWS Glue job and to read from and write to the S3 bucket.

For Glue version, choose Glue 4.0 - Supports spark 3.3, Scala 2, Python 3.

For Job parameters, add the following parameters:

Key: --conf

Value: spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions

Key: --datalake-formats

Value: iceberg

Choose Save and then Run.

When the job is complete, on the Run details tab, choose Output logs.

You're redirected to the Amazon CloudWatch console to validate the output.

The output table is shown in the following screenshot. You see the same output that you saw in Athena when you verified that the Iceberg table was populated. This is because the AWS Glue job execution role has full table access from the Lake Formation permissions that you granted:
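If you manage jobs as code, the console configuration above can be expressed as an AWS Glue CreateJob request. The following sketch builds its keyword arguments. The role ARN and script location in the usage comments are hypothetical placeholders.

```python
def glue4_job_request(role_arn: str, script_location: str) -> dict:
    """Keyword arguments for AWS Glue CreateJob matching the console steps above."""
    return {
        "Name": "glue-fta-demo-iceberg",
        "Role": role_arn,
        "GlueVersion": "4.0",
        "Command": {
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_location,
            "PythonVersion": "3",
        },
        "DefaultArguments": {
            "--conf": "spark.sql.extensions="
                      "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
            "--datalake-formats": "iceberg",
        },
    }


# Usage with boto3 (ARN and S3 path are hypothetical; not executed here):
#   glue = boto3.client("glue", region_name="us-east-1")
#   req = glue4_job_request("arn:aws:iam::123456789012:role/GlueFtaDemoJobRole",
#                           "s3://amzn-s3-demo-bucket/scripts/glue-fta-demo-iceberg.py")
#   glue.create_job(**req)
#   run_id = glue.start_job_run(JobName=req["Name"])["JobRunId"]
```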

If you ran this same AWS Glue job with another IAM role that wasn't granted access to the table in Lake Formation, you would see the error Insufficient Lake Formation permission(s) on iceberg_datalake. Use the following steps to replicate this behavior:

Create a new IAM role that is identical to the AWS Glue job execution role you already used, but don't grant permissions to this clone in Lake Formation.

In the AWS Glue console, change the role for glue-fta-demo-iceberg to the new cloned role.

Rerun the job. You should see the error.

For the purposes of this post, change the role back to the original job execution role, which has been granted permissions in Lake Formation, so you can use it in the next steps.
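To check the outcome of such a test run programmatically, you can inspect the JobRun returned by the AWS Glue GetJobRun API. The following sketch treats a failed run whose ErrorMessage contains the Lake Formation denial text shown in this post as a permissions failure. It assumes that the message surfaces in ErrorMessage with that wording. The exact text may differ from what appears in the CloudWatch logs.

```python
LF_DENIED = "Insufficient Lake Formation permission(s)"


def is_lake_formation_denial(job_run: dict) -> bool:
    """Return True if a Glue JobRun dict failed on a Lake Formation permission check."""
    message = job_run.get("ErrorMessage") or ""
    return job_run.get("JobRunState") == "FAILED" and LF_DENIED in message


# Usage with boto3 (not executed here):
#   run = glue.get_job_run(JobName="glue-fta-demo-iceberg", RunId=run_id)["JobRun"]
#   if is_lake_formation_denial(run):
#       print("The role is not granted access in Lake Formation, as expected")
```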

You now have an FTA setup in AWS Glue 4.0 that uses GlueContext DataFrames for an Iceberg table. You saw that roles granted permission in Lake Formation can read the table and that roles without a grant can't. In the next section, we show you how to migrate from AWS Glue 4.0 GlueContext FTA to AWS Glue 5.0 native Spark FTA.

Migrate an AWS Glue 4.0 GlueContext FTA job to AWS Glue 5.0 native Spark FTA

The Lake Formation permission granting experience is identical regardless of the AWS Glue version and Spark data structures used. Therefore, if you have a working Lake Formation setup for your AWS Glue 4.0 job, you don't need to modify those permissions during migration. Here are the migration steps using the AWS Glue 4.0 example from the previous sections:

Allow third-party query engines to access data without the IAM session tag validation in Lake Formation. Follow the step-by-step guide in Application integration for full table access.

You shouldn't need to change the job runtime role if you have AWS Glue 4.0 FTA working (see the example permissions in the prerequisites). The main IAM permission to verify is that the AWS Glue job execution role has lakeformation:GetDataAccess.

Modify the Spark session configurations in the script. Verify that the following Spark configurations are present:

--conf spark.sql.catalog.spark_catalog=org.apache.iceberg.spark.SparkSessionCatalog
--conf spark.sql.catalog.spark_catalog.warehouse=s3:///warehouse/
--conf spark.sql.catalog.spark_catalog.client.region=REGION
--conf spark.sql.catalog.spark_catalog.glue.account-id=ACCOUNT_ID
--conf spark.sql.catalog.spark_catalog.glue.lakeformation-enabled=true
--conf spark.sql.catalog.dropDirectoryBeforeTable.enabled=true

For more information about the preceding three steps, see Using AWS Glue with AWS Lake Formation for Full Table Access.

Update the script so that GlueContext DataFrames are replaced with native Spark DataFrames. For example, the updated script for the previous AWS Glue 4.0 job would look like the following:

from pyspark.sql import SparkSession

catalog_name = "spark_catalog"
aws_region = "us-east-1"
aws_account_id = ""  # replace with your AWS account ID
warehouse_path = "s3:///warehouse/"

# Replace the built-in session catalog with an Iceberg SparkSessionCatalog backed
# by the AWS Glue Data Catalog, with Lake Formation enforcement enabled
spark = (
    SparkSession.builder
    .config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions")
    .config("spark.sql.defaultCatalog", catalog_name)
    .config(f"spark.sql.catalog.{catalog_name}", "org.apache.iceberg.spark.SparkSessionCatalog")
    .config(f"spark.sql.catalog.{catalog_name}.warehouse", warehouse_path)
    .config(f"spark.sql.catalog.{catalog_name}.client.region", aws_region)
    .config(f"spark.sql.catalog.{catalog_name}.glue.account-id", aws_account_id)
    .config(f"spark.sql.catalog.{catalog_name}.glue.lakeformation-enabled", "true")
    .config("spark.sql.catalog.dropDirectoryBeforeTable.enabled", "true")
    .config(f"spark.sql.catalog.{catalog_name}.catalog-impl", "org.apache.iceberg.aws.glue.GlueCatalog")
    .config(f"spark.sql.catalog.{catalog_name}.io-impl", "org.apache.iceberg.aws.s3.S3FileIO")
    .getOrCreate()
)

database_name = "glue5_fta_demo"
table_name = "iceberg_datalake"

# Read the Iceberg table with native Spark SQL; FTA is enforced by Lake Formation
df = spark.sql(f"SELECT * FROM {database_name}.{table_name}")
df.show()

You can remove the --conf job argument that you added for the AWS Glue 4.0 job, because the script now sets it.

For Glue version, choose Glue 5.0 - Supports spark 3.5, Scala 2, Python 3.

To verify that roles without Lake Formation permissions can't access the Iceberg table, repeat the exercise you did in AWS Glue 4.0 and rerun the job with the cloned job execution role. You should see the error message: AnalysisException: Insufficient Lake Formation permission(s) on glue5_fta_demo
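To keep the AWS Glue 5.0 FTA settings from the migration steps above in one place, you can generate them from a single function. The following sketch applies them either in the script or as a job parameter. The setting keys mirror the updated script. The helper that joins several settings into one --conf value with " --conf " separators follows a commonly used AWS Glue convention, which is an assumption here, so confirm it against the AWS Glue job parameters documentation before relying on it. The bucket and account values in the test are placeholders.

```python
def glue5_fta_spark_conf(warehouse_path: str, region: str, account_id: str,
                         catalog_name: str = "spark_catalog") -> dict:
    """Spark settings for AWS Glue 5.0 native Spark FTA on Iceberg tables."""
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        "spark.sql.defaultCatalog": catalog_name,
        prefix: "org.apache.iceberg.spark.SparkSessionCatalog",
        f"{prefix}.warehouse": warehouse_path,
        f"{prefix}.client.region": region,
        f"{prefix}.glue.account-id": account_id,
        f"{prefix}.glue.lakeformation-enabled": "true",
        "spark.sql.catalog.dropDirectoryBeforeTable.enabled": "true",
        f"{prefix}.catalog-impl": "org.apache.iceberg.aws.glue.GlueCatalog",
        f"{prefix}.io-impl": "org.apache.iceberg.aws.s3.S3FileIO",
    }


def as_conf_job_argument(conf: dict) -> str:
    """Render settings as one --conf job parameter value (assumed chaining convention)."""
    return " --conf ".join(f"{key}={value}" for key, value in conf.items())


# In the job script:
#   builder = SparkSession.builder
#   for key, value in glue5_fta_spark_conf(warehouse_path, aws_region, aws_account_id).items():
#       builder = builder.config(key, value)
#   spark = builder.getOrCreate()
# Or as a job parameter: DefaultArguments={"--conf": as_conf_job_argument(conf)}
```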

You've completed the migration and now have an FTA setup in AWS Glue 5.0 that uses native Spark to read from an Iceberg table. You saw that roles granted permission in Lake Formation can read the table and that roles without a grant can't.

Clean up

Complete the following steps to clean up your resources:

Delete the AWS Glue job glue-fta-demo-iceberg.

Revoke the Lake Formation permissions that you granted.

Delete the bucket that you created for the input datasets, which will have a name similar to glue5-fta-demo-${AWS_ACCOUNT_ID}-${AWS_REGION_CODE}.
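The following is a minimal sketch of the cleanup steps above as a dry-run plan of boto3 calls. It only builds (client, operation, arguments) tuples, so you can review the plan before running it. It assumes the grant was made as shown earlier (SELECT and DESCRIBE on the table). The role ARN in the test is hypothetical. An S3 bucket must be empty before DeleteBucket succeeds, so the objects have to be removed first.

```python
def cleanup_plan(job_name: str, bucket: str, role_arn: str, account_id: str) -> list:
    """Return (client, operation, kwargs) tuples in execution order; nothing runs here."""
    return [
        ("glue", "delete_job", {"JobName": job_name}),
        ("lakeformation", "revoke_permissions", {
            "CatalogId": account_id,
            "Principal": {"DataLakePrincipalIdentifier": role_arn},
            "Resource": {"Table": {"CatalogId": account_id,
                                   "DatabaseName": "glue5_fta_demo",
                                   "Name": "iceberg_datalake"}},
            "Permissions": ["SELECT", "DESCRIBE"],
        }),
        # The bucket must already be empty for delete_bucket to succeed
        ("s3", "delete_bucket", {"Bucket": bucket}),
    ]


# Executing the plan with boto3 (not executed here):
#   boto3.resource("s3").Bucket(bucket).objects.all().delete()  # empty the bucket first
#   for service, operation, kwargs in cleanup_plan(...):
#       getattr(boto3.client(service, region_name="us-east-1"), operation)(**kwargs)
```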

Conclusion

This post explained how you can enable Spark native FTA in AWS Glue 5.0 jobs so that they enforce access control defined with Lake Formation grant commands. In earlier AWS Glue versions, you needed to use AWS Glue (GlueContext) DataFrames to enforce FTA in AWS Glue jobs, or migrate to AWS Glue 5.0 FGAC, which has relatively limited functionality. With this launch, if you don't need fine-grained control, you can enforce FTA through Spark DataFrames or Spark SQL for more flexibility and performance. This capability is currently supported for Iceberg and Hive tables.

This feature can save you effort and improve portability when you migrate Spark scripts between serverless environments such as AWS Glue and Amazon EMR.

About the authors

Layth Yassin is a Software Development Engineer on the AWS Glue team. He's passionate about tackling challenging problems at a large scale, and building products that push the limits of the field. Outside of work, he enjoys playing and watching basketball, and spending time with friends and family.

Noritaka Sekiyama is a Principal Big Data Architect on the AWS Glue team. He's also the author of the book Serverless ETL and Analytics with AWS Glue. He's responsible for building software artifacts to help customers. In his spare time, he enjoys cycling on his road bike.

Kartik Panjabi is a Software Development Manager on the AWS Glue team. His team builds generative AI features and distributed systems for data integration.

Matt Su is a Senior Product Manager on the AWS Glue team. He enjoys helping customers uncover insights and make better decisions using their data with AWS Analytics services. In his spare time, he enjoys skiing and gardening.
