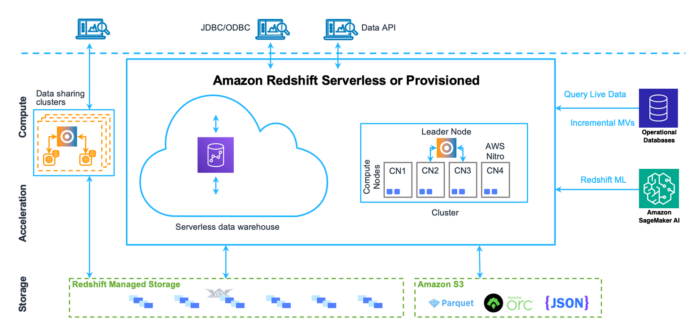

Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. Its massively parallel processing (MPP) architecture processes data by distributing queries across compute nodes. Each node executes the same query code on its portion of the data, enabling parallel processing.

Amazon Redshift uses columnar storage for database tables, reducing overall disk I/O requirements. This storage method significantly improves analytic query performance by minimizing the data read during queries. Data has become many organizations' most valuable asset, driving demand for real-time or near real-time analytics in data warehouses. This demand calls for systems that support simultaneous data loading while maintaining query performance. This post showcases the key improvements in Amazon Redshift concurrent data ingestion operations.

Challenges and pain points for write workloads

In a data warehouse environment, managing concurrent access to data is crucial yet challenging. Customers using Amazon Redshift ingest data using various approaches. For example, you might commonly use INSERT and COPY statements to load data into a table; these are also referred to as pure write operations. You might have requirements for low-latency ingestion to maximize data freshness. To achieve this, you can submit queries concurrently to the same table. To enable this, Amazon Redshift implements snapshot isolation by default. Snapshot isolation provides data consistency when multiple transactions are running concurrently. It ensures that each transaction sees a consistent snapshot of the database as it existed at the start of the transaction, preventing read and write conflicts that could compromise data integrity. With snapshot isolation, read queries can execute in parallel, so you can take advantage of the full performance that the data warehouse has to offer.

However, pure write operations execute sequentially. Specifically, pure write operations need to acquire an exclusive lock for the entire transaction. They only release the lock when the transaction has committed the data. In these cases, the performance of the pure write operations is constrained by the speed of serial execution of the writes across sessions.

To understand this better, let's look at how a pure write operation works. Every pure write operation includes pre-ingestion tasks such as scanning, sorting, and aggregation on the same table. After the pre-ingestion tasks are complete, the data is written to the table while maintaining data consistency. Because pure write operations run serially, the pre-ingestion steps also run serially. This means that when multiple pure write operations are submitted concurrently, they are processed one after another, with no parallelization even for the pre-ingestion steps.

To improve the concurrency of ingestion to the same table and meet low-latency requirements for ingestion, customers often use workarounds involving staging tables. Specifically, you can submit INSERT ... VALUES (...) statements into staging tables. Then, you perform joins with other tables, such as fact and dimension tables, before appending data into your target tables using ALTER TABLE APPEND. This approach isn't desirable because it requires you to maintain staging tables and can result in a larger storage footprint due to data block fragmentation from the use of ALTER TABLE APPEND statements.

In summary, the sequential execution of concurrent INSERT and COPY statements, caused by their exclusive locking behavior, creates challenges if you want to maximize the performance and efficiency of your data ingestion workflows in Amazon Redshift. To overcome these limitations, you must adopt workarounds that introduce additional complexity and overhead. The following section outlines how Amazon Redshift has addressed these pain points with improvements to concurrent inserts.

Concurrent inserts and their benefits

With Amazon Redshift patch 187, Amazon Redshift has introduced a significant improvement in concurrency for data ingestion with support for concurrent inserts. This improves concurrent execution of pure write operations such as COPY and INSERT statements, reducing the time it takes to load data into Amazon Redshift. Specifically, multiple pure write operations can progress concurrently and complete pre-ingestion tasks such as scanning, sorting, and aggregation in parallel.

To visualize this improvement, let's consider an example of two queries, executed concurrently from different transactions.

The following is query 1 in transaction 1:

INSERT INTO table_a SELECT * FROM table_b WHERE table_b.column_x = 'value_a';

The following is query 2 in transaction 2:

INSERT INTO table_a SELECT * FROM table_c WHERE table_c.column_y = 'value_b';

The following figure illustrates a simplified visualization of pure write operations without concurrent inserts.

Without concurrent inserts, the key components are as follows:

First, both pure write operations (INSERT) need to read data from table_b and table_c, respectively.

The segment in pink is the scan step (reading data) and the segment in green is the write step (actually inserting the data).

In the "Before concurrent inserts" state, both queries run sequentially. Specifically, the scan step in query 2 waits for the insert step in query 1 to complete before it begins.

For example, consider two identically sized queries in different transactions. Both queries need to scan the same amount of data and insert the same amount of data into the target table. Let's say both are issued at 10:00 AM. First, query 1 spends from 10:00 AM to 10:50 AM scanning the data and from 10:50 AM to 11:00 AM inserting the data. Next, because query 2 is identical in scan and insertion volumes, query 2 spends from 11:00 AM to 11:50 AM scanning the data and from 11:50 AM to 12:00 PM inserting the data. Both transactions started at 10:00 AM, so the end-to-end runtime is 2 hours (transaction 2 ends at 12:00 PM).

The following figure illustrates a simplified visualization of pure write operations with concurrent inserts, compared with the previous example.

With concurrent inserts enabled, the scan steps of query 1 and query 2 can progress concurrently. When the queries need to insert data, they do so serially. Consider the same example, with two identically sized queries in different transactions, both issued at 10:00 AM. From 10:00 AM to 10:50 AM, query 1 and query 2 scan the data in parallel. From 10:50 AM to 11:00 AM, query 1 inserts its data into the target table. Next, from 11:00 AM to 11:10 AM, query 2 inserts its data into the target table. The total end-to-end runtime for both transactions is now reduced to 1 hour and 10 minutes, with query 2 completing at 11:10 AM.

In this scenario, the pre-ingestion steps (scanning the data) for both queries run concurrently, taking the same amount of time as a single scan in the previous example (50 minutes). The actual insertion of data into the target table is still executed serially, with query 1 completing its insertion first, followed by query 2. By allowing the pre-ingestion steps to run concurrently, concurrent inserts improve the overall runtime by 50 minutes compared to the sequential execution before the feature was introduced.
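The arithmetic behind the two timelines can be checked with a short script. This is only a sketch of the simplified two-query example in this post, not a model of how Amazon Redshift schedules work internally; the function names and the calendar date are assumptions made for illustration.

```python
from datetime import datetime, timedelta


def sequential_finish(start, scan, insert, n_queries):
    """Before concurrent inserts: each query scans and inserts only after
    the previous query has finished."""
    return start + n_queries * (scan + insert)


def concurrent_finish(start, scan, insert, n_queries):
    """With concurrent inserts (simplified): all scans overlap, then the
    inserts run one after another."""
    return start + scan + n_queries * insert


start = datetime(2025, 1, 1, 10, 0)  # arbitrary date, 10:00 AM
scan = timedelta(minutes=50)
insert = timedelta(minutes=10)

before = sequential_finish(start, scan, insert, 2)
after = concurrent_finish(start, scan, insert, 2)

print(before.strftime("%H:%M"))  # 12:00
print(after.strftime("%H:%M"))   # 11:10
print(before - after)            # 0:50:00
```

The 50 minutes saved is exactly one scan duration, because the second scan no longer waits for the first query to commit.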

With concurrent inserts, pre-ingestion steps can progress concurrently. Pre-ingestion tasks can be one or a combination of tasks, such as scanning, sorting, and aggregation. This yields significant improvements in the end-to-end runtime of the queries.

Benefits

You can now benefit from these performance improvements without any additional configuration because the concurrent processing is handled automatically by the service. The improvements in concurrent inserts bring several benefits. You can see improved end-to-end performance of ingestion workloads when you're writing to the same table. Internal benchmarking shows that concurrent inserts can improve end-to-end runtime by up to 40% for concurrent insert transactions to the same tables. This feature is particularly beneficial for scan-heavy queries (queries that spend more time reading data than writing data). The higher the scan-to-insert ratio in a query, the greater the expected performance improvement.
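To see why scan-heavy queries benefit most, the following sketch applies a deliberately simplified model: identical queries whose scans overlap fully and whose inserts run serially. The function name and the sample ratios are assumptions for illustration, and the output of this toy model should not be read as the internal benchmark results mentioned above.

```python
def runtime_reduction(scan_time, insert_time, n_queries=2):
    """Fractional end-to-end runtime reduction under the simplified model."""
    sequential = n_queries * (scan_time + insert_time)  # everything serial
    concurrent = scan_time + n_queries * insert_time    # scans overlap
    return (sequential - concurrent) / sequential


# Same total work per query (60 time units), different scan:insert splits.
for scan, insert in [(10, 50), (30, 30), (50, 10)]:
    reduction = runtime_reduction(scan, insert)
    print(f"scan:insert = {scan}:{insert} -> {reduction:.1%}")
# scan:insert = 10:50 -> 8.3%
# scan:insert = 30:30 -> 25.0%
# scan:insert = 50:10 -> 41.7%
```

In this model the saving grows with the share of time spent scanning, which matches the qualitative guidance above; real workloads will vary with data volume, resource contention, and cluster configuration.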

This feature also improves the throughput and performance of multi-warehouse writes through data sharing. Multi-warehouse writes through data sharing helps you scale your write workloads across dedicated Redshift clusters or serverless workgroups, optimizing resource utilization and achieving more predictable performance for your extract, transform, and load (ETL) pipelines. Specifically, in multi-warehouse writes through data sharing, queries from different warehouses can write data to the same table. Concurrent inserts improve the end-to-end performance of these queries by reducing resource contention and enabling them to make progress concurrently.

The following figure shows the performance improvements from concurrent inserts in internal tests, with the orange bar indicating the performance improvement for multi-warehouse writes through data sharing and the blue bar denoting the performance improvement for concurrent inserts on the same warehouse. As the graph indicates, queries with higher scan components relative to insert components benefit by up to 40% with this new feature.

You can also gain additional benefits from using concurrent inserts to manage your ingestion pipelines. When you write data directly to the same tables using concurrent inserts instead of using workarounds with ALTER TABLE APPEND statements, you can reduce your storage footprint. This comes in two forms: first from the elimination of temporary tables, and second from the reduction in table fragmentation caused by frequent ALTER TABLE APPEND statements. Additionally, you avoid the operational overhead of managing complex workarounds and of relying on frequent background and customer-issued VACUUM DELETE operations to manage the fragmentation caused by appending temporary tables to your target tables.

Considerations

Although the concurrent insert improvements in Amazon Redshift provide significant benefits, it's important to be aware of potential deadlock scenarios that can arise in a snapshot isolation environment. Specifically, deadlocks can occur in certain circumstances when running concurrent write transactions on the same table. The snapshot isolation deadlock happens when concurrent INSERT and COPY statements are sharing a lock and making progress, and another statement needs to perform an operation (UPDATE, DELETE, MERGE, or DDL operation) that requires an exclusive lock on the same table.

Consider the following scenario:

Transaction 1:

INSERT/COPY INTO table_A;

Transaction 2:

INSERT/COPY INTO table_A;

UPDATE/DELETE/MERGE/DDL on table_A;

A deadlock can occur when multiple transactions with INSERT and COPY operations are running concurrently on the same table with a shared lock, and one of those transactions follows its pure write operation with an operation that requires an exclusive lock, such as an UPDATE, MERGE, DELETE, or DDL statement. To avoid the deadlock in these situations, you can move statements requiring an exclusive lock (UPDATE, MERGE, DELETE, and DDL statements) to a different transaction so that INSERT and COPY statements can progress concurrently, and the statements requiring exclusive locks can execute after them. Alternatively, for transactions with INSERT and COPY statements and MERGE, UPDATE, and DELETE statements on the same table, you can include retry logic in your applications to work around potential deadlocks. Refer to Potential deadlock situation for concurrent write transactions involving a single table for more information about deadlocks, and see Concurrent write examples for examples of concurrent transactions.
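Application-side retry logic might look like the following minimal sketch. It does not depend on any database driver: `DeadlockDetected` is a hypothetical stand-in for whatever error your driver raises when a transaction is aborted because of a deadlock, and `run_transaction` is assumed to be a callable that opens a transaction, runs its statements, and either commits or rolls back before raising.

```python
import random
import time


class DeadlockDetected(Exception):
    """Hypothetical stand-in for the driver error raised on deadlock."""


def run_with_retry(run_transaction, max_attempts=5, base_delay=0.5,
                   sleep=time.sleep):
    """Call run_transaction(), retrying with jittered exponential backoff
    when it fails with DeadlockDetected."""
    for attempt in range(1, max_attempts + 1):
        try:
            return run_transaction()
        except DeadlockDetected:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            # Back off so the competing transaction has time to finish.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay))


# Usage with a fake transaction that deadlocks twice, then succeeds.
attempts = []


def fake_transaction():
    attempts.append(1)
    if len(attempts) < 3:
        raise DeadlockDetected("simulated deadlock on table_A")
    return "committed"


result = run_with_retry(fake_transaction, sleep=lambda seconds: None)
print(result, len(attempts))  # committed 3
```

The whole transaction is retried rather than a single statement, because an aborted transaction has to be re-run from its first statement. The backoff values are arbitrary starting points to tune for your workload.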

Conclusion

In this post, we demonstrated how Amazon Redshift has addressed a key challenge: improving concurrent data ingestion performance into a single table. This enhancement can help you meet your requirements for low latency and stricter SLAs when accessing the latest data. The update exemplifies our commitment to implementing critical features in Amazon Redshift based on customer feedback.

About the authors

Raghu Kuppala is an Analytics Specialist Solutions Architect experienced in the databases, data warehousing, and analytics space. Outside of work, he enjoys trying different cuisines and spending time with his family and friends.

Sumant Nemmani is a Senior Technical Product Manager at AWS. He is focused on helping customers of Amazon Redshift benefit from features that use machine learning and intelligent mechanisms to enable the service to self-tune and optimize itself, ensuring Redshift remains price-performant as they scale their usage.

Gagan Goel is a Software Development Manager at AWS. He ensures that Amazon Redshift features meet customer needs by prioritizing and guiding the team in delivering customer-centric solutions, and by monitoring and enhancing query performance for customer workloads.

Kshitij Batra is a Software Development Engineer at Amazon, specializing in building resilient, scalable, and high-performing software solutions.

Sanuj Basu is a Principal Engineer at AWS, driving the evolution of Amazon Redshift into a next-generation, exabyte-scale cloud data warehouse. He leads engineering for Redshift's core data platform, including managed storage, transactions, and data sharing, enabling customers to power seamless multi-cluster analytics and modern data mesh architectures. Sanuj's work helps Redshift customers break through th
