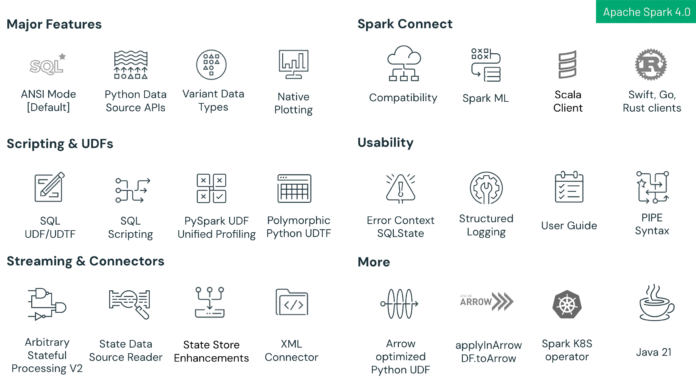

Apache Spark 4.0 marks a major milestone in the evolution of the Spark analytics engine. This release brings significant advancements across the board, from SQL language enhancements and expanded connectivity to new Python capabilities, streaming improvements, and better usability. Spark 4.0 is designed to be more powerful, ANSI-compliant, and user-friendly than ever, while maintaining compatibility with existing Spark workloads. In this post, we explain the key features and improvements introduced in Spark 4.0 and how they elevate your big data processing experience.

Key highlights in Spark 4.0 include:

SQL Language Enhancements: New capabilities including SQL scripting with session variables and control flow, reusable SQL User-Defined Functions (UDFs), and an intuitive PIPE syntax that streamlines and simplifies complex analytics workflows.

Spark Connect Enhancements: Spark Connect, Spark's new client-server architecture, now achieves high feature parity with Spark Classic in Spark 4.0. This release adds enhanced compatibility between Python and Scala, multi-language support (with new clients for Go, Swift, and Rust), and a simpler migration path via the new spark.api.mode setting. Developers can seamlessly switch from Spark Classic to Spark Connect to benefit from a more modular, scalable, and flexible architecture.

Reliability & Productivity Enhancements: ANSI SQL mode, now enabled by default, ensures stricter data integrity and better interoperability. It is complemented by the VARIANT data type for efficient handling of semi-structured JSON data and by structured JSON logging for improved observability and easier troubleshooting.

Python API Advances: Native Plotly-based plotting directly on PySpark DataFrames, a Python Data Source API enabling custom Python batch and streaming connectors, and polymorphic Python UDTFs for dynamic schema support and greater flexibility.

Structured Streaming Advances: A new Arbitrary Stateful Processing API called transformWithState in Scala, Java, and Python for robust and fault-tolerant custom stateful logic, state store usability improvements, and a new State Store Data Source for improved debuggability and observability.

In the sections below, we share more details on these exciting features, and at the end, we provide links to the relevant JIRA efforts and deep-dive blog posts for those who want to learn more. Spark 4.0 represents a robust, future-ready platform for large-scale data processing, combining the familiarity of Spark with new capabilities that meet modern data engineering needs.

Major Spark Connect Improvements

One of the most exciting updates in Spark 4.0 is the overall improvement of Spark Connect, in particular the Scala client. With Spark 4, all Spark SQL features offer near-complete compatibility between Spark Connect and Classic execution mode, with only minor differences remaining. Spark Connect is the new client-server architecture for Spark that decouples the user application from the Spark cluster, and in 4.0 it is more capable than ever:

Improved Compatibility: A major achievement for Spark Connect in Spark 4 is the improved compatibility of the Python and Scala APIs, which makes switching between Spark Classic and Spark Connect seamless. This means that for most use cases, all you have to do is enable Spark Connect for your applications by setting spark.api.mode to connect. We recommend developing new jobs and applications with Spark Connect enabled so that you benefit most from Spark's powerful query optimization and execution engine.

Multi-Language Support: Spark Connect in 4.0 supports a broad range of languages and environments. Python and Scala clients are fully supported, and new community-supported Connect clients for Go, Swift, and Rust are available. This polyglot support means developers can use Spark in the language of their choice, even outside the JVM ecosystem, via the Connect API. For example, a Rust data engineering application or a Go service can now connect directly to a Spark cluster and run DataFrame queries, expanding Spark's reach beyond its traditional user base.

SQL Language Features

Spark 4.0 adds new capabilities to simplify data analytics:

SQL User-Defined Functions (UDFs): Spark 4.0 introduces SQL UDFs, enabling users to define reusable custom functions directly in SQL. These functions simplify complex logic, improve maintainability, and integrate seamlessly with Spark's query optimizer, which improves query performance compared to traditional code-based UDFs. SQL UDFs support both temporary and permanent definitions, making it easy for teams to share common logic across multiple queries and applications. (Read the blog post)

SQL PIPE Syntax: Spark 4.0 introduces a new PIPE syntax, allowing users to chain SQL operations using the |> operator. This functional-style approach enhances query readability and maintainability by enabling a linear flow of transformations. The PIPE syntax is fully compatible with existing SQL, allowing for gradual adoption and integration into existing workflows. (Read the blog post)

Language, accent, and case-aware collations: Spark 4.0 introduces a new COLLATE property for STRING types. You can choose from many language- and region-aware collations to control how Spark orders and compares strings. You can also decide whether a collation should be case-insensitive, accent-insensitive, and insensitive to trailing blanks. (Read the blog post)

Session variables: Spark 4.0 introduces session-local variables, which can be used to keep and manage state within a session without relying on host-language variables. (Read the blog post)

Parameter markers: Spark 4.0 introduces named (":var") and unnamed ("?") parameter markers. This feature lets you parameterize queries and safely pass in values through the spark.sql() API, which mitigates the risk of SQL injection. (See documentation)

SQL Scripting: Writing multi-step SQL workflows is easier in Spark 4.0 thanks to new SQL scripting capabilities. You can now execute multi-statement SQL scripts with features like local variables and control flow. This lets data engineers move parts of their ETL logic into pure SQL, with Spark 4.0 supporting constructs that were previously only possible via external languages or stored procedures. This feature will soon be further improved with error condition handling. (Read the blog post)

Data Integrity and Developer Productivity

Spark 4.0 introduces several updates that make the platform more reliable, standards-compliant, and user-friendly. These improvements streamline both development and production workflows, ensuring higher data quality and faster troubleshooting.

ANSI SQL Mode: One of the most significant shifts in Spark 4.0 is enabling ANSI SQL mode by default, aligning Spark more closely with standard SQL semantics. This change ensures stricter data handling by raising explicit errors for operations that previously resulted in silent truncations or nulls, such as numeric overflows or division by zero. Adhering to ANSI SQL standards also greatly improves interoperability, simplifying the migration of SQL workloads from other systems and reducing the need for extensive query rewrites and team retraining. Overall, this change promotes clearer, more reliable, and more portable data workflows. (See documentation)

New VARIANT Data Type: Apache Spark 4.0 introduces the new VARIANT data type, designed specifically for semi-structured data. It enables the storage of complex JSON or map-like structures within a single column while retaining the ability to efficiently query nested fields. This capability offers significant schema flexibility, making it easier to ingest and manage data that does not conform to a predefined schema. In addition, Spark's built-in indexing and parsing of JSON fields enhance query performance, enabling fast lookups and transformations. By minimizing the need for repeated schema evolution steps, VARIANT simplifies ETL pipelines and results in more streamlined data processing workflows. (Read the blog post)

Structured Logging: Spark 4.0 introduces a new structured logging framework that simplifies debugging and monitoring. When spark.log.structuredLogging.enabled=true is set, Spark writes logs as JSON lines, with each entry containing structured fields such as the timestamp, log level, message, and the full Mapped Diagnostic Context (MDC). This format simplifies integration with observability tools such as Spark SQL, ELK, and Splunk, making logs much easier to parse, search, and analyze. (Learn more)

Python API Advances

Python users have a lot to celebrate in Spark 4.0. This release makes Spark more Pythonic and improves the performance of PySpark workloads:

Native Plotting Support: Data exploration in PySpark just got easier, as Spark 4.0 adds native plotting capabilities to PySpark DataFrames. You can now call a .plot() method or use an associated API on a DataFrame to generate charts directly from Spark data, without manually collecting the data into pandas. Under the hood, Spark uses Plotly as the default visualization backend to render charts. This means common plot types like histograms and scatter plots can be created with one line of code on a PySpark DataFrame, and Spark handles fetching a sample or aggregate of the data to plot in a notebook or GUI. By supporting native plotting, Spark 4.0 streamlines exploratory data analysis: you can visualize distributions and trends in your dataset without leaving the Spark context or writing separate matplotlib/plotly code. This feature is a productivity boon for data scientists using PySpark for EDA.

Python Data Source API: Spark 4.0 introduces a new Python DataSource API that allows developers to implement custom data sources for batch and streaming entirely in Python. Previously, writing a connector for a new file format, database, or data stream often required Java/Scala knowledge. Now you can create readers and writers in Python, which opens up Spark to a broader community of developers. For example, if you have a custom data format or an API that only has a Python client, you can wrap it as a Spark DataFrame source or sink using this API. This feature greatly improves extensibility for PySpark in both batch and streaming contexts. See the PySpark deep-dive post for an example of implementing a simple custom data source in Python, or check out a set of examples here. (Read the blog post)

Polymorphic Python UDTFs: Building on the SQL UDTF capability, PySpark now supports User-Defined Table Functions in Python, including polymorphic UDTFs that can return differently shaped schemas depending on their input. You can write a Python class whose eval method yields output rows, mark it as a UDTF with a decorator, and register it so it can be called from Spark SQL or the DataFrame API. A powerful aspect is dynamic-schema UDTFs: your UDTF can define an analyze() method that produces a schema on the fly based on its parameters, for example by reading a config file to determine the output columns. This polymorphic behavior makes UDTFs extremely flexible, enabling scenarios like processing a varying JSON schema or splitting an input into a variable set of outputs. PySpark UDTFs effectively let Python logic output a full table result per invocation, all within the Spark execution engine. (See documentation)

Streaming Enhancements

Apache Spark 4.0 continues to refine Structured Streaming for improved efficiency, usability and observability:

Arbitrary Stateful Processing v2: Spark 4.0 introduces a new Arbitrary Stateful Processing operator called transformWithState. TransformWithState enables building complex operational pipelines with support for object-oriented logic definition, composite types, timers and TTL, initial state handling, state schema evolution, and a host of other features. The new API is available in Scala, Java, and Python and provides native integrations with other important features such as the state data source reader and operator metadata handling. (Read the blog post)

State Data Source Reader: Spark 4.0 adds the ability to query streaming state as a table. This new state store data source exposes the internal state used in stateful streaming aggregations (such as counters and session windows), joins, and other stateful operators as a readable DataFrame. With additional options, it also lets users track state changes on a per-update basis for fine-grained visibility. This helps you understand what state your streaming job is processing, and it can further assist in troubleshooting and monitoring the stateful logic of your streams, as well as in detecting underlying corruptions or invariant violations. (Read the blog post)

State Store Improvements: Spark 4.0 also adds numerous state store improvements, such as better Static Sorted Table (SST) file reuse management, snapshot and maintenance management improvements, a revamped state checkpoint format, and additional performance improvements. Alongside these, numerous changes improve logging and error classification for easier monitoring and debugging.

Acknowledgements

Spark 4.0 is a big step forward for the Apache Spark project, with optimizations and new features touching every layer, from core improvements to richer APIs. In this release, the community closed more than 5000 JIRA issues, and around 400 individual contributors, ranging from independent developers to organizations like Databricks, Apple, LinkedIn, Intel, OpenAI, eBay, NetEase, and Baidu, drove these improvements.

We extend our sincere thanks to every contributor, whether you filed a ticket, reviewed code, improved documentation, or shared feedback on the mailing lists. Beyond the headline SQL, Python, and streaming improvements, Spark 4.0 also delivers Java 21 support, the Spark Kubernetes operator, XML connectors, Spark ML support on Connect, and PySpark UDF Unified Profiling. For the full list of changes and all other engine-level refinements, please consult the official Spark 4.0 release notes.

Getting Spark 4.0: It is fully open source; download it from spark.apache.org. Many of its features were already available in Databricks Runtime 15.x and 16.x, and they now ship out of the box with Runtime 17.0. To explore Spark 4.0 in a managed environment, sign up for the free Community Edition or start a trial, choose "17.0" when you spin up your cluster, and you will be running Spark 4.0 in minutes.

If you missed our Spark 4.0 meetup where we discussed these features, you can view the recordings here. Also, stay tuned for future deep-dive meetups on these Spark 4.0 features.