MLflow has become the foundation for MLOps at scale, with over 30 million monthly downloads and contributions from over 850 developers worldwide powering ML and deep learning workloads for thousands of enterprises. Today, we’re thrilled to announce MLflow 3.0, a major evolution that brings that same rigor and reliability to generative AI while enhancing core capabilities for all AI workloads. These powerful new capabilities are available in both open source MLflow and as a fully managed service on Databricks, where they deliver an enterprise-grade GenAI development experience.

While generative AI introduces new challenges around observability, quality measurement, and managing rapidly evolving prompts and configurations, MLflow 3.0 addresses them without requiring you to integrate yet another specialized platform. MLflow 3.0 is a unified platform across generative AI applications, traditional machine learning, and deep learning. Whether you're building GenAI agents, training classifiers, or fine-tuning neural networks, MLflow 3.0 provides consistent workflows, standardized governance, and production-grade reliability that scales with your needs.

MLflow 3.0 at a glance:

Comprehensive Generative AI capabilities: Tracing, LLM judges, human feedback collection, application versioning, and prompt management designed to deliver high application quality and complete observability

Rapid debugging and root cause analysis: View complete traces with inputs, outputs, latency, and cost, linked to the exact prompts, data, and app versions that produced them

Continuous improvement from production data: Turn real-world usage and feedback into better evaluation datasets and refined applications

Unified platform: MLflow supports all generative AI, traditional ML, and deep learning workloads on a single platform with consistent tools for collaboration, lifecycle management, and governance

Enterprise scale on Databricks: Proven reliability and performance that powers production AI workloads for thousands of organizations worldwide

The GenAI Challenge: Fragmented Tools, Elusive Quality

Generative AI has changed how we think about quality. Unlike traditional ML with ground truth labels, GenAI outputs are free-form, nuanced, and varied. A single prompt can yield dozens of different responses that are all equally correct. How do you measure if a chatbot’s response is “good”? How do you ensure your agent is not hallucinating? How do you debug complex chains of prompts, retrievals, and tool calls?

These questions point to three core challenges that every organization faces when building GenAI applications:

Observability: Understanding what’s happening inside your application, especially when things go wrong

Quality Measurement: Evaluating free-form text outputs at scale without manual bottlenecks

Continuous Improvement: Creating feedback loops that turn production insights into higher-quality applications

Today, organizations trying to solve these challenges face a fragmented landscape. They use separate tools for data management, observability and evaluation, and deployment. This approach creates significant gaps: debugging issues requires jumping between platforms, evaluation happens in isolation from real production data, and user feedback never makes it back to improve the application. Teams spend more time integrating tools than improving their GenAI apps. Faced with this complexity, many organizations simply give up on systematic quality assurance. They resort to unstructured manual testing, shipping to production when things seem “good enough,” and hoping for the best.

Solving these GenAI challenges to deliver high-quality applications requires new capabilities, but it shouldn't require juggling multiple platforms. That's why MLflow 3.0 extends our proven MLOps foundation to comprehensively support GenAI on one platform with a unified experience that includes:

Comprehensive tracing for 20+ GenAI libraries, providing visibility into every request in development and production, with traces linked to the exact code, data, and prompts that generated them

Research-backed evaluation with LLM judges that systematically measure GenAI quality and identify improvement opportunities

Integrated feedback collection that captures end-user and expert insights from production, regardless of where you deploy, feeding directly back to your evaluation and observability stack for continuous quality improvement

“MLflow 3.0’s tracing has been essential to scaling our AI-powered security platform. It gives us end-to-end visibility into every model decision, helping us debug faster, monitor performance, and ensure our defenses evolve as threats do. With seamless LangChain integration and autologging, we get all this without added engineering overhead.”

— Sam Chou, Principal Engineer at Barracuda

To demonstrate how MLflow 3.0 transforms the way organizations build, evaluate, and deploy high-quality generative AI applications, we’ll follow a real-world example: building an e-commerce customer support chatbot. We’ll see how MLflow addresses each of the three core GenAI challenges along the way, enabling you to move rapidly from debugging to deployment. Throughout this journey, we’ll leverage the full power of Managed MLflow 3.0 on Databricks, including integrated tools like the Review App, Deployment Jobs, and Unity Catalog governance that make enterprise GenAI development practical at scale.

Step 1: Pinpoint Performance Issues with Production-Grade Tracing

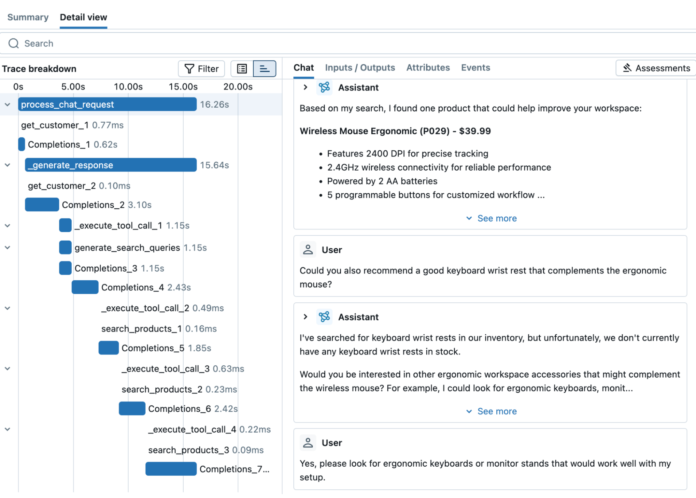

Your e-commerce chatbot has gone live in beta, but testers complain about slow responses and inaccurate product recommendations. Without visibility into your GenAI application’s complex chains of prompts, retrievals, and tool calls, you're debugging blind and experiencing the observability challenge firsthand.

MLflow 3.0’s production-scale tracing changes everything. With just a few lines of code, you can capture detailed traces from 20+ GenAI libraries and custom business logic in any environment, from development through production. The lightweight mlflow-tracing package is optimized for performance, allowing you to quickly log as many traces as needed. Built on OpenTelemetry, it provides enterprise-scale observability with maximum portability.

After instrumenting your code with MLflow Tracing, you can navigate to the MLflow UI to see every trace captured automatically. The timeline view reveals why responses take more than 15 seconds: your app checks inventory at each warehouse separately (5 sequential calls) and retrieves the customer’s entire order history (500+ orders) when it only needs recent purchases. After parallelizing warehouse checks and filtering for recent orders, response time drops by more than 50%.
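The two fixes surfaced by the timeline view can be sketched in plain Python. This is a minimal illustration rather than the chatbot's actual code: `check_inventory`, the warehouse names, the order record layout, and the 90-day window are all hypothetical stand-ins, and the `time.sleep` call merely simulates a slow inventory service.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta

# Hypothetical warehouse identifiers; the post only says there are five.
WAREHOUSES = ["wh-1", "wh-2", "wh-3", "wh-4", "wh-5"]


def check_inventory(warehouse: str, sku: str) -> int:
    """Stand-in for a slow inventory lookup (simulated with a short sleep)."""
    time.sleep(0.05)
    return len(warehouse) + len(sku)  # dummy stock count


def check_sequential(sku: str) -> dict:
    """Original behavior: one call after another, so latencies add up."""
    return {wh: check_inventory(wh, sku) for wh in WAREHOUSES}


def check_parallel(sku: str) -> dict:
    """Fixed behavior: issue all lookups concurrently."""
    with ThreadPoolExecutor(max_workers=len(WAREHOUSES)) as pool:
        counts = pool.map(lambda wh: check_inventory(wh, sku), WAREHOUSES)
        return dict(zip(WAREHOUSES, counts))


def recent_orders(orders: list, today: date, days: int = 90) -> list:
    """Keep only orders placed within the last `days` days."""
    cutoff = today - timedelta(days=days)
    return [o for o in orders if o["placed_on"] >= cutoff]
```

Because the five lookups are I/O-bound, running them in threads brings total latency close to that of the slowest single call instead of the sum of all five.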

Step 2: Measure and Improve Quality with LLM Judges

With latency issues resolved, we turn to quality because beta testers still complain about irrelevant product recommendations. Before we can improve quality, we need to systematically measure it. This highlights the second GenAI challenge: how do you measure quality when GenAI outputs are free-form and varied?

MLflow 3.0 makes quality evaluation straightforward. Create an evaluation dataset from your production traces, then run research-backed LLM judges powered by Databricks Mosaic AI Agent Evaluation.

These judges assess different aspects of quality for a GenAI trace and provide detailed rationales for the detected issues. Looking at the results of the evaluation reveals the problem: while safety and groundedness scores look good, the 65% retrieval relevance score confirms your retrieval system often fetches the wrong information, which results in less relevant responses.
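To make the 65% figure concrete: a score like this is typically a pass rate over per-trace judge verdicts. The sketch below uses made-up verdicts and a hypothetical record layout (`judge`, `passed`); it is not MLflow's result format, only an illustration of the aggregation.

```python
from collections import defaultdict


def pass_rates(verdicts: list) -> dict:
    """Return the fraction of passing verdicts for each judge."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for v in verdicts:
        totals[v["judge"]] += 1
        passes[v["judge"]] += int(v["passed"])
    return {judge: passes[judge] / totals[judge] for judge in totals}


# Made-up verdicts for 20 traces: 13 of 20 retrievals judged relevant.
verdicts = (
    [{"judge": "retrieval_relevance", "passed": True}] * 13
    + [{"judge": "retrieval_relevance", "passed": False}] * 7
    + [{"judge": "safety", "passed": True}] * 20
)
rates = pass_rates(verdicts)
```

The per-trace rationales that accompany each failing verdict are what point you to the root cause; the aggregate only tells you where to look.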

MLflow’s LLM judges are carefully tuned evaluators that match human expertise. You can create custom judges using guidelines tailored to your business requirements. Build and version evaluation datasets from real user conversations, including successful interactions, edge cases, and challenging scenarios. MLflow handles evaluation at scale, making systematic quality assessment practical for any application size.

Step 3: Use Expert Feedback to Improve Quality

The 65% retrieval relevance score points to your root cause, but fixing it requires understanding what the system should retrieve. Enter the Review App, a web interface for collecting structured expert feedback on AI outputs, now integrated with MLflow 3.0. This is the beginning of your continuous improvement journey of turning production insights into higher-quality applications.

You create labeling sessions where product specialists review traces with poor retrievals. When a customer asks for “wireless headphones under $200 with aptX HD codec support and 30+ hour battery,” but gets generic headphone results, your experts annotate exactly which products match ALL requirements.

The Review App enables domain experts to review real responses and source documents through an intuitive web interface, no coding required. They mark which products were correctly retrieved and identify confusion points (like wired vs. wireless headphones). Expert annotations become training data for future improvements and help align your LLM judges with real-world quality standards.

Step 4: Track Prompts, Code, and Configuration Changes

Armed with expert annotations, you rebuild your retrieval system. You switch from keyword matching to semantic search that understands technical specifications and update prompts to be more cautious about unconfirmed product features. But how do you track these changes and ensure they improve quality?

MLflow 3.0’s Version Tracking captures your entire application as a snapshot, including application code, prompts, LLM parameters, retrieval logic, reranking algorithms, and more. Each version connects all traces and metrics generated during its use. When issues arise, you can trace any problematic response back to the exact version that produced it.

Prompts require special attention: small wording changes can dramatically alter your application’s behavior, making them difficult to test and prone to regressions. Fortunately, MLflow’s brand new Prompt Registry brings engineering rigor specifically to prompt management. Version prompts with Git-style tracking, test different versions in production, and roll back instantly if needed. The UI shows visual diffs between versions, making it easy to see what changed and understand the performance impact. The MLflow Prompt Registry also integrates with DSPy optimizers to generate improved prompts automatically from your evaluation data.
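The Git-style workflow described here (append-only versions, rollback, diffs) can be illustrated with a toy in-memory store. `PromptStore` and its methods are hypothetical and are not the MLflow Prompt Registry API; the sketch only shows the underlying idea, using Python's standard `difflib` for the diff.

```python
import difflib
from typing import Optional


class PromptStore:
    """Toy append-only prompt store; versions are numbered from 1."""

    def __init__(self) -> None:
        self._versions = []
        self.active = 0  # 0 means no version registered yet

    def register(self, template: str) -> int:
        """Append a new immutable version and make it active."""
        self._versions.append(template)
        self.active = len(self._versions)
        return self.active

    def get(self, version: Optional[int] = None) -> str:
        """Return a specific version, or the active one by default."""
        return self._versions[(version or self.active) - 1]

    def rollback(self, version: int) -> None:
        """Point `active` at an earlier version without deleting history."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self.active = version

    def diff(self, old: int, new: int) -> str:
        """Unified diff between two versions, line by line."""
        return "\n".join(
            difflib.unified_diff(
                self.get(old).splitlines(),
                self.get(new).splitlines(),
                fromfile=f"v{old}",
                tofile=f"v{new}",
                lineterm="",
            )
        )
```

Keeping versions immutable and moving only the `active` pointer is what makes rollback instant: nothing is rewritten, so every trace can still be tied to the exact prompt text that produced it.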

With comprehensive version tracking in place, measure whether your changes actually improved quality by re-running the same evaluation against the new version.

The results confirm that your fixes work: retrieval relevance jumps from 65% to 91%, and response relevance improves to 93%.

Step 5: Deploy and Monitor in Production

With verified improvements in hand, it's time to deploy. MLflow 3.0 Deployment Jobs ensure that only validated applications satisfying your quality requirements reach production. Registering a new version of your application automatically triggers evaluation and presents results for approval, and full Unity Catalog integration provides governance and audit trails. This same model registration workflow supports traditional ML models, deep learning models, and GenAI applications.
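Conceptually, a quality gate compares a candidate version's evaluation metrics against minimum thresholds before approving it. The sketch below is an assumption about how such a check might look, not how Deployment Jobs are implemented; the `failed_gates` helper, the metric names, and the 0.85 thresholds are hypothetical.

```python
def failed_gates(metrics: dict, thresholds: dict) -> list:
    """Return the names of metrics that are missing or below their threshold."""
    return [
        name
        for name, minimum in thresholds.items()
        if metrics.get(name, float("-inf")) < minimum
    ]


# Hypothetical thresholds; the scores are the ones quoted in Step 4.
thresholds = {"retrieval_relevance": 0.85, "response_relevance": 0.85}
baseline = {"retrieval_relevance": 0.65}
candidate = {"retrieval_relevance": 0.91, "response_relevance": 0.93}

baseline_failures = failed_gates(baseline, thresholds)
candidate_failures = failed_gates(candidate, thresholds)
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a version that was never evaluated on some dimension should not pass by omission.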

After Deployment Jobs automatically run additional quality checks and stakeholders review the results, your improved chatbot passes all quality gates and gets approved for production. Now that you're going to serve thousands of customers, you instrument your application to collect end-user feedback.

After deploying to production, your dashboards show that satisfaction rates are strong, as customers get accurate product recommendations thanks to your improvements. The combination of automated quality monitoring from your LLM judges and real-time user feedback gives you confidence that your application is delivering value. If any issues arise, you have the traces and feedback to quickly understand and address them.

Continuous Improvement Through Data

Production data is now your roadmap for improvement. This completes the continuous improvement cycle, from production insights to development improvements and back again. Export traces with negative feedback directly into evaluation datasets. Use Version Tracking to compare deployments and identify what’s working. When new issues occur, you have a systematic process: collect problematic traces, get expert annotations, update your app, and deploy with confidence. Each issue becomes a permanent test case, preventing regressions and building a stronger application over time.
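The first step of that loop, turning negative feedback into test cases, might look like the following. The trace and feedback record shapes here are hypothetical and simplified, and `build_eval_dataset` is an illustrative helper rather than an MLflow function; in practice the records would come from your tracing and feedback store.

```python
def build_eval_dataset(traces: list, feedback: list) -> list:
    """Collect the inputs of traces that received a thumbs-down.

    Each trace appears at most once, in the order of `traces`.
    """
    disliked = {f["trace_id"] for f in feedback if not f["thumbs_up"]}
    return [
        {"trace_id": t["trace_id"], "inputs": t["inputs"]}
        for t in traces
        if t["trace_id"] in disliked
    ]


# Made-up records for illustration only.
traces = [
    {"trace_id": "t1", "inputs": {"query": "wireless headphones under $200"}},
    {"trace_id": "t2", "inputs": {"query": "order status"}},
    {"trace_id": "t3", "inputs": {"query": "aptX HD headphones"}},
]
feedback = [
    {"trace_id": "t1", "thumbs_up": False},
    {"trace_id": "t2", "thumbs_up": True},
    {"trace_id": "t1", "thumbs_up": False},
]
dataset = build_eval_dataset(traces, feedback)
```

Deduplicating by trace ID keeps a trace that several users disliked from being over-weighted in later evaluation runs.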

“MLflow 3.0 gave us the visibility we needed to debug and improve our Q&A agents with confidence. What used to take hours of guesswork can now be diagnosed in minutes, with full traceability across each retrieval, reasoning step, and tool call.”

— Daisuke Hashimoto, Tech Lead at Woven by Toyota.

A Unified Platform That Scales with You

MLflow 3.0 brings all these AI capabilities together in a single platform. The same tracing infrastructure that captures every detail of your GenAI applications also provides visibility into traditional ML model serving. The same deployment workflows cover both deep learning models and LLM-powered applications. The same integration with Unity Catalog provides battle-tested governance mechanisms for all types of AI assets. This unified approach reduces complexity while ensuring consistent management across all AI initiatives.

MLflow 3.0’s enhancements benefit all AI workloads. The new LoggedModel abstraction for versioning GenAI applications also simplifies tracking of deep learning checkpoints across training iterations. Just as GenAI versions link to their traces and metrics, traditional ML models and deep learning checkpoints now maintain complete lineage connecting training runs, datasets, and evaluation metrics computed across environments. Deployment Jobs ensure high-quality machine learning deployments with automated quality gates for every type of model. These are just a few examples of the improvements that MLflow 3.0 brings to classic ML and deep learning models through its unified management of all types of AI assets.

As the foundation for MLOps and AI observability on Databricks, MLflow 3.0 seamlessly integrates with the entire Mosaic AI Platform. MLflow leverages Unity Catalog for centralized governance of models, GenAI applications, prompts, and datasets. You can even use Databricks AI/BI to build dashboards from your MLflow data, turning AI metrics into business insights.

Getting Started with MLflow 3.0

Whether you're just starting with GenAI or operating hundreds of models and agents at scale, Managed MLflow 3.0 on Databricks has the tools you need. Join the thousands of organizations already using MLflow and discover why it's become the standard for AI development.

Sign up for FREE Managed MLflow on Databricks to start using MLflow 3.0 in minutes. You'll get enterprise-grade reliability, security, and seamless integrations with the entire Databricks Lakehouse Platform.

For existing Databricks Managed MLflow users, upgrading to MLflow 3.0 gives you immediate access to powerful new capabilities. Your existing experiments, models, and workflows continue working seamlessly while you gain production-grade tracing, LLM judges, online monitoring, and more for your generative AI applications, no migration required.