Last year, we unveiled data intelligence, AI that can reason on your enterprise data, with the arrival of the Databricks Mosaic AI stack for building and deploying agent systems. Since then, thousands of customers have brought AI into production. This year at the Data and AI Summit, we’re excited to announce several key products:

Agent Bricks in Beta

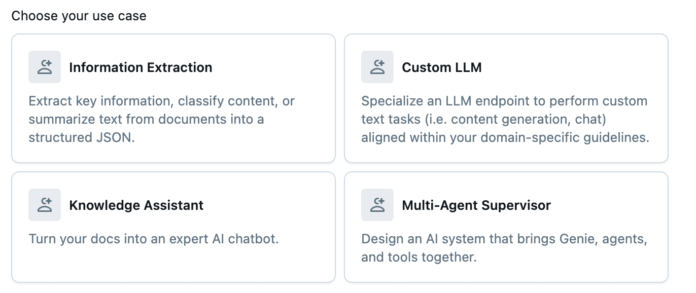

Agent Bricks is a new way to build high-quality agents that are auto-optimized on your data. Just provide a high-level description of the agent’s task and connect your enterprise data; Agent Bricks handles the rest. Agent Bricks is optimized for common industry use cases, including structured information extraction, reliable knowledge assistance, custom text transformation, and building multi-agent systems. We use the latest in agentic research from the Databricks Mosaic AI research team to automatically build evaluations and optimize agent quality. For more details, see the Agent Bricks deep dive blog.

Figure 1: Agent Bricks auto-optimizes agents on your data and task

MLflow 3.0

We’re releasing MLflow 3, which has been redesigned from the ground up for generative AI, with the latest in monitoring, evaluation, and lifecycle management. With MLflow 3, you can now monitor and observe agents that are deployed anywhere, even outside of Databricks. Agents deployed on AWS, GCP, or on-premises systems can now be connected to MLflow 3 for agent observability.

Figure 2: Real-time observability is now available even for agents deployed outside of Databricks

MLflow 3 also includes a prompt registry, which lets you register, version, test, and deploy different LLM prompts for your agent systems.
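To make the register, version, and load workflow concrete, here is a minimal in-memory sketch in plain Python. It is not the MLflow API: the `PromptRegistry` class, its method names, and the `summarize` prompt are all hypothetical, chosen only to illustrate how immutable, numbered prompt versions let you pin one version in production while testing a newer one.

```python
from typing import Dict, List, Optional


class PromptRegistry:
    """Hypothetical toy registry: each name maps to an append-only list of templates."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def register(self, name: str, template: str) -> int:
        """Store a new version of the prompt and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)

    def load(self, name: str, version: Optional[int] = None) -> str:
        """Return the requested version, or the latest one when version is None."""
        versions = self._versions[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        return versions[version - 1]


registry = PromptRegistry()
v1 = registry.register("summarize", "Summarize this text: {text}")
v2 = registry.register("summarize", "Summarize this text in {n} sentences: {text}")

# Keep the deployed agent pinned to version 1 while evaluating version 2.
pinned = registry.load("summarize", version=1).format(text="MLflow 3 adds tracing.")
candidate = registry.load("summarize").format(n=2, text="MLflow 3 adds tracing.")
```

Because earlier versions are never overwritten, rolling back is just a matter of loading an older version number.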

AI Functions in SQL: Now Faster and Multi-Modal

AI Functions enable users to easily access the power of generative AI directly from within SQL. This year, we’re excited to share that AI Functions now have dramatic performance improvements and expanded multi-modal capabilities. AI Functions are now up to 3x faster and 4x lower cost than other vendors on large-scale workloads, enabling you to process large-scale data transformations with unprecedented speed.

Figure 3: Document intelligence arrives at Databricks with the introduction of ai_parse in SQL.

Beyond performance, AI Functions now support multi-modal capabilities, allowing you to work seamlessly across text, images, and other data types. New functions like ai_parse_document make it easy to extract structured information from complex documents, unlocking insights from previously hard-to-process enterprise content.

Figure 4: AI Functions in SQL is now more than 3x faster than the competition on scaled workloads

Storage-Optimized Vector Search in Public Preview

Mosaic AI Vector Search forms the backbone of many retrieval systems, especially RAG agents, and it is one of the fastest-growing products at Databricks. We’ve now completely rewritten the infrastructure from scratch around the principle of separating compute and storage. Our new Storage-Optimized Vector Search can scale up to billions of vectors while delivering 7x lower cost. This breakthrough makes it economically feasible to build sophisticated RAG applications and semantic search systems across your entire data estate. Whether you're powering customer support chatbots or enabling advanced document discovery, you can now scale without prohibitive costs. See our detailed blog post for a technical deep dive and performance benchmarks.
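For readers new to vector retrieval, the core operation behind a RAG lookup can be sketched as a brute-force cosine-similarity search. This is only an illustration of the concept, not how Storage-Optimized Vector Search is implemented; production systems typically rely on approximate nearest-neighbor indexes. The document IDs and three-dimensional embeddings below are made up.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and return the k best matches."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy index: document ID -> embedding.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "api-reference": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]
results = top_k(query, index, k=2)
```

Brute force scans every vector on every query, which is why cost and index design become the central concerns once a collection reaches billions of vectors.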

Serverless GPU Compute in Beta

We’re announcing a major step forward in serverless compute with the introduction of GPU support in the Databricks serverless platform. GPU-powered AI workloads are now more accessible than ever, with this fully managed service eliminating the complexity of GPU management. Whether you're training models, running inference, or processing large-scale data transformations, Serverless GPU compute provides the performance you need without the operational overhead. Fully integrated into the Databricks platform, Serverless GPU compute enables on-demand access to A10G GPUs (Beta today) and H100s (coming soon), without locking you into long-term reservations. Run notebooks on serverless GPUs and submit them as jobs, with the full governance of Unity Catalog.

Figure 5: Serverless notebooks and jobs can now run on GPUs, with A10G in Beta and H100s coming soon

High-Scale Model Serving

Enterprise AI applications today demand higher throughput and lower latencies for production readiness. Our enhanced Model Serving infrastructure now supports over 250,000 queries per second (QPS). Bring your real-time online ML workloads to Databricks, and let us handle the infrastructure and reliability challenges so you can focus on AI model development.

For LLM serving, we’ve now launched a new proprietary in-house inference engine in all regions. The inference engine contains many of our own innovations and custom kernels to accelerate inference of Meta Llama and other open-source LLMs. On common workloads, our inference engine is up to 1.5x faster than properly configured open-source engines such as vLLM-v1. Together with the rest of our LLM serving infrastructure, these innovations mean that serving LLMs on Databricks is simpler, faster, and often lower in total cost than DIY serving solutions.

From chatbots to recommendation engines, your AI services can now scale to handle even the most demanding enterprise workloads.

MCP Support in Databricks

Anthropic’s Model Context Protocol (MCP) is a popular protocol for providing tools and knowledge to large language models. We’ve now integrated MCP directly into the Databricks platform. MCP servers can be hosted with Databricks Apps, giving you a seamless way to deploy and manage MCP-compliant services without additional infrastructure management. You can interact with and test MCP-enabled models directly in our Playground environment, making it easier to experiment with different model configurations and capabilities.

Figure 6: Quickly prototype MCP servers with built-in Playground support

Additionally, you can now connect your agents to Databricks with the launch of Databricks-hosted MCP servers for UC functions, Genie, and Vector Search. To learn more, see our documentation.
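As a rough illustration of what travels between an agent and an MCP server, the sketch below builds a `tools/call` request and reads a tool result. MCP messages are JSON-RPC 2.0; the tool name `search_docs`, its arguments, and the sample response are hypothetical, and the transport layer (and anything specific to Databricks-hosted servers) is deliberately left out.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an ID so responses can be matched


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def extract_text(response_json: str) -> str:
    """Join the text parts of a tool result; raise if an error was reported."""
    response = json.loads(response_json)
    if "error" in response:  # protocol-level JSON-RPC error
        raise RuntimeError(response["error"]["message"])
    result = response["result"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool reported an error")
    return "".join(part["text"] for part in result["content"] if part["type"] == "text")


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call("search_docs", {"query": "refund policy"})

# A made-up response in the shape a server would return for the call above.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "Refunds are issued within 14 days."}],
        "isError": False,
    },
})
answer = extract_text(sample_response)
```

In practice an MCP client library handles this framing for you; the sketch only shows the message shape.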

AI Gateway is Generally Available

Mosaic AI Gateway is now generally available. This unified entry point for all your AI services provides centralized governance, usage logging, and control across your entire AI application portfolio. We’ve also added a host of new capabilities, from automatic fallback between different providers to PII and safety guardrails. With AI Gateway, you can implement rate limit policies, track usage, and enforce safety guardrails on AI workloads, whether they’re running on Databricks or through external services.
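Two of the gateway behaviors named above, rate limiting and provider fallback, can be sketched conceptually in a few lines of Python. This is not AI Gateway's implementation or configuration API; the `RateLimiter` class, the `call_with_fallback` helper, and the stand-in provider functions are hypothetical and exist only to show the control flow.

```python
import time
from typing import Callable, List, Tuple


class RateLimiter:
    """Fixed-window limiter: at most `limit` calls per `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.window_start = clock()
        self.count = 0

    def allow(self) -> bool:
        """Return True and count the call if the current window still has capacity."""
        now = self.clock()
        if now - self.window_start >= self.window_seconds:
            self.window_start = now  # start a fresh window
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


def call_with_fallback(prompt: str,
                       providers: List[Tuple[str, Callable[[str], str]]]) -> Tuple[str, str]:
    """Try each (name, callable) in order; return the first successful (name, reply)."""
    errors = []
    for name, provider in providers:
        try:
            return name, provider(prompt)
        except Exception as exc:  # any provider failure triggers the next fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stand-in providers: the primary always fails, the backup echoes the prompt.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"


served_by, reply = call_with_fallback(
    "hello", [("primary", flaky_primary), ("backup", stable_backup)]
)

# A fake clock makes the limiter's behavior deterministic for the demo.
fake_now = [0.0]
limiter = RateLimiter(limit=2, window_seconds=60.0, clock=lambda: fake_now[0])
decisions = [limiter.allow(), limiter.allow(), limiter.allow()]
fake_now[0] = 61.0  # advance past the window
decisions.append(limiter.allow())
```

Centralizing these policies in a gateway means every client gets the same limits and fallback order without each application reimplementing them.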

Get Started

These announcements represent our continued commitment to making enterprise AI more accessible, performant, and cost-effective. Each innovation builds upon our data intelligence platform, ensuring that your AI applications can leverage the full power of your enterprise data while maintaining the governance and security standards your organization requires.

Ready to explore these new capabilities? Start with our free tier or reach out to your Databricks representative to learn how these innovations can accelerate your AI initiatives.