The need for modernization

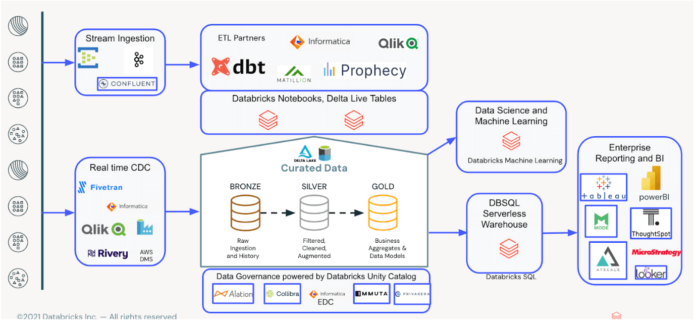

Traditional database solutions like SQL Server have struggled to keep up with the demands of modern data workloads because of limited support for AI/ML and streaming, combined with high costs. Enterprises are increasingly adopting cloud-native solutions like Databricks to gain flexibility, scalability, and cost efficiency while enabling advanced analytics use cases.

Key benefits of Databricks over SQL Server

Unified platform: Combines structured and unstructured data processing with AI/ML capabilities. In addition, Unity Catalog provides comprehensive data governance for all data assets.

Scalability: Through its cloud-native infrastructure, Databricks can scale resources elastically according to workload demands. This architecture enables it to handle large, complex workloads with improved query performance and reduced latency.

Cost efficiency: Pay-as-you-go cloud pricing models reduce infrastructure hardware costs. Lower administrative costs and improved resource utilization also significantly reduce the overall TCO.

Advanced analytics: Databricks provides built-in features for advanced analytics use cases such as AI/ML, GenAI, and real-time streaming. In addition, with Databricks SQL, users can integrate their BI tools of choice, empowering them to perform complex analyses more efficiently.

Architectural deep dive

Migrating from SQL Server to Databricks involves rethinking your data architecture to leverage the strengths of the Lakehouse model. Understanding the key differences between the two platforms is essential for designing an effective migration strategy. Key differences between SQL Server and Databricks:

| Feature | SQL Server | Databricks |
| --- | --- | --- |
| Architecture | Monolithic RDBMS | Open Lakehouse |
| Scalability | Vertical scaling | Horizontal scaling via clusters |
| AI/ML support | Minimal | Built-in support for AI/ML |
| Real-time streaming | Limited | Fully supported |

Modern data warehousing on Databricks

Enterprise data migration

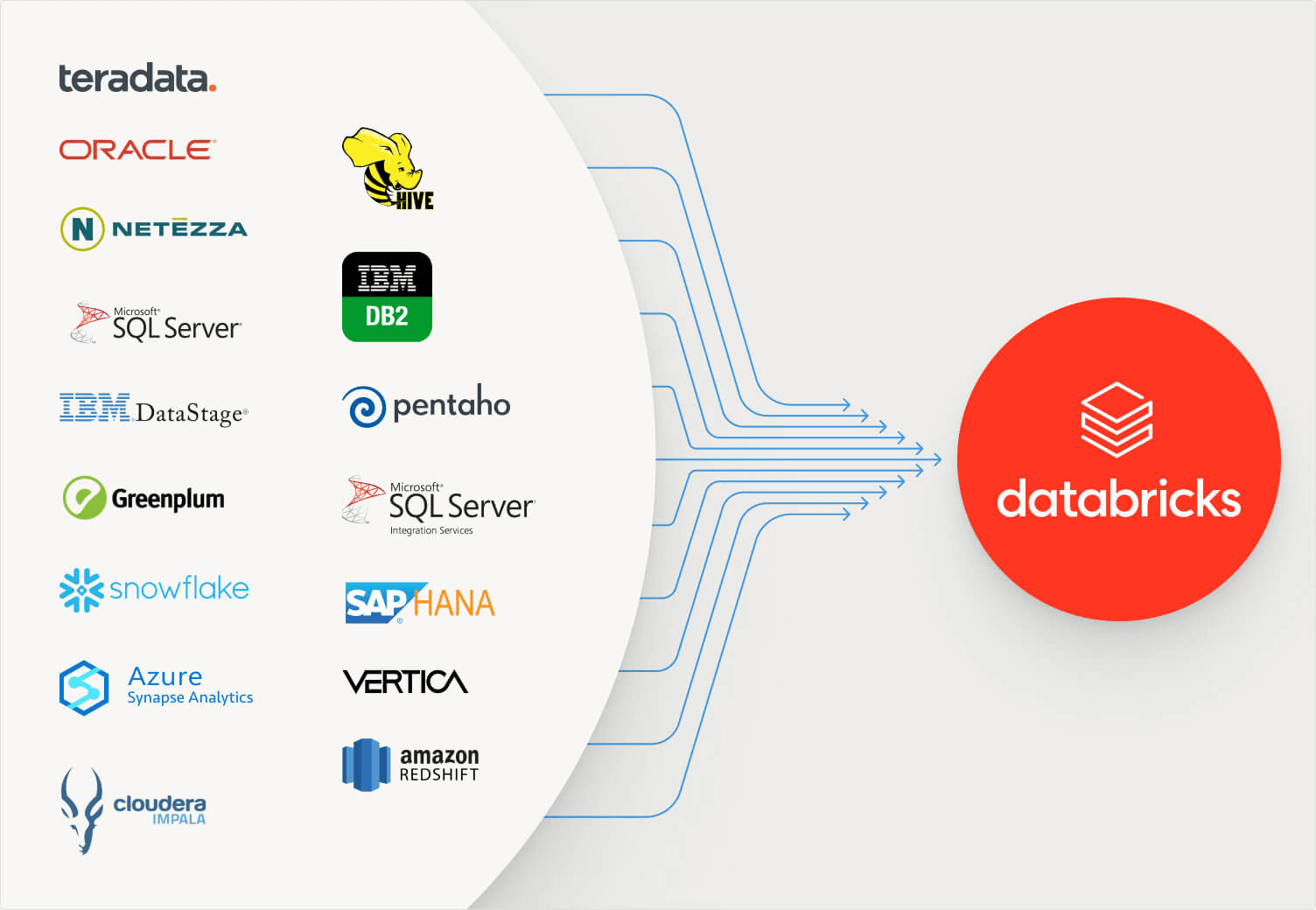

Migrating data from on-premises SQL Server to cloud-based Databricks requires selecting the right tools and techniques based on workload size and complexity.

Recommended approaches for data migration:

Databricks Lakeflow Connect: Lakeflow Connect offers a fully managed SQL Server connector for seamless data ingestion from SQL Server into the Databricks lakehouse. For more information, refer to Ingest data from SQL Server.

Leveraging Databricks Lakehouse Federation: Databricks Lakehouse Federation allows federated queries across different data sources, including SQL Server.

ISV partners: Databricks ISV partners, such as Qlik and Fivetran, can replicate data from SQL Server to Databricks Delta tables.
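For the Lakehouse Federation option, setup typically comes down to two Databricks SQL statements: a connection to the SQL Server instance and a foreign catalog that mirrors one of its databases. The sketch below only builds those statements as strings, so they can be reviewed or run one by one (for example with `spark.sql` in a notebook). The connection name, catalog name, host, database, and secret scope/key names are hypothetical placeholders, and the exact `OPTIONS` keys should be checked against the Lakehouse Federation documentation for SQL Server.

```python
def federation_ddl(
    connection: str,
    catalog: str,
    host: str,
    database: str,
    secret_scope: str,
    port: int = 1433,
) -> list[str]:
    """Build hypothetical Lakehouse Federation DDL for a SQL Server source.

    Credentials are read with the secret() SQL function instead of being
    written into the statement; the scope and key names are placeholders.
    """
    create_connection = (
        f"CREATE CONNECTION IF NOT EXISTS {connection} TYPE sqlserver "
        f"OPTIONS (host '{host}', port '{port}', "
        f"user secret('{secret_scope}', 'sqlserver-user'), "
        f"password secret('{secret_scope}', 'sqlserver-password'))"
    )
    # The foreign catalog exposes one SQL Server database as a Unity Catalog catalog.
    create_catalog = (
        f"CREATE FOREIGN CATALOG IF NOT EXISTS {catalog} "
        f"USING CONNECTION {connection} OPTIONS (database '{database}')"
    )
    return [create_connection, create_catalog]
```

Once the catalog exists, SQL Server tables can be queried in place through the three-level namespace, for example `SELECT * FROM sqlserver_cat.dbo.orders`, which is handy for spot checks while bulk data moves through Lakeflow Connect or a partner tool.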

Code migration

Migrating from T-SQL to Databricks SQL requires refactoring SQL scripts, stored procedures, and ETL workflows into Databricks-compatible formats while optimizing performance. Databricks has mature code converters and migration tooling to make this process smoother and highly automated.

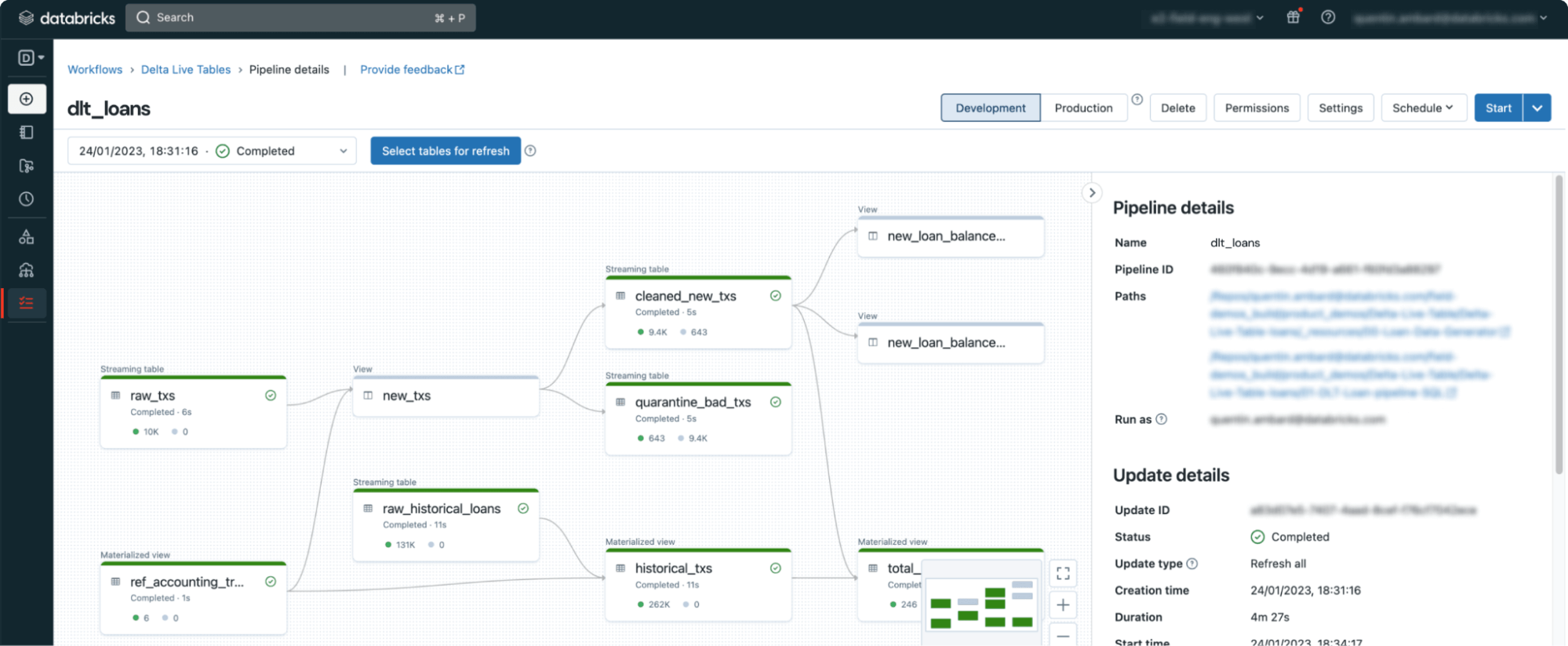

Databricks Code Converter (acquired from BladeBridge) can automatically convert the logic into either Databricks SQL or PySpark notebooks. The BladeBridge conversion tool supports schema conversion (tables and views) and SQL queries (SELECT statements, expressions, functions, user-defined functions, and so on). In addition, stored procedures can be converted to modular Databricks workflows, SQL Scripting, or DLT pipelines.
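To make concrete what automated conversion has to handle, here is a deliberately tiny, hypothetical rewriter for a few common T-SQL idioms: `GETDATE()` becomes `current_timestamp()`, `ISNULL(x, default)` becomes `coalesce(x, default)`, bracketed identifiers become backtick-quoted, and a leading `SELECT TOP n` becomes a trailing `LIMIT n`. It is not the BladeBridge converter and uses regular expressions rather than a real SQL parser, so it only covers simple single-statement queries.

```python
import re

# Hypothetical rewrite rules for a few T-SQL idioms (not the BladeBridge rule set).
_FUNCTION_RULES = [
    (re.compile(r"\bGETDATE\(\)", re.IGNORECASE), "current_timestamp()"),
    # T-SQL ISNULL(x, default) behaves like coalesce(x, default).
    (re.compile(r"\bISNULL\(", re.IGNORECASE), "coalesce("),
]
# [column name] -> `column name`
_BRACKETED_IDENTIFIER = re.compile(r"\[([^\]]+)\]")
# Leading "SELECT TOP n" on a simple, single-statement query.
_SELECT_TOP = re.compile(r"^\s*SELECT\s+TOP\s+(\d+)\s+", re.IGNORECASE)


def convert_tsql(query: str) -> str:
    """Rewrite a simple T-SQL SELECT into Databricks SQL syntax."""
    out = query.strip().rstrip(";")
    for pattern, replacement in _FUNCTION_RULES:
        out = pattern.sub(replacement, out)
    out = _BRACKETED_IDENTIFIER.sub(r"`\1`", out)
    top = _SELECT_TOP.match(out)
    if top:
        # Move the row limit from the front (TOP n) to the end (LIMIT n).
        out = "SELECT " + out[top.end():] + f" LIMIT {top.group(1)}"
    return out
```

Even this toy shows why real converters parse the full grammar: the bracket rule would also rewrite `LIKE '[a-z]%'` character classes, and variants such as `TOP n PERCENT` or `WITH TIES` are not handled at all.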

ETL Workflow modernization

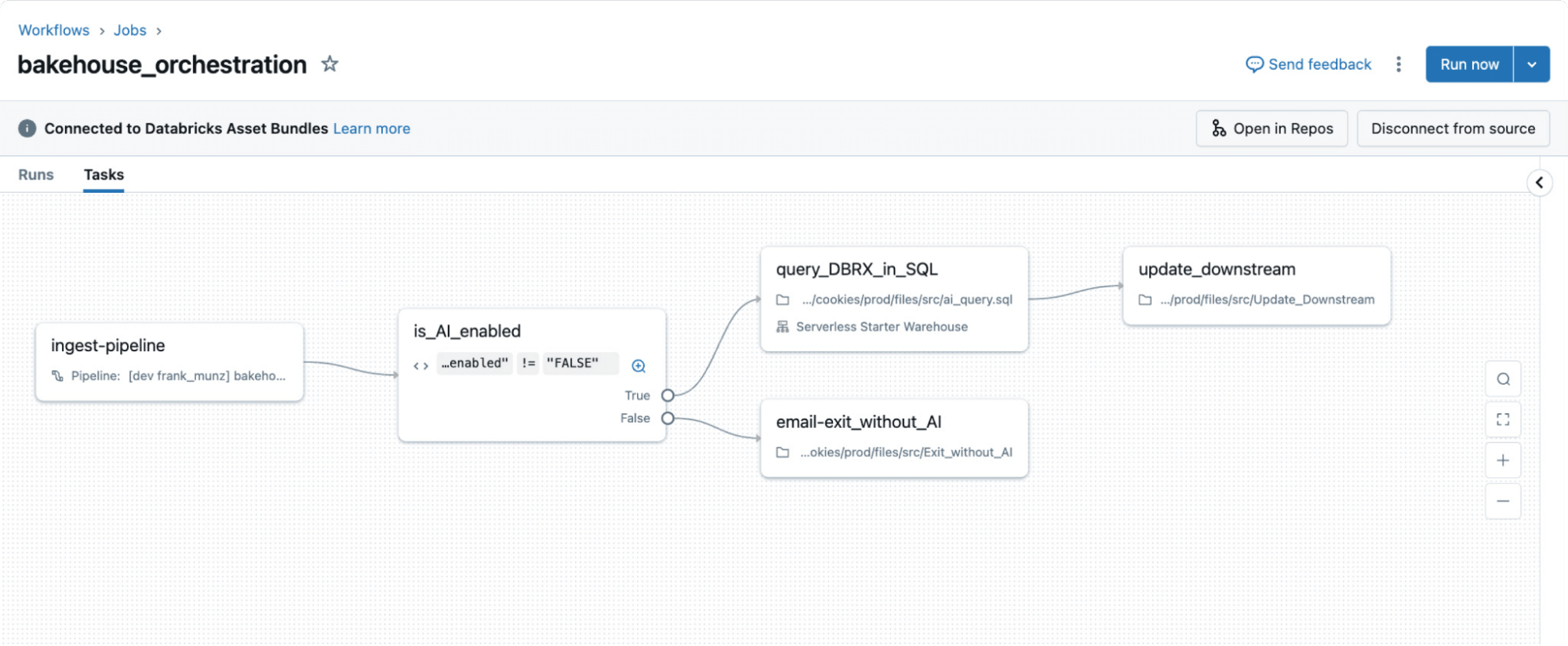

Databricks affords a number of choices for modernizing ETL pipelines, simplifying advanced workflows historically managed by SSIS or SQL Agent.

Choices for ETL orchestration on Databricks:

Databricks Workflows: Native orchestration software supporting Python scripts, Notebooks, dbt transformations, and so forth.

DLT (DLT): Declarative pipelines with built-in information high quality checks.

Databricks Workflows
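A SQL Agent job or SSIS package with ordered steps maps naturally onto a multi-task Databricks job: each step becomes a task, and step precedence becomes `depends_on`. The sketch below builds such a job definition as a Python dictionary shaped like a Jobs API 2.1 `tasks` payload (`task_key`, `depends_on`, `notebook_task.notebook_path`). The job name and notebook paths are hypothetical, compute settings are omitted, and the payload should be checked against the Jobs API reference before submission. Because a job must form a DAG, the helper also rejects unknown upstream tasks and cycles, using Kahn's topological sort.

```python
from collections import deque


def build_job_spec(name: str, steps: dict[str, tuple[str, list[str]]]) -> dict:
    """Turn {task_key: (notebook_path, [upstream task keys])} into a job payload.

    Raises ValueError for unknown upstream tasks or dependency cycles.
    """
    for key, (_, upstream) in steps.items():
        missing = [u for u in upstream if u not in steps]
        if missing:
            raise ValueError(f"task {key!r} depends on unknown tasks {missing}")

    # Kahn's algorithm: if some task never reaches in-degree 0, there is a cycle.
    indegree = {key: len(upstream) for key, (_, upstream) in steps.items()}
    downstream: dict[str, list[str]] = {key: [] for key in steps}
    for key, (_, upstream) in steps.items():
        for u in upstream:
            downstream[u].append(key)
    ready = deque(key for key, degree in indegree.items() if degree == 0)
    ordered = []
    while ready:
        key = ready.popleft()
        ordered.append(key)
        for child in downstream[key]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)
    if len(ordered) != len(steps):
        raise ValueError("task dependencies contain a cycle")

    # Emit tasks in dependency order; root tasks carry no depends_on field.
    tasks = []
    for key in ordered:
        path, upstream = steps[key]
        task = {"task_key": key, "notebook_task": {"notebook_path": path}}
        if upstream:
            task["depends_on"] = [{"task_key": u} for u in upstream]
        tasks.append(task)
    return {"name": name, "tasks": tasks}
```

For example, a three-step nightly load becomes `build_job_spec("nightly_sales_load", {"load_bronze": ("/Workspace/etl/load_bronze", []), "build_silver": ("/Workspace/etl/build_silver", ["load_bronze"]), "build_gold": ("/Workspace/etl/build_gold", ["build_silver"])})`, which can be serialized with `json.dumps` for review.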

Databricks DLT
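DLT attaches data quality rules to datasets as expectations, each with a policy for invalid records: keep them and record the violation, drop them, or fail the update. The plain-Python model below imitates those three policies on a list of rows so the behavior is easy to reason about and test. It is not the `dlt` API (in a real pipeline the rules are declared with decorators such as `@dlt.expect`, `@dlt.expect_or_drop`, and `@dlt.expect_or_fail`), and the rule names and sample rows are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Literal

Row = dict[str, Any]


@dataclass(frozen=True)
class Expectation:
    name: str
    check: Callable[[Row], bool]
    # "retain" ~ expect, "drop" ~ expect_or_drop, "fail" ~ expect_or_fail
    on_violation: Literal["retain", "drop", "fail"] = "retain"


class ExpectationFailed(Exception):
    """Raised when a row violates an expectation whose policy is "fail"."""


def apply_expectations(
    rows: list[Row], rules: list[Expectation]
) -> tuple[list[Row], dict[str, int]]:
    """Return the surviving rows plus a violation count per expectation."""
    kept: list[Row] = []
    violations = {rule.name: 0 for rule in rules}
    for row in rows:
        keep = True
        for rule in rules:
            if rule.check(row):
                continue
            violations[rule.name] += 1
            if rule.on_violation == "fail":
                raise ExpectationFailed(f"{rule.name} violated by {row}")
            if rule.on_violation == "drop":
                keep = False
        if keep:
            kept.append(row)
    return kept, violations
```

With a `drop` rule on a missing `id` and a `retain` rule on negative amounts, a row with no `id` disappears while a negative amount is only counted, which mirrors how the violation metrics let you monitor quality without blocking the pipeline.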

BI and analytics instruments integration

Databricks SQL enables organizations to meet data warehousing needs and support downstream applications and BI dashboards. Repointing BI tools like Power BI or Tableau is essential after migrating data pipelines to ensure business continuity.

Microsoft Power BI, a common downstream application in many customer environments, often runs on top of SQL Server's serving layer.

Power BI integration best practices

Use DirectQuery mode for real-time analytics on Delta tables; DirectQuery can be 2 to 5x faster with Databricks than with SQL Server.

Use materialized views in Databricks SQL warehouses to precompute aggregations for faster dashboards.

Use serverless SQL warehouses for the best performance on high-concurrency, low-latency workloads.
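The materialized-view recommendation usually means pre-aggregating a large fact table at the grain the dashboard actually displays, so Power BI queries scan a small rollup instead of raw rows. The helper below only renders such a `CREATE MATERIALIZED VIEW` statement for a Databricks SQL warehouse; the table, column, and view names are hypothetical, and refresh scheduling is left out.

```python
def rollup_mv_ddl(target: str, source: str, dimensions: list[str], measure: str) -> str:
    """Render a hypothetical rollup of `measure` by `dimensions` as a materialized view."""
    if not dimensions:
        raise ValueError("at least one grouping column is required")
    dims = ", ".join(dimensions)
    return (
        f"CREATE MATERIALIZED VIEW {target} AS\n"
        f"SELECT {dims},\n"
        f"       SUM({measure}) AS total_{measure},\n"
        "       COUNT(*) AS row_count\n"
        f"FROM {source}\n"
        f"GROUP BY {dims}"
    )
```

For instance, `rollup_mv_ddl("main.sales.daily_region_totals", "main.sales.orders", ["order_date", "region"], "amount")` produces a view that a daily sales-by-region report can read in DirectQuery mode without touching the underlying order rows.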

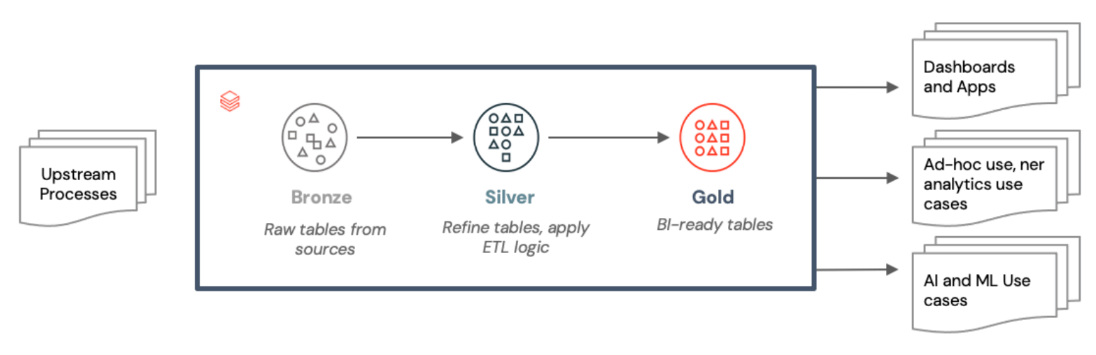

The future-state architecture shown above works well for optimizing BI models and semantic layers to align with business needs. It includes bronze, silver, and gold layers that feed dashboards, applications, AI, and ML use cases.
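The bronze, silver, and gold layers can be illustrated with a toy flow over in-memory records: bronze keeps rows exactly as ingested, silver casts types, skips malformed rows, and deduplicates on the business key, and gold aggregates silver into a shape a dashboard can read directly. This is a plain-Python sketch of the layering idea only; on Databricks each layer would be a Delta table, and the field names here are hypothetical.

```python
from collections import defaultdict
from decimal import Decimal, InvalidOperation


def to_silver(bronze: list[dict[str, str]]) -> list[dict]:
    """Cast raw string fields, skip malformed rows, keep the last row per order_id."""
    latest: dict[int, dict] = {}
    for raw in bronze:
        try:
            row = {
                "order_id": int(raw["order_id"]),
                "region": raw["region"].strip().upper(),
                "amount": Decimal(raw["amount"]),
            }
        except (KeyError, ValueError, InvalidOperation):
            continue  # malformed row; a real pipeline would quarantine it
        latest[row["order_id"]] = row  # later duplicates overwrite earlier ones
    return list(latest.values())


def to_gold(silver: list[dict]) -> dict[str, Decimal]:
    """Aggregate silver rows into the total amount per region."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for row in silver:
        totals[row["region"]] += row["amount"]
    return dict(totals)
```

Keeping bronze untouched means silver and gold can always be rebuilt when cleansing rules change, which is a large part of why the layered design suits a phased migration.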

Validation framework

Validation ensures that migrated datasets maintain accuracy and consistency across platforms. Recommended validation steps:

Perform schema checks between the source (SQL Server) and the target (Databricks).

Compare row counts and aggregate values using automated tools like Remorph Reconcile or DataCompy.

Run parallel pipelines during a transitional phase to verify query results.
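The first two validation steps can be sketched in plain Python: map SQL Server column types to their usual Databricks SQL equivalents and compare them with the target schema, then compare row counts and column aggregates between source and target profiles. The type mapping covers only a few common types and is an assumption to extend for your schemas; the profile dictionaries stand in for results you would collect by running `COUNT(*)` and `SUM(...)` queries on each side. Dedicated tools such as Remorph Reconcile or DataCompy go further, down to row-level differences.

```python
from decimal import Decimal
from typing import Union

# Assumed mapping for a few common SQL Server types; extend as needed.
TYPE_MAP = {
    "int": "int",
    "bigint": "bigint",
    "bit": "boolean",
    "float": "double",
    "date": "date",
    "datetime2": "timestamp",
    "nvarchar": "string",
    "varchar": "string",
}


def _norm(sql_type: str) -> str:
    return sql_type.lower().replace(" ", "")


def schema_diffs(source: dict[str, str], target: dict[str, str]) -> list[str]:
    """Compare {column: type} maps; unmapped types such as decimal(p,s) must match exactly."""
    problems = []
    for column, src_type in source.items():
        src = _norm(src_type)
        expected = TYPE_MAP.get(src.split("(")[0], src)
        actual = target.get(column)
        if actual is None:
            problems.append(f"missing column {column}")
        elif _norm(actual) != expected:
            problems.append(f"{column}: expected {expected}, found {actual}")
    for column in sorted(target.keys() - source.keys()):
        problems.append(f"unexpected column {column}")
    return problems


Metric = Union[int, Decimal]


def reconcile(
    source: dict[str, Metric], target: dict[str, Metric], tolerance: Decimal = Decimal("0")
) -> list[str]:
    """Compare profile metrics such as {"row_count": ..., "sum_amount": ...}."""
    mismatches = []
    for metric in sorted(source.keys() | target.keys()):
        src, tgt = source.get(metric), target.get(metric)
        if src is None or tgt is None:
            mismatches.append(f"{metric}: missing on one side")
        elif abs(src - tgt) > tolerance:
            mismatches.append(f"{metric}: source={src} target={tgt}")
    return mismatches
```

An empty list from both functions is the signal to move a table into the parallel-run phase; a non-zero `tolerance` is only appropriate for floating-point measures.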

Knowledge transfer and organizational readiness

Upskilling teams on Databricks concepts, Delta Lake architecture, Databricks SQL, and performance optimization is essential for long-term success. Training recommendations:

Train analysts on Databricks SQL warehouse features.

Provide hands-on labs for engineers transitioning from SSIS to DLT pipelines.

Document migration patterns and troubleshooting playbooks.

Predictable, low-risk migrations

Migrating from SQL Server to Databricks represents a significant shift, not just in technology but in the approach to data management and analytics. By planning thoroughly, addressing the key differences between the platforms, and leveraging Databricks' unique capabilities, organizations can achieve a successful migration that delivers improved performance, scalability, and cost-effectiveness.

The migration journey is an opportunity to modernize where your data lives and how you work with it. By following these tips and avoiding common pitfalls, your organization can transition smoothly to the Databricks Platform and unlock new possibilities for data-driven decision-making.

Remember that while the technical aspects of migration are important, equal attention should be paid to organizational readiness, knowledge transfer, and adoption strategies to ensure long-term success.

What to do next

Migration can be challenging. There will always be tradeoffs to balance and unexpected issues and delays to manage. You need proven partners and solutions for the people, process, and technology aspects of the migration. We recommend trusting the experts at Databricks Professional Services and our certified migration partners, who have extensive experience delivering high-quality migration solutions promptly. Reach out to get your migration assessment started.

You should also check out the Modernizing Your Data Estate by Migrating to Azure Databricks eBook.

We also have a complete SQL Server to Databricks Migration Guide; get your free copy here.