Why migrate from Netezza to Databricks?

The limitations of traditional enterprise data warehouse (EDW) appliances like Netezza have become increasingly apparent. These systems have a tightly coupled storage, compute, and memory architecture, which limits scalability. Expanding capacity often requires costly hardware upgrades, and even in the cloud, the result is a rigid architecture and high costs. As organizations look to modernize their Netezza EDW platform, migrating to the Databricks Lakehouse offers a scalable, cloud-native way to overcome these challenges. It provides not only one of the best price-performant cloud data warehouses but also a powerful advanced analytics and Data Intelligence Platform, including streaming and unified governance capabilities, which future-proofs their data architecture.

Key benefits of migrating to Databricks

Migrating from Netezza to Databricks isn't just a lift-and-shift exercise; it's an opportunity to modernize your data architecture and unlock broader capabilities. By moving to a lakehouse architecture, organizations can break free from the constraints of tightly coupled, hardware-dependent systems and adopt a more scalable, flexible, and future-ready platform. Below are some of the key benefits that make Databricks a compelling target for Netezza migrations.

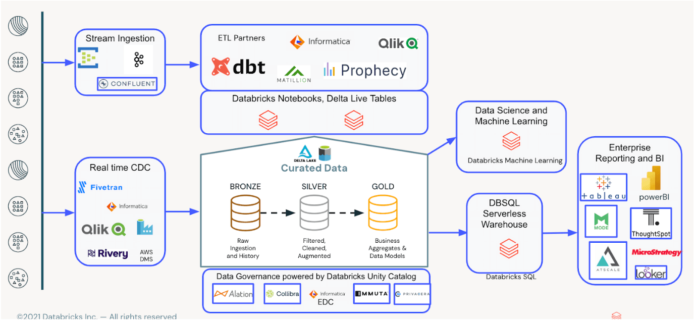

Unified platform: Combines structured and unstructured data processing with AI/ML capabilities. Databricks maintains a single copy of data in cloud storage and provides multiple processing engines for data warehousing, machine learning, and Generative AI applications, simplifying management and increasing productivity.

Scalability: Unlike appliance-based Netezza, Databricks offers virtually unlimited scalability through cloud-native infrastructure. Resources scale elastically based on workload demands, significantly reducing infrastructure and licensing costs while maintaining performance even under intense query loads.

Cost efficiency: Reduces infrastructure costs with pay-as-you-go cloud pricing models.

Advanced analytics: Databricks delivers advanced analytics features unavailable on traditional warehouse appliances, such as built-in AI, ML, and GenAI functionality. The platform integrates seamlessly with BI tools (Tableau, Power BI, ThoughtSpot) and supports stored procedure-like SQL scripting, empowering users to perform complex analyses more efficiently.

Simplified data governance: With Unity Catalog, Databricks simplifies data governance, offering centralized security, comprehensive auditing, end-to-end data lineage, and fine-grained access control across all data assets.

AI: Securely connect your data with any AI model to build accurate, domain-specific applications. Databricks has infused AI throughout the Data Intelligence Platform to optimize performance and to build intelligent experiences.

Redesigning for the Lakehouse architecture


Migrating from Netezza to Databricks is an opportunity to simplify and modernize your data architecture. The lakehouse architecture replaces rigid, appliance-based systems with a scalable, cloud-native approach that supports both analytics and AI on a unified platform.

A common approach is to organize the lakehouse into layered zones:

Bronze Layer: Ingests raw, unfiltered data from various sources into a centralized landing zone. This layer preserves data fidelity for auditing and replay purposes.

Silver Layer: Hosts cleaned, standardized, and domain-modeled data. This is typically where most transformations and business logic are applied.

Gold Layer: Provides business-ready datasets (star schemas, marts, sandboxes, and data science zones) tailored for consumption by analysts, data scientists, and applications.

This layered structure promotes clarity, reusability, and consistency. It also breaks down data silos, making it easier to govern data and collaborate across teams while maintaining data quality and access controls.
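To make the layers concrete, here is a minimal, in-memory Python sketch of one bronze-to-silver-to-gold flow. It assumes hypothetical order records, hypothetical column names, and hypothetical helper functions (`to_bronze`, `to_silver`, `to_gold`). Plain Python lists stand in for Delta tables. The silver step drops rows that fail simple quality rules. This loosely mimics the idea behind DLT "expect or drop" expectations, but it does not use the DLT API.

```python
from typing import Callable

# Hypothetical rows as they might land from a Netezza export file (all strings).
RAW_ROWS = [
    {"order_id": "1", "customer": " alice ", "amount": "120.50", "region": "EMEA"},
    {"order_id": "2", "customer": "Bob", "amount": "not-a-number", "region": "AMER"},
    {"order_id": "3", "customer": "carol", "amount": "75.00", "region": "EMEA"},
    {"order_id": "", "customer": "Dave", "amount": "10.00", "region": "APAC"},
]


def _is_number(value: str) -> bool:
    try:
        float(value)
        return True
    except ValueError:
        return False


# Hypothetical quality rules in the spirit of "expect or drop" (not the DLT API).
EXPECTATIONS: dict[str, Callable[[dict], bool]] = {
    "valid_order_id": lambda r: r["order_id"] != "",
    "numeric_amount": lambda r: _is_number(r["amount"]),
}


def to_bronze(rows: list[dict]) -> list[dict]:
    """Bronze: keep every record exactly as received, tagged with its source."""
    return [dict(row, _source="netezza_export") for row in rows]


def to_silver(bronze: list[dict]) -> tuple[list[dict], dict[str, int]]:
    """Silver: drop rows that fail any rule, then clean and type the survivors."""
    silver = []
    dropped = {name: 0 for name in EXPECTATIONS}
    for row in bronze:
        failed = [name for name, check in EXPECTATIONS.items() if not check(row)]
        for name in failed:
            dropped[name] += 1
        if failed:
            continue
        silver.append({
            "order_id": int(row["order_id"]),
            "customer": row["customer"].strip().title(),
            "amount": float(row["amount"]),
            "region": row["region"],
        })
    return silver, dropped


def to_gold(silver: list[dict]) -> dict[str, float]:
    """Gold: a business-ready aggregate, here revenue per region."""
    revenue: dict[str, float] = {}
    for row in silver:
        revenue[row["region"]] = revenue.get(row["region"], 0.0) + row["amount"]
    return revenue


bronze = to_bronze(RAW_ROWS)
silver, dropped = to_silver(bronze)
gold = to_gold(silver)
print(gold)     # {'EMEA': 195.5}
print(dropped)  # {'valid_order_id': 1, 'numeric_amount': 1}
```

Bronze keeps the untouched records. If a silver rule changes, it can therefore be replayed against the same raw input, which is the auditing and replay benefit of the bronze layer.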

Modern data warehousing on Databricks

Data migration strategies

Migrating data from Netezza requires careful planning to ensure accuracy, performance, and minimal disruption. The best approach depends on the size and complexity of your workloads and your existing infrastructure. Below are proven strategies for moving data from Netezza into Databricks efficiently.

Choose the right strategy based on workload size and complexity:

NZUNLOAD + Auto Loader: Export from Netezza to cloud storage, then ingest with Databricks Auto Loader.

Ingestion Partners: Use partner tools with change data capture (CDC) support.

Cloud Tools: AWS DMS, Azure Data Factory, or GCP DMS for streamlined migration.

JDBC/ODBC Drivers: Direct access via Databricks connectors.
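Of these options, the partner CDC route is the least self-explanatory. A CDC feed delivers a stream of insert, update, and delete events. The target table must end up reflecting only the latest state of each key. On Databricks this is commonly handled with a Delta `MERGE INTO` statement or with DLT's `APPLY CHANGES` API.

The plain-Python sketch below shows only the logic. It assumes a hypothetical event format with `op`, `id`, `seq`, and `row` fields, and it uses a dict as a stand-in for the target table.

```python
from typing import Any

Row = dict[str, Any]

# Hypothetical CDC events: operation, primary key, sequence number, row image.
CDC_EVENTS: list[dict[str, Any]] = [
    {"op": "INSERT", "id": 1, "seq": 1, "row": {"id": 1, "tier": "gold"}},
    {"op": "INSERT", "id": 2, "seq": 2, "row": {"id": 2, "tier": "silver"}},
    {"op": "UPDATE", "id": 1, "seq": 4, "row": {"id": 1, "tier": "platinum"}},
    # Arrives late in the feed but is older than seq 4, so it must not win.
    {"op": "UPDATE", "id": 1, "seq": 3, "row": {"id": 1, "tier": "stale"}},
    {"op": "DELETE", "id": 2, "seq": 5, "row": None},
]


def apply_changes(target: dict[int, Row], events: list[dict[str, Any]]) -> dict[int, Row]:
    """Return a new keyed table with one batch of CDC events applied in sequence order."""
    result = dict(target)
    for event in sorted(events, key=lambda e: e["seq"]):
        if event["op"] == "DELETE":
            result.pop(event["id"], None)
        else:
            # INSERT and UPDATE are both treated as an upsert on the key.
            result[event["id"]] = event["row"]
    return result


current = apply_changes({}, CDC_EVENTS)
print(current)  # {1: {'id': 1, 'tier': 'platinum'}}
```

Sorting by `seq` is what keeps the late-arriving `seq=3` update from overwriting the newer `seq=4` state. This sketch only orders events within a single batch. A production pipeline also has to handle out-of-order events across batches, which is the purpose of sequencing conditions in a `MERGE` or the `SEQUENCE BY` clause of `APPLY CHANGES`.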

Code and logic migration

Netezza SQL scripts, stored procedures, and ETL pipelines running on Netezza must be translated into Databricks-compatible formats, with performance optimized along the way.

Automated code conversion with BladeBridge

BladeBridge, Databricks' migration tooling, can automatically convert the Netezza SQL dialect into Databricks SQL scripts.

BladeBridge can automate 80-90% of the NZSQL-to-Databricks SQL conversion, including converting stored procedures to modular Databricks workflows, SQL Scripting, or DLT pipelines.
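The following is not BladeBridge's approach or output. It is a deliberately tiny, regex-based Python sketch that only illustrates the kind of rewrites an NZSQL-to-Databricks conversion involves:

- dropping the Netezza-specific `DISTRIBUTE ON` clause
- mapping `ORGANIZE ON` (clustered base tables) to liquid clustering's `CLUSTER BY`
- mapping the `BYTEINT` and `NVARCHAR(n)` types
- turning `GENERATE STATISTICS` into `ANALYZE TABLE ... COMPUTE STATISTICS`

The rule list and the `convert_nzsql` function are hypothetical. The mappings are reasonable defaults, not the only correct choices.

```python
import re

# Hypothetical, deliberately tiny rule set: (pattern, replacement) pairs applied in order.
RULES = [
    # DISTRIBUTE ON controls Netezza data-slice placement; there is no direct
    # Databricks equivalent, so the clause is dropped.
    (r"\s*DISTRIBUTE\s+ON\s+(?:RANDOM|\([^)]*\))", ""),
    # ORGANIZE ON (clustered base tables) maps loosely to liquid clustering.
    (r"\bORGANIZE\s+ON\s+\(([^)]*)\)", r"CLUSTER BY (\1)"),
    # Netezza-specific types mapped to Databricks SQL types.
    (r"\bBYTEINT\b", "TINYINT"),
    (r"\bNVARCHAR\s*\(\s*\d+\s*\)", "STRING"),
    # Statistics collection.
    (r"\bGENERATE\s+(?:EXPRESS\s+)?STATISTICS\s+ON\s+([\w.]+)",
     r"ANALYZE TABLE \1 COMPUTE STATISTICS"),
]


def convert_nzsql(statement: str) -> str:
    """Apply each rewrite rule, case-insensitively, to one NZSQL statement."""
    for pattern, replacement in RULES:
        statement = re.sub(pattern, replacement, statement, flags=re.IGNORECASE)
    return statement


netezza_ddl = """CREATE TABLE sales (
    sale_id BIGINT,
    region NVARCHAR(20),
    is_online BYTEINT
) DISTRIBUTE ON (sale_id) ORGANIZE ON (region);"""

print(convert_nzsql(netezza_ddl))
print(convert_nzsql("GENERATE STATISTICS ON sales;"))
```

Regex rules like these break quickly on nested parentheses, comments, and string literals. That is one reason a real converter needs to parse the SQL rather than pattern-match it.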

Modernizing your ETL Pipelines

Databricks offers several options for modernizing ETL pipelines, simplifying complex workflows traditionally managed by tools like Informatica or Control-M. Options for ETL orchestration on Databricks:

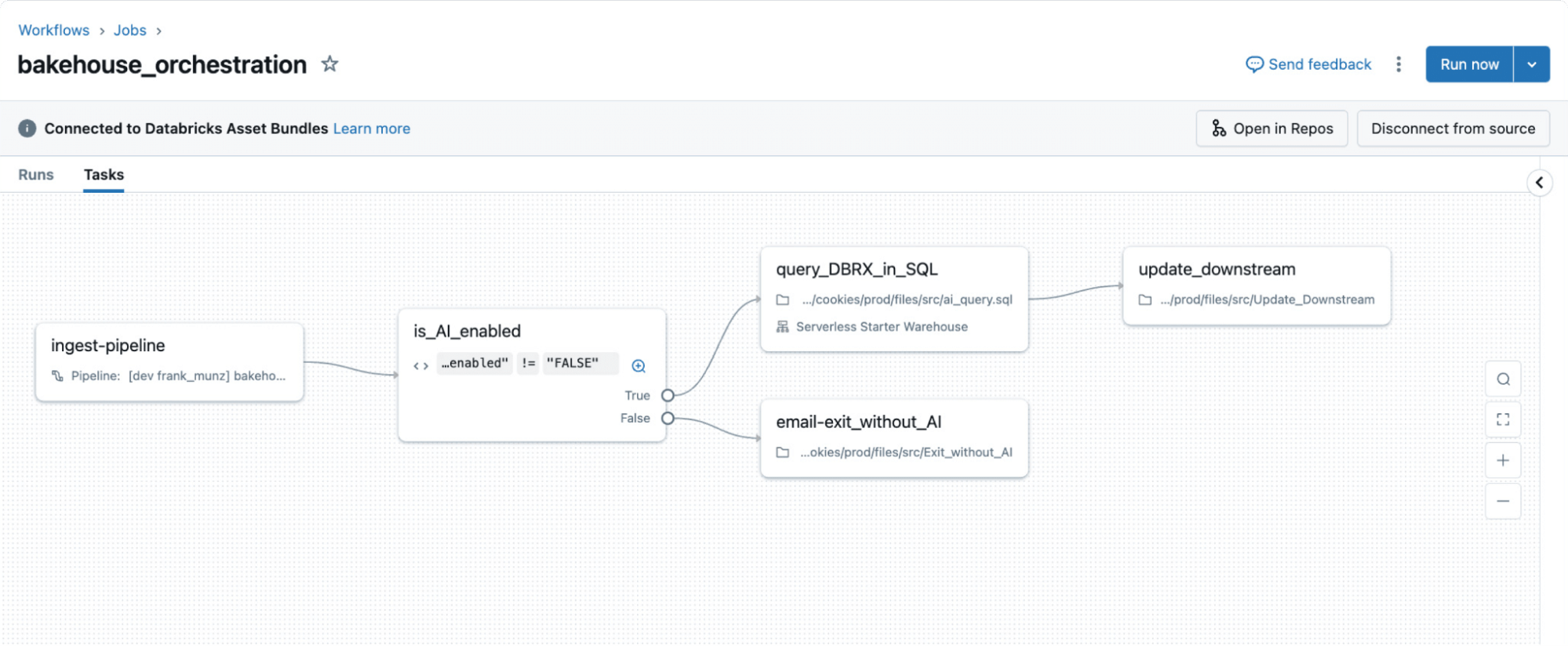

Databricks Workflows: Native orchestration tool supporting Python scripts, notebooks, dbt transformations, and more.

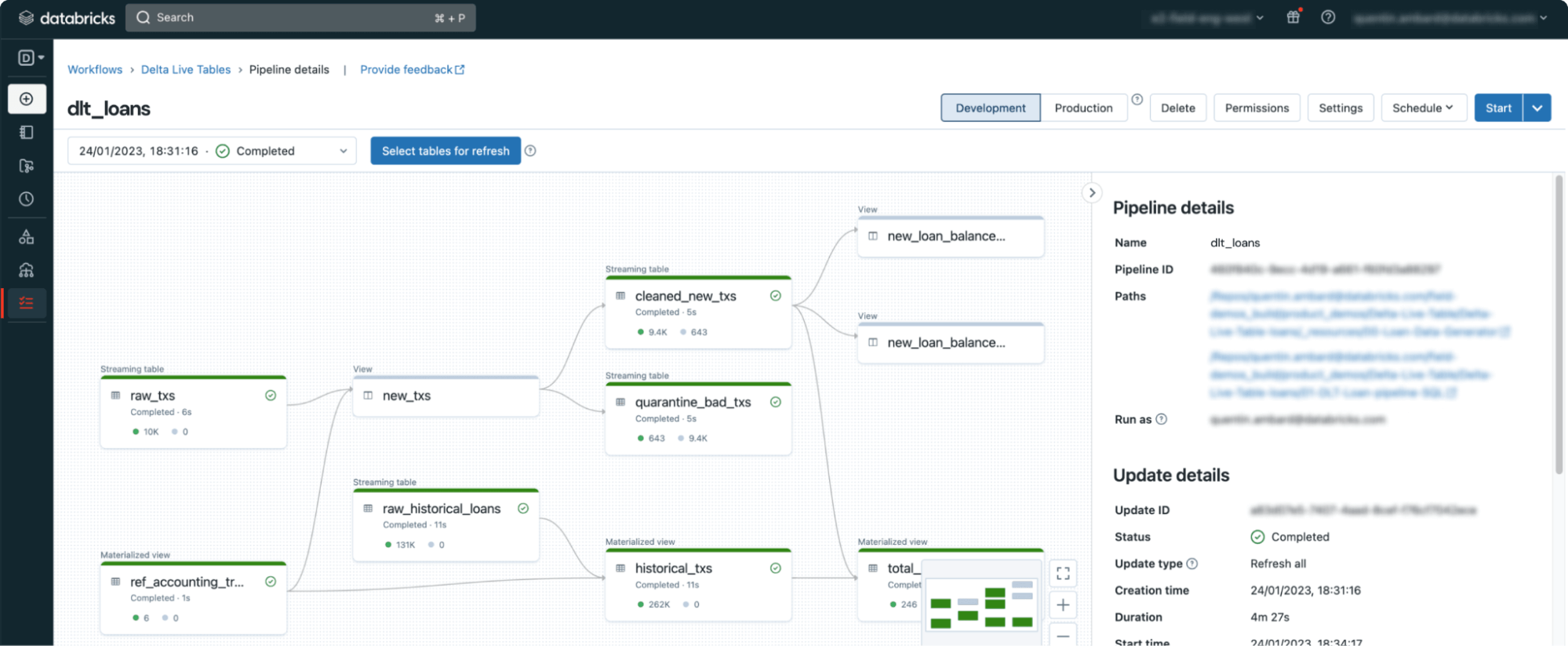

DLT Pipelines: Declarative pipelines with built-in data quality checks.

External Tools: Integrate Apache Airflow or Azure Data Factory via REST APIs.

Figures above: a Databricks Workflow and a DLT Pipeline.
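A large part of moving off an external scheduler such as Control-M is typically re-expressing job chains as task dependencies. The sketch below uses a hypothetical job definition that borrows the field names of the Databricks Jobs API (`task_key`, `depends_on`, `notebook_task`, `notebook_path`). The job name, task names, and notebook paths are made up.

The sketch does not call Databricks. It only uses Python's standard `graphlib` module to show which tasks could run in parallel once their upstream tasks finish.

```python
from graphlib import TopologicalSorter

# Hypothetical job definition shaped like a Databricks Jobs API payload
# (task_key / depends_on / notebook_task); names and paths are made up.
job_spec = {
    "name": "sales_etl_migrated_from_control_m",
    "tasks": [
        {"task_key": "ingest_bronze",
         "notebook_task": {"notebook_path": "/Workspace/etl/ingest_bronze"}},
        {"task_key": "silver_orders",
         "depends_on": [{"task_key": "ingest_bronze"}],
         "notebook_task": {"notebook_path": "/Workspace/etl/silver_orders"}},
        {"task_key": "silver_customers",
         "depends_on": [{"task_key": "ingest_bronze"}],
         "notebook_task": {"notebook_path": "/Workspace/etl/silver_customers"}},
        {"task_key": "gold_sales_mart",
         "depends_on": [{"task_key": "silver_orders"}, {"task_key": "silver_customers"}],
         "notebook_task": {"notebook_path": "/Workspace/etl/gold_sales_mart"}},
    ],
}


def execution_stages(spec: dict) -> list[list[str]]:
    """Group tasks into stages whose members have no unmet dependencies."""
    # graphlib expects {node: set_of_predecessors}.
    graph = {
        task["task_key"]: {dep["task_key"] for dep in task.get("depends_on", [])}
        for task in spec["tasks"]
    }
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


for number, stage in enumerate(execution_stages(job_spec), start=1):
    print(number, stage)
```

The stages are only a way to visualize parallelism. A scheduler can start each task as soon as its own dependencies finish, without waiting for a whole stage to complete.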

BI and analytics integration

After data is migrated and pipelines are modernized, the next step is enabling access for analytics and reporting. Databricks provides built-in tools and seamless integrations with popular BI platforms. Analysts and business users can query data, build dashboards, and explore insights without having to move data out of the lakehouse.

Databricks offers a serverless SQL warehouse with many features that make BI easier, such as:

AI/BI Genie: Our AI models continuously learn and adapt to your data and evolving business concepts, providing accurate answers in the context of your organization through natural-language Q&A. With AI/BI Genie, you can get answers to questions not addressed in your BI dashboards.

AI-driven Dashboards: Simply describe the visual you want in natural language and let Databricks Assistant generate the chart. Then, adjust the chart using point and click.

Easy BI tool integration: Databricks SQL easily connects BI tools (Power BI, Tableau, and more) to your lakehouse, with fast performance, low latency, and high user concurrency on your data lake.

Post-migration validation

Validation ensures that migrated datasets remain accurate and consistent across platforms. Recommended validation steps:

Perform schema checks between the source (Netezza) and the target (Databricks).

Compare row counts and aggregate values using automated tools like Remorph Reconcile or DataCompy.

Run parallel pipelines during a transitional phase to verify query results.
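Remorph Reconcile and DataCompy automate these checks. The following standard-library Python sketch is not their API; it only illustrates the underlying comparisons. It assumes hypothetical schemas, a hypothetical list of type pairs treated as equivalent, and small in-memory row sets.

It runs three checks:

- compares the two schemas
- compares row counts and column sums
- compares an order-independent fingerprint of each row set, because the two platforms need not return rows in the same order

```python
import hashlib
from decimal import Decimal

# Hypothetical column types read from each platform's catalog.
NETEZZA_SCHEMA = {"order_id": "BIGINT", "amount": "NUMERIC(12,2)", "region": "NVARCHAR(10)"}
DATABRICKS_SCHEMA = {"order_id": "BIGINT", "amount": "DECIMAL(12,2)", "region": "STRING"}

# Hypothetical type pairs the team has agreed to treat as equivalent.
EQUIVALENT_TYPES = {("NUMERIC(12,2)", "DECIMAL(12,2)"), ("NVARCHAR(10)", "STRING")}


def schema_diff(source: dict[str, str], target: dict[str, str]) -> list[str]:
    """List missing, extra, and type-mismatched columns."""
    issues = []
    for col in sorted(source.keys() | target.keys()):
        if col not in target:
            issues.append(f"missing in target: {col}")
        elif col not in source:
            issues.append(f"extra in target: {col}")
        elif source[col] != target[col] and (source[col], target[col]) not in EQUIVALENT_TYPES:
            issues.append(f"type mismatch on {col}: {source[col]} vs {target[col]}")
    return issues


def profile(rows: list[dict], columns: list[str], numeric_cols: list[str]) -> dict:
    """Row count, column sums, and an order-independent fingerprint of the rows."""
    # Hash each row, then sort the hashes so physical row order does not matter.
    row_hashes = sorted(
        hashlib.sha256("|".join(str(row[c]) for c in columns).encode()).hexdigest()
        for row in rows
    )
    return {
        "row_count": len(rows),
        "sums": {c: sum((Decimal(row[c]) for row in rows), Decimal(0)) for c in numeric_cols},
        "fingerprint": hashlib.sha256("".join(row_hashes).encode()).hexdigest(),
    }


def reconcile(source_rows, target_rows, columns, numeric_cols) -> dict[str, bool]:
    """Report, per check, whether source and target agree."""
    src = profile(source_rows, columns, numeric_cols)
    tgt = profile(target_rows, columns, numeric_cols)
    return {check: src[check] == tgt[check] for check in src}


COLUMNS = ["order_id", "amount", "region"]
netezza_rows = [
    {"order_id": 1, "amount": Decimal("120.50"), "region": "EMEA"},
    {"order_id": 2, "amount": Decimal("75.00"), "region": "AMER"},
]
# Same rows in a different physical order: a fair comparison must ignore ordering.
databricks_rows = list(reversed(netezza_rows))

print(schema_diff(NETEZZA_SCHEMA, DATABRICKS_SCHEMA))  # []
print(reconcile(netezza_rows, databricks_rows, COLUMNS, ["amount"]))
# {'row_count': True, 'sums': True, 'fingerprint': True}
```

In a real reconciliation, the counts and sums would be computed with SQL on each platform, and only the results would be compared. Hashing every row in Python does not scale to warehouse-sized tables.

Values also need a canonical text form before fingerprinting. For example, `Decimal("120.5")` and `Decimal("120.50")` compare as equal but produce different strings, and therefore different hashes.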

Knowledge transfer and organizational readiness

Upskilling teams on Databricks concepts such as Delta Lake architecture, Spark SQL, and performance optimization guidelines is essential for long-term success. Training recommendations:

Train analysts on Databricks SQL Warehouse features.

Provide hands-on labs for engineers transitioning from NZSQL to DLT pipelines.

Document migration patterns and troubleshooting playbooks.

Predictable, low-risk migrations

Migrating from Netezza to Databricks represents a significant shift, not just in technology but in the approach to data management and analytics. Organizations should plan thoroughly, address the key differences between the platforms, and leverage Databricks' unique capabilities. Doing so lets them achieve a successful migration that delivers improved performance, scalability, and cost-effectiveness.

The migration journey is an opportunity to modernize where your data lives and how you work with it. By following these tips and avoiding common pitfalls, your organization can transition smoothly to the Databricks Platform and unlock new possibilities for data-driven decision-making.

Remember that while the technical aspects of migration are important, equal attention should be paid to organizational readiness, knowledge transfer, and adoption strategies to ensure long-term success.

What to do next

Migration can be challenging. There will always be tradeoffs to balance and unexpected issues and delays to manage. You need proven partners and solutions for the people, process, and technology aspects of the migration. We recommend trusting the experts at Databricks Professional Services and our certified migration partners. They have extensive experience delivering high-quality migration solutions promptly. Reach out to get your migration assessment started.

We also have a complete Netezza to Databricks Migration Guide; get your free copy here.