Researchers at Katanemo Labs have introduced Arch-Router, a new routing model and framework designed to intelligently map user queries to the most suitable large language model (LLM).

For enterprises building products that rely on multiple LLMs, Arch-Router aims to solve a key challenge: how to direct queries to the best model for the job without relying on rigid logic or costly retraining every time something changes.

The challenges of LLM routing

As the number of LLMs grows, developers are moving from single-model setups to multi-model systems that use the unique strengths of each model for specific tasks (e.g., code generation, text summarization, or image editing).

LLM routing has emerged as a key technique for building and deploying these systems, acting as a traffic controller that directs each user query to the most appropriate model.

Existing routing methods generally fall into two categories: “task-based routing,” where queries are routed based on predefined tasks, and “performance-based routing,” which seeks an optimal balance between cost and performance.

However, task-based routing struggles with unclear or shifting user intentions, particularly in multi-turn conversations. Performance-based routing, on the other hand, rigidly prioritizes benchmark scores, often neglects real-world user preferences, and adapts poorly to new models unless it undergoes costly fine-tuning.

More fundamentally, as the Katanemo Labs researchers note in their paper, “existing routing approaches have limitations in real-world use. They typically optimize for benchmark performance while neglecting human preferences driven by subjective evaluation criteria.”

The researchers highlight the need for routing systems that “align with subjective human preferences, offer more transparency, and remain easily adaptable as models and use cases evolve.”

A new framework for preference-aligned routing

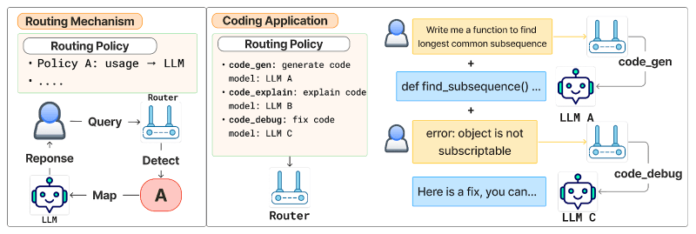

To address these limitations, the researchers propose a “preference-aligned routing” framework that matches queries to routing policies based on user-defined preferences.

In this framework, users define their routing policies in natural language using a “Domain-Action Taxonomy.” This is a two-level hierarchy that reflects how people naturally describe tasks, starting with a general topic (the Domain, such as “legal” or “finance”) and narrowing to a specific task (the Action, such as “summarization” or “code generation”).

Each of these policies is then linked to a preferred model, allowing developers to make routing decisions based on real-world needs rather than just benchmark scores. As the paper states, “This taxonomy serves as a mental model to help users define clear and structured routing policies.”
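As a rough illustration of the Domain-Action idea, the policies could be represented as small records that pair a domain, an action, a natural-language description, and a preferred model. This is only a sketch: the `RoutingPolicy` class, its field names, the `domain_action` naming scheme, and the placeholder model names are assumptions made for the example, not the format used by Arch-Router or the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingPolicy:
    """Hypothetical record for one Domain-Action routing policy."""
    domain: str       # general topic, e.g. "legal"
    action: str       # specific task, e.g. "summarization"
    description: str  # natural-language description the router would read
    model: str        # preferred model for this policy (placeholder name)

    @property
    def name(self) -> str:
        # Short identifier built from the two taxonomy levels (assumed scheme).
        return f"{self.domain}_{self.action}"


POLICIES = [
    RoutingPolicy("legal", "summarization",
                  "Summarize contracts, filings, and other legal documents.",
                  "model-a"),
    RoutingPolicy("finance", "code_generation",
                  "Write code for financial analysis and reporting.",
                  "model-b"),
]

# Each policy is linked to its preferred model.
POLICY_TO_MODEL = {p.name: p.model for p in POLICIES}
```

Keeping the description and the model as separate fields mirrors the article's point that the preference (which model) is attached to a human-readable policy rather than to a benchmark score.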

The routing process happens in two stages. First, a preference-aligned router model takes the user query and the full set of policies and selects the most appropriate policy. Second, a mapping function connects that selected policy to its designated LLM.

Because the model selection logic is separated from the policy, models can be added, removed, or swapped simply by editing the routing policies, without any need to retrain or modify the router itself. This decoupling provides the flexibility required for practical deployments, where models and use cases are constantly evolving.
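The two stages and their decoupling can be sketched as follows. The keyword-overlap function `select_policy_stub` is a deliberately crude stand-in for the router model (the real system uses a fine-tuned language model for this step), and the policy names, descriptions, and model names are placeholders assumed for the example.

```python
from typing import Callable, Dict


def select_policy_stub(query: str, policies: Dict[str, str]) -> str:
    """Stand-in for stage 1: pick the policy whose description shares
    the most words with the query. Not how Arch-Router works internally."""
    words = set(query.lower().split())
    return max(
        policies,
        key=lambda name: len(words & set(policies[name].lower().split())),
    )


def route(
    query: str,
    policies: Dict[str, str],
    policy_to_model: Dict[str, str],
    select_policy: Callable[[str, Dict[str, str]], str] = select_policy_stub,
) -> str:
    policy = select_policy(query, policies)  # stage 1: choose a policy
    return policy_to_model[policy]           # stage 2: map policy to a model


policies = {
    "image_editing": "edit crop or retouch an image",
    "document_creation": "draft or write a document report or memo",
}
policy_to_model = {"image_editing": "model-a", "document_creation": "model-b"}

first = route("please crop this image", policies, policy_to_model)

# Swapping the model touches only the mapping; the selector is unchanged.
policy_to_model["image_editing"] = "model-c"
second = route("please crop this image", policies, policy_to_model)
```

Because `route` only looks up the selected policy in `policy_to_model`, replacing a model is a one-line data change, which is the property the decoupling is meant to provide.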

Preference-aligned routing framework. Source: arXiv

Policy selection is powered by Arch-Router, a compact 1.5B-parameter language model fine-tuned for preference-aligned routing. Arch-Router receives the user query and the complete set of policy descriptions within its prompt. It then generates the identifier of the best-matching policy.

Because the policies are part of the input, the system can adapt to new or modified routes at inference time through in-context learning and without retraining. This generative approach allows Arch-Router to use its pre-trained knowledge to understand the semantics of both the query and the policies, and to process the entire conversation history at once.
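To make the "policies in the prompt" idea concrete, here is a minimal sketch of how such a prompt might be assembled and how the generated identifier might be checked. The prompt layout, the function names `build_router_prompt` and `parse_policy`, and the fallback behavior are all assumptions for illustration; the actual Arch-Router prompt template is not described in this article.

```python
import json
from typing import Dict, List, Optional


def build_router_prompt(policies: Dict[str, str],
                        conversation: List[dict]) -> str:
    """Put every policy description and the full conversation in the prompt.
    The layout here is hypothetical, not the real Arch-Router template."""
    routes = [{"name": n, "description": d} for n, d in policies.items()]
    return (
        "Available routes:\n" + json.dumps(routes, indent=2) + "\n\n"
        "Conversation:\n" + json.dumps(conversation, indent=2) + "\n\n"
        "Reply with the name of the single best-matching route."
    )


def parse_policy(output: str, policies: Dict[str, str],
                 default: Optional[str] = None) -> Optional[str]:
    """Accept the generated identifier only if it names a known policy."""
    name = output.strip().strip('"')
    return name if name in policies else default


policies = {
    "image_editing": "Edit, crop, or retouch an image.",
    "document_creation": "Draft a document, report, or memo.",
}
conversation = [{"role": "user", "content": "Can you crop this photo?"}]
prompt = build_router_prompt(policies, conversation)

# A new route is just another entry in the input; nothing is retrained.
policies["translation"] = "Translate text between languages."
updated_prompt = build_router_prompt(policies, conversation)
```

Validating the output against the known policy names is a defensive choice for the sketch, since a generative model can in principle emit a string that matches no policy.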

A common concern with including extensive policies in a prompt is the potential for increased latency. However, the researchers designed Arch-Router to be highly efficient. “While the length of routing policies can get long, we can easily increase the context window of Arch-Router with minimal impact on latency,” explains Salman Paracha, co-author of the paper and Founder/CEO of Katanemo Labs. He notes that latency is primarily driven by the length of the output, and for Arch-Router, the output is simply the short name of a routing policy, like “image_editing” or “document_creation.”

Arch-Router in action

To build Arch-Router, the researchers fine-tuned a 1.5B-parameter version of the Qwen 2.5 model on a curated dataset of 43,000 examples. They then tested its performance against state-of-the-art proprietary models from OpenAI, Anthropic and Google on four public datasets designed to evaluate conversational AI systems.

The results show that Arch-Router achieves the highest overall routing score of 93.17%, surpassing all other models, including top proprietary ones, by an average of 7.71%. The model’s advantage grew with longer conversations, demonstrating its strong ability to track context over multiple turns.

Arch-Router vs. other models. Source: arXiv

In practice, this approach is already being applied in several scenarios, according to Paracha. For example, in open-source coding tools, developers use Arch-Router to direct different stages of their workflow, such as “code design,” “code understanding,” and “code generation,” to the LLMs best suited for each task. Similarly, enterprises can route document creation requests to a model like Claude 3.7 Sonnet while sending image editing tasks to Gemini 2.5 Pro.

The system is also ideal “for personal assistants in various domains, where users have a diversity of tasks from text summarization to factoid queries,” Paracha said, adding that “in those cases, Arch-Router can help developers unify and improve the overall user experience.”

This framework is integrated with Arch, Katanemo Labs’ AI-native proxy server for agents, which allows developers to implement sophisticated traffic-shaping rules. For instance, when integrating a new LLM, a team can send a small portion of traffic for a specific routing policy to the new model, verify its performance with internal metrics, and then fully transition traffic with confidence. The company is also working to integrate its tools with evaluation platforms to further streamline this process for enterprise developers.
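The gradual rollout described above amounts to a weighted split of traffic within a single routing policy. The sketch below shows one way to express that idea; the `TRAFFIC_SPLITS` table, the `pick_model` function, the 95/5 split, and the model names are hypothetical and do not reflect Arch's actual configuration format or API.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical per-policy weights: 5% of "document_creation" traffic
# goes to the candidate model while it is being evaluated.
TRAFFIC_SPLITS: Dict[str, List[Tuple[str, float]]] = {
    "document_creation": [("current-model", 0.95), ("candidate-model", 0.05)],
}


def pick_model(policy: str,
               splits: Dict[str, List[Tuple[str, float]]],
               rng: random.Random) -> str:
    """Choose a model for the given policy according to its weights."""
    models, weights = zip(*splits[policy])
    return rng.choices(models, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded so the example is repeatable
sample = [pick_model("document_creation", TRAFFIC_SPLITS, rng)
          for _ in range(10_000)]
candidate_share = sample.count("candidate-model") / len(sample)

# Once internal metrics look good, shift all traffic to the new model.
TRAFFIC_SPLITS["document_creation"] = [("candidate-model", 1.0)]
after_cutover = pick_model("document_creation", TRAFFIC_SPLITS, rng)
```

Scoping the split to one policy keeps the experiment contained: other routes continue to use their existing models while the candidate is verified.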

Ultimately, the goal is to move beyond siloed AI implementations. “Arch-Router, and Arch more broadly, helps developers and enterprises move from fragmented LLM implementations to a unified, policy-driven system,” says Paracha. “In scenarios where user tasks are diverse, our framework helps turn that task and LLM fragmentation into a unified experience, making the final product feel seamless to the end user.”
