
Researchers at MIT have developed a framework called Self-Adapting Language Models (SEAL) that enables large language models (LLMs) to continuously learn and adapt by updating their own internal parameters. SEAL teaches an LLM to generate its own training data and update instructions, allowing it to permanently absorb new knowledge and learn new tasks.

This framework could be useful for enterprise applications, particularly for AI agents that operate in dynamic environments, where they must constantly process new information and adapt their behavior.

The challenge of adapting LLMs

While large language models have shown remarkable abilities, adapting them to specific tasks, integrating new information, or mastering novel reasoning skills remains a significant hurdle.

Currently, when faced with a new task, LLMs typically learn from data “as-is” through methods like finetuning or in-context learning. However, the provided data is not always in an optimal format for the model to learn efficiently. Existing approaches don’t allow the model to develop its own strategies for best transforming and learning from new information.

“Many enterprise use cases demand more than just factual recall; they require deeper, persistent adaptation,” Jyo Pari, PhD student at MIT and co-author of the paper, told VentureBeat. “For example, a coding assistant might need to internalize a company’s specific software framework, or a customer-facing model might need to learn a user’s unique behavior or preferences over time.”

In such cases, temporary retrieval falls short, and the knowledge needs to be “baked into” the model’s weights so that it influences all future responses.

Creating self-adapting language models

“As a step towards scalable and efficient adaptation of language models, we propose equipping LLMs with the ability to generate their own training data and finetuning directives for utilizing such data,” the MIT researchers state in their paper.

Overview of SEAL framework Source: arXiv

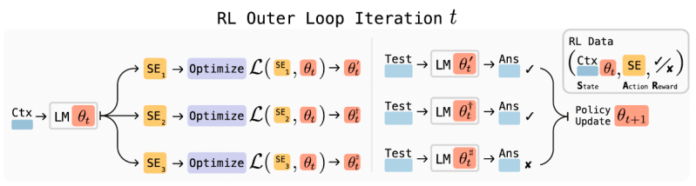

The researchers’ solution is SEAL, short for Self-Adapting Language Models. It uses a reinforcement learning (RL) algorithm to train an LLM to generate “self-edits”: natural-language instructions that specify how the model should update its own weights. These self-edits can restructure new information, create synthetic training examples, or even define the technical parameters for the learning process itself.

Intuitively, SEAL teaches a model how to create its own personalized study guide. Instead of just reading a new document (the raw data), the model learns to rewrite and reformat that information into a style it can more easily absorb and internalize. This process brings together several key areas of AI research, including synthetic data generation, reinforcement learning and test-time training (TTT).

The framework operates on a two-loop system. In an “inner loop,” the model uses a self-edit to perform a small, temporary update to its weights. In an “outer loop,” the system evaluates whether that update improved the model’s performance on a target task. If it did, the model receives a positive reward, reinforcing its ability to generate that kind of effective self-edit in the future. Over time, the LLM becomes an expert at teaching itself.
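To make the two loops concrete, here is a toy Python sketch. It is not SEAL’s implementation: the “model” is a single scalar weight, a “self-edit” is reduced to choosing a learning rate, and the names `TARGET`, `CANDIDATE_EDITS`, `inner_loop` and `outer_loop` are all hypothetical. The reward is assumed to be binary: 1 when the temporary update lowers the task loss, 0 otherwise.

```python
import random

# Toy stand-ins (hypothetical, not from the SEAL paper): the "model" is one scalar
# weight, the target task rewards weights near TARGET, and a "self-edit" is reduced
# to choosing a learning rate for a single gradient step.
TARGET = 3.0
CANDIDATE_EDITS = [0.1, 0.5, 1.0, 5.0]


def task_loss(weight: float) -> float:
    """Squared error on the toy target task (lower is better)."""
    return (weight - TARGET) ** 2


def inner_loop(weight: float, lr: float) -> float:
    """Apply a self-edit: one temporary gradient step on a copy of the weight."""
    grad = 2.0 * (weight - TARGET)
    return weight - lr * grad


def outer_loop(base_weight: float, rounds: int = 20, seed: int = 0) -> dict[float, float]:
    """Reinforce self-edits whose temporary update improved the task loss."""
    rng = random.Random(seed)
    # Policy: unnormalized preference score for each candidate self-edit.
    prefs = {edit: 1.0 for edit in CANDIDATE_EDITS}
    baseline = task_loss(base_weight)
    for _ in range(rounds):
        edits = list(prefs)
        edit = rng.choices(edits, weights=[prefs[e] for e in edits])[0]
        updated = inner_loop(base_weight, edit)  # temporary; base_weight is untouched
        reward = 1.0 if task_loss(updated) < baseline else 0.0
        prefs[edit] += reward  # only rewarded edits become more likely to be sampled
    return prefs


if __name__ == "__main__":
    policy = outer_loop(base_weight=0.0)
    print(max(policy, key=policy.get))
```

Because the base weight is never overwritten, every candidate edit is judged from the same starting point, which mirrors the temporary inner-loop update. In the full framework, the inner loop corresponds to finetuning the LLM’s weights and the outer loop to an RL update of the policy that writes the self-edits.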

In their study, the researchers used a single model for the entire SEAL framework. However, they also note that this process can be decoupled into a “teacher-student” model. A specialized teacher model could be trained to generate effective self-edits for a separate student model, which would then be updated. This approach could allow for more specialized and efficient adaptation pipelines in enterprise settings.

SEAL in action

The researchers tested SEAL in two key domains: knowledge incorporation (the ability to permanently integrate new facts) and few-shot learning (the ability to generalize from a handful of examples).

SEAL in knowledge incorporation Source: arXiv

For knowledge incorporation, the goal was to see if the model could answer questions about a text passage without having access to the passage during questioning. Finetuning Llama-3.2-1B on the raw text provided only a marginal improvement over the base model.

However, when the SEAL model created “self-edits” by generating several “implications” from a passage and was trained on this synthetic data, its accuracy jumped to 47%. Notably, this outperformed results from using synthetic data generated by the much larger GPT-4.1, suggesting the model learned to create superior training material for itself.
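The “implications” self-edit can be pictured as a data-preparation step. First, prompt the model to restate a passage as a list of implications. Then turn each generated line into its own finetuning sample. The Python sketch below assumes that format. The prompt wording and the helper names (`build_prompt`, `self_edit_to_examples`) are hypothetical, and the generation is a hard-coded illustrative string rather than real model output.

```python
import re
from dataclasses import dataclass

# Hypothetical prompt wording; SEAL's exact template is not given in the article.
IMPLICATIONS_PROMPT = (
    "Read the passage below and list the implications that follow from it, "
    "one per line.\n\nPassage:\n{passage}\n\nImplications:\n"
)

# Matches a leading list marker such as "-", "*", "1." or "2)" followed by space.
LIST_MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s+")


@dataclass
class TrainingExample:
    text: str  # one finetuning sample derived from the self-edit


def build_prompt(passage: str) -> str:
    """Ask the model to rewrite a passage as implications (the self-edit)."""
    return IMPLICATIONS_PROMPT.format(passage=passage)


def self_edit_to_examples(generation: str) -> list[TrainingExample]:
    """Split generated implications into separate finetuning samples."""
    examples = []
    for line in generation.splitlines():
        cleaned = LIST_MARKER.sub("", line).strip()
        if cleaned:  # skip blank lines
            examples.append(TrainingExample(text=cleaned))
    return examples


if __name__ == "__main__":
    # Illustrative stand-in for a model generation (not real SEAL output).
    generation = "1. The archive opened in 1932.\n- Visitors need a pass.\n\n"
    for example in self_edit_to_examples(generation):
        print(example.text)
```

Splitting on lines is a simplification. The point it illustrates is that the weight update trains on the model’s own restatements of the passage rather than on the raw text.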

SEAL in few-shot learning Source: arXiv

For few-shot learning, the researchers tested SEAL on examples from the Abstract Reasoning Corpus (ARC), where the model must solve visual puzzles. In the self-edit phase, the model had to generate the entire adaptation strategy, including which data augmentations and tools to use and what learning rate to apply.

SEAL achieved a 72.5% success rate, a dramatic improvement over the 20% rate achieved without RL training and the 0% rate of standard in-context learning.
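In the ARC setting, a self-edit behaves less like prose and more like a configuration: which augmentations and tools to use and what learning rate to apply. The Python sketch below assumes the model emits that strategy as JSON, then parses and checks it before any weight update runs. The field names, the `KNOWN_AUGMENTATIONS` vocabulary and the accepted value ranges are all hypothetical.

```python
import json
from dataclasses import dataclass

# Hypothetical vocabulary of tools a self-edit may request; the article only says
# the model chooses data augmentations, tools, and a learning rate.
KNOWN_AUGMENTATIONS = {"rotate", "flip", "transpose"}


@dataclass(frozen=True)
class SelfEdit:
    augmentations: tuple[str, ...]
    learning_rate: float
    epochs: int


def parse_self_edit(raw: str) -> SelfEdit:
    """Parse and sanity-check a model-generated adaptation strategy."""
    data = json.loads(raw)
    augs = tuple(data.get("augmentations", []))
    unknown = set(augs) - KNOWN_AUGMENTATIONS
    if unknown:
        raise ValueError(f"unknown augmentations: {sorted(unknown)}")
    lr = float(data["learning_rate"])
    if not 0.0 < lr <= 1.0:  # hypothetical sanity range
        raise ValueError(f"learning rate out of range: {lr}")
    epochs = int(data.get("epochs", 1))
    if epochs < 1:
        raise ValueError("epochs must be at least 1")
    return SelfEdit(augmentations=augs, learning_rate=lr, epochs=epochs)


if __name__ == "__main__":
    edit = parse_self_edit(
        '{"augmentations": ["rotate", "flip"], "learning_rate": 1e-4, "epochs": 2}'
    )
    print(edit)
```

Validating the strategy first is one design option. A malformed self-edit can then simply earn zero reward in the outer loop instead of launching a broken training run.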

SEAL (red line) continues to improve across RL cycles Source: arXiv

Implications for the enterprise

Some experts project that the supply of high-quality, human-generated training data could be exhausted in the coming years. Progress may soon depend on “a model’s capacity to generate its own high-utility training signal,” as the researchers put it. They add, “A natural next step is to meta-train a dedicated SEAL synthetic-data generator model that produces fresh pretraining corpora, allowing future models to scale and achieve greater data efficiency without relying on additional human text.”

For example, the researchers propose that an LLM could ingest complex documents like academic papers or financial reports and autonomously generate thousands of explanations and implications to deepen its understanding.

“This iterative loop of self-expression and self-refinement could allow models to keep improving on rare or underrepresented topics even in the absence of additional external supervision,” the researchers explain.

This capability is especially promising for building AI agents. Agentic systems must incrementally acquire and retain knowledge as they interact with their environment, and SEAL provides a mechanism for this. After an interaction, an agent could synthesize a self-edit to trigger a weight update, allowing it to internalize the lessons learned. This enables the agent to evolve over time, improve its performance based on experience, and reduce its reliance on static programming or repeated human guidance.

“SEAL demonstrates that large language models need not remain static after pretraining,” the researchers write. “By learning to generate their own synthetic self-edit data and to apply it through lightweight weight updates, they can autonomously incorporate new knowledge and adapt to novel tasks.”

Limitations of SEAL

That said, SEAL is not a universal solution. For example, it can suffer from “catastrophic forgetting,” where constant retraining cycles can result in the model losing its earlier knowledge.

“In our current implementation, we encourage a hybrid approach,” Pari said. “Enterprises should be selective about what knowledge is important enough to integrate permanently.”

Factual and evolving data can remain in external memory through RAG, while long-lasting, behavior-shaping knowledge is better suited to weight-level updates via SEAL.

“This kind of hybrid memory strategy ensures the right information is persistent without overwhelming the model or introducing unnecessary forgetting,” he said.
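The hybrid strategy amounts to a routing decision for each new piece of knowledge. The Python sketch below assumes two hypothetical labels (factual vs. behavior-shaping) and a made-up durability threshold. Items that are both behavior-shaping and long-lasting are queued for a weight update, and everything else goes to a retrieval store for RAG. How to classify knowledge in practice is left open here.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    FACT = "fact"          # factual data that is likely to change
    BEHAVIOR = "behavior"  # conventions or preferences that shape behavior


@dataclass
class KnowledgeItem:
    text: str
    kind: Kind
    expected_lifetime_days: int  # hypothetical estimate of how long it stays valid


@dataclass
class HybridMemory:
    min_lifetime_days: int = 90  # hypothetical threshold for "long-lasting"
    rag_store: list[str] = field(default_factory=list)
    weight_update_queue: list[str] = field(default_factory=list)

    def add(self, item: KnowledgeItem) -> str:
        """Route an item to retrieval or to a future weight update."""
        durable = item.expected_lifetime_days >= self.min_lifetime_days
        if item.kind is Kind.BEHAVIOR and durable:
            self.weight_update_queue.append(item.text)  # candidate for a SEAL-style update
            return "weights"
        self.rag_store.append(item.text)  # stays in external memory
        return "rag"


if __name__ == "__main__":
    memory = HybridMemory()
    print(memory.add(KnowledgeItem("Follow the team's code style", Kind.BEHAVIOR, 365)))
    print(memory.add(KnowledgeItem("Current quarter price list", Kind.FACT, 365)))
```

Keeping the weight-update queue small is the point of the design. Only knowledge that passes both checks risks contributing to forgetting.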

It is also worth noting that SEAL takes a non-trivial amount of time to tune the self-edit examples and train the model. This makes continuous, real-time editing infeasible in most production settings.

“We envision a more practical deployment model where the system collects data over a period (say, a few hours or a day) and then performs targeted self-edits during scheduled update intervals,” Pari said. “This approach allows enterprises to control the cost of adaptation while still benefiting from SEAL’s ability to internalize new knowledge.”
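The batched deployment Pari describes can be sketched as a buffer that triggers one adaptation run per interval rather than one per interaction. In this minimal Python version, `apply_self_edits` is a hypothetical callback standing in for the costly step of generating self-edits and finetuning on them. Timestamps are passed in explicitly so the schedule is easy to test.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ScheduledAdapter:
    """Buffer new data and run self-edits only at scheduled intervals.

    `apply_self_edits` is a hypothetical callback standing in for the expensive
    step (generating self-edits and finetuning the model on them).
    """
    interval_seconds: float
    apply_self_edits: Callable[[list[str]], None]
    last_update: float = 0.0
    buffer: list[str] = field(default_factory=list)

    def record(self, item: str, now: float) -> bool:
        """Store an item; return True if this call triggered an update."""
        self.buffer.append(item)
        return self.flush_if_due(now)

    def flush_if_due(self, now: float) -> bool:
        """Run one batched update if the interval has elapsed and data is waiting."""
        if not self.buffer or now - self.last_update < self.interval_seconds:
            return False
        batch, self.buffer = self.buffer, []
        self.apply_self_edits(batch)
        self.last_update = now
        return True


if __name__ == "__main__":
    batches: list[list[str]] = []
    adapter = ScheduledAdapter(interval_seconds=3600, apply_self_edits=batches.append)
    for t, item in [(10, "x"), (1800, "y"), (3600, "z")]:
        adapter.record(item, now=t)
    print(batches)
```

The separate `flush_if_due` method lets a scheduler trigger the update even when no new data arrives at the boundary, which matches the idea of fixed update windows.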