Be a part of the occasion trusted by enterprise leaders for practically twenty years. VB Rework brings collectively the folks constructing actual enterprise AI technique. Study extra

Editor’s observe: Louis will lead an editorial roundtable on this subject at VB Rework this month. Register at present.

AI fashions are beneath siege. With 77% of enterprises already hit by adversarial mannequin assaults and 41% of these assaults exploiting immediate injections and information poisoning, attackers’ tradecraft is outpacing present cyber defenses.

To reverse this pattern, it’s important to rethink how safety is built-in into the fashions being constructed at present. DevOps groups must shift from taking a reactive protection to steady adversarial testing at each step.

Pink Teaming must be the core

Defending giant language fashions (LLMs) throughout DevOps cycles requires pink teaming as a core element of the model-creation course of. Fairly than treating safety as a ultimate hurdle, which is typical in internet app pipelines, steady adversarial testing must be built-in into each section of the Software program Improvement Life Cycle (SDLC).

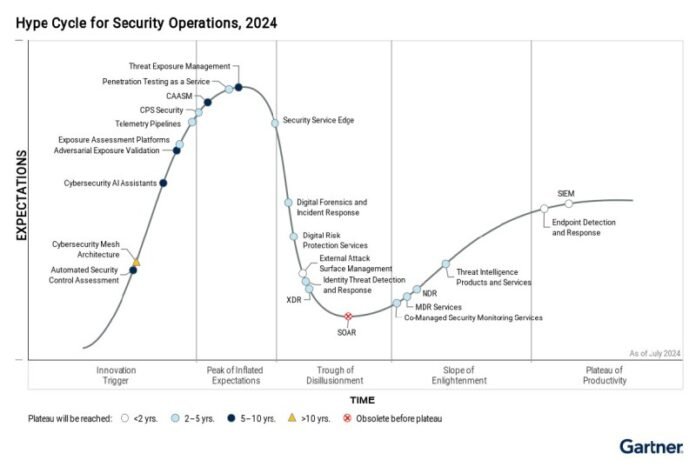

Gartner’s Hype Cycle emphasizes the rising significance of steady risk publicity administration (CTEM), underscoring why pink teaming should combine totally into the DevSecOps lifecycle. Supply: Gartner, Hype Cycle for Safety Operations, 2024

Adopting a extra integrative strategy to DevSecOps fundamentals is changing into essential to mitigate the rising dangers of immediate injections, information poisoning and the publicity of delicate information. Extreme assaults like these have gotten extra prevalent, occurring from mannequin design via deployment, making ongoing monitoring important.

Microsoft’s current steering on planning pink teaming for big language fashions (LLMs) and their purposes offers a useful methodology for beginning an built-in course of. NIST’s AI Danger Administration Framework reinforces this, emphasizing the necessity for a extra proactive, lifecycle-long strategy to adversarial testing and threat mitigation. Microsoft’s current pink teaming of over 100 generative AI merchandise underscores the necessity to combine automated risk detection with professional oversight all through mannequin growth.

As regulatory frameworks, such because the EU’s AI Act, mandate rigorous adversarial testing, integrating steady pink teaming ensures compliance and enhanced safety.

Openai’s strategy to pink teaming integrates exterior pink teaming from early design via deployment, confirming that constant, preemptive safety testing is essential to the success of LLM growth.

Gartner’s framework exhibits the structured maturity path for pink teaming, from foundational to superior workouts, important for systematically strengthening AI mannequin defenses. Supply: Gartner, Enhance Cyber Resilience by Conducting Pink Group Workouts

Gartner’s framework exhibits the structured maturity path for pink teaming, from foundational to superior workouts, important for systematically strengthening AI mannequin defenses. Supply: Gartner, Enhance Cyber Resilience by Conducting Pink Group Workouts

Why conventional cyber defenses fail in opposition to AI

Conventional, longstanding cybersecurity approaches fall quick in opposition to AI-driven threats as a result of they’re basically totally different from typical assaults. As adversaries’ tradecraft surpasses conventional approaches, new strategies for pink teaming are crucial. Right here’s a pattern of the numerous varieties of tradecraft particularly constructed to assault AI fashions all through the DevOps cycles and as soon as within the wild:

Information Poisoning: Adversaries inject corrupted information into coaching units, inflicting fashions to study incorrectly and creating persistent inaccuracies and operational errors till they’re found. This typically undermines belief in AI-driven selections.

Mannequin Evasion: Adversaries introduce fastidiously crafted, delicate enter adjustments, enabling malicious information to slide previous detection techniques by exploiting the inherent limitations of static guidelines and pattern-based safety controls.

Mannequin Inversion: Systematic queries in opposition to AI fashions allow adversaries to extract confidential data, doubtlessly exposing delicate or proprietary coaching information and creating ongoing privateness dangers.

Immediate Injection: Adversaries craft inputs particularly designed to trick generative AI into bypassing safeguards, producing dangerous or unauthorized outcomes.

Twin-Use Frontier Dangers: Within the current paper, Benchmark Early and Pink Group Typically: A Framework for Assessing and Managing Twin-Use Hazards of AI Basis Fashionsresearchers from The Middle for Lengthy-Time period Cybersecurity on the College of California, Berkeley emphasize that superior AI fashions considerably decrease obstacles, enabling non-experts to hold out subtle cyberattacks, chemical threats, or different advanced exploits, basically reshaping the worldwide risk panorama and intensifying threat publicity.

Built-in Machine Studying Operations (MLOps) additional compound these dangers, threats, and vulnerabilities. The interconnected nature of LLM and broader AI growth pipelines magnifies these assault surfaces, requiring enhancements in pink teaming.

Cybersecurity leaders are more and more adopting steady adversarial testing to counter these rising AI threats. Structured red-team workouts at the moment are important, realistically simulating AI-focused assaults to uncover hidden vulnerabilities and shut safety gaps earlier than attackers can exploit them.

How AI leaders keep forward of attackers with pink teaming

Adversaries proceed to speed up their use of AI to create completely new types of tradecraft that defy present, conventional cyber defenses. Their objective is to use as many rising vulnerabilities as attainable.

Business leaders, together with the foremost AI firms, have responded by embedding systematic and complicated red-teaming methods on the core of their AI safety. Fairly than treating pink teaming as an occasional examine, they deploy steady adversarial testing by combining professional human insights, disciplined automation, and iterative human-in-the-middle evaluations to uncover and cut back threats earlier than attackers can exploit them proactively.

Their rigorous methodologies enable them to establish weaknesses and systematically harden their fashions in opposition to evolving real-world adversarial eventualities.

Particularly:

Anthropic depends on rigorous human perception as a part of its ongoing red-teaming methodology. By tightly integrating human-in-the-loop evaluations with automated adversarial assaults, the corporate proactively identifies vulnerabilities and frequently refines the reliability, accuracy and interpretability of its fashions.

Meta scales AI mannequin safety via automation-first adversarial testing. Its Multi-round Automated Pink-Teaming (MART) systematically generates iterative adversarial prompts, quickly uncovering hidden vulnerabilities and effectively narrowing assault vectors throughout expansive AI deployments.

Microsoft harnesses interdisciplinary collaboration because the core of its red-teaming power. Utilizing its Python Danger Identification Toolkit (PyRIT), Microsoft bridges cybersecurity experience and superior analytics with disciplined human-in-the-middle validation, accelerating vulnerability detection and offering detailed, actionable intelligence to fortify mannequin resilience.

OpenAI faucets international safety experience to fortify AI defenses at scale. Combining exterior safety specialists’ insights with automated adversarial evaluations and rigorous human validation cycles, OpenAI proactively addresses subtle threats, particularly focusing on misinformation and prompt-injection vulnerabilities to take care of sturdy mannequin efficiency.

In brief, AI leaders know that staying forward of attackers calls for steady and proactive vigilance. By embedding structured human oversight, disciplined automation, and iterative refinement into their pink teaming methods, these business leaders set the usual and outline the playbook for resilient and reliable AI at scale.

Gartner outlines how adversarial publicity validation (AEV) allows optimized protection, higher publicity consciousness, and scaled offensive testing—important capabilities for securing AI fashions. Supply: Gartner, Market Information for Adversarial Publicity Validation

Gartner outlines how adversarial publicity validation (AEV) allows optimized protection, higher publicity consciousness, and scaled offensive testing—important capabilities for securing AI fashions. Supply: Gartner, Market Information for Adversarial Publicity Validation

As assaults on LLMs and AI fashions proceed to evolve quickly, DevOps and DevSecOps groups should coordinate their efforts to handle the problem of enhancing AI safety. VentureBeat is discovering the next 5 high-impact methods safety leaders can implement instantly:

Combine safety early (Anthropic, OpenAI)

Construct adversarial testing immediately into the preliminary mannequin design and all through your entire lifecycle. Catching vulnerabilities early reduces dangers, disruptions and future prices.

Deploy adaptive, real-time monitoring (Microsoft)

Static defenses can’t shield AI techniques from superior threats. Leverage steady AI-driven instruments like CyberAlly to detect and reply to delicate anomalies rapidly, minimizing the exploitation window.

Stability automation with human judgment (Meta, Microsoft)

Pure automation misses nuance; handbook testing alone gained’t scale. Mix automated adversarial testing and vulnerability scans with professional human evaluation to make sure exact, actionable insights.

Often interact exterior pink groups (OpenAI)

Inner groups develop blind spots. Periodic exterior evaluations reveal hidden vulnerabilities, independently validate your defenses and drive steady enchancment.

Preserve dynamic risk intelligence (Meta, Microsoft, OpenAI)

Attackers continuously evolve techniques. Constantly combine real-time risk intelligence, automated evaluation and professional insights to replace and strengthen your defensive posture proactively.

Taken collectively, these methods guarantee DevOps workflows stay resilient and safe whereas staying forward of evolving adversarial threats.

Pink teaming is now not non-obligatory; it’s important

AI threats have grown too subtle and frequent to rely solely on conventional, reactive cybersecurity approaches. To remain forward, organizations should repeatedly and proactively embed adversarial testing into each stage of mannequin growth. By balancing automation with human experience and dynamically adapting their defenses, main AI suppliers show that sturdy safety and innovation can coexist.

Finally, pink teaming isn’t nearly defending AI fashions. It’s about guaranteeing belief, resilience, and confidence in a future more and more formed by AI.

Be a part of me at Rework 2025

I’ll be internet hosting two cybersecurity-focused roundtables at VentureBeat’s Rework 2025which can be held June 24–25 at Fort Mason in San Francisco. Register to hitch the dialog.

My session will embody one on pink teaming, AI Pink Teaming and Adversarial Testingdiving into methods for testing and strengthening AI-driven cybersecurity options in opposition to subtle adversarial threats.

Each day insights on enterprise use instances with VB Each day

If you wish to impress your boss, VB Each day has you coated. We provide the inside scoop on what firms are doing with generative AI, from regulatory shifts to sensible deployments, so you may share insights for max ROI.

Thanks for subscribing. Try extra VB newsletters right here.

An error occured.