We’re excited to announce that Lakeflow, Databricks’ unified data engineering solution, is now Generally Available. It includes expanded ingestion connectors for popular data sources, a new “IDE for data engineering” that makes it easy to build and debug data pipelines, and expanded capabilities for operationalizing and monitoring ETL.

At last year’s Data + AI Summit, we introduced Lakeflow – our vision for the future of data engineering – an end-to-end solution that includes three core components:

Lakeflow Connect: Reliable, managed ingestion from enterprise apps, databases, file systems, and real-time streams, without the overhead of custom connectors or external services.

Lakeflow Declarative Pipelines: Scalable ETL pipelines built on the open standard of Spark Declarative Pipelines, integrated with governance and observability, and providing a streamlined development experience through a modern “IDE for data engineering”.

Lakeflow Jobs: Native orchestration for the Data Intelligence Platform, supporting advanced control flow, real-time data triggers, and comprehensive monitoring.

By unifying data engineering, Lakeflow eliminates the complexity and cost of stitching together different tools, enabling data teams to focus on creating value for the business. Lakeflow Designer, the new AI-powered visual pipeline builder, empowers any user to build production-grade data pipelines without writing code.

It’s been a busy year, and we’re super excited to share what’s new as Lakeflow reaches General Availability.

Data engineering teams struggle to keep up with their organizational data needs

In every industry, a business’s ability to extract value from its data through analytics and AI is its competitive advantage. Data is being used in every facet of the organization – to create Customer 360° views and new customer experiences, to enable new revenue streams, to optimize operations and to empower employees. As organizations look to make use of their own data, they end up with a patchwork of tooling. Data engineers find it hard to tackle the complexity of data engineering tasks while navigating fragmented tool stacks that are painful to integrate and costly to maintain.

A key challenge is data governance – fragmented tooling makes it difficult to enforce standards, leading to gaps in discovery, lineage and observability. A recent study by The Economist found that “half of data engineers say governance takes up more time than anything else”. That same survey asked data engineers what would yield the biggest benefits for their productivity, and they identified “‘simplifying data source connections for ingesting data’, ‘using a single unified solution instead of multiple tools’ and ‘better visibility into data pipelines to find and fix issues’ among the top interventions”.

A unified data engineering solution built into the Data Intelligence Platform

Lakeflow helps data teams tackle these challenges by providing an end-to-end data engineering solution on the Data Intelligence Platform. Databricks customers can use Lakeflow for every aspect of data engineering – ingestion, transformation and orchestration. Because all of these capabilities are available as part of a single solution, there is no time spent on complex tool integrations and no extra cost to license external tools.

In addition, Lakeflow is built into the Data Intelligence Platform, and with this come consistent ways to deploy, govern and observe all data and AI use cases. For example, for governance, Lakeflow integrates with Unity Catalog, the unified governance solution for the Data Intelligence Platform. Through Unity Catalog, data engineers gain full visibility and control over every part of the data pipeline, allowing them to easily understand where data is being used and to root-cause issues as they arise.

Whether it’s versioning code, deploying CI/CD pipelines, securing data or observing real-time operational metrics, Lakeflow leverages the Data Intelligence Platform to provide a single, consistent place to manage end-to-end data engineering needs.

Lakeflow Connect: More connectors, and fast direct writes to Unity Catalog

This past year, we’ve seen strong adoption of Lakeflow Connect, with over 2,000 customers using our ingestion connectors to unlock value from their data. One example is Porsche Holding Salzburg, which is already seeing the benefits of using Lakeflow Connect to unify its CRM data with analytics to improve the customer experience.

“Using the Salesforce connector from Lakeflow Connect helps us close a critical gap for Porsche from the business side on ease of use and cost. On the customer side, we’re able to create a completely new customer experience that strengthens the bond between Porsche and the customer with a unified and not fragmented customer journey.”

– Lucas Salzburger, Project Manager, Porsche Holding Salzburg

Today, we’re expanding the breadth of supported data sources with more built-in connectors for simple, reliable ingestion. Lakeflow’s connectors are optimized for efficient data extraction, including change data capture (CDC) techniques customized for each respective data source.

These managed connectors now span enterprise applications, file sources, databases, and data warehouses, rolling out across various release states:

Enterprise applications: Salesforce, Workday, ServiceNow, Google Analytics, Microsoft Dynamics 365, Oracle NetSuite

File sources: SFTP, SharePoint

Databases: Microsoft SQL Server, Oracle Database, MySQL, PostgreSQL

Data warehouses: Snowflake, Amazon Redshift, Google BigQuery
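To illustrate the general idea behind the change data capture (CDC) approach mentioned above, here is a minimal, hypothetical Python sketch, not Lakeflow’s implementation or API. It applies an ordered stream of insert, update and delete events to a keyed target snapshot. The event shape (`op`, `key`, `seq`, `row`) and the names `ChangeEvent` and `apply_cdc_events` are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One hypothetical CDC record captured from a source table."""
    op: str                     # "insert", "update" or "delete"
    key: Any                    # primary key of the affected row
    seq: int                    # source commit order, e.g. a log sequence number
    row: Optional[dict] = None  # new row image; None for deletes


def apply_cdc_events(target: dict, events: list[ChangeEvent]) -> dict:
    """Apply change events to a keyed snapshot without mutating the input.

    Events are sorted by sequence number, so out-of-order delivery still
    produces the same final state (the latest change per key wins).
    """
    result = dict(target)
    for event in sorted(events, key=lambda e: e.seq):
        if event.op in ("insert", "update"):
            result[event.key] = event.row  # upsert the latest row image
        elif event.op == "delete":
            result.pop(event.key, None)    # tolerate deletes of missing keys
        else:
            raise ValueError(f"unknown CDC operation: {event.op!r}")
    return result


snapshot = {1: {"name": "Ada"}, 2: {"name": "Grace"}}
changes = [
    ChangeEvent("update", 1, seq=11, row={"name": "Ada L."}),
    ChangeEvent("delete", 2, seq=10),
    ChangeEvent("insert", 3, seq=12, row={"name": "Linus"}),
]
print(apply_cdc_events(snapshot, changes))
# {1: {'name': 'Ada L.'}, 3: {'name': 'Linus'}}
```

Sorting by a source-provided sequence number is the design choice that matters here: it is what lets incremental extraction stay correct even when change events arrive out of order.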

In addition, a common use case we see from customers is ingesting real-time event data, often with message bus infrastructure hosted outside their data platform. To make this use case simple on Databricks, we’re announcing Zerobus, a Lakeflow Connect API that allows developers to write event data directly to their lakehouse at very high throughput (100 MB/s) with near real-time latency (<5 seconds). This streamlined ingestion infrastructure provides performance at scale and is unified with the Databricks Platform, so you can leverage broader analytics and AI tools right away.

“Joby is able to use our manufacturing agents with Zerobus to push gigabytes a minute of telemetry data directly to our lakehouse, accelerating the time to insights – all with Databricks Lakeflow and the Data Intelligence Platform.”

– Dominik Müller, Factory Systems Lead, Joby Aviation Inc.
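For a rough sense of scale, the following Python sketch converts Zerobus’s stated throughput of 100 MB/s into a per-minute figure, which lands in the “gigabytes a minute” range from the Joby quote. It assumes decimal units (1 GB = 1,000 MB) and a fully sustained rate, and the helper name is purely illustrative.

```python
def mb_per_s_to_gb_per_min(mb_per_s: float) -> float:
    """Convert a sustained throughput from MB/s to GB/min (decimal units)."""
    seconds_per_minute = 60
    mb_per_gb = 1_000  # decimal gigabytes, assumed for this sketch
    return mb_per_s * seconds_per_minute / mb_per_gb


print(mb_per_s_to_gb_per_min(100))  # 6.0
```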

Lakeflow Declarative Pipelines: Accelerated ETL development built on open standards

After years of operating and evolving DLT with thousands of customers across petabytes of data, we’ve taken everything we learned and created a new open standard: Spark Declarative Pipelines. This is the next evolution in pipeline development – declarative, scalable, and open.

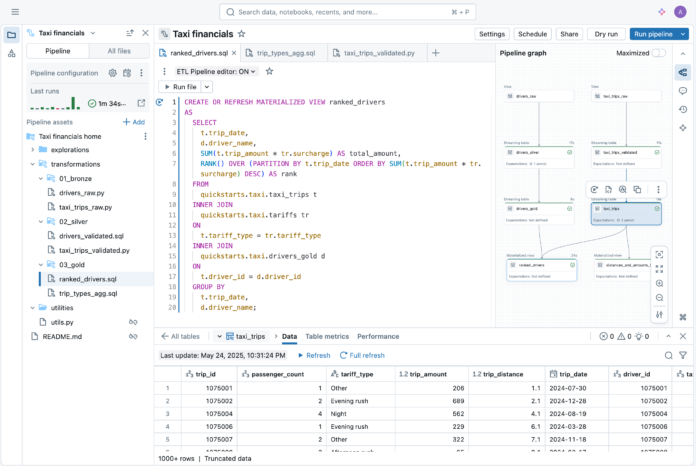

And today, we’re excited to announce the General Availability of Lakeflow Declarative Pipelines, bringing the power of Spark Declarative Pipelines to the Databricks Data Intelligence Platform. It’s 100% source-compatible with the open standard, so you can develop pipelines once and run them anywhere. It’s also 100% backward-compatible with DLT pipelines, so existing users can adopt the new capabilities without rewriting anything. Lakeflow Declarative Pipelines are a fully managed experience on Databricks: hands-off serverless compute, deep integration with Unity Catalog for unified governance, and a purpose-built IDE for Data Engineering.
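The core idea of a declarative pipeline is that you define what each dataset is, in terms of the datasets it reads from, and the engine works out in what order to compute them. The toy Python sketch below illustrates only that idea, using a hypothetical `dataset` decorator and a topological sort from the standard library’s `graphlib`. It is not the Spark Declarative Pipelines or Lakeflow API; `Pipeline`, `dataset`, `run` and the example datasets are all assumptions for illustration.

```python
from graphlib import TopologicalSorter
from typing import Callable


class Pipeline:
    """Toy registry of dataset definitions and their upstream dependencies."""

    def __init__(self) -> None:
        self._defs: dict[str, tuple[Callable[..., list], tuple[str, ...]]] = {}

    def dataset(self, *inputs: str):
        """Register a function as a dataset that depends on the named inputs."""
        def register(fn: Callable[..., list]) -> Callable[..., list]:
            self._defs[fn.__name__] = (fn, inputs)
            return fn
        return register

    def run(self) -> dict[str, list]:
        """Resolve the dependency graph and compute each dataset after its inputs."""
        graph = {name: set(inputs) for name, (_, inputs) in self._defs.items()}
        results: dict[str, list] = {}
        for name in TopologicalSorter(graph).static_order():
            if name not in self._defs:
                raise KeyError(f"undefined dataset: {name}")
            fn, inputs = self._defs[name]
            results[name] = fn(*(results[i] for i in inputs))
        return results


pipeline = Pipeline()


# Definitions can be declared in any order; the engine derives execution order.
@pipeline.dataset("raw_orders")
def large_orders(orders: list) -> list:
    return [o for o in orders if o["amount"] >= 100]


@pipeline.dataset()
def raw_orders() -> list:
    return [{"id": 1, "amount": 250}, {"id": 2, "amount": 40}]


@pipeline.dataset("large_orders")
def large_order_count(rows: list) -> list:
    return [{"count": len(rows)}]


print(pipeline.run()["large_order_count"])  # [{'count': 1}]
```

Because dependencies are declared rather than scheduled by hand, the same definitions yield the same execution order regardless of how they are laid out in the source, which is the property that lets an engine visualize the DAG and plan updates for you.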

The new IDE for Data Engineering is a modern, integrated environment built to streamline the pipeline development experience. It includes:

Code and DAG side by side, with dependency visualization and instant data previews

Context-aware debugging that surfaces points inline

Built-in Git integration for rapid development

AI-assisted authoring and configuration

“The new editor brings everything into one place – code, pipeline graph, results, configuration, and troubleshooting. No more juggling browser tabs or losing context. Development feels more focused and efficient. I can directly see the impact of each code change. One click takes me to the exact error line, which makes debugging faster. Everything connects – code to data; code to tables; tables to the code. Switching between pipelines is easy, and features like auto-configured utility folders remove complexity. This feels like the way pipeline development should work.”

– Chris Sharratt, Data Engineer, Rolls-Royce

Lakeflow Declarative Pipelines are now the unified way to build scalable, governed, and continuously optimized pipelines on Databricks – whether you’re working in code or visually through Lakeflow Designer, a new no-code experience that enables data practitioners of any technical skill level to build reliable data pipelines.

Lakeflow Jobs: Reliable orchestration for all workloads with unified observability

Databricks Workflows has long been trusted to orchestrate mission-critical workflows, with thousands of customers relying on it to run over 110 million jobs every week. With the GA of Lakeflow, we’re evolving Workflows into Lakeflow Jobs, unifying this mature, native orchestrator with the rest of the data engineering stack.

Lakeflow Jobs lets you orchestrate any process on the Data Intelligence Platform with a growing set of capabilities, including:

Support for a comprehensive collection of task types for orchestrating flows that include Declarative Pipelines, notebooks, SQL queries, dbt transformations and even publishing AI/BI dashboards or publishing to Power BI.

Control flow features such as conditional execution, loops and parameter setting at the task or job level.

Triggers for job runs beyond simple scheduling, with file arrival triggers and the new table update triggers, which ensure jobs only run when new data is available.

Serverless jobs that provide automatic optimizations for better performance and lower cost.
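Table update triggers start a run only when an upstream table has actually changed. A minimal sketch of that decision logic might look like the following, assuming each monitored table exposes a monotonically increasing version number (as Delta tables do with their commit versions) and using "any monitored table changed" as the firing rule. The `TableUpdateTrigger` class, its methods and the table names are hypothetical; this is not the Lakeflow Jobs API.

```python
from dataclasses import dataclass, field


@dataclass
class TableUpdateTrigger:
    """Hypothetical trigger that fires only when a monitored table has new commits."""
    tables: list[str]
    last_seen: dict[str, int] = field(default_factory=dict)

    def should_run(self, current_versions: dict[str, int]) -> bool:
        """Return True if any monitored table moved past its last-seen version."""
        return any(
            current_versions.get(t, -1) > self.last_seen.get(t, -1)
            for t in self.tables
        )

    def mark_processed(self, current_versions: dict[str, int]) -> None:
        """Record the versions consumed by a run so they do not re-trigger it."""
        for t in self.tables:
            if t in current_versions:
                self.last_seen[t] = current_versions[t]


trigger = TableUpdateTrigger(tables=["main.sales.orders", "main.sales.customers"])
versions = {"main.sales.orders": 3, "main.sales.customers": 7}
print(trigger.should_run(versions))  # True: nothing processed yet
trigger.mark_processed(versions)
print(trigger.should_run(versions))  # False: no new commits since the last run
versions["main.sales.orders"] = 4
print(trigger.should_run(versions))  # True: orders has a new commit
```

Comparing versions rather than timestamps keeps the check idempotent: re-evaluating the trigger without new commits never launches a redundant run.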

“With serverless Lakeflow Jobs, we’ve achieved a 3–5x improvement in latency. What used to take 10 minutes now takes just 2–3 minutes, significantly reducing processing times. This has enabled us to deliver faster feedback loops for players and coaches, ensuring they get the insights they need in near real time to make actionable decisions.”

– Bryce Dugar, Data Engineering Manager, Cincinnati Reds

As part of Lakeflow’s unification, Lakeflow Jobs brings end-to-end observability into every layer of the data lifecycle, from data ingestion to transformation and complex orchestration. A diverse toolset caters to every monitoring need: visual monitoring tools provide search, status and tracking at a glance; debugging tools like query profiles help optimize performance; alerts and system tables help surface issues and provide historical insights; and data quality expectations enforce rules and ensure high standards for your data pipelines.
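Data quality expectations pair a record-level rule with a policy for records that fail it. In DLT, and now Lakeflow Declarative Pipelines, those policies are to keep the record and report the violation, to drop the record, or to fail the update. The framework-free Python sketch below mimics those three policies for illustration only; the `Expectation` class, `apply_expectations` function, policy strings and example rules are hypothetical and are not the Lakeflow API.

```python
from dataclasses import dataclass
from typing import Callable

POLICIES = {"warn", "drop", "fail"}


@dataclass(frozen=True)
class Expectation:
    """A named record-level rule plus what to do when a record violates it."""
    name: str
    check: Callable[[dict], bool]
    on_violation: str = "warn"  # "warn" keeps the row, "drop" removes it, "fail" aborts

    def __post_init__(self) -> None:
        if self.on_violation not in POLICIES:
            raise ValueError(f"unknown policy: {self.on_violation!r}")


class ExpectationFailed(Exception):
    """Raised when a record violates an expectation whose policy is "fail"."""


def apply_expectations(rows: list[dict], expectations: list[Expectation]):
    """Return the surviving rows and a per-expectation count of violations."""
    kept: list[dict] = []
    violations = {e.name: 0 for e in expectations}
    for row in rows:
        keep = True
        for e in expectations:
            if e.check(row):
                continue
            violations[e.name] += 1
            if e.on_violation == "fail":
                raise ExpectationFailed(f"{e.name} violated by {row!r}")
            if e.on_violation == "drop":
                keep = False
        if keep:
            kept.append(row)
    return kept, violations


rules = [
    Expectation("valid_id", lambda r: r.get("id") is not None, "drop"),
    Expectation("positive_amount", lambda r: r.get("amount", 0) > 0),  # warn only
]
rows = [{"id": 1, "amount": 5}, {"id": None, "amount": 3}, {"id": 2, "amount": -1}]
kept, stats = apply_expectations(rows, rules)
print(kept)   # [{'id': 1, 'amount': 5}, {'id': 2, 'amount': -1}]
print(stats)  # {'valid_id': 1, 'positive_amount': 1}
```

The violation counts are the kind of per-rule metric that monitoring tools can surface over time, while the policy decides whether bad data is tolerated, filtered, or treated as a hard stop.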

Get started with Lakeflow

Lakeflow Connect, Lakeflow Declarative Pipelines and Lakeflow Jobs are all Generally Available for every Databricks customer today. Learn more about Lakeflow here, and visit the official documentation to get started with Lakeflow for your next data engineering project.