The next generation of Amazon SageMaker is the center for all your data, analytics, and AI. SageMaker brings together AWS artificial intelligence and machine learning (AI/ML) and analytics capabilities and delivers an integrated experience for analytics and AI with unified access to data. From Amazon SageMaker Unified Studio, a single interface, you can access your data and use a suite of powerful tools for data processing, SQL analytics, model development, training and inference, as well as generative AI development. This unified experience is assisted by Amazon Q and Amazon SageMaker Catalog (powered by Amazon DataZone), which delivers an embedded generative AI and governance experience at every step.

With data lineage, now part of SageMaker Catalog, domain administrators and data producers can centralize lineage metadata of their data assets in a single place. You can track the flow of data over time, giving you a clear understanding of where it originated, how it has changed, and its ultimate use across the business. By providing this level of transparency around the origin of data, data lineage helps data consumers gain trust that the data is correct for their use case. Because data lineage is captured at the table, column, and job level, data producers can also conduct impact analysis and respond to data issues when needed.

Capture of data lineage in SageMaker begins after connections and data sources are configured, and lineage events are generated when data is transformed in AWS Glue or Amazon Redshift. This capability is also fully compatible with OpenLineage, so you can further extend data lineage capture to other data processing tools. This post walks you through how to use the OpenLineage-compatible API of SageMaker or Amazon DataZone to push data lineage events programmatically from tools supporting the OpenLineage standard, like dbt, Apache Airflow, and Apache Spark.
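To make "pushing lineage events programmatically" concrete, here is a minimal Python sketch that builds an OpenLineage `RunEvent` and submits it through the Amazon DataZone `PostLineageEvent` operation, exposed in boto3 as `post_lineage_event` on the `datazone` client. The job and dataset names, the `producer` URI, and the spec version in `schemaURL` are placeholder assumptions; the client is passed in as a parameter so the functions can be exercised without AWS credentials.

```python
import json
import uuid
from datetime import datetime, timezone

# OpenLineage RunEvent schema URL (spec version 2-0-2 assumed for illustration)
SCHEMA_URL = "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent"


def build_run_event(event_type, job_namespace, job_name, inputs, outputs, run_id=None):
    """Build a minimal OpenLineage RunEvent as a plain dict.

    inputs and outputs are lists of (namespace, name) tuples.
    """
    return {
        "eventType": event_type,  # for example "START" or "COMPLETE"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id or str(uuid.uuid4())},
        "job": {"namespace": job_namespace, "name": job_name},
        "inputs": [{"namespace": ns, "name": n} for ns, n in inputs],
        "outputs": [{"namespace": ns, "name": n} for ns, n in outputs],
        # Hypothetical producer URI identifying the tool that emitted the event
        "producer": "https://example.com/my-lineage-producer",
        "schemaURL": SCHEMA_URL,
    }


def post_event(datazone_client, domain_id, run_event):
    """Send one OpenLineage event to a SageMaker / Amazon DataZone domain."""
    return datazone_client.post_lineage_event(
        domainIdentifier=domain_id,
        event=json.dumps(run_event).encode("utf-8"),  # the API takes the event as a blob
    )
```

In an environment with credentials, you could call `post_event(boto3.client("datazone"), domain_id, event)` with your own domain ID. Tools such as dbt, Airflow, and Spark generate equivalent events for you through their OpenLineage integrations, so hand-built events like this are mostly useful for testing.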

Solution overview

Many third-party and open source tools that are used today to orchestrate and run data pipelines, like dbt, Airflow, and Spark, actively support the OpenLineage standard to provide interoperability across environments. With this capability, you only need to include and configure the right library for your environment to stream lineage events from jobs running on these tools directly to their corresponding output logs or to a target HTTP endpoint that you specify.

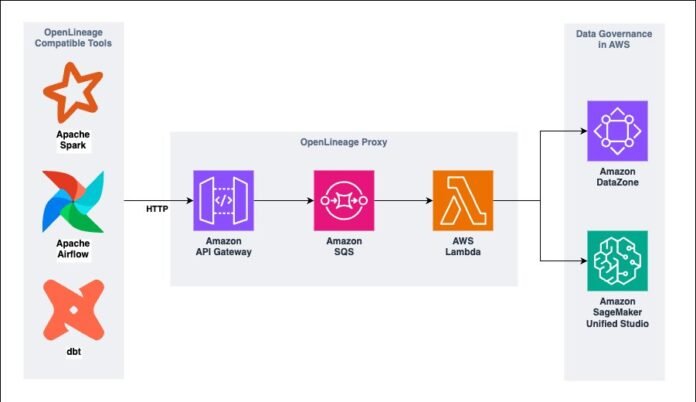

With the target HTTP endpoint option, you can introduce a pattern to post lineage events from these tools into SageMaker or Amazon DataZone to further help you centralize governance of your data assets and processes in a single place. This pattern takes the form of a proxy, and its simplified architecture is illustrated in the following figure.

The way that the proxy for OpenLineage works is straightforward:

Amazon API Gateway exposes an HTTP endpoint and path. Jobs running with the OpenLineage package on top of the supported data processing tools can be set up with the HTTP transport option pointing to this endpoint and path. If connectivity allows, lineage events are streamed into this endpoint as the job runs.

An Amazon Simple Queue Service (Amazon SQS) queue buffers the events as they arrive. Storing them in a queue gives you the option to implement strategies for retries and errors when needed. For cases where event order is required, we recommend first-in-first-out (FIFO) queues; however, SageMaker and Amazon DataZone are able to map incoming OpenLineage events even when they arrive out of order.

An AWS Lambda function retrieves events from the queue in batches. For every event in a batch, the function can perform transformations when needed and post the resulting event to the target SageMaker or Amazon DataZone domain.

Although they're not shown in the architecture, AWS Identity and Access Management (IAM) and Amazon CloudWatch are key capabilities: IAM allows secure interaction between resources with minimal permissions, and CloudWatch provides logging for troubleshooting and observability.
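The Lambda step described above could look roughly like the following Python sketch. It is not the code from the sample repository: it assumes the function is triggered by the SQS queue with `ReportBatchItemFailures` enabled on the event source mapping, reads the target domain from a hypothetical `DOMAIN_ID` environment variable, and exposes a hypothetical `transform` hook where tool-specific fixes would go. Returning `batchItemFailures` means only the failed messages return to the queue for retry, and `print` output lands in CloudWatch Logs.

```python
import json
import os


def transform(lineage_event):
    """Hypothetical hook for tool-specific fixes; identity by default."""
    return lineage_event


def process_records(records, datazone_client, domain_id):
    """Post each SQS record body as an OpenLineage event.

    Returns an SQS partial batch response so that only failed
    messages are retried.
    """
    failures = []
    for record in records:
        try:
            lineage_event = transform(json.loads(record["body"]))
            datazone_client.post_lineage_event(
                domainIdentifier=domain_id,
                event=json.dumps(lineage_event).encode("utf-8"),
            )
        except Exception as exc:  # log and report this message as failed
            print(f"Failed to post message {record['messageId']}: {exc}")
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


_client = None


def lambda_handler(event, context):
    global _client
    if _client is None:
        import boto3  # included in the Lambda Python runtime

        _client = boto3.client("datazone")
    # DOMAIN_ID is a hypothetical environment variable name
    return process_records(event.get("Records", []), _client, os.environ["DOMAIN_ID"])
```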

The AWS sample OpenLineage HTTP Proxy for Amazon SageMaker Governance and Amazon DataZone provides a working implementation of this simplified architecture that you can test and customize as needed. To deploy it in a test environment, follow the steps described in the repository. We use an AWS CloudFormation template to deploy the solution resources.
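Several of the following steps ask for values that the CloudFormation stack exposes as outputs. As a small convenience sketch, assuming boto3 and a stack name of your choosing, the outputs can be read into a dictionary; the actual output key names depend on the sample's template and aren't assumed here.

```python
def stack_outputs(cfn_client, stack_name):
    """Return a CloudFormation stack's outputs as {OutputKey: OutputValue}."""
    response = cfn_client.describe_stacks(StackName=stack_name)
    outputs = response["Stacks"][0].get("Outputs", [])
    return {o["OutputKey"]: o["OutputValue"] for o in outputs}
```

For example, `stack_outputs(boto3.client("cloudformation"), "<your-stack-name>")` returns the API URL and path alongside any other outputs the template defines.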

After you have deployed the OpenLineage HTTP Proxy solution, you can use it to post lineage events from data processing tools like dbt, Airflow, and Spark into a SageMaker or Amazon DataZone domain, as shown in the following examples.
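Before wiring up any tool, you might confirm that the proxy endpoint accepts events. The following Python sketch uses only the standard library to build a POST request carrying a minimal OpenLineage `RunEvent`, which is essentially what the OpenLineage HTTP transport sends. The job namespace and name, the `producer` URI, and the spec version are illustrative assumptions; keep in mind that the proxy forwards this test event to your domain like any other event.

```python
import json
import urllib.request
import uuid
from datetime import datetime, timezone


def build_test_request(proxy_url, proxy_path):
    """Build an HTTP POST carrying a minimal OpenLineage RunEvent."""
    event = {
        "eventType": "COMPLETE",
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": "smoke-test", "name": "proxy_connectivity_check"},
        "inputs": [],
        "outputs": [],
        "producer": "https://example.com/smoke-test",  # hypothetical producer URI
        "schemaURL": "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent",
    }
    # Join the API URL and path from the stack outputs with a single slash
    url = proxy_url.rstrip("/") + "/" + proxy_path.lstrip("/")
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=5):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Calling `send(build_test_request(api_url, api_path))` returns the status code on success; `urlopen` raises `HTTPError` for error responses, which usually points to a wrong path or an access restriction on the endpoint.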

Set up the OpenLineage package for Spark in AWS Glue 4.0

AWS Glue added built-in support for OpenLineage with AWS Glue 5.0 (to learn more, see Introducing AWS Glue 5.0 for Apache Spark). For jobs that are still running on AWS Glue 4.0, you can still stream OpenLineage events into SageMaker or Amazon DataZone by using the OpenLineage HTTP Proxy solution. This serves as an example that can be applied to other platforms running Spark, like Amazon EMR, third-party solutions, or self-managed clusters.

To add OpenLineage capabilities to an AWS Glue 4.0 job and configure it to stream lineage events into the OpenLineage HTTP Proxy solution, complete the following steps:

Download the official OpenLineage package for Spark. For our example, we used the JAR package for Scala 2.12, release 1.9.1.

Store the JAR file in an Amazon Simple Storage Service (Amazon S3) bucket that your AWS Glue job can access.

On the AWS Glue console, open your job.

Under Libraries, for Dependent JARs path, enter the path of the JAR package stored in your S3 bucket.

In the Job parameters section, add the following parameters:

Enable the OpenLineage package:

Key: --user-jars-first

Value: true

Configure how the OpenLineage package will stream lineage events. Set the transport URL and the endpoint path to the corresponding values of the OpenLineage HTTP Proxy solution; these values can be found as outputs of the deployed CloudFormation stack. Set spark.glue.accountId to your AWS account ID. Enter the following as a single value, with each setting after the first prefixed by --conf.

Key: --conf

Value:

spark.extraListeners=io.openlineage.spark.agent.OpenLineageSparkListener

--conf spark.openlineage.transport.type=http

--conf spark.openlineage.transport.url=

--conf spark.openlineage.transport.endpoint=/

--conf spark.openlineage.facets.custom_environment_variables=[AWS_DEFAULT_REGION;GLUE_VERSION;GLUE_COMMAND_CRITERIA;GLUE_PYTHON_VERSION;]

--conf spark.glue.accountId=

With this setup, the AWS Glue 4.0 job uses the HTTP transport option of the OpenLineage package to stream lineage events into the OpenLineage proxy, which posts the events to the SageMaker or Amazon DataZone domain.

Run the AWS Glue 4.0 job.
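If you manage Glue jobs as code rather than through the console, the same job parameters could be assembled programmatically. The following Python sketch builds them as a dictionary suitable for a job's default arguments (for example, when calling the AWS Glue CreateJob or UpdateJob API). It assumes `--extra-jars` as the API counterpart of the console's Dependent JARs path field; the function name and its arguments are illustrative.

```python
def glue_openlineage_arguments(jar_s3_path, proxy_url, proxy_path, account_id):
    """Build AWS Glue 4.0 job parameters that enable the OpenLineage Spark listener."""
    conf_parts = [
        "spark.extraListeners=io.openlineage.spark.agent.OpenLineageSparkListener",
        "spark.openlineage.transport.type=http",
        f"spark.openlineage.transport.url={proxy_url}",
        # Normalize the path so it always starts with a single slash
        f"spark.openlineage.transport.endpoint=/{proxy_path.lstrip('/')}",
        "spark.openlineage.facets.custom_environment_variables="
        "[AWS_DEFAULT_REGION;GLUE_VERSION;GLUE_COMMAND_CRITERIA;GLUE_PYTHON_VERSION;]",
        f"spark.glue.accountId={account_id}",
    ]
    return {
        "--extra-jars": jar_s3_path,  # S3 path of the OpenLineage Spark JAR
        "--user-jars-first": "true",
        # A single --conf key; additional settings are chained with " --conf "
        "--conf": " --conf ".join(conf_parts),
    }
```

Chaining the settings inside one `--conf` value mirrors how the console value is entered, because a job accepts only one argument per key.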

The job's resulting datasets should be sourced into SageMaker or Amazon DataZone so that OpenLineage events are mapped to them. As you explore the sourced dataset in SageMaker Unified Studio, you can observe its lineage path as described by the OpenLineage events streamed through the OpenLineage proxy.

When working with Amazon DataZone, you get the same result.

The lineage path in this example is extensive and maps the resulting dataset down to its origin: in this case, a couple of tables hosted in a relational database and transformed through a data pipeline with two AWS Glue 4.0 (Spark) jobs.

Set up the OpenLineage package for dbt

dbt has rapidly become a popular framework to build data pipelines on top of data processing and data warehouse tools like Amazon Redshift, Amazon EMR, and AWS Glue, as well as other traditional and third-party solutions. This framework supports OpenLineage as a way to standardize the generation of lineage events and integrate with the growing data governance ecosystem. dbt deployments might vary per environment, which is why we don't dive into the specifics in this post. However, to configure your dbt project to use the OpenLineage HTTP Proxy solution, complete the following steps:

Install the OpenLineage package for dbt. You can learn more in the OpenLineage documentation.

In the root folder of your dbt project, create an openlineage.yml file where you specify the transport configuration. Set url and endpoint to the values of the OpenLineage HTTP Proxy solution. These values can be found as outputs of the deployed CloudFormation stack.

transport:
  type: http
  url:
  endpoint:
  timeout: 5

Run your dbt pipeline. As explained in the OpenLineage documentation, instead of running the standard dbt run command, you run the dbt-ol run command. The latter is a wrapper on top of the standard dbt run command so that lineage events are captured and streamed as configured.
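For teams that script their dbt runs, the last two steps can be automated. This Python sketch writes the same openlineage.yml transport configuration into the project root and invokes the `dbt-ol run` wrapper through `subprocess`. The function names are hypothetical, and it assumes the OpenLineage dbt package is installed so that `dbt-ol` is on the `PATH`.

```python
import subprocess
from pathlib import Path


def write_openlineage_yml(project_dir, proxy_url, proxy_path, timeout=5):
    """Write the HTTP transport configuration into the dbt project root."""
    content = (
        "transport:\n"
        "  type: http\n"
        f"  url: {proxy_url}\n"
        f"  endpoint: {proxy_path}\n"
        f"  timeout: {timeout}\n"
    )
    path = Path(project_dir) / "openlineage.yml"
    path.write_text(content)
    return path


def run_dbt_with_lineage(project_dir, extra_args=()):
    """Run `dbt-ol run` from the project root so openlineage.yml is picked up."""
    return subprocess.run(["dbt-ol", "run", *extra_args], cwd=project_dir, check=True)
```

Running from the project root matters because the OpenLineage client looks for openlineage.yml in the current working directory by default.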

The job's resulting datasets should be sourced into SageMaker or Amazon DataZone so that OpenLineage events are mapped to them. As you explore the sourced dataset in SageMaker Unified Studio, you can observe its lineage path as described by the OpenLineage events streamed through the OpenLineage proxy.

When working with Amazon DataZone, you get the same result.

In this example, the dbt project runs on top of Amazon Redshift, which is a common use case among customers. Amazon Redshift is integrated with SageMaker and Amazon DataZone for automatic lineage capture, but those capabilities weren't used in this example, to illustrate how you can still integrate OpenLineage events from dbt using the pattern implemented in the OpenLineage HTTP Proxy solution. The dbt pipeline is made of two stages running sequentially, which are illustrated in the lineage path as the nodes with the dbt type.

Set up the OpenLineage package for Airflow

Airflow is a well-positioned tool to orchestrate data pipelines at any scale. AWS provides Amazon Managed Workflows for Apache Airflow (Amazon MWAA) as a managed alternative for customers that want to reduce management overhead and accelerate the development of their data strategy with Airflow in a cost-effective way. Airflow also supports OpenLineage, so you can centralize lineage with tools like SageMaker and Amazon DataZone.

The following steps are specific to Amazon MWAA, but they can be extrapolated to other forms of Airflow deployment:

Install the OpenLineage package for Airflow. You can learn more in the OpenLineage documentation. For Airflow versions 2.7 and later, we recommend the native Airflow OpenLineage package (apache-airflow-providers-openlineage), which is what this example uses.

To install the package, add it to the requirements.txt file that you store in Amazon S3 and point to when provisioning your Amazon MWAA environment. To learn more, refer to Managing Python dependencies in requirements.txt.

While installing the OpenLineage package, or afterwards, you can configure it to send lineage events to the OpenLineage proxy:

When filling in the form to create a new Amazon MWAA environment or edit an existing one, add the following in the Airflow configuration options section. Set url and endpoint to the values of the OpenLineage HTTP Proxy solution. These values can be found as outputs of the deployed CloudFormation stack:

Configuration option: openlineage.transport

Custom value: {"type": "http", "url": "", "endpoint": ""}

Run your pipeline.
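Hand-typing JSON into a form is error-prone; curly quotes, for instance, make the value invalid. This Python sketch generates the `openlineage.transport` value with `json.dumps` and, for self-managed Airflow deployments, maps the option to an environment variable using Airflow's `AIRFLOW__{SECTION}__{KEY}` convention. The function names are illustrative.

```python
import json


def openlineage_transport_option(proxy_url, proxy_path):
    """Return the value for the Airflow configuration option openlineage.transport."""
    return json.dumps({"type": "http", "url": proxy_url, "endpoint": proxy_path})


def as_environment_variable(option, value):
    """Map an Airflow 'section.key' option to its AIRFLOW__SECTION__KEY variable."""
    section, key = option.split(".", 1)
    return f"AIRFLOW__{section.upper()}__{key.upper()}", value
```

On Amazon MWAA you would paste the generated value into the custom value field; on a self-managed deployment, exporting `AIRFLOW__OPENLINEAGE__TRANSPORT` with the same value should have the same effect.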

The Airflow tasks automatically use the transport configuration to stream lineage events into the OpenLineage proxy as they run. The task's resulting datasets should be sourced into SageMaker or Amazon DataZone so that OpenLineage events are mapped to them. As you explore the sourced dataset in SageMaker Unified Studio, you can observe its lineage path as described by the OpenLineage events streamed through the OpenLineage proxy.

When working with Amazon DataZone, you get the same result.

In this example, the Amazon MWAA Directed Acyclic Graph (DAG) runs on top of Amazon Redshift, similar to the previous dbt example. However, it still doesn't use the native integration for automatic lineage capture between Amazon Redshift and SageMaker or Amazon DataZone. This way, we can illustrate how you can still integrate OpenLineage events from Airflow using the pattern implemented in the OpenLineage HTTP Proxy solution. The Airflow DAG is made of a single task that outputs the resulting dataset by using datasets that were created as part of the dbt pipeline in the previous example. This is illustrated in the lineage path, which includes nodes with the dbt type and a node with the AIRFLOW type. With this final example, note how SageMaker and Amazon DataZone map all datasets and jobs to reflect the reality of your data pipelines.

Additional considerations when implementing the OpenLineage proxy pattern

The OpenLineage proxy pattern implemented in the sample OpenLineage HTTP Proxy solution and presented in this post has proven to be a practical approach to integrate a growing set of data processing tools into a centralized data governance strategy on top of SageMaker. We encourage you to dive into it and use it in your test environments to learn how it can best be used for your specific setup. If you're interested in taking this pattern to production, we suggest you first review it thoroughly and customize it to your particular needs. The following are some items worth reviewing as you evaluate this pattern implementation:

The solution used in the examples of this post uses a public API endpoint with no authentication or authorization mechanism. For a production workload, we recommend restricting access to the endpoint so that only authorized resources are able to stream messages into it. To learn more, refer to Control and manage access to HTTP APIs in API Gateway.

The logic implemented in the Lambda function is intended to be customized depending on your use case. You might need to implement transformation logic, depending on how OpenLineage events are created by the tool you're using. As a reference, for the Amazon MWAA example of this post, some minor transformations were required on the name and namespace fields of the inputs and outputs elements of the event for full compatibility with the format expected for Amazon Redshift datasets, as described in the OpenLineage dataset naming conventions. You might also need to change how the function logs execution details, or add retry and error-handling logic.
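As an illustration of the kind of transformation this item refers to, the following Python sketch rewrites dataset references to the Redshift format that, as we understand the OpenLineage naming conventions, uses a namespace of `redshift://{cluster_identifier}.{region_name}:{port}` and a name of `{database}.{schema}.{table}`. The exact changes the example required aren't detailed in this post, so this is a hypothetical version: it assumes every input and output is a Redshift dataset, that two-part names lack only the database, and that the cluster identifier, Region, and database are known to the function.

```python
def normalize_redshift_dataset(dataset, cluster_id, region, database, port=5439):
    """Rewrite one dataset reference to the OpenLineage Redshift naming format."""
    parts = dataset["name"].split(".")
    if len(parts) == 2:  # schema.table: prefix the database
        parts = [database] + parts
    elif len(parts) != 3:
        raise ValueError(f"Unexpected dataset name: {dataset['name']}")
    return {
        **dataset,  # keep facets and any other fields unchanged
        "namespace": f"redshift://{cluster_id}.{region}:{port}",
        "name": ".".join(parts),
    }


def normalize_event(event, **redshift):
    """Apply the rewrite to all inputs and outputs of an OpenLineage event."""
    for key in ("inputs", "outputs"):
        event[key] = [normalize_redshift_dataset(d, **redshift) for d in event.get(key, [])]
    return event
```

A function like this would sit in the Lambda function's transformation step, before the event is posted to the domain.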

The SQS queue used in the OpenLineage HTTP Proxy solution is a standard queue, which means that events aren't guaranteed to be delivered in order. If ordering is a requirement, you could use a FIFO queue instead.
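If you switch to a FIFO queue, whichever component enqueues events must supply a `MessageGroupId`, plus either a `MessageDeduplicationId` or content-based deduplication on the queue. This Python sketch, assuming a boto3 SQS client, groups messages by OpenLineage run ID so that events from the same run (for example, START and COMPLETE) are delivered in order, while different runs can still be processed in parallel. The function name is hypothetical.

```python
import hashlib
import json


def send_fifo(sqs_client, queue_url, lineage_event):
    """Send an OpenLineage event to a FIFO queue, grouped by run ID."""
    if not queue_url.endswith(".fifo"):
        raise ValueError("FIFO queue URLs end with .fifo")
    # Sorted keys make the body, and therefore its hash, deterministic
    body = json.dumps(lineage_event, sort_keys=True)
    return sqs_client.send_message(
        QueueUrl=queue_url,
        MessageBody=body,
        # Events of the same run share a group, so they are delivered in order
        MessageGroupId=lineage_event["run"]["runId"],
        # Explicit deduplication ID derived from the message content
        MessageDeduplicationId=hashlib.sha256(body.encode("utf-8")).hexdigest(),
    )
```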

For cases where you want to post OpenLineage events directly into SageMaker or Amazon DataZone without using the proxy pattern explained in this post, a custom transport is now available as an extension of the OpenLineage project in version 1.33.0. Use this feature when you don't need additional controls over your OpenLineage event stream, for example, if you don't need any custom transformation logic.

Summary

In this post, we showed how to use the OpenLineage-compatible APIs of SageMaker to capture data lineage from any tool supporting this standard, by following an architectural pattern introduced as the OpenLineage proxy. We presented some examples of how you can set up tools like dbt, Airflow, and Spark to stream lineage events to the OpenLineage proxy, which subsequently posts them to a SageMaker or Amazon DataZone domain. Finally, we introduced a working implementation of this pattern that you can test and discussed some considerations when taking this pattern to production.

The SageMaker compatibility with OpenLineage can help simplify governance of your data assets and increase trust in your data. This capability is one of the many features now available to build a comprehensive governance strategy powered by data lineage, data quality, business metadata, data discovery, access automation, and more. By bundling data governance capabilities with the growing set of tools available for data and AI development, you can derive value from your data faster and get closer to consolidating a data-driven culture. Try out this solution and get started with SageMaker to join the growing set of customers that are modernizing their data platform.

About the authors

Jose Romero is a Senior Solutions Architect for Startups at AWS, based in Austin, Texas. He is passionate about helping customers architect modern platforms at scale for data, AI, and ML. As a former senior architect in AWS Professional Services, he enjoys building and sharing solutions for common complex problems so that customers can accelerate their cloud journey and adopt best practices. Connect with him on LinkedIn.

Priya Tiruthani is a Senior Technical Product Manager with Amazon SageMaker Catalog (Amazon DataZone) at AWS. She focuses on building products and their capabilities in data analytics and governance. She is passionate about building innovative products to address and simplify customers' challenges in their end-to-end data journey. Outside of work, she enjoys being outdoors to hike and capture nature's beauty. Connect with her on LinkedIn.