For contemporary organizations constructed on knowledge insights, efficient knowledge administration is essential for powering superior analytics and machine studying (ML) actions. As knowledge use circumstances develop into extra advanced, knowledge engineering groups require refined tooling to deal with versioning, rising knowledge volumes, and schema modifications throughout a number of knowledge sources and purposes.

Apache Iceberg has emerged as a preferred alternative for knowledge lakes, providing ACID (Atomicity, Consistency, Isolation, Sturdiness) transactions, schema evolution, and time journey capabilities. Iceberg tables will be accessed from numerous distributed knowledge processing frameworks like Apache Spark and Trino, making it a versatile answer for various knowledge processing wants. Among the many out there instruments for working with Iceberg, PyIceberg stands out as a Python implementation that allows desk entry and administration with out requiring distributed compute sources.

On this put up, we display how PyIceberg, built-in with the AWS Glue Knowledge Catalog and AWS Lambda, supplies a light-weight method to harness Iceberg’s highly effective options by way of intuitive Python interfaces. We present how this integration permits groups to begin working with Iceberg tables with minimal setup and infrastructure dependencies.

PyIceberg’s key capabilities and benefits

One among PyIceberg’s major benefits is its light-weight nature. With out requiring distributed computing frameworks, groups can carry out desk operations instantly from Python purposes, making it appropriate for small to medium-scale knowledge exploration and evaluation with minimal studying curve. As well as, PyIceberg is built-in with Python knowledge evaluation libraries like Pandas and Polars, so knowledge customers can use their present expertise and workflows.

When utilizing PyIceberg with the Knowledge Catalog and Amazon Easy Storage Service (Amazon S3), knowledge groups can retailer and handle their tables in a very serverless setting. This implies knowledge groups can give attention to evaluation and insights somewhat than infrastructure administration.

Moreover, Iceberg tables managed by way of PyIceberg are appropriate with AWS knowledge analytics providers. Though PyIceberg operates on a single node and has efficiency limitations with giant knowledge volumes, the identical tables will be effectively processed at scale utilizing providers comparable to Amazon Athena and AWS Glue. This permits groups to make use of PyIceberg for speedy growth and testing, then transition to manufacturing workloads with larger-scale processing engines—whereas sustaining consistency of their knowledge administration method.

Consultant use case

The next are frequent eventualities the place PyIceberg will be notably helpful:

Knowledge science experimentation and have engineering – In knowledge science, experiment reproducibility is essential for sustaining dependable and environment friendly analyses and fashions. Nonetheless, constantly updating organizational knowledge makes it difficult to handle knowledge snapshots for vital enterprise occasions, mannequin coaching, and constant reference. Knowledge scientists can question historic snapshots by way of time journey capabilities and file vital variations utilizing tagging options. With PyIceberg, they will obtain these advantages of their Python setting utilizing acquainted instruments like Pandas. Due to Iceberg’s ACID capabilities, they will entry constant knowledge even when tables are being actively up to date.

Serverless knowledge processing with Lambda – Organizations typically have to course of knowledge and keep analytical tables effectively with out managing advanced infrastructure. Utilizing PyIceberg with Lambda, groups can construct event-driven knowledge processing and scheduled desk updates by way of serverless capabilities. PyIceberg’s light-weight nature makes it well-suited for serverless environments, enabling easy knowledge processing duties like knowledge validation, transformation, and ingestion. These tables stay accessible for each updates and analytics by way of numerous AWS providers, permitting groups to construct environment friendly knowledge pipelines with out managing servers or clusters.

Occasion-driven knowledge ingestion and evaluation with PyIceberg

On this part, we discover a sensible instance of utilizing PyIceberg for knowledge processing and evaluation utilizing NYC yellow taxi journey knowledge. To simulate an event-driven knowledge processing situation, we use Lambda to insert pattern knowledge into an Iceberg desk, representing how real-time taxi journey data could be processed. This instance will display how PyIceberg can streamline workflows by combining environment friendly knowledge ingestion with versatile evaluation capabilities.

Think about your workforce faces a number of necessities:

The information processing answer must be cost-effective and maintainable, avoiding the complexity of managing distributed computing clusters for this moderately-sized dataset.

Analysts want the flexibility to carry out versatile queries and explorations utilizing acquainted Python instruments. For instance, they may want to match historic snapshots with present knowledge to investigate developments over time.

The answer ought to have the flexibility to develop to be extra scalable sooner or later.

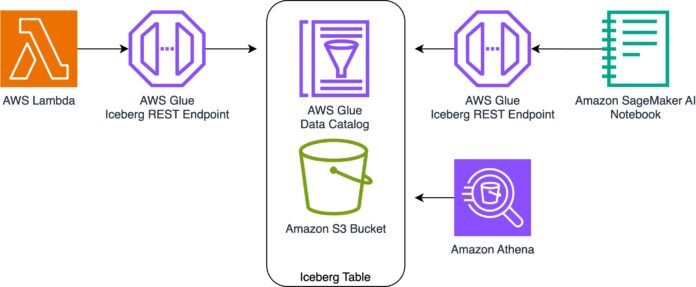

To handle these necessities, we implement an answer that mixes Lambda for knowledge processing with Jupyter notebooks for evaluation, each powered by PyIceberg. This method supplies a light-weight but sturdy structure that maintains knowledge consistency whereas enabling versatile evaluation workflows. On the finish of the walkthrough, we additionally question this knowledge utilizing Athena to display compatibility with a number of Iceberg-supporting instruments and present how the structure can scale.

We stroll by way of the next high-level steps:

Use Lambda to write down pattern NYC yellow taxi journey knowledge to an Iceberg desk on Amazon S3 utilizing PyIceberg with an AWS Glue Iceberg REST endpoint. In a real-world situation, this Lambda operate can be triggered by an occasion from a queuing part like Amazon Easy Queue Service (Amazon SQS). For extra particulars, see Utilizing Lambda with Amazon SQS.

Analyze desk knowledge in a Jupyter pocket book utilizing PyIceberg by way of the AWS Glue Iceberg REST endpoint.

Question the info utilizing Athena to display Iceberg’s flexibility.

The next diagram illustrates the structure.

When implementing this structure, it’s vital to notice that Lambda capabilities can have a number of concurrent invocations when triggered by occasions. This concurrent invocation may result in transaction conflicts when writing to Iceberg tables. To deal with this, it is best to implement an applicable retry mechanism and punctiliously handle concurrency ranges. In case you’re utilizing Amazon SQS as an occasion supply, you possibly can management concurrent invocations by way of the SQS occasion supply’s most concurrency setting.

Stipulations

The next conditions are needed for this use case:

Arrange sources with AWS CloudFormation

You should use the supplied CloudFormation template to arrange the next sources:

Full the next steps to deploy the sources:

Select Launch stack.

![]()

For Parameters, pyiceberg_lambda_blog_database is about by default. You too can change the default worth. In case you change the database title, bear in mind to exchange pyiceberg_lambda_blog_database along with your chosen title in all subsequent steps. Then, select Subsequent.

Select Subsequent.

Choose I acknowledge that AWS CloudFormation may create IAM sources with customized names.

Select Submit.

Construct and run a Lambda operate

Let’s construct a Lambda operate to course of incoming data utilizing PyIceberg. This operate creates an Iceberg desk referred to as nyc_yellow_table within the database pyiceberg_lambda_blog_database within the Knowledge Catalog if it doesn’t exist. It then generates pattern NYC taxi journey knowledge to simulate incoming data and inserts it into nyc_yellow_table.

Though we invoke this operate manually on this instance, in real-world eventualities, this Lambda operate can be triggered by precise occasions, comparable to messages from Amazon SQS. When implementing real-world use circumstances, the operate code have to be modified to obtain the occasion knowledge and course of it primarily based on the necessities.

We deploy the operate utilizing container photographs because the deployment package deal. To create a Lambda operate from a container picture, construct your picture on CloudShell and push it to an ECR repository. Full the next steps:

Check in to the AWS Administration Console and launch CloudShell.

Create a working listing.

mkdir pyiceberg_blog

cd pyiceberg_blog

Obtain the Lambda script lambda_function.py.

aws s3 cp s3://aws-blogs-artifacts-public/artifacts/BDB-5013/lambda_function.py .

This script performs the next duties:

Creates an Iceberg desk with the NYC taxi schema within the Knowledge Catalog

Generates a random NYC taxi dataset

Inserts this knowledge into the desk

Let’s break down the important components of this Lambda operate:

Iceberg catalog configuration – The next code defines an Iceberg catalog that connects to the AWS Glue Iceberg REST endpoint:

# Configure the catalog

catalog_properties = {

“kind”: “relaxation”,

“uri”: f”https://glue.{area}.amazonaws.com/iceberg”,

“s3.area”: area,

“relaxation.sigv4-enabled”: “true”,

“relaxation.signing-name”: “glue”,

“relaxation.signing-region”: area

}

catalog = load_catalog(**catalog_properties)

Desk schema definition – The next code defines the Iceberg desk schema for the NYC taxi dataset. The desk consists of:

Schema columns outlined within the Schema

Partitioning by vendorid and tpep_pickup_datetime utilizing PartitionSpec

Day remodel utilized to tpep_pickup_datetime for each day file administration

Type ordering by tpep_pickup_datetime and tpep_dropoff_datetime

When making use of the day remodel to timestamp columns, Iceberg robotically handles date-based partitioning hierarchically. This implies a single day remodel permits partition pruning on the yr, month, and day ranges with out requiring express transforms for every stage. For extra particulars about Iceberg partitioning, see Partitioning.

# Desk Definition

schema = Schema(

NestedField(field_id=1, title=”vendorid”, field_type=LongType(), required=False),

NestedField(field_id=2, title=”tpep_pickup_datetime”, field_type=TimestampType(), required=False),

NestedField(field_id=3, title=”tpep_dropoff_datetime”, field_type=TimestampType(), required=False),

NestedField(field_id=4, title=”passenger_count”, field_type=LongType(), required=False),

NestedField(field_id=5, title=”trip_distance”, field_type=DoubleType(), required=False),

NestedField(field_id=6, title=”ratecodeid”, field_type=LongType(), required=False),

NestedField(field_id=7, title=”store_and_fwd_flag”, field_type=StringType(), required=False),

NestedField(field_id=8, title=”pulocationid”, field_type=LongType(), required=False),

NestedField(field_id=9, title=”dolocationid”, field_type=LongType(), required=False),

NestedField(field_id=10, title=”payment_type”, field_type=LongType(), required=False),

NestedField(field_id=11, title=”fare_amount”, field_type=DoubleType(), required=False),

NestedField(field_id=12, title=”additional”, field_type=DoubleType(), required=False),

NestedField(field_id=13, title=”mta_tax”, field_type=DoubleType(), required=False),

NestedField(field_id=14, title=”tip_amount”, field_type=DoubleType(), required=False),

NestedField(field_id=15, title=”tolls_amount”, field_type=DoubleType(), required=False),

NestedField(field_id=16, title=”improvement_surcharge”, field_type=DoubleType(), required=False),

NestedField(field_id=17, title=”total_amount”, field_type=DoubleType(), required=False),

NestedField(field_id=18, title=”congestion_surcharge”, field_type=DoubleType(), required=False),

NestedField(field_id=19, title=”airport_fee”, field_type=DoubleType(), required=False),

)

# Outline partition spec

partition_spec = PartitionSpec(

PartitionField(source_id=1, field_id=1001, remodel=IdentityTransform(), title=”vendorid_idenitty”),

PartitionField(source_id=2, field_id=1002, remodel=DayTransform(), title=”tpep_pickup_day”),

)

# Outline type order

sort_order = SortOrder(

SortField(source_id=2, remodel=DayTransform()),

SortField(source_id=3, remodel=DayTransform())

)

database_name = os.environ.get(‘GLUE_DATABASE_NAME’)

table_name = os.environ.get(‘ICEBERG_TABLE_NAME’)

identifier = f”{database_name}.{table_name}”

# Create the desk if it would not exist

location = f”s3://pyiceberg-lambda-blog-{account_id}-{area}/{database_name}/{table_name}”

if not catalog.table_exists(identifier):

desk = catalog.create_table(

identifier=identifier,

schema=schema,

location=location,

partition_spec=partition_spec,

sort_order=sort_order

)

else:

desk = catalog.load_table(identifier=identifier)

Knowledge era and insertion – The next code generates random knowledge and inserts it into the desk. This instance demonstrates an append-only sample, the place new data are constantly added to trace enterprise occasions and transactions:

# Generate random knowledge

data = generate_random_data()

# Convert to Arrow Desk

df = pa.Desk.from_pylist(data)

# Write knowledge utilizing PyIceberg

desk.append(df)

Obtain the Dockerfile. It defines the container picture on your operate code.

aws s3 cp s3://aws-blogs-artifacts-public/artifacts/BDB-5013/Dockerfile .

Obtain the necessities.txt. It defines the Python packages required on your operate code.

aws s3 cp s3://aws-blogs-artifacts-public/artifacts/BDB-5013/necessities.txt .

At this level, your working listing ought to comprise the next three recordsdata:

Dockerfile

lambda_function.py

necessities.txt

Set the setting variables. Exchange along with your AWS account ID:

Construct the Docker picture:

docker construct –provenance=false -t localhost/pyiceberg-lambda .

# Affirm constructed picture

docker photographs | grep pyiceberg-lambda

Set a tag to the picture:

docker tag localhost/pyiceberg-lambda:newest ${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com/pyiceberg-lambda-repository:newest

Log in to the ECR repository created by AWS CloudFormation:

aws ecr get-login-password –region ${AWS_REGION} | docker login –username AWS –password-stdin ${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com

Push the picture to the ECR repository:

docker push ${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com/pyiceberg-lambda-repository:newest

Create a Lambda operate utilizing the container picture you pushed to Amazon ECR:

aws lambda create-function

–function-name pyiceberg-lambda-function

–package-type Picture

–code ImageUri=${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com/pyiceberg-lambda-repository:newest

–role arn:aws:iam::${AWS_ACCOUNT_ID}:function/pyiceberg-lambda-function-role-${AWS_REGION}

–environment “Variables={ICEBERG_TABLE_NAME=nyc_yellow_table, GLUE_DATABASE_NAME=pyiceberg_lambda_blog_database}”

–region ${AWS_REGION}

–timeout 60

–memory-size 1024

Invoke the operate a minimum of 5 occasions to create a number of snapshots, which we are going to look at within the following sections. Be aware that we’re invoking the operate manually to simulate event-driven knowledge ingestion. In actual world eventualities, Lambda capabilities might be robotically invoked with event-driven style.

aws lambda invoke

–function-name arn:aws:lambda:${AWS_REGION}:${AWS_ACCOUNT_ID}:operate:pyiceberg-lambda-function

–log-type Tail

outputfile.txt

–query ‘LogResult’ | tr -d ‘”‘ | base64 -d

At this level, you may have deployed and run the Lambda operate. The operate creates the nyc_yellow_table Iceberg desk within the pyiceberg_lambda_blog_database database. It additionally generates and inserts pattern knowledge into this desk. We’ll discover the data within the desk in later steps.

For extra detailed details about constructing Lambda capabilities with containers, see Create a Lambda operate utilizing a container picture.

Discover the info with Jupyter utilizing PyIceberg

On this part, we display how one can entry and analyze the info saved in Iceberg tables registered within the Knowledge Catalog. Utilizing a Jupyter pocket book with PyIceberg, we entry the taxi journey knowledge created by our Lambda operate and look at totally different snapshots as new data arrive. We additionally tag particular snapshots to retain vital ones, and create new tables for additional evaluation.

Full the next steps to open the pocket book with Jupyter on the SageMaker AI pocket book occasion:

On the SageMaker AI console, select Notebooks within the navigation pane.

Select Open JupyterLab subsequent to the pocket book that you just created utilizing the CloudFormation template.

Obtain the pocket book and open it in a Jupyter setting in your SageMaker AI pocket book.

Open uploaded pyiceberg_notebook.ipynb.

Within the kernel choice dialog, depart the default choice and select Choose.

From this level ahead, you’ll work by way of the pocket book by operating cells so as.

Connecting Catalog and Scanning Tables

You’ll be able to entry the Iceberg desk utilizing PyIceberg. The next code connects to the AWS Glue Iceberg REST endpoint and masses the nyc_yellow_table desk on the pyiceberg_lambda_blog_database database:

import pyarrow as pa

from pyiceberg.catalog import load_catalog

import boto3

# Set AWS area

sts = boto3.consumer(‘sts’)

area = sts._client_config.region_name

# Configure catalog connection properties

catalog_properties = {

“kind”: “relaxation”,

“uri”: f”https://glue.{area}.amazonaws.com/iceberg”,

“s3.area”: area,

“relaxation.sigv4-enabled”: “true”,

“relaxation.signing-name”: “glue”,

“relaxation.signing-region”: area

}

# Specify database and desk names

database_name = “pyiceberg_lambda_blog_database”

table_name = “nyc_yellow_table”

# Load catalog and get desk

catalog = load_catalog(**catalog_properties)

desk = catalog.load_table(f”{database_name}.{table_name}”)

You’ll be able to question full knowledge from the Iceberg desk as an Apache Arrow desk and convert it to a Pandas DataFrame.

Working with Snapshots

One of many vital options of Iceberg is snapshot-based model management. Snapshots are robotically created every time knowledge modifications happen within the desk. You’ll be able to retrieve knowledge from a particular snapshot, as proven within the following instance.

# Get knowledge from a particular snapshot ID

snapshot_id = snapshots.to_pandas()(“snapshot_id”)(3)

snapshot_pa_table = desk.scan(snapshot_id=snapshot_id).to_arrow()

snapshot_df = snapshot_pa_table.to_pandas()

You’ll be able to evaluate the present knowledge with historic knowledge from any time limit primarily based on snapshots. On this case, you’re evaluating the variations in knowledge distribution between the most recent desk and a snapshot desk:

# Examine the distribution of total_amount within the specified snapshot and the most recent knowledge.

import matplotlib.pyplot as plt

plt.determine(figsize=(4, 3))

df(‘total_amount’).hist(bins=30, density=True, label=”newest”, alpha=0.5)

snapshot_df(‘total_amount’).hist(bins=30, density=True, label=”snapshot”, alpha=0.5)

plt.title(‘Distribution of total_amount’)

plt.xlabel(‘total_amount’)

plt.ylabel(‘relative Frequency’)

plt.legend()

plt.present()

Tagging snapshots

You’ll be able to tag particular snapshots with an arbitrary title and question particular snapshots with that title later. That is helpful when managing snapshots of vital occasions.

On this instance, you question a snapshot specifying the tag checkpointTag. Right here, you’re utilizing the polars to create a brand new DataFrame by including a brand new column referred to as trip_duration primarily based on present columns tpep_dropoff_datetime and tpep_pickup_datetime columns:

# retrive tagged snapshot desk as polars knowledge body

import polars as pl

# Get snapshot id from tag title

df = desk.examine.refs().to_pandas()

filtered_df = df(df(“title”) == tag_name)

tag_snapshot_id = filtered_df(“snapshot_id”).iloc(0)

# Scan Desk primarily based on the snapshot id

tag_pa_table = desk.scan(snapshot_id=tag_snapshot_id).to_arrow()

tag_df = pl.from_arrow(tag_pa_table)

# Course of the info including a brand new column “trip_duration” from test level snapshot.

def preprocess_data(df):

df = df.choose((“vendorid”, “tpep_pickup_datetime”, “tpep_dropoff_datetime”,

“passenger_count”, “trip_distance”, “fare_amount”))

df = df.with_columns(

((pl.col(“tpep_dropoff_datetime”) – pl.col(“tpep_pickup_datetime”))

.dt.total_seconds() // 60).alias(“trip_duration”))

return df

processed_df = preprocess_data(tag_df)

show(processed_df)

print(processed_df(“trip_duration”).describe())

Create a brand new desk from the processed DataFrame with the trip_duration column. This step illustrates how one can put together knowledge for potential future evaluation. You’ll be able to explicitly specify the snapshot of the info that the processed knowledge is referring to through the use of a tag, even when the underlying desk has been modified.

# write processed knowledge to new iceberg desk

account_id = sts.get_caller_identity()(“Account”)

new_table_name = “processed_” + table_name

location = f”s3://pyiceberg-lambda-blog-{account_id}-{area}/{database_name}/{new_table_name}”

pa_new_table = processed_df.to_arrow()

schema = pa_new_table.schema

identifier = f”{database_name}.{new_table_name}”

new_table = catalog.create_table(

identifier=identifier,

schema=schema,

location=location

)

# present new desk’s schema

print(new_table.schema())

# insert processed knowledge to new desk

new_table.append(pa_new_table)

Let’s question this new desk comprised of processed knowledge with Athena to display the Iceberg desk’s interoperability.

Question the info from Athena

Within the Athena question editor, you possibly can question the desk pyiceberg_lambda_blog_database.processed_nyc_yellow_table created from the pocket book within the earlier part:

SELECT * FROM “pyiceberg_lambda_blog_database”.”processed_nyc_yellow_table” restrict 10;

By finishing these steps, you’ve constructed a serverless knowledge processing answer utilizing PyIceberg with Lambda and an AWS Glue Iceberg REST endpoint. You’ve labored with PyIceberg to handle and analyze knowledge utilizing Python, together with snapshot administration and desk operations. As well as, you ran the question utilizing one other engine, Athena, which exhibits the compatibility of the Iceberg desk.

Clear up

To wash up the sources used on this put up, full the next steps:

On the Amazon ECR console, navigate to the repository pyiceberg-lambda-repository and delete all photographs contained within the repository.

On the CloudShell, delete working listing pyiceberg_blog.

On the Amazon S3 console, navigate to the S3 bucket pyiceberg-lambda-blog–, which you created utilizing the CloudFormation template, and empty the bucket.

After you affirm the repository and the bucket are empty, delete the CloudFormation stack pyiceberg-lambda-blog-stack.

Delete the Lambda operate pyiceberg-lambda-function that you just created utilizing the Docker picture.

Conclusion

On this put up, we demonstrated how utilizing PyIceberg with the AWS Glue Knowledge Catalog permits environment friendly, light-weight knowledge workflows whereas sustaining sturdy knowledge administration capabilities. We showcased how groups can use Iceberg’s highly effective options with minimal setup and infrastructure dependencies. This method permits organizations to begin working with Iceberg tables rapidly, with out the complexity of establishing and managing distributed computing sources.

That is notably helpful for organizations seeking to undertake Iceberg’s capabilities with a low barrier to entry. The light-weight nature of PyIceberg permits groups to start working with Iceberg tables instantly, utilizing acquainted instruments and requiring minimal further studying. As knowledge wants develop, the identical Iceberg tables will be seamlessly accessed by AWS analytics providers like Athena and AWS Glue, offering a transparent path for future scalability.

To be taught extra about PyIceberg and AWS analytics providers, we encourage you to discover the PyIceberg documentation and What’s Apache Iceberg?

In regards to the authors

Sotaro Hikita is a Specialist Options Architect targeted on analytics with AWS, working with huge knowledge applied sciences and open supply software program. Outdoors of labor, he all the time seeks out good meals and has not too long ago develop into keen about pizza.

Sotaro Hikita is a Specialist Options Architect targeted on analytics with AWS, working with huge knowledge applied sciences and open supply software program. Outdoors of labor, he all the time seeks out good meals and has not too long ago develop into keen about pizza.

Shuhei Fukami is a Specialist Options Architect targeted on Analytics with AWS. He likes cooking in his spare time and has develop into obsessive about making pizza as of late.

Shuhei Fukami is a Specialist Options Architect targeted on Analytics with AWS. He likes cooking in his spare time and has develop into obsessive about making pizza as of late.