Large language models are challenging to adapt to new enterprise tasks. Prompting is error-prone and achieves limited quality gains, while fine-tuning requires large amounts of human-labeled data that is not available for most enterprise tasks. Today, we’re introducing a new model tuning method that requires only unlabeled usage data, letting enterprises improve quality and cost for AI using just the data they already have. Our method, Test-time Adaptive Optimization (TAO), leverages test-time compute (as popularized by o1 and R1) and reinforcement learning (RL) to teach a model to do a task better based on past input examples alone, meaning that it scales with an adjustable tuning compute budget, not human labeling effort. Crucially, although TAO uses test-time compute, it uses it as part of the process to train a model; that model then executes the task directly with low inference costs (i.e., not requiring additional compute at inference time). Surprisingly, even without labeled data, TAO can achieve better model quality than traditional fine-tuning, and it can bring inexpensive open source models like Llama to within the quality of costly proprietary models like GPT-4o and o3-mini.

TAO is part of our research team’s program on Data Intelligence: the problem of making AI excel at specific domains using the data enterprises already have. With TAO, we achieve three exciting results:

On specialized enterprise tasks such as document question answering and SQL generation, TAO outperforms traditional fine-tuning on thousands of labeled examples. It brings efficient open source models like Llama 8B and 70B to a similar quality as expensive models like GPT-4o and o3-mini¹ without the need for labels.

We can also use multi-task TAO to improve an LLM broadly across many tasks. Using no labels, TAO improves the performance of Llama 3.3 70B by 2.4% on a broad enterprise benchmark.

Increasing TAO’s compute budget at tuning time yields better model quality with the same data, while the inference costs of the tuned model stay the same.

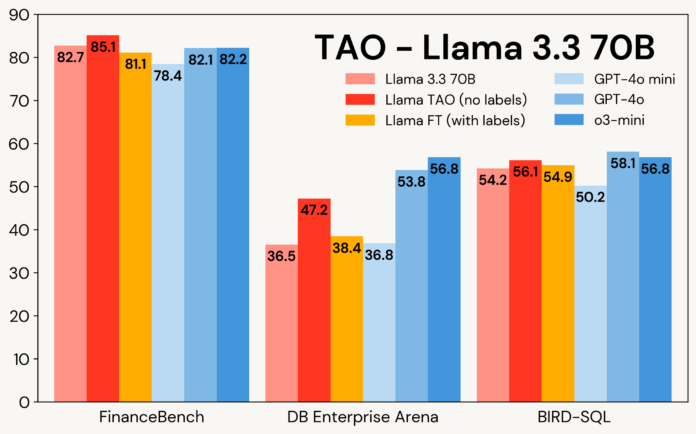

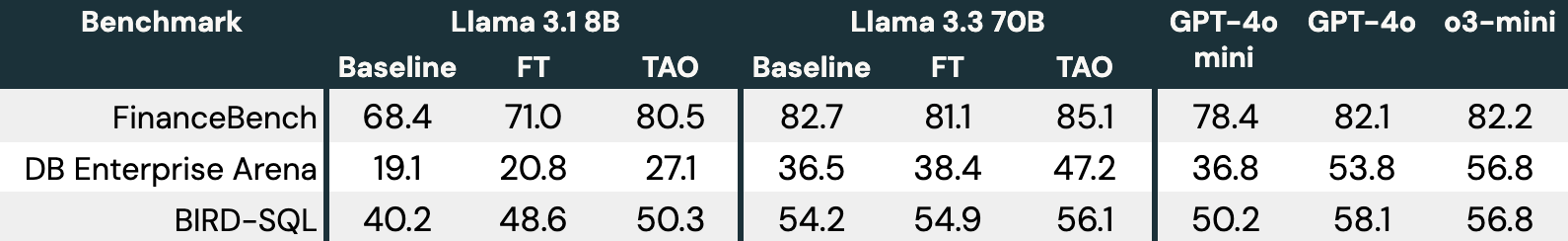

Figure 1 shows how TAO improves Llama models on three enterprise tasks: FinanceBench, DB Enterprise Arena, and BIRD-SQL (using the Databricks SQL dialect)². Despite only having access to LLM inputs, TAO outperforms traditional fine-tuning (FT) with thousands of labeled examples and brings Llama within the same range as expensive proprietary models.

Figure 1: TAO on Llama 3.1 8B and Llama 3.3 70B across three enterprise benchmarks. TAO leads to substantial improvements in quality, outperforming fine-tuning and challenging expensive proprietary LLMs.

TAO is now available in preview to Databricks customers who want to tune Llama, and it will be powering several upcoming products. Fill out this form to express your interest in trying it on your tasks as part of the private preview. In this post, we describe more about how TAO works and our results with it.

How Does TAO Work? Using Test-Time Compute and Reinforcement Learning to Tune Models

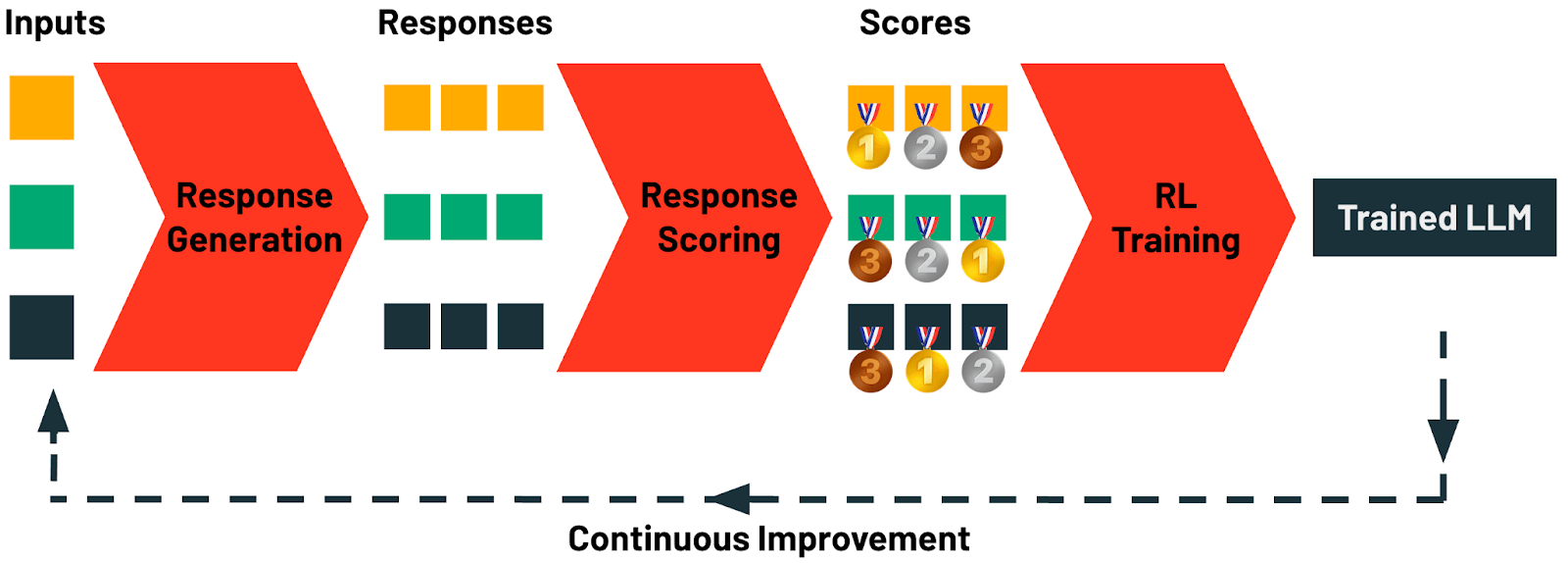

Instead of requiring human-annotated output data, the key idea in TAO is to use test-time compute to have a model explore plausible responses for a task, then use reinforcement learning to update an LLM based on evaluating these responses. This pipeline can be scaled using test-time compute, instead of expensive human effort, to increase quality. Moreover, it can easily be customized using task-specific insights (e.g., custom rules). Surprisingly, applying this scaling with high-quality open source models leads to better results than human labels in many cases.

Figure 2: The TAO pipeline.

Specifically, TAO comprises four stages:

Response Generation: This stage begins with collecting example input prompts or queries for a task. On Databricks, these prompts can be automatically collected from any AI application using our AI Gateway. Each prompt is then used to generate a diverse set of candidate responses. A rich spectrum of generation strategies can be applied here, ranging from simple chain-of-thought prompting to sophisticated reasoning and structured prompting techniques.

Response Scoring: In this stage, generated responses are systematically evaluated. Scoring methodologies include a variety of strategies, such as reward modeling, preference-based scoring, or task-specific verification utilizing LLM judges or custom rules. This stage ensures each generated response is quantitatively assessed for quality and alignment with criteria.

Reinforcement Learning (RL) Training: In this stage, an RL-based approach is applied to update the LLM, guiding the model to produce outputs closely aligned with the high-scoring responses identified in the previous step. Through this adaptive learning process, the model refines its predictions to enhance quality.

Continuous Improvement: The only data TAO needs is example LLM inputs. Users naturally create this data by interacting with an LLM. As soon as your LLM is deployed, you begin generating training data for the next round of TAO. On Databricks, your LLM can get better the more you use it, thanks to TAO.
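To make the first two stages concrete, here is a minimal sketch of how unlabeled prompts could be turned into training signal. It is an illustration under stated assumptions, not our implementation: the `generate` and `score` callables, the function name `build_preference_pairs`, and the choice to emit (preferred, rejected) pairs are all hypothetical, and the post does not specify which RL algorithm consumes the scored responses.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces for illustration only; these are not Databricks APIs.
Generator = Callable[[str, int], List[str]]  # (prompt, n) -> n candidate responses
Scorer = Callable[[str, str], float]         # (prompt, response) -> quality score


def build_preference_pairs(
    prompts: List[str],
    generate: Generator,
    score: Scorer,
    n_candidates: int = 4,
) -> List[Tuple[str, str, str]]:
    """Return (prompt, preferred, rejected) triples built from unlabeled prompts."""
    pairs = []
    for prompt in prompts:
        # Stage 1: response generation (explore several candidates per prompt).
        candidates = generate(prompt, n_candidates)
        # Stage 2: response scoring (rank candidates from best to worst).
        ranked = sorted(candidates, key=lambda r: score(prompt, r), reverse=True)
        # Input for stage 3: keep the best and worst candidates as a training pair.
        if len(ranked) >= 2 and ranked[0] != ranked[-1]:
            pairs.append((prompt, ranked[0], ranked[-1]))
    return pairs


# Toy stand-ins so the sketch runs without a model or a reward model.
def toy_generate(prompt: str, n: int) -> List[str]:
    return [f"{prompt} -> draft {i}" + "!" * i for i in range(n)]


def toy_score(prompt: str, response: str) -> float:
    return float(len(response))  # pretend that longer responses are better


pairs = build_preference_pairs(["q1", "q2"], toy_generate, toy_score, n_candidates=3)
```

In a real setup, `generate` would sample from the LLM being tuned and `score` would call a reward model or verifier; the resulting pairs (or the raw scores) would then be handed to whichever RL-style trainer is in use.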

Crucially, although TAO uses test-time compute, it uses it to train a model that then executes a task directly with low inference costs. This means that the models produced by TAO have the same inference cost and speed as the original model, which is significantly lower than that of test-time compute models like o1, o3 and R1. As our results show, efficient open source models trained with TAO can challenge leading proprietary models in quality.

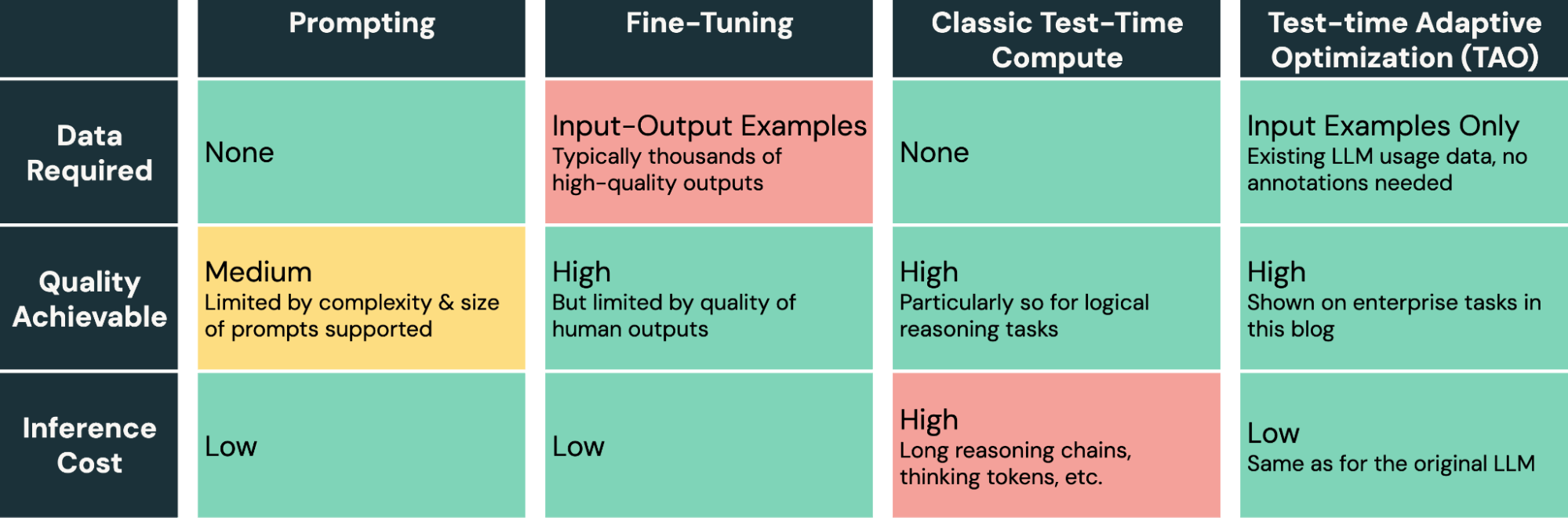

TAO provides a powerful new method in the toolkit for tuning AI models. Unlike prompt engineering, which is slow and error-prone, and fine-tuning, which requires producing expensive and high-quality human labels, TAO lets AI engineers achieve great results by simply providing representative input examples of their task.

Table 1: Comparison of LLM tuning methods.

TAO is a highly flexible method that can be customized if needed, but our default implementation in Databricks works well out-of-the-box on diverse enterprise tasks. At the core of our implementation are new reinforcement learning and reward modeling techniques our team developed that enable TAO to learn by exploration and then tune the underlying model using RL. For example, one of the ingredients powering TAO is a custom reward model we trained for enterprise tasks, DBRM, that can produce accurate scoring signals across a wide range of tasks.

Improving Task Performance with TAO

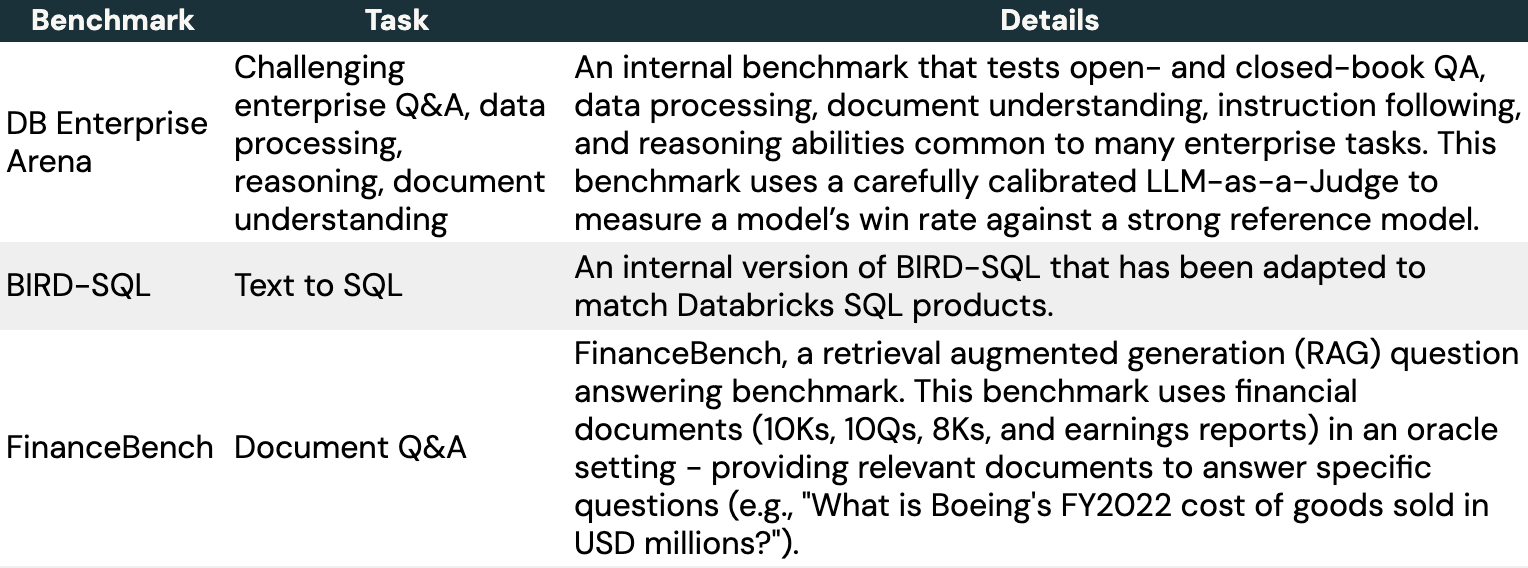

In this section, we dive deeper into how we used TAO to tune LLMs on specialized enterprise tasks. We selected three representative benchmarks, including popular open source benchmarks and internal ones we developed as part of our Domain Intelligence Benchmark Suite (DIBS).

Table 2: Overview of benchmarks used in this blog.

For each task, we evaluated several approaches:

Using an open source Llama model (Llama 3.1-8B or Llama 3.3-70B) out of the box.

Fine-tuning on Llama. To do this, we used or created large, realistic input-output datasets with thousands of examples, which is usually what is required to achieve good performance with fine-tuning. These included:

7200 synthetic questions about SEC documents for FinanceBench.

4800 human-written inputs for DB Enterprise Arena.

8137 examples from the BIRD-SQL training set, modified to match the Databricks SQL dialect.

TAO on Llama, using just the example inputs from our fine-tuning datasets, but not the outputs, and using our DBRM enterprise-focused reward model. DBRM itself is not trained on these benchmarks.

High-quality proprietary LLMs: GPT-4o-mini, GPT-4o and o3-mini.

As shown in Table 3, across all three benchmarks and both Llama models, TAO significantly improves the baseline Llama performance, even beyond that of fine-tuning.

Table 3: TAO on Llama 3.1 8B and Llama 3.3 70B across three enterprise benchmarks.

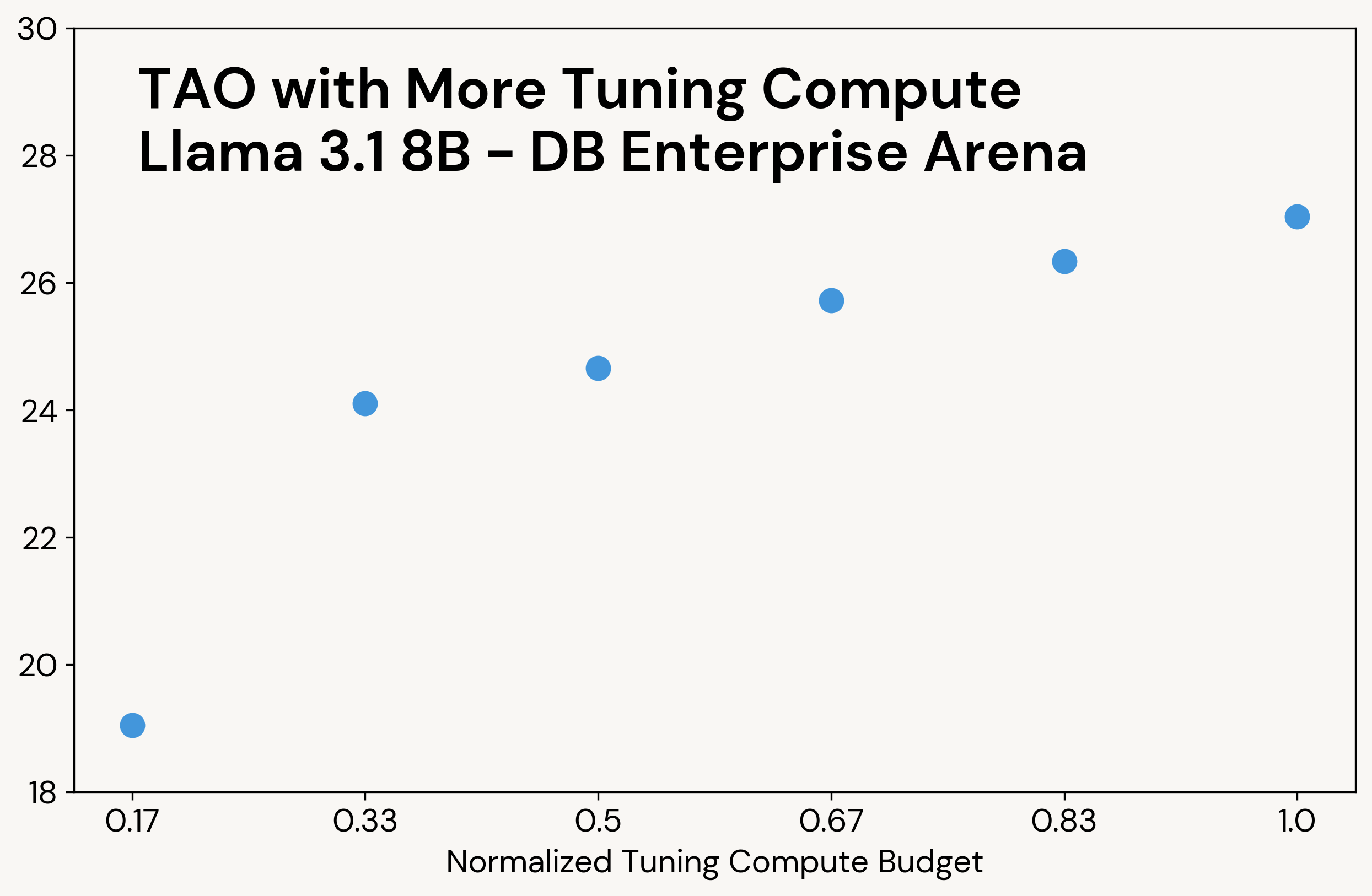

Like classic test-time compute, TAO produces higher-quality results when it is given access to more compute (see Figure 3 for an example). Unlike test-time compute, however, this additional compute is only used during the tuning phase; the final LLM has the same inference cost as the original LLM.

Figure 3: TAO scales with the amount of test-time compute used during the tuning process. The inference cost of the resulting LLM is the same as that of the original LLM.
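One intuition for why more tuning-time compute can help is that exploring more candidates per prompt can only raise the score of the best candidate found. The toy sketch below illustrates that single effect with random numbers standing in for reward scores; `best_of_n` and the simulated scores are illustrative assumptions, and the sketch does not model TAO's actual scaling behavior or reproduce Figure 3.

```python
import random
from typing import List


def best_of_n(scores: List[float], n: int) -> float:
    """Score of the best candidate among the first n sampled candidates."""
    return max(scores[:n])


# Simulated reward scores for 64 candidate responses to one prompt.
rng = random.Random(0)
scores = [rng.random() for _ in range(64)]

# Best score found as the exploration budget doubles.
budgets = (1, 2, 4, 8, 16, 32, 64)
curve = [best_of_n(scores, n) for n in budgets]
```

Because each larger budget includes every candidate seen at the smaller budget, `curve` never decreases. All of this cost is paid during tuning; the tuned model still answers each query with a single forward generation.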

Improving Multitask Intelligence with TAO

So far, we’ve used TAO to improve LLMs on individual narrow tasks, such as SQL generation. However, as agents become more complex, enterprises increasingly need LLMs that can perform more than one task. In this section, we show how TAO can broadly improve model performance across a range of enterprise tasks.

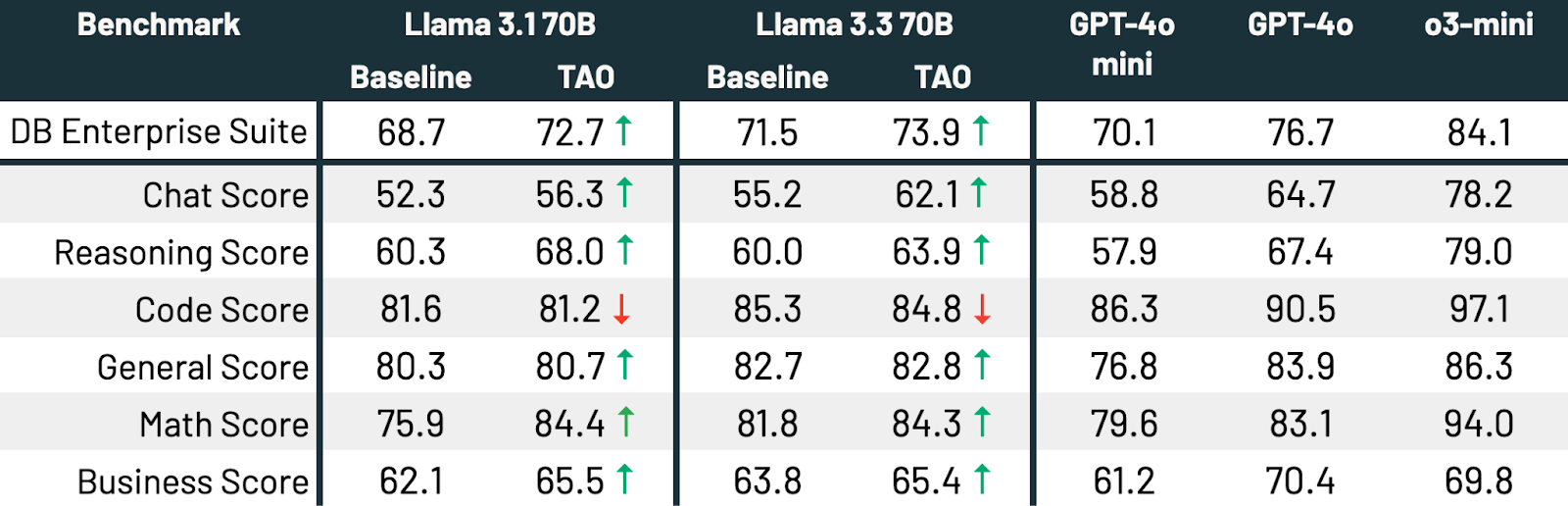

In this experiment, we gathered 175,000 prompts that reflect a diverse set of enterprise tasks, including coding, math, question-answering, document understanding, and chat. We then ran TAO on Llama 3.1 70B and Llama 3.3 70B. Finally, we tested a suite of enterprise-relevant tasks, which includes popular LLM benchmarks (e.g. Arena Hard, LiveBench, GPQA Diamond, MMLU Pro, HumanEval, MATH) and internal benchmarks in multiple areas relevant to enterprises.

TAO meaningfully improves the performance of both models.

Table 4: Improving multitask enterprise intelligence using TAO

Using TAO in Practice

TAO is a powerful tuning method that works surprisingly well on many tasks by leveraging test-time compute. To use it successfully on your own tasks, you will need:

Sufficient example inputs for your task (several thousand), either collected from a deployed AI application (e.g., questions sent to an agent) or generated synthetically.

A sufficiently accurate scoring method: for Databricks customers, one powerful tool here is our custom reward model, DBRM, that powers our implementation of TAO, but you can augment DBRM with custom scoring rules or verifiers if they are applicable to your task.
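As an illustration of augmenting a reward model with custom rules, the sketch below wraps a base scorer so that each failed rule subtracts a fixed penalty. Everything here is hypothetical: `with_rules`, `is_single_select`, and `toy_reward` are invented for the example, DBRM's interface is not shown in this post, and the penalty scheme is just one simple way to combine signals.

```python
from typing import Callable, List

Scorer = Callable[[str, str], float]  # (prompt, response) -> score
Rule = Callable[[str, str], bool]     # (prompt, response) -> passes?


def with_rules(base: Scorer, rules: List[Rule], penalty: float = 1.0) -> Scorer:
    """Wrap a base scorer so that every failed rule lowers the score."""
    def scored(prompt: str, response: str) -> float:
        failures = sum(1 for rule in rules if not rule(prompt, response))
        return base(prompt, response) - penalty * failures
    return scored


def is_single_select(prompt: str, response: str) -> bool:
    """Example rule for a SQL task: exactly one statement, and it is a SELECT."""
    text = response.strip().rstrip(";")
    return text.upper().startswith("SELECT") and ";" not in text


def toy_reward(prompt: str, response: str) -> float:
    return 0.5  # stand-in for a learned reward model's score


score = with_rules(toy_reward, [is_single_select])
ok = score("How many orders?", "SELECT COUNT(*) FROM orders;")
bad = score("How many orders?", "DROP TABLE orders;")
```

Rules like this are most useful when the task has hard constraints (valid syntax, required fields, forbidden operations) that a general-purpose reward model may not penalize reliably.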

One best practice that will enable TAO and other model improvement methods is to create a data flywheel for your AI applications. As soon as you deploy an AI application, you can collect inputs, model outputs, and other events through services like Databricks Inference Tables. You can then use just the inputs to run TAO. The more people use your application, the more data you will have to tune it on, and, thanks to TAO, the better your LLM will get.
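A minimal sketch of the "use just the inputs" step might look like the following. The record layout (dictionaries with `request` and `response` keys), the function name `extract_prompts`, and the length and duplicate filters are assumptions made for illustration; they do not reflect the actual Inference Tables schema.

```python
from typing import Dict, Iterable, List


def extract_prompts(records: Iterable[Dict[str, str]], min_chars: int = 10) -> List[str]:
    """Keep only the inputs from logged traffic, dropping near-empty and repeated prompts."""
    seen = set()
    prompts = []
    for record in records:
        prompt = record.get("request", "").strip()
        # Normalize case and whitespace so trivial variants count as duplicates.
        key = " ".join(prompt.lower().split())
        if len(prompt) < min_chars or key in seen:
            continue
        seen.add(key)
        prompts.append(prompt)  # the logged response is deliberately ignored
    return prompts


# Hypothetical logged records; only the "request" field is used.
logs = [
    {"request": "Total revenue by region for 2023?", "response": "..."},
    {"request": "total revenue by  region for 2023?", "response": "..."},
    {"request": "hi", "response": "Hello!"},
]
prompts = extract_prompts(logs)
```

Here the second record is dropped as a duplicate of the first and the third is dropped as too short, leaving a single prompt for tuning.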

Conclusion and Getting Started on Databricks

In this blog, we presented Test-time Adaptive Optimization (TAO), a new model tuning technique that achieves high-quality results without needing labeled data. We developed TAO to address a key challenge we saw enterprise customers facing: they lacked the labeled data needed by standard fine-tuning. TAO uses test-time compute and reinforcement learning to improve models using data that enterprises already have, such as input examples, making it easy to improve the quality of any deployed AI application and to reduce cost by using smaller models. TAO is a highly flexible method that shows the power of test-time compute for specialized AI development, and we believe it will give developers a powerful and simple new tool to use alongside prompting and fine-tuning.

Databricks customers are already using TAO on Llama in private preview. Fill out this form to express your interest in trying it on your tasks as part of the private preview. TAO is also being incorporated into many of our upcoming AI product updates and launches. Stay tuned!

¹ Authors: Raj Ammanabrolu, Ashutosh Baheti, Jonathan Chang, Xing Chen, Ta-Chung Chi, Brian Chu, Brandon Cui, Erich Elsen, Jonathan Frankle, Ali Ghodsi, Pallavi Koppol, Sean Kulinski, Jonathan Li, Dipendra Misra, Jose Javier Gonzalez Ortiz, Sean Owen, Mihir Patel, Mansheej Paul, Cory Stephenson, Alex Trott, Ziyi Yang, Matei Zaharia, Andy Zhang, Ivan Zhou

² We use o3-mini-medium throughout this blog.

³ This is the BIRD-SQL benchmark modified for Databricks’ SQL dialect and products.