Boston Dynamics published a new video highlighting how its new, electric Atlas humanoid performs tasks in the lab. You can watch the video above.

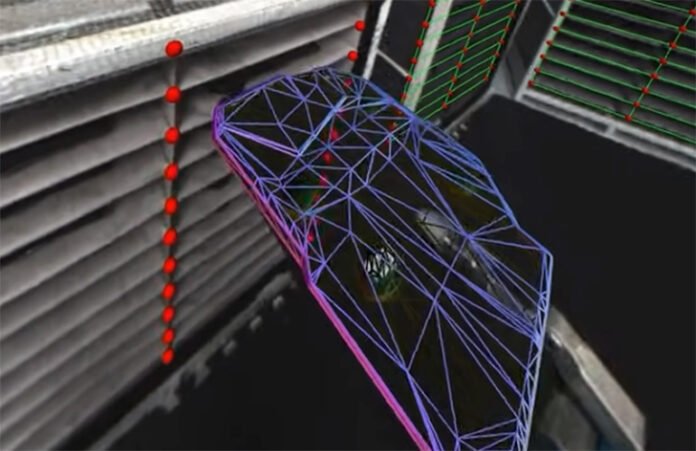

The first thing that strikes me in the video is how Atlas showcases its real-time perception. The video shows how Atlas actively registers its frame of reference for the engine covers and all of the pick and place locations. The robot continually updates its understanding of the world to handle the parts effectively. When it picks something up, it evaluates the topology of the part: how to handle it and where to place it.

Atlas perceives the topology of the part held in its hand as it acquires the part from the shelf. | Credit: Boston Dynamics

Then, there’s this moment at 1:14 in the demo where an engineer drops an engine cover on the floor. Atlas reacts as if it hears the part hit the floor. The humanoid then looks around, locates the part, figures out how to pick it up (again, evaluating its form), and places it with the necessary precision into the engine cover area.

“In this particular clip, the search behavior is manually triggered,” Scott Kuindersma, senior director of robotics research at Boston Dynamics, told The Robot Report. “The robot isn’t using audio cues to detect an engine cover hitting the ground. The robot is autonomously ‘finding’ the object on the floor, so in practice we can run the same vision model passively and trigger the same behavior if an engine cover (or whatever part we’re working with) is detected out of the fixture during normal operation.”
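To make Kuindersma's description concrete, here is a minimal sketch of passive monitoring: each batch of detections is checked against the fixture region, and a recovery behavior is selected if the part shows up outside it. Everything here is an assumption for illustration, including the `Detection` and `Fixture` types, the 2D coordinates, and the behavior names. It is not Boston Dynamics' software.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str  # class name from a vision model (hypothetical)
    x: float    # position in a shared workcell frame (assumed 2D)
    y: float


@dataclass
class Fixture:
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, det: Detection) -> bool:
        """True if the detection lies inside the fixture's footprint."""
        return (self.x_min <= det.x <= self.x_max
                and self.y_min <= det.y <= self.y_max)


def stray_parts(detections, fixture, part_label):
    """Return detections of the part that are outside the fixture."""
    return [d for d in detections
            if d.label == part_label and not fixture.contains(d)]


def next_behavior(detections, fixture, part_label, current_task):
    """Pick the recovery behavior if a stray part is seen, else keep the task."""
    stray = stray_parts(detections, fixture, part_label)
    if stray:
        return "recover_part", stray[0]
    return current_task, None
```

Calling `next_behavior` on every perception update would let the same check run in the background during normal operation, rather than being triggered by hand as it was in the clip.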

The video highlights Atlas’ ability to perceive and adapt to its environment, adjust its model of that world, and still stick to its assigned task. It shows how Atlas can handle chaotic environments, maintain its task objective, and make changes to its mission on the fly.

Atlas can scan the floor and identify a part that doesn’t belong there. | Credit: Boston Dynamics

“When the object is in view of the cameras, Atlas uses an object pose estimation model that uses a render-and-compare approach to estimate pose from monocular images,” Boston Dynamics wrote in a blog about the video. “The model is trained with large-scale synthetic data, and generalizes zero-shot to novel objects given a CAD model. When initialized with a 3D pose prior, the model iteratively refines it to minimize the discrepancy between the rendered CAD model and the captured camera image. Alternatively, the pose estimator can be initialized from a 2D region-of-interest prior (such as an object mask). Atlas then generates a batch of pose hypotheses that are fed to a scoring model, and the best fit hypothesis is subsequently refined. Atlas’s pose estimator works reliably on hundreds of factory assets which we have previously modeled and textured in-house.”
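The flow described in the blog (seed hypotheses from a 2D prior, score them, refine the best one) can be illustrated with a toy example. The sketch below is a deliberately simplified 2D stand-in, not Boston Dynamics' model: the "CAD model" is four hypothetical corner points, "rendering" is a rigid transform, the score is a sum of squared point distances with known correspondences rather than an image comparison, and refinement is a simple coordinate search.

```python
import math

# Toy "CAD model": corner points of a part, centered on its centroid (hypothetical).
MODEL = [(-1.0, -0.5), (1.0, -0.5), (0.75, 0.5), (-0.75, 0.5)]


def render(pose, model=MODEL):
    """'Render' the model under pose (tx, ty, theta) with a rigid transform."""
    tx, ty, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in model]


def discrepancy(pose, observed):
    """Sum of squared distances between rendered and observed points."""
    return sum((rx - ox) ** 2 + (ry - oy) ** 2
               for (rx, ry), (ox, oy) in zip(render(pose), observed))


def hypotheses_from_roi(observed, n_angles=12):
    """Seed poses from a 2D prior: use the observed centroid, sweep rotation."""
    cx = sum(p[0] for p in observed) / len(observed)
    cy = sum(p[1] for p in observed) / len(observed)
    return [(cx, cy, 2 * math.pi * k / n_angles) for k in range(n_angles)]


def refine(pose, observed, step=0.5, iters=60):
    """Coordinate search: try +/- step on each parameter, halve step when stuck."""
    best, best_cost = list(pose), discrepancy(pose, observed)
    for _ in range(iters):
        improved = False
        for i in range(3):
            for delta in (step, -step):
                cand = list(best)
                cand[i] += delta
                cost = discrepancy(cand, observed)
                if cost < best_cost:
                    best, best_cost, improved = cand, cost, True
        if not improved:
            step /= 2
    return tuple(best), best_cost


def estimate_pose(observed):
    """Score a batch of hypotheses, then refine the best-scoring one."""
    seeds = hypotheses_from_roi(observed)
    best_seed = min(seeds, key=lambda p: discrepancy(p, observed))
    return refine(best_seed, observed)


true_pose = (3.0, -1.0, 0.7)
pose, cost = estimate_pose(render(true_pose))
```

A real render-and-compare system works on camera images and full 3D poses, and uses learned models for scoring and refinement. The toy only mirrors the control flow: many coarse guesses, one winner, then iterative refinement to shrink the mismatch.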

I see, therefore I am

Robot vision guidance has been viable since the 1990s. At that time, robots could track items on moving conveyors and adjust local frames of reference for a circuit board assembly based on fiducials. Nothing is surprising or novel about this state of the art for robot vision guidance.
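For readers unfamiliar with fiducial-based frame adjustment, here is a minimal sketch of the idea under simple assumptions: two fiducial marks, a flat board, and a rigid 2D transform (rotation plus translation, no scaling). The function names and coordinates are hypothetical and are not taken from any particular vision system.

```python
import math


def fit_rigid_2d(nominal, measured):
    """Fit the rotation and translation mapping two nominal fiducials
    onto their measured positions. Returns (theta, tx, ty)."""
    (ax1, ay1), (ax2, ay2) = nominal
    (bx1, by1), (bx2, by2) = measured
    # Rotation is the change in direction of the line joining the fiducials.
    theta = (math.atan2(by2 - by1, bx2 - bx1)
             - math.atan2(ay2 - ay1, ax2 - ax1))
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated first fiducial onto its measured position.
    tx = bx1 - (c * ax1 - s * ay1)
    ty = by1 - (s * ax1 + c * ay1)
    return theta, tx, ty


def to_measured_frame(point, transform):
    """Map a nominal board coordinate into the measured (camera/robot) frame."""
    theta, tx, ty = transform
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + tx, s * x + c * y + ty)


# Board drawn with fiducials at (0, 0) and (100, 0); the camera finds them
# rotated a quarter turn and shifted.
transform = fit_rigid_2d([(0.0, 0.0), (100.0, 0.0)],
                         [(10.0, 20.0), (10.0, 120.0)])
pad = to_measured_frame((50.0, 10.0), transform)
```

Once the transform is known, every nominal placement coordinate on the board can be corrected the same way, which is how a fixed robot can compensate for a board that arrives slightly shifted or rotated.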

What’s unique now for humanoids is the mobility of the robot. Any mobile manipulator must continually update its world map. Modern robot vision guidance uses vision language models (VLMs) to understand the world through the eye of the camera.

Those older industrial robots were fixed to the ground and used 2D vision and complex calibration routines to map the field of view of the camera. What we’re seeing demonstrated with Atlas is a mobile, humanoid robot understanding its surroundings and continuing its task even as the environment changes around it. Modern robots have a 3D understanding of the world around them.

Boston Dynamics admits this demo is a mix of AI-based capabilities (like perception) and some procedural programming for managing the mission. The video is a good demonstration of how far the software’s capabilities have progressed. For these systems to work in the real world, they have to handle both subtle changes and macro changes to their operating environments.

Making its way through the world

It’s fascinating to watch Atlas move. The movements, at times, seem a bit odd, but it’s an excellent illustration of how the AI perceives the world and the choices that it makes to move through it. We only get to witness a small slice of this decision-making in the video.

Boston Dynamics has previously published a video showing motion capture (mocap) based behaviors. The mocap video demonstrates the agility of the system and what it can do with smooth input. The jerkiness of this latest video, under AI decision making and control, is a long way from the uncanny valley-inducing mocap demonstrations. We also featured Boston Dynamics CTO Aaron Saunders as a keynote presenter at the 2025 Robotics Summit and Expo in Boston.

There remains a lot of real-time processing for Atlas to comprehend its world. In the video, we see the robot stopping to process the environment before it makes a decision and continues. I’m confident this is only going to get faster over time as the code evolves and the AI models become better in their comprehension and adaptability. I think that’s where the race is now: developing the AI-based software that allows these robots to adapt, understand their environment, and continuously learn from a variety of multi-modal data.

Editor’s Note: This article was updated at 1:46 PM Eastern with a quote from Scott Kuindersma, senior director of robotics research at Boston Dynamics.