
Large language models (LLMs) are increasingly capable of complex reasoning through “inference-time scaling,” a set of techniques that allocate more computational resources during inference to generate answers. However, a new study from Microsoft Research reveals that the effectiveness of these scaling methods isn’t universal. Performance boosts vary significantly across different models, tasks and problem complexities.

The core finding is that simply throwing more compute at a problem during inference doesn’t guarantee better or more efficient results. The findings can help enterprises better understand cost volatility and model reliability as they look to integrate advanced AI reasoning into their applications.

Putting scaling methods to the test

The Microsoft Research team conducted an extensive empirical analysis across nine state-of-the-art foundation models. These included “conventional” models like GPT-4o, Claude 3.5 Sonnet, Gemini 2.0 Pro and Llama 3.1 405B, as well as models specifically fine-tuned for enhanced reasoning through inference-time scaling: OpenAI’s o1 and o3-mini, Anthropic’s Claude 3.7 Sonnet, Google’s Gemini 2 Flash Thinking, and DeepSeek R1.

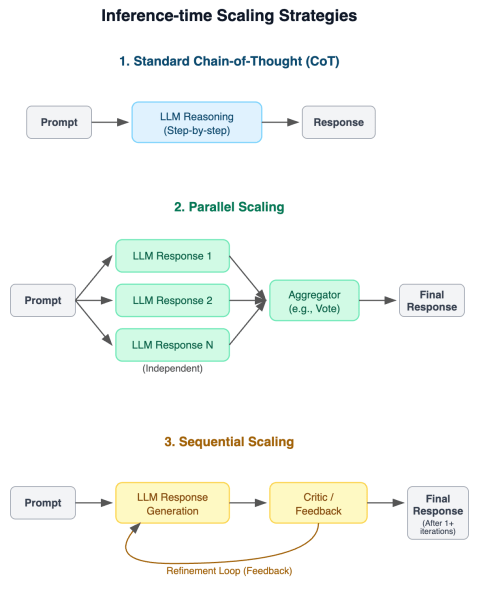

They evaluated these models using three distinct inference-time scaling approaches:

Standard Chain-of-Thought (CoT): The basic method, where the model is prompted to answer step by step.

Parallel Scaling: The model generates multiple independent answers for the same question and uses an aggregator (like majority vote or selecting the best-scoring answer) to arrive at a final result.

Sequential Scaling: The model iteratively generates an answer and uses feedback from a critic (potentially the model itself) to refine the answer in subsequent attempts.
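To make the difference between the two scaling strategies concrete, here is a minimal sketch of a majority-vote aggregator (parallel) and a critic-driven refinement loop (sequential). The `Model` and `Critic` interfaces, the prompt format for feedback, and the toy stand-ins are all hypothetical assumptions for illustration; they are not the study's harness or any provider's API.

```python
import itertools
from collections import Counter
from typing import Callable, Optional

# Hypothetical interfaces (not from the study): a model maps a prompt to an
# answer string; a critic returns None to accept an answer, or feedback text.
Model = Callable[[str], str]
Critic = Callable[[str, str], Optional[str]]


def parallel_majority_vote(model: Model, prompt: str, n: int) -> str:
    """Parallel scaling: sample n independent answers and return the most common."""
    answers = [model(prompt) for _ in range(n)]
    # most_common(1) returns [(answer, count)]; ties go to the first answer seen.
    return Counter(answers).most_common(1)[0][0]


def sequential_refine(model: Model, critic: Critic, prompt: str, max_rounds: int) -> str:
    """Sequential scaling: revise the answer with critic feedback until accepted."""
    answer = model(prompt)
    for _ in range(max_rounds):
        feedback = critic(prompt, answer)
        if feedback is None:  # the critic accepts the current answer
            break
        # Feed the previous attempt and the critique back to the model.
        answer = model(f"{prompt}\nPrevious answer: {answer}\nFeedback: {feedback}")
    return answer


# Toy stand-ins for a real LLM and critic, for illustration only.
_samples = itertools.cycle(["42", "41", "42"])


def toy_sampler(prompt: str) -> str:
    return next(_samples)


def toy_reviser(prompt: str) -> str:
    return "42" if "Feedback:" in prompt else "41"


def toy_critic(prompt: str, answer: str) -> Optional[str]:
    return None if answer == "42" else "Recheck the multiplication."


print(parallel_majority_vote(toy_sampler, "What is 6 * 7?", n=3))  # 42
print(sequential_refine(toy_reviser, toy_critic, "What is 6 * 7?", max_rounds=2))  # 42
```

Parallel scaling spends compute on independent samples that can run concurrently, while sequential scaling spends it on dependent rounds, so its latency grows with the number of revisions.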

These approaches were tested on eight challenging benchmark datasets covering a wide range of tasks that benefit from step-by-step problem-solving: math and STEM reasoning (AIME, Omni-MATH, GPQA), calendar planning (BA-Calendar), NP-hard problems (3SAT, TSP), navigation (Maze) and spatial reasoning (SpatialMap).

Several benchmarks included problems with varying difficulty levels, allowing for a more nuanced understanding of how scaling behaves as problems become harder.

“The availability of difficulty tags for Omni-MATH, TSP, 3SAT, and BA-Calendar enables us to analyze how accuracy and token usage scale with difficulty in inference-time scaling, which is a perspective that is still underexplored,” the researchers wrote in the paper detailing their findings.

The researchers evaluated the Pareto frontier of LLM reasoning by analyzing both accuracy and computational cost (i.e., the number of tokens generated). This helps identify how efficiently models achieve their results.
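A run sits on the accuracy-versus-tokens Pareto frontier when no other run is at least as accurate while using fewer or equally many tokens. The sketch below computes that frontier with a sort-and-sweep; the `Run` record and all numbers are made up for illustration and are not results from the study.

```python
from typing import NamedTuple


class Run(NamedTuple):
    name: str
    accuracy: float  # fraction of benchmark problems solved
    tokens: float    # average tokens generated per problem (the cost axis)


def pareto_frontier(runs: list[Run]) -> list[Run]:
    """Return the runs not dominated on (higher accuracy, fewer tokens)."""
    # Cheapest first; among equal cost, the most accurate run comes first.
    ordered = sorted(runs, key=lambda r: (r.tokens, -r.accuracy))
    frontier: list[Run] = []
    best_accuracy = float("-inf")
    for run in ordered:
        # A run survives only if it is more accurate than every cheaper run.
        if run.accuracy > best_accuracy:
            frontier.append(run)
            best_accuracy = run.accuracy
    return frontier


# Made-up numbers for illustration; they are not results from the study.
runs = [
    Run("model-a", 0.60, 2_000),
    Run("model-b", 0.55, 3_000),
    Run("model-c", 0.75, 8_000),
    Run("model-d", 0.74, 12_000),
]
print([r.name for r in pareto_frontier(runs)])  # ['model-a', 'model-c']
```

Sorting once makes the sweep O(n log n), and every run left off the list is beaten by some cheaper-or-equal run on accuracy.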

Inference-time scaling Pareto frontier. Credit: arXiv


They also introduced the “conventional-to-reasoning gap” measure, which compares the best possible performance of a conventional model (using an ideal “best-of-N” selection) against the average performance of a reasoning model, estimating the potential gains achievable through better training or verification techniques.
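One plausible reading of that measure, sketched below under the assumption that it is a simple difference of two accuracies: the conventional model's oracle best-of-N accuracy (a problem counts as solved if any of its N attempts is correct) minus the reasoning model's mean per-attempt accuracy. The paper may aggregate differently, for example per difficulty level, and the data here is made up.

```python
# Each inner list holds N repeated attempts at one problem (True = correct).
Results = list[list[bool]]


def best_of_n_accuracy(results: Results) -> float:
    """Oracle best-of-N: a problem counts as solved if any of its N attempts is correct."""
    return sum(any(attempts) for attempts in results) / len(results)


def average_accuracy(results: Results) -> float:
    """Mean single-attempt accuracy, averaged over attempts and then problems."""
    return sum(sum(a) / len(a) for a in results) / len(results)


def conventional_to_reasoning_gap(conventional: Results, reasoning: Results) -> float:
    # Positive values mean an ideal selector over the conventional model's samples
    # would beat the reasoning model's average performance.
    return best_of_n_accuracy(conventional) - average_accuracy(reasoning)


# Made-up data: 3 problems, 3 attempts each.
conventional = [[True, False, False], [False, False, True], [False, False, False]]
reasoning = [[True, True, False], [True, False, False], [False, False, False]]
print(round(conventional_to_reasoning_gap(conventional, reasoning), 3))  # 0.333
```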

More compute isn’t always the answer

The study provided several crucial insights that challenge common assumptions about inference-time scaling:

Benefits vary significantly: While models tuned for reasoning generally outperform conventional ones on these tasks, the degree of improvement varies greatly depending on the specific domain and task. Gains often diminish as problem complexity increases. For instance, performance improvements seen on math problems didn’t always translate equally to scientific reasoning or planning tasks.

Token inefficiency is rife: The researchers observed high variability in token consumption, even between models achieving similar accuracy. For example, on the AIME 2025 math benchmark, DeepSeek-R1 used over five times more tokens than Claude 3.7 Sonnet for roughly similar average accuracy.

More tokens don’t always lead to higher accuracy: Contrary to the intuition that longer reasoning chains mean better reasoning, the study found that this isn’t always the case. “Surprisingly, we also observe that longer generations relative to the same model can sometimes be an indicator of models struggling, rather than improved reflection,” the paper states. “Similarly, when comparing different reasoning models, higher token usage is not always associated with better accuracy. These findings motivate the need for more purposeful and cost-effective scaling approaches.”

Cost nondeterminism: Perhaps most concerning for enterprise users, repeated queries to the same model for the same problem can result in highly variable token usage. This means the cost of running a query can fluctuate significantly, even when the model consistently provides the correct answer.
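A simple way to quantify this for one prompt is to rerun it several times and summarize the spread of token counts and the resulting cost. The sketch below assumes cost is linear in generated tokens at a single price; the $10-per-million rate and the token counts are made-up placeholders, not real pricing or study data.

```python
from statistics import mean, stdev


def cost_profile(token_counts: list[int], usd_per_million_tokens: float) -> dict[str, float]:
    """Summarize token and cost spread for repeated runs of one prompt."""
    costs = [t * usd_per_million_tokens / 1_000_000 for t in token_counts]
    avg, sd = mean(token_counts), stdev(token_counts)  # stdev needs >= 2 runs
    return {
        "mean_tokens": avg,
        "stdev_tokens": sd,
        "coeff_of_variation": sd / avg,  # spread relative to the mean
        "min_cost_usd": min(costs),
        "max_cost_usd": max(costs),
    }


# Hypothetical: five correct runs of one prompt, priced at a made-up $10 per 1M tokens.
profile = cost_profile([4_000, 9_000, 5_000, 16_000, 6_000], usd_per_million_tokens=10.0)
for key, value in profile.items():
    print(f"{key}: {value:.3f}")
```

In this made-up example the most expensive run costs four times the cheapest, even though every run was correct, which is the budgeting problem the study describes.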

Variance in response length (spikes show smaller variance). Credit: arXiv


The potential in verification mechanisms: Scaling performance consistently improved across all models and benchmarks when simulated with a “perfect verifier” (using the best-of-N results).

Conventional models sometimes match reasoning models: By significantly increasing inference calls (up to 50x more in some experiments), conventional models like GPT-4o could sometimes approach the performance levels of dedicated reasoning models, particularly on less complex tasks. However, these gains diminished rapidly in highly complex settings, indicating that brute-force scaling has its limits.

On some tasks, the accuracy of GPT-4o continues to improve with parallel and sequential scaling. Credit: arXiv


Implications for the enterprise

These findings carry significant weight for developers and enterprise adopters of LLMs. The issue of “cost nondeterminism” is particularly stark and makes budgeting difficult. As the researchers point out, “Ideally, developers and users would prefer models for which the standard deviation on token usage per instance is low for cost predictability.”

“The profiling we do in (the study) could be useful for developers as a tool to pick which models are less volatile for the same prompt or for different prompts,” Besmira Nushi, senior principal research manager at Microsoft Research, told VentureBeat. “Ideally, one would want to pick a model that has low standard deviation for correct inputs.”
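Following Nushi's suggestion, a team could profile candidate models on its own prompts and prefer the one whose token usage varies least on correct responses. The sketch assumes a hypothetical log of `(model, prompt_id, tokens, is_correct)` records and scores each model by the mean, across prompts, of the per-prompt standard deviation over correct runs; other aggregations are equally reasonable.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical log record: (model_name, prompt_id, tokens_used, is_correct).
Record = tuple[str, str, int, bool]


def token_volatility(records: list[Record]) -> dict[str, float]:
    """Mean (across prompts) of the per-prompt token stdev, using correct runs only."""
    by_model_prompt: dict[tuple[str, str], list[int]] = defaultdict(list)
    for model, prompt_id, tokens, correct in records:
        if correct:
            by_model_prompt[(model, prompt_id)].append(tokens)
    per_model: dict[str, list[float]] = defaultdict(list)
    for (model, _), counts in by_model_prompt.items():
        if len(counts) >= 2:  # stdev needs at least two correct runs
            per_model[model].append(stdev(counts))
    return {model: mean(sds) for model, sds in per_model.items()}


def least_volatile_model(records: list[Record]) -> str:
    scores = token_volatility(records)
    # Raises ValueError if no model has two or more correct runs on any prompt.
    return min(scores, key=scores.get)


records = [
    ("model-a", "p1", 1_000, True), ("model-a", "p1", 1_100, True), ("model-a", "p1", 900, True),
    ("model-b", "p1", 800, True), ("model-b", "p1", 3_000, True), ("model-b", "p1", 1_500, True),
    ("model-b", "p1", 9_000, False),  # incorrect run: excluded from the volatility score
]
print(least_volatile_model(records))  # model-a
```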

Models that peak blue to the left consistently generate the same number of tokens on the given task. Credit: arXiv


The study also provides useful insights into the correlation between a model’s accuracy and response length. For example, one chart in the study shows that math queries with responses longer than roughly 11,000 tokens have a very slim chance of being correct, suggesting those generations should either be stopped at that point or restarted with some sequential feedback. However, Nushi points out that the models for which these post hoc mitigations work are also the ones with a cleaner separation between correct and incorrect samples.
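The stop-or-restart mitigation can be sketched as a token-budget guard around a streaming generator. Everything here is a hypothetical assumption: the `Stream` interface, the wording of the restart feedback, and the default budget, which borrows the roughly 11,000-token figure from the study's math chart but would need tuning per model and task.

```python
import itertools
from typing import Callable, Iterator, Optional

# Hypothetical streaming interface: stream(prompt) yields generated tokens one by one.
Stream = Callable[[str], Iterator[str]]

# ~11,000 tokens is where the study's math chart shows correctness becoming unlikely;
# treat it as a per-task, per-model tuning knob rather than a universal constant.
TOKEN_BUDGET = 11_000


def generate_with_budget(stream: Stream, prompt: str, budget: int = TOKEN_BUDGET,
                         max_restarts: int = 2) -> Optional[str]:
    """Stop generations that run past the budget and retry with feedback."""
    current_prompt = prompt
    for _ in range(max_restarts + 1):
        tokens: list[str] = []
        for token in stream(current_prompt):
            tokens.append(token)
            if len(tokens) > budget:
                break  # over budget: abandon this attempt early
        else:
            return "".join(tokens)  # stream finished within budget
        # Restart with sequential feedback about the abandoned attempt.
        current_prompt = (f"{prompt}\nYour previous attempt exceeded {budget} tokens "
                          "without finishing. Please reason more concisely.")
    return None  # every attempt ran over budget


def toy_stream(prompt: str) -> Iterator[str]:
    # Rambles forever on the first try; answers briefly once given feedback.
    if "concisely" in prompt:
        return iter(["The ", "answer ", "is ", "42."])
    return itertools.repeat("hmm ")


print(generate_with_budget(toy_stream, "Solve the problem.", budget=50))  # The answer is 42.
```

Stopping early caps the worst-case cost of a single attempt, so the spend per query is bounded by roughly `budget * (max_restarts + 1)` generated tokens.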

“Ultimately, it is also the responsibility of model builders to think about reducing accuracy and cost non-determinism, and we expect a lot of this to happen as the methods get more mature,” Nushi said. “Alongside cost nondeterminism, accuracy nondeterminism also applies.”

Another important finding is the consistent performance boost from perfect verifiers, which highlights a critical area for future work: building robust and broadly applicable verification mechanisms.

“The availability of stronger verifiers can have different types of impact,” Nushi said, such as improving foundational training methods for reasoning. “If used efficiently, these can also shorten the reasoning traces.”

Strong verifiers can also become a central part of enterprise agentic AI solutions. Many enterprise stakeholders already have such verifiers in place, such as SAT solvers and logistic validity checkers, which may need to be repurposed for more agentic solutions.
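For problems like 3SAT, one of the study's benchmarks, checking a proposed answer is far cheaper than finding one, so an exact checker can play the role of the verifier in best-of-N selection. The sketch below assumes CNF formulas in DIMACS-style integer literals and candidate assignments already parsed from sampled model answers; the parsing step and the example formula are hypothetical.

```python
from typing import Optional

# CNF in DIMACS-style integer literals: 3 means "x3 is true", -3 means "x3 is false".
Clause = list[int]
Assignment = dict[int, bool]


def satisfies(cnf: list[Clause], assignment: Assignment) -> bool:
    """Cheap, exact verifier: every clause needs at least one true literal.

    Variables missing from the assignment never satisfy a literal.
    """
    return all(
        any(abs(lit) in assignment and assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in cnf
    )


def first_verified(cnf: list[Clause], candidates: list[Assignment]) -> Optional[Assignment]:
    """Best-of-N with a real verifier: return the first candidate that checks out."""
    return next((cand for cand in candidates if satisfies(cnf, cand)), None)


# (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
cnf = [[1, -2], [2, 3], [-1, -3]]
# Hypothetical candidate assignments, e.g. parsed from N sampled LLM answers.
candidates = [
    {1: True, 2: True, 3: True},
    {1: True, 2: False, 3: False},
    {1: False, 2: False, 3: True},
]
print(first_verified(cnf, candidates))  # {1: False, 2: False, 3: True}
```

Checking runs in time linear in the formula size, which is why existing solvers and checkers are attractive as verifiers around an LLM front end.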

“The questions for the future are how such existing techniques can be combined with AI-driven interfaces and what is the language that connects the two,” Nushi said. “The necessity of connecting the two comes from the fact that users will not always formulate their queries in a formal way. They will want to use a natural language interface and expect the solutions in a similar format or in a final action (e.g. propose a meeting invite).”
