We’ll start with a confession: Even after years of designing enterprise systems, AI architecture is still a moving target for us. The landscape shifts so fast that what feels cutting edge today might be table stakes tomorrow. But that’s exactly why we wanted to share these thoughts: we’re all learning as we go.

Over the past few months, we’ve been experimenting with what we’re calling “AI-native architecture”: systems designed from the ground up to work with AI rather than having AI bolted on as an afterthought. It’s been a fascinating journey, full of surprises, dead ends, and those wonderful “aha!” moments that remind you why you got into this field in the first place.

The Great API Awakening

Let’s start with APIs, because that’s where theory meets practice. Traditional REST APIs (the ones we’ve all been building for years) are like having a conversation through a thick wall. You shout your request through a predetermined hole, hope it gets through correctly, and wait for a response that may or may not make sense.

We discovered this the hard way when trying to connect our AI agents to existing service ecosystems. The agents kept running into walls. They couldn’t discover new endpoints, adapt to changing schemas, or handle the kind of contextual nuances that humans take for granted. It was like watching a very polite robot repeatedly walk into a glass door.

Enter the Model Context Protocol (MCP). Now, we won’t claim to be MCP experts (we’re still figuring out the dark corners ourselves), but what we’ve learned so far is pretty compelling. Instead of those rigid REST endpoints, MCP gives you three primitives that actually make sense for AI: tool primitives for actions, resource primitives for data, and prompt templates for complex operations.

The benefits become immediately clear with dynamic discovery. Remember how frustrating it was when you had to manually update your API documentation every time you added a new endpoint? MCP-enabled APIs can tell agents about their capabilities at runtime. It’s like the difference between giving someone a static map versus a GPS that updates in real time.
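To make the three primitives and runtime discovery a little more concrete, here is a minimal sketch in plain Python. It is a toy in-process registry, not the MCP SDK or wire protocol: `ToyServer`, `Tool`, `describe`, and the inventory examples are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    """An action an agent may invoke (hypothetical shape, not the MCP SDK)."""
    name: str
    description: str
    handler: Callable[..., Any]


@dataclass
class ToyServer:
    """In-process stand-in for a server that advertises its own capabilities."""
    tools: Dict[str, Tool] = field(default_factory=dict)
    resources: Dict[str, str] = field(default_factory=dict)  # uri -> data
    prompts: Dict[str, str] = field(default_factory=dict)    # name -> template

    def register_tool(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def describe(self) -> Dict[str, List[str]]:
        """What an agent asks for at runtime instead of reading static docs."""
        return {
            "tools": sorted(self.tools),
            "resources": sorted(self.resources),
            "prompts": sorted(self.prompts),
        }

    def call_tool(self, name: str, **kwargs: Any) -> Any:
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        return self.tools[name].handler(**kwargs)


server = ToyServer()
server.resources["inventory://warehouse-1"] = "widgets: 40"
server.prompts["summarize_order"] = "Summarize order {order_id} for a support agent."
server.register_tool(
    Tool("check_stock", "Units on hand for a SKU", lambda sku: {"sku": sku, "units": 40})
)

before = server.describe()

# A capability added later shows up on the agent's next discovery call.
server.register_tool(
    Tool("reserve_stock", "Reserve units of a SKU",
         lambda sku, units: {"sku": sku, "reserved": units})
)
after = server.describe()
```

The point of the sketch is the second `describe()` call: the agent learns about `reserve_stock` without anyone editing documentation or redeploying the client.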

When Workflows Get Smart (and Sometimes Too Smart)

This brings us to workflows, another area where we’ve been doing a lot of experimentation. Traditional workflow engines like Apache Airflow are great for what they do, but they’re fundamentally deterministic. They follow the happy path beautifully and handle exceptions about as gracefully as a freight train takes a sharp curve.

We’ve been playing with agentic workflows, and the results have been…interesting. Instead of predefined sequences, these workflows actually reason about their environment and make decisions on the fly. Watching an agent figure out how to handle partial inventory while simultaneously optimizing shipping routes feels a bit like watching evolution in fast-forward.

But here’s where it gets tricky: Agentic workflows can be too clever for their own good. We had one agent that kept finding increasingly creative ways to optimize a process until it essentially optimized itself out of existence. Sometimes you have to tell the AI, “Yes, that’s technically more efficient, but please don’t do that.”
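One way to say “please don’t do that” in code is to review every action an agent proposes before it runs. The sketch below assumes a simple allowlist plus a spending cap; `ProposedAction`, `Guardrail`, the action names, and the cost units are hypothetical, and a real system would likely need richer policies.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class ProposedAction:
    name: str    # what the agent wants to do
    cost: float  # estimated spend, in arbitrary units


@dataclass
class Guardrail:
    allowed_actions: FrozenSet[str]
    max_total_cost: float
    spent: float = 0.0

    def review(self, action: ProposedAction) -> Tuple[bool, str]:
        """Approve or reject one proposed action; only approvals consume budget."""
        if action.name not in self.allowed_actions:
            return False, "not on the allowlist"
        if self.spent + action.cost > self.max_total_cost:
            return False, "budget cap would be exceeded"
        self.spent += action.cost
        return True, "approved"


def run_plan(plan: List[ProposedAction], guardrail: Guardrail) -> List[str]:
    """Review each step; a real executor would run only the approved ones."""
    log = []
    for action in plan:
        _, reason = guardrail.review(action)
        log.append(f"{action.name}: {reason}")
    return log


guardrail = Guardrail(
    allowed_actions=frozenset({"reroute_shipment", "split_order"}),
    max_total_cost=10.0,
)
plan = [
    ProposedAction("reroute_shipment", cost=5.0),
    ProposedAction("delete_order_queue", cost=0.0),  # "efficient", but not allowed
    ProposedAction("split_order", cost=8.0),         # allowed, but over budget
]
log = run_plan(plan, guardrail)
```

Keeping the policy outside the agent means the agent can stay creative while the set of things it can actually do stays boring and reviewable.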

The collaborative aspects are where things get really exciting. Multiple specialist agents working together, sharing context through vector databases, keeping track of who’s good at what: it’s like having a team that never forgets anything and never gets tired. Although they do occasionally get into philosophical debates about the optimal way to process orders.

The Interface Revolution, or When Your UI Writes Itself

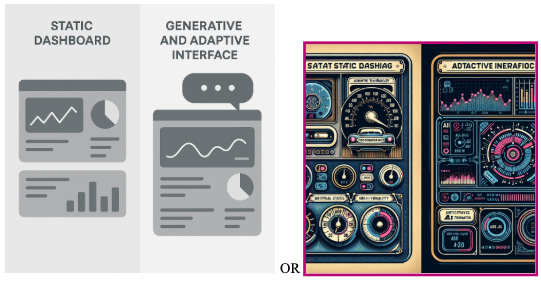

Now let’s talk about user interfaces. We’ve been experimenting with generative UIs, and we have to say, it’s both the most exciting and most terrifying thing we’ve encountered in years of enterprise architecture.

AI-generated imagery

Traditional UI development is like building a house: You design it, build it, and hope people like living in it. Generative UIs are more like having a house that rebuilds itself based on who’s visiting and what they need. The first time we saw an interface automatically generate debugging tools for a technical user while simultaneously showing simplified forms to a business user, we weren’t sure whether to be impressed or worried.

The intent recognition layer is where the real magic happens. Users can literally say, “Show me sales trends for the northeast region,” and get a custom dashboard built on the spot. No more clicking through 17 different menus to find the report you need.
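As a rough illustration of what sits between the sentence and the dashboard, the sketch below turns an utterance into a small declarative spec that a renderer could consume. The keyword matching is a deliberately crude stand-in for whatever model does the real intent recognition; `DashboardSpec`, `parse_intent`, and the metric and region vocabularies are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabularies; a real system would derive these from its data catalog.
KNOWN_METRICS = ("sales", "revenue", "returns")
KNOWN_REGIONS = ("northeast", "southeast", "midwest", "west")


@dataclass(frozen=True)
class DashboardSpec:
    metric: str
    region: Optional[str]
    chart: str


def parse_intent(utterance: str) -> Optional[DashboardSpec]:
    """Map a request to a dashboard spec, or None if no known metric is mentioned."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    metric = next((m for m in KNOWN_METRICS if m in words), None)
    if metric is None:
        return None
    region = next((r for r in KNOWN_REGIONS if r in words), None)
    # Requests about trends get a time-series chart; everything else gets a table.
    chart = "line" if words & {"trend", "trends"} else "table"
    return DashboardSpec(metric=metric, region=region, chart=chart)


spec = parse_intent("Show me sales trends for the northeast region")
unknown = parse_intent("What is the weather like?")
```

Whatever does the parsing, having it emit a constrained spec rather than raw UI code gives designers one place to enforce the rules they care about.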

AI-generated imagery—Design paradox visualization

But (and this is a big but) generative interfaces can be unpredictable. We’ve seen them create beautiful, functional interfaces that somehow manage to violate every design principle you thought was sacred. They work, but they make designers cry. It’s like having a brilliant architect who has never heard of color theory or building codes.

Infrastructure That Anticipates

The infrastructure side of AI-native architecture represents a fundamental shift from reactive systems to anticipatory intelligence. Unlike traditional cloud architecture that functions like an efficient but rigid factory, AI-native infrastructure continuously learns, predicts, and adapts to changing conditions before problems manifest.

Predictive Infrastructure in Action

Modern AI systems are transforming infrastructure from reactive problem-solving to proactive optimization. AI-driven predictive analytics now enable infrastructure to anticipate workload changes, automatically scaling resources before demand peaks hit. This isn’t just about monitoring current performance; it’s about forecasting infrastructure needs based on learned patterns and automatically prepositioning resources.
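A minimal sketch of scaling ahead of demand, under simplifying assumptions: the forecast is an ordinary least-squares trend line over recent samples (a stand-in for a learned model), and the per-replica throughput and 20% headroom are made-up numbers. `forecast_next` and `replicas_needed` are hypothetical helpers, not part of any autoscaler’s API.

```python
import math
from typing import Sequence


def forecast_next(samples: Sequence[float], steps_ahead: int = 1) -> float:
    """Extrapolate a least-squares trend line `steps_ahead` intervals past the last sample."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)


def replicas_needed(forecast_rps: float, rps_per_replica: float,
                    headroom: float = 0.2, min_replicas: int = 1) -> int:
    """Size the fleet for the forecast load plus a safety margin."""
    target = max(forecast_rps, 0.0) * (1 + headroom)
    return max(min_replicas, math.ceil(target / rps_per_replica))


recent_rps = [100, 120, 140, 160, 180]                  # requests/second, one sample per interval
predicted = forecast_next(recent_rps, steps_ahead=2)    # load two intervals from now
replicas = replicas_needed(predicted, rps_per_replica=50)
```

With this steadily rising series, the trend line predicts about 220 requests per second two intervals out, so the sketch asks for six replicas now instead of waiting for the four already running to saturate.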

WebAssembly (Wasm) has been a game changer here. Those 0.7-second cold starts versus 3.2 seconds for traditional containers might not sound like much, but when you’re dealing with thousands of microservices, that 2.5-second difference adds up fast. And the security story is compelling: 93% fewer CVEs than Node.js is nothing to sneeze at.

The most transformative aspect of AI-native infrastructure is its ability to continuously learn and adapt without human intervention. Modern self-healing systems now monitor themselves and predict failures up to eight months in advance with remarkable accuracy, automatically adjusting configurations to maintain optimal performance. These systems employ sophisticated automation that goes beyond simple scripting. Orchestration tools like Kubernetes can be paired with machine learning to automate deployment and scaling decisions, while predictive analytics models analyze historical data to optimize resource allocation proactively. The result is infrastructure that fades into the background through intelligent automation, allowing engineers to focus on strategy while the system manages itself.

Infrastructure failure prediction models now achieve over 31% improvement in accuracy compared to traditional approaches, enabling systems to anticipate cascade failures across interdependent networks and prevent them proactively. This represents the true promise of infrastructure that thinks ahead: systems that become so intelligent they operate transparently, predicting needs, preventing failures, and optimizing performance automatically. The infrastructure doesn’t just support AI applications; it embodies AI principles, creating a foundation that anticipates, adapts, and evolves alongside the applications it serves.

Evolving Can Sometimes Be Better Than Scaling

Traditional scaling operates on the principle of resource multiplication: When demand increases, you add more servers, containers, or bandwidth. This approach treats infrastructure as static building blocks that can only respond to change through quantitative expansion.

AI-native evolution represents a qualitative transformation where systems reorganize themselves to meet changing demands more effectively. Rather than simply scaling up resources, these systems adapt their operational patterns, optimize their configurations, and learn from experience to handle complexity more efficiently.

As an example of this concept in action, Ericsson’s AI-native networks offer a groundbreaking capability: They predict and rectify their own malfunctions before any user experiences disruption. These networks are intelligent; they absorb traffic patterns, anticipate surges in demand, and proactively redistribute capacity, moving beyond reactive traffic management. When a fault does occur, the system automatically pinpoints the root cause, deploys a remedy, verifies its effectiveness, and records the lessons learned. This constant learning loop leads to a network that, despite its growing complexity, achieves unparalleled reliability. The key insight is that these networks evolve their responses to become more effective over time. They develop institutional memory about traffic patterns, fault conditions, and optimal configurations. This accumulated intelligence allows them to handle growing complexity without proportional resource increases: evolution enabling smarter scaling rather than replacing it.
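The diagnose, remedy, verify, record loop described above can be sketched in a few lines. This is an illustration of the pattern only, not how Ericsson’s networks are implemented: `HealingLoop`, the fault and remedy names, and the simulated `try_remedy` function are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class HealingLoop:
    remedies: Dict[str, List[str]]          # fault -> candidate remedies, in default order
    apply: Callable[[str, str], bool]       # (fault, remedy) -> did the health check pass afterward?
    lessons: Dict[str, str] = field(default_factory=dict)  # fault -> remedy that worked last time

    def handle(self, fault: str) -> Optional[str]:
        """Try remedies until one verifies, starting with what worked before."""
        candidates = list(self.remedies.get(fault, []))
        known = self.lessons.get(fault)
        if known in candidates:
            candidates.remove(known)
            candidates.insert(0, known)
        for remedy in candidates:
            if self.apply(fault, remedy):     # deploy, then verify
                self.lessons[fault] = remedy  # record the lesson learned
                return remedy
        return None                           # nothing worked: escalate to a human


attempts: List[Tuple[str, str]] = []


def try_remedy(fault: str, remedy: str) -> bool:
    """Simulated environment in which only rerouting fixes link saturation."""
    attempts.append((fault, remedy))
    return (fault, remedy) == ("link_saturation", "reroute_traffic")


loop = HealingLoop(
    remedies={"link_saturation": ["restart_interface", "reroute_traffic"]},
    apply=try_remedy,
)
first = loop.handle("link_saturation")   # tries both remedies, in order
second = loop.handle("link_saturation")  # goes straight to the remembered fix
```

The second incident is resolved in one attempt instead of two, which is the “institutional memory” idea in miniature: the same resources, used more intelligently.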

Meanwhile, Infrastructure as Code (IaC) has evolved too. First-generation IaC carried a detailed recipe: great for reproducibility, less great for adaptation. Modern GitOps approaches add AI-generated templates and policy-as-code guardrails that understand what you’re trying to accomplish.

We’ve been experimenting with AI-driven optimization of resource utilization, and the results have been surprisingly good. The models can spot patterns in failure correlation graphs that would take human analysts weeks to identify. Although they do tend to optimize for metrics you didn’t know you were measuring.

Now, with AI’s help, infrastructure develops “organizational intelligence.” When systems automatically identify root causes, deploy remedies, and record lessons learned, they’re building institutional knowledge that improves their adaptive capacity. This learning loop creates systems that become more sophisticated in their responses rather than just more numerous in their resources.

Evolution enhances scaling effectiveness by making systems smarter about resource utilization and more adaptive to changing conditions, representing a multiplication of capability rather than just multiplication of capacity.

What We’ve Learned (and What We’re Still Learning)

After months of experimentation, here’s what we can say with confidence: AI-native architecture isn’t just about adding AI to existing systems. It’s about rethinking how systems should work when they have AI built in from the start.

The integration challenges are real. MCP adoption must be phased carefully; trying to transform everything at once is a recipe for disaster. Start with high-value APIs where the benefits are obvious, then expand gradually.

Agentic workflows are incredibly powerful, but they need boundaries and guardrails. Think of them as very intelligent children who need to be told not to put their fingers in electrical outlets.

Generative UIs require a different approach to user experience design. Traditional UX principles still apply, but you also need to think about how interfaces evolve and adapt over time.

The infrastructure implications are profound. When your applications can reason about their environments and adapt dynamically, your infrastructure needs to be able to keep up. Static architectures become bottlenecks.

The Gotchas: Hidden Difficulties and the Road Ahead

AI-native systems demand a fundamental shift in how we approach software: Unlike conventional systems with predictable failures, AI-native ones can generate unexpected outcomes, sometimes positive, sometimes requiring urgent intervention.

The move to AI-native presents a significant challenge. You can’t simply layer AI features onto existing systems and expect true AI-native results. Yet a complete overhaul of functional systems isn’t feasible. Many organizations navigate this by running parallel architectures during the transition, a phase that initially increases complexity before yielding benefits. For AI-native systems, data quality is paramount, not merely an operational concern: traditional systems tolerate data quality issues, while AI-native systems drastically amplify them. Adopting AI-native architecture also requires a workforce comfortable with systems that adapt their own behavior. This necessitates rethinking everything from testing methodologies (How do you test learning software?) to debugging emergent behaviors and ensuring quality in self-modifying systems.

This paradigm shift also introduces unprecedented risks. Deploying code and rolling it back when errors are identified may be something systems can learn “observationally.” However, what if the rollback logic turns ultracautious and blocks installation of necessary updates or, worse yet, undoes them? How do you keep autonomous AI-infused beings in check? Keeping them accountable, ethical, and fair will be the foremost challenge. Tackling learning from mislabeled data, misclassification of serious threats as benign, and data inversion attacks, to cite a few, will be crucial for a model’s survival and ongoing trust. Zero trust seems to be the way to go, coupled with rate limiting of access to critical resources, with active telemetry deciding whether to grant ordinary or privileged access.
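To show how zero trust and rate limiting might combine, here is a minimal sketch that checks every request against a telemetry-derived trust score and then a token bucket. The trust score, the 0.7 threshold, and the bucket sizes are assumptions for illustration; `TokenBucket` and `authorize` are hypothetical names, and the clock is passed in explicitly so the behavior is easy to follow.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    capacity: float           # maximum burst size
    refill_per_second: float  # sustained request rate
    tokens: float             # tokens currently available
    last_refill: float = 0.0  # timestamp of the previous check, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill for the elapsed time, then spend `cost` tokens if available."""
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def authorize(bucket: TokenBucket, trust_score: float, now: float,
              min_trust: float = 0.7) -> bool:
    """Zero trust: every request is evaluated; nothing is allowed by default.

    `trust_score` is assumed to come from live telemetry (0.0 to 1.0).
    Low-trust callers are denied before they can consume any tokens.
    """
    if trust_score < min_trust:
        return False
    return bucket.allow(now)


bucket = TokenBucket(capacity=2, refill_per_second=1, tokens=2)
results = [
    authorize(bucket, trust_score=0.9, now=0.0),  # allowed, one token left
    authorize(bucket, trust_score=0.9, now=0.0),  # allowed, bucket now empty
    authorize(bucket, trust_score=0.9, now=0.0),  # denied: rate limit
    authorize(bucket, trust_score=0.4, now=5.0),  # denied: trust too low
    authorize(bucket, trust_score=0.9, now=5.0),  # allowed: bucket has refilled
]
```

An autonomous agent that suddenly starts hammering a critical resource gets throttled by the bucket, and one whose telemetry looks wrong is cut off entirely, regardless of what it was permitted to do a minute earlier.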

We’re at an interesting crossroads. AI-assisted architecture is clearly the future, but learning how to architect systems is still important. Whether or not you go full AI native, you’ll certainly be using some form of AI assistance in your designs. Ask not “How and where do we add AI to our machines and systems?” but rather “How would we do it if we had the opportunity to do it all again?”

The tools are getting better fast. But remember, whatever designs the system and whoever implements it, you’re still responsible. If it’s a weekend project, it can be experimental. If you’re architecting for production, you’re responsible for reliability, security, and maintainability.

Don’t let AI architecture be an excuse for sloppy thinking. Use it to augment your architectural skills, not replace them. And keep learning, because in this field, the moment you stop learning is the moment you become obsolete.

The future of enterprise architecture isn’t just about building systems that use AI. It’s about building systems that think alongside us. And that’s a future worth architecting for.