Organizations face significant challenges managing their large data analytics workloads. Data teams struggle with fragmented development environments, complex resource management, inconsistent monitoring, and cumbersome manual scheduling processes. These issues lead to lengthy development cycles, inefficient resource utilization, reactive troubleshooting, and difficult-to-maintain data pipelines. These challenges are especially critical for enterprises processing terabytes of data daily for business intelligence (BI), reporting, and machine learning (ML). Such organizations need unified solutions that streamline their entire analytics workflow.

The next generation of Amazon SageMaker with Amazon EMR in Amazon SageMaker Unified Studio addresses these pain points through an integrated development environment (IDE) where data workers can develop, test, and refine Spark applications in one consistent environment. Amazon EMR Serverless alleviates cluster management overhead by dynamically allocating resources based on workload requirements, and built-in monitoring tools help teams quickly identify performance bottlenecks. Integration with Apache Airflow through Amazon Managed Workflows for Apache Airflow (Amazon MWAA) provides robust scheduling capabilities, and the pay-only-for-resources-used model delivers significant cost savings.

In this post, we demonstrate how to develop and monitor a Spark application using existing data in Amazon Simple Storage Service (Amazon S3) using SageMaker Unified Studio.

Solution overview

This solution uses SageMaker Unified Studio to run and oversee a Spark application, highlighting its integrated capabilities. We cover the following key steps:

Create an EMR Serverless compute environment for interactive applications using SageMaker Unified Studio.

Create and configure a Spark application.

Use TPC-DS data to build and run the Spark application using a Jupyter notebook in SageMaker Unified Studio.

Monitor application performance and schedule recurring runs with the integrated Amazon MWAA.

Analyze results in SageMaker Unified Studio to optimize workflows.

Prerequisites

For this walkthrough, your prerequisites must include a project in SageMaker Unified Studio, which the following steps build on.

Add EMR Serverless as compute

Complete the following steps to create an EMR Serverless compute environment to build your Spark application:

In SageMaker Unified Studio, open the project you created as a prerequisite and choose Compute.

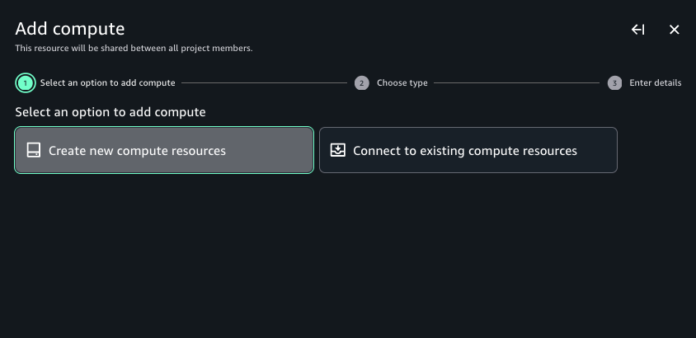

Choose Data processing, then choose Add compute.

Choose Create new compute resources, then choose Next.

Choose EMR Serverless, then choose Next.

For Compute name, enter a name.

For Release label, choose emr-7.5.0.

For Permission mode, choose Compatibility.

Choose Add compute.

It takes a few minutes to spin up the EMR Serverless application. After it’s created, you can view the compute in SageMaker Unified Studio.

The preceding steps demonstrate how you can set up an Amazon EMR Serverless application in SageMaker Unified Studio to run interactive PySpark workloads. In subsequent steps, we build and monitor Spark applications in an interactive JupyterLab workspace.

Develop, monitor, and debug a Spark application in a Jupyter notebook within SageMaker Unified Studio

In this section, we build a Spark application using the TPC-DS dataset within SageMaker Unified Studio. With Amazon SageMaker Data Processing, you can focus on transforming and analyzing your data without managing compute capacity or open source applications, saving you time and reducing costs. SageMaker Data Processing provides a unified developer experience from Amazon EMR, AWS Glue, Amazon Redshift, Amazon Athena, and Amazon MWAA in a single notebook and query interface. You can automatically provision your capacity on Amazon EMR on Amazon Elastic Compute Cloud (Amazon EC2) or EMR Serverless. Scaling rules manage changes to your compute demand to optimize performance and runtimes. Integration with Amazon MWAA simplifies workflow orchestration by alleviating infrastructure management needs. For this post, we use EMR Serverless to read and query the TPC-DS dataset within a notebook and run it using Amazon MWAA.

Complete the following steps:

After you complete the previous steps and prerequisites, navigate to SageMaker Unified Studio and open your project.

Choose Build and then JupyterLab.

The notebook takes about 30 seconds to initialize and connect to the space.

Under Notebook, choose Python 3 (ipykernel).

In the first cell, next to Local Python, choose the dropdown menu and choose PySpark.

Choose the dropdown menu next to Project.Spark and choose EMR-S Compute.

Run the following code to develop your Spark application. This example reads a 3 TB TPC-DS dataset in Parquet format from a publicly accessible S3 bucket:

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/store/").createOrReplaceTempView("store")

After the Spark session starts and execution logs start to populate, you can explore the Spark UI and driver logs to further debug and troubleshoot Spark programs.

The following screenshot shows an example of the Spark UI.

The following screenshot shows an example of the driver logs.

The following screenshot shows the Executors tab, which provides access to the driver and executor logs.

Use the following code to read some more TPC-DS datasets. You can create temporary views and use the Spark UI to see the files being read. Refer to the appendix at the end of this post for details on using the TPC-DS dataset within your buckets.

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/item/").createOrReplaceTempView("item")

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/store_sales/").createOrReplaceTempView("store_sales")

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/date_dim/").createOrReplaceTempView("date_dim")

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/customer/").createOrReplaceTempView("customer")

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/catalog_sales/").createOrReplaceTempView("catalog_sales")

spark.read.parquet("s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/web_sales/").createOrReplaceTempView("web_sales")
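The per-table reads above all follow one pattern, so they could also be written as a loop. The following is a minimal sketch, not part of the original walkthrough: register_tpcds_views is a hypothetical helper name, spark is assumed to be the SparkSession that the PySpark kernel provides, and base_path is assumed to be the dataset prefix used in the preceding cells.

```python
# TPC-DS tables used in this post; each one lives in its own folder under the dataset prefix.
TPCDS_TABLES = [
    "store", "item", "store_sales", "date_dim",
    "customer", "catalog_sales", "web_sales",
]


def register_tpcds_views(spark, base_path, tables=TPCDS_TABLES):
    """Read each table's Parquet files and expose them as a temporary view.

    `spark` is assumed to be the notebook's SparkSession (hypothetical helper).
    Returns the S3 paths that were read, in order.
    """
    paths = []
    for table in tables:
        path = f"{base_path.rstrip('/')}/{table}/"
        # Same call chain as the individual cells: read Parquet, then register the view.
        spark.read.parquet(path).createOrReplaceTempView(table)
        paths.append(path)
    return paths
```

In a notebook cell, you would call register_tpcds_views(spark, "<dataset prefix>") once and then query the views by name.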

In each cell of your notebook, you can expand Spark Job Progress to view the stages of the job submitted to EMR Serverless for a specific cell. You can see the time taken to complete each stage. In addition, if a failure occurs, you can examine the logs, making troubleshooting a seamless experience.

Because the files are partitioned based on the date key column, you can observe that Spark runs parallel tasks for reads.

Next, get the count across the date keys on data that’s partitioned based on the date key using the following code:

select count(1), ss_sold_date_sk from store_sales group by ss_sold_date_sk order by ss_sold_date_sk
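From a PySpark cell, one way to run this statement is through spark.sql. The sketch below is an illustration under assumptions rather than the post's own code: count_by_sold_date is a hypothetical helper, and spark is assumed to be the notebook's SparkSession with the store_sales view already registered.

```python
# Row count per sold-date key, ordered by the key (the partition column of store_sales).
DATE_KEY_COUNTS_SQL = """
select count(1), ss_sold_date_sk
from store_sales
group by ss_sold_date_sk
order by ss_sold_date_sk
"""


def count_by_sold_date(spark):
    """Return a DataFrame with one row per ss_sold_date_sk and its row count.

    Hypothetical helper; `spark` is assumed to be the notebook's SparkSession.
    The query is only planned here. An action such as .show() triggers the job.
    """
    return spark.sql(DATE_KEY_COUNTS_SQL)
```

Calling count_by_sold_date(spark).show(10) runs the aggregation, and the resulting job then appears in the Spark UI.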

Monitor jobs in the Spark UI

On the Jobs tab of the Spark UI, you can see a list of completed or actively running jobs, with the following details:

The action that triggered the job

The time it took (for this example, 41 seconds, but timing will vary)

The number of stages (2) and tasks (3,428); these are for reference and specific to this example

You can choose the job to view more details, particularly around the stages. Our job has two stages; a new stage is created whenever there is a shuffle. We have one stage for the initial reading of each dataset, and one for the aggregation.

In the following example, we run some TPC-DS SQL statements that are used for performance and benchmarks:

with frequent_ss_items as
 (select substr(i_item_desc,1,30) itemdesc,i_item_sk item_sk,d_date solddate,count(*) cnt
  from store_sales, date_dim, item
  where ss_sold_date_sk = d_date_sk
    and ss_item_sk = i_item_sk
    and d_year in (2000, 2000+1, 2000+2,2000+3)
  group by substr(i_item_desc,1,30),i_item_sk,d_date
  having count(*) >4),
 max_store_sales as
 (select max(csales) tpcds_cmax
  from (select c_customer_sk,sum(ss_quantity*ss_sales_price) csales
        from store_sales, customer, date_dim
        where ss_customer_sk = c_customer_sk
          and ss_sold_date_sk = d_date_sk
          and d_year in (2000, 2000+1, 2000+2,2000+3)
        group by c_customer_sk) x),
 best_ss_customer as
 (select c_customer_sk,sum(ss_quantity*ss_sales_price) ssales
  from store_sales, customer
  where ss_customer_sk = c_customer_sk
  group by c_customer_sk
  having sum(ss_quantity*ss_sales_price) > (95/100.0) *
    (select * from max_store_sales))
select sum(sales)
from (select cs_quantity*cs_list_price sales
      from catalog_sales, date_dim
      where d_year = 2000
        and d_moy = 2
        and cs_sold_date_sk = d_date_sk
        and cs_item_sk in (select item_sk from frequent_ss_items)
        and cs_bill_customer_sk in (select c_customer_sk from best_ss_customer)
      union all
      (select ws_quantity*ws_list_price sales
       from web_sales, date_dim
       where d_year = 2000
         and d_moy = 2
         and ws_sold_date_sk = d_date_sk
         and ws_item_sk in (select item_sk from frequent_ss_items)
         and ws_bill_customer_sk in (select c_customer_sk from best_ss_customer))) x

You can monitor your Spark job in SageMaker Unified Studio using two methods. Jupyter notebooks provide basic monitoring, showing real-time job status and execution progress. For more detailed analysis, use the Spark UI. You can examine specific stages, tasks, and execution plans. The Spark UI is particularly useful for troubleshooting performance issues and optimizing queries. You can track estimated stages, running tasks, and job timing details. This comprehensive view helps you understand resource utilization and track job progress in depth.

In this section, we explained how you can use EMR Serverless compute in SageMaker Unified Studio to build an interactive Spark application. Through the Spark UI, the interactive application provides fine-grained task-level status, I/O, and shuffle details, as well as links to corresponding logs of the task for this stage directly from your notebook, enabling a seamless troubleshooting experience.

Clean up

To avoid ongoing charges in your AWS account, delete the resources you created during this tutorial:

Delete the connection.

Delete the EMR job.

Delete the EMR output S3 buckets.

Delete the Amazon MWAA resources, such as workflows and environments.

Conclusion

In this post, we demonstrated how the next generation of SageMaker, combined with EMR Serverless, provides a powerful solution for developing, monitoring, and scheduling Spark applications using data in Amazon S3. The integrated experience significantly reduces complexity by offering a unified development environment, automated resource management, and comprehensive monitoring capabilities through the Spark UI, while maintaining cost-efficiency through a pay-as-you-go model. For businesses, this means faster time-to-insight, improved team collaboration, and reduced operational overhead, so data teams can focus on analytics rather than infrastructure management.

To get started, explore the Amazon SageMaker Unified Studio User Guide, set up a project in your AWS environment, and discover how this solution can transform your organization’s data analytics capabilities.

Appendix

In the following sections, we discuss how to run a workload on a schedule and provide details about the TPC-DS dataset for building the Spark application using EMR Serverless.

Run a workload on a schedule

In this section, we deploy a JupyterLab notebook and create a workflow using Amazon MWAA. You can use workflows to orchestrate notebooks, querybooks, and more in your project repositories. With workflows, you can define a set of tasks organized as a directed acyclic graph (DAG) that can run on a user-defined schedule.

Complete the following steps:

In SageMaker Unified Studio, choose Build, and under Orchestration, choose Workflows.

Choose Create Workflow in Editor.

You’ll be redirected to the JupyterLab notebook with a new DAG called untitled.py created under the /src/workflows/dag folder.

We rename this file to tpcds_data_queries.py.

You can reuse the existing template with the following updates:

Update line 17 with the schedule you want your code to run on.

Update line 26 with your NOTEBOOK_PATH. This should be a .ipynb file under src/. Note the name of the automatically generated dag_id; you can name it based on your requirements.

Choose File and Save notebook.
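Before saving, it can help to sanity-check the two values you edited in the template. The following is a minimal sketch of such a check, not something the template itself provides: check_workflow_settings is a hypothetical helper, the preset list covers only common Airflow schedule presets, and the cron test only counts fields rather than validating them.

```python
# Common Airflow schedule presets. A five-field cron string is accepted as well.
AIRFLOW_PRESETS = {"@once", "@hourly", "@daily", "@weekly", "@monthly", "@yearly"}


def check_workflow_settings(schedule, notebook_path):
    """Return a list of problems found in the schedule and notebook path.

    Hypothetical helper: an empty list means both values look plausible.
    """
    problems = []
    is_preset = schedule in AIRFLOW_PRESETS
    is_cron = len(schedule.split()) == 5  # minute hour day-of-month month day-of-week
    if not (is_preset or is_cron):
        problems.append(f"schedule {schedule!r} is neither a known preset nor a 5-field cron string")
    # The post says the notebook path should be a .ipynb file under src/.
    if not (notebook_path.startswith("src/") and notebook_path.endswith(".ipynb")):
        problems.append(f"notebook path {notebook_path!r} should be a .ipynb file under src/")
    return problems
```

For example, check_workflow_settings("0 6 * * *", "src/my_notebook.ipynb") returns an empty list, where my_notebook.ipynb is a placeholder name.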

To test, you can trigger a manual run of your workload.

In SageMaker Unified Studio, choose Build, and under Orchestration, choose Workflows.

Choose your workflow, then choose Run.

You can monitor the success of your job on the Runs tab.

To debug your notebook job by accessing the Spark UI within your Airflow job console, you must use EMR Serverless Airflow Operators to submit your job. The link is available on the Details tab of your query.

This option has the following key limitations: it’s not available for Amazon EMR on EC2, and SageMaker notebook job operators don’t work.

You can configure the operator to generate one-time links to the application UIs and Spark stdout logs by passing enable_application_ui_links=True as a parameter. After the job starts running, these links are available on the Details tab of the relevant job. If enable_application_ui_links=False, then the links will be present but grayed out.
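As a rough illustration of where that parameter goes, the sketch below builds the keyword arguments for EmrServerlessStartJobOperator from the Amazon provider package for Airflow. The helper name, the task_id, and all argument values are assumptions for illustration; depending on your provider version, additional arguments such as configuration_overrides may be needed.

```python
def emr_serverless_task_kwargs(application_id, execution_role_arn, entry_point, ui_links=True):
    """Build keyword arguments for EmrServerlessStartJobOperator (hypothetical helper).

    The values passed in are placeholders that you supply for your own account.
    """
    return {
        "task_id": "run_tpcds_queries",  # hypothetical task name
        "application_id": application_id,
        "execution_role_arn": execution_role_arn,
        # Same shape as the jobDriver field of the EMR Serverless StartJobRun API.
        "job_driver": {"sparkSubmit": {"entryPoint": entry_point}},
        # Ask the operator to generate the one-time Spark UI and log links.
        "enable_application_ui_links": ui_links,
    }


# Inside a DAG file with apache-airflow-providers-amazon installed, this would be used as:
#   from airflow.providers.amazon.aws.operators.emr import EmrServerlessStartJobOperator
#   EmrServerlessStartJobOperator(**emr_serverless_task_kwargs(app_id, role_arn, script_uri))
```

Keeping the arguments in a plain dictionary makes it simple to switch the links off by passing ui_links=False.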

Make sure you have the emr-serverless:GetDashboardForJobRun AWS Identity and Access Management (IAM) permission to generate the dashboard link.
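For reference, an IAM policy statement that grants this action could look like the output of the sketch below. This is an assumption-laden example, not a policy from the original post: dashboard_link_policy is a hypothetical helper, and the default Resource of "*" is a placeholder that you would normally narrow to your own application and job runs.

```python
import json


def dashboard_link_policy(resource="*"):
    """Return an IAM policy document allowing emr-serverless:GetDashboardForJobRun.

    Hypothetical helper; "*" is a placeholder resource and should be scoped down.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["emr-serverless:GetDashboardForJobRun"],
                "Resource": resource,
            }
        ],
    }


# Print the JSON document so it can be pasted into an IAM policy editor.
print(json.dumps(dashboard_link_policy(), indent=2))
```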

Open the Airflow UI for your job. The Spark UI and history server dashboard options are visible on the Details tab, as shown in the following screenshot.

The following screenshot shows the Jobs tab of the Spark UI.

Use the TPC-DS dataset to build the Spark application using EMR Serverless

To use the TPC-DS dataset to run the Spark application against a dataset in an S3 bucket, you need to copy the TPC-DS dataset into your S3 bucket:

Create a new S3 bucket in your test account if needed. In the following command, replace $YOUR_S3_BUCKET with your S3 bucket name. We suggest you export YOUR_S3_BUCKET as an environment variable.

Copy the TPC-DS source data as input to your S3 bucket. If it’s not exported as an environment variable, replace $YOUR_S3_BUCKET with your S3 bucket name:

aws s3 sync s3://blogpost-sparkoneks-us-east-1/blog/BLOG_TPCDS-TEST-3T-partitioned/ s3://$YOUR_S3_BUCKET/blog/BLOG_TPCDS-TEST-3T-partitioned/
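If you prefer to drive the copy from Python, the sync command can be assembled from the same environment variable. This is a minimal sketch under assumptions: build_sync_command is a hypothetical helper, it only builds the argument list, and running it requires the AWS CLI to be installed and configured.

```python
import os


def build_sync_command(source_prefix, bucket=None):
    """Return the `aws s3 sync` argument list that mirrors source_prefix into bucket.

    Hypothetical helper. If bucket is None, the name is read from the
    YOUR_S3_BUCKET environment variable.
    """
    bucket = bucket or os.environ.get("YOUR_S3_BUCKET")
    if not bucket:
        raise ValueError("Set YOUR_S3_BUCKET or pass a bucket name")
    if not source_prefix.startswith("s3://"):
        raise ValueError("source_prefix must start with s3://")
    # "s3://bucket/key/..." -> keep the key so the layout is identical in the target bucket.
    parts = source_prefix.split("/", 3)
    key = parts[3] if len(parts) > 3 else ""
    return ["aws", "s3", "sync", source_prefix, f"s3://{bucket}/{key}"]


# To run the copy (requires the AWS CLI):
#   import subprocess
#   subprocess.run(build_sync_command("<source prefix from the command above>"), check=True)
```

Passing the command as a list avoids shell quoting issues if the bucket name comes from user input.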

About the Authors

Amit Maindola is a Senior Data Architect focused on data engineering, analytics, and AI/ML at Amazon Web Services. He helps customers in their digital transformation journey and enables them to build highly scalable, robust, and secure cloud-based analytical solutions on AWS to gain timely insights and make critical business decisions.

Abhilash is a senior specialist solutions architect at Amazon Web Services (AWS), helping public sector customers on their cloud journey with a focus on AWS Data and AI services. Outside of work, Abhilash enjoys learning new technologies, watching movies, and visiting new places.