With GenSim-2, developers can modify weather and lighting conditions such as rain, fog, snow, glare, and time of day or night in video data. | Source: Helm.ai

Helm.ai last week launched Helm.ai Driver, a real-time, transformer-based deep neural network (DNN) path-prediction system for highway and urban Level 4 autonomous driving. The company demonstrated the model’s capabilities in a closed-loop environment, using its proprietary GenSim-2 generative AI foundation model to re-render realistic sensor data in simulation.

“We’re excited to showcase real-time path prediction for urban driving with Helm.ai Driver, based on our proprietary transformer DNN architecture that requires only vision-based perception as input,” said Vladislav Voroninski, CEO and founder of Helm.ai. “By training on real-world data, we developed a sophisticated path-prediction system which mimics the nuanced behaviors of human drivers, learning end to end without any explicitly defined rules.”

“Importantly, our urban path prediction for (SAE) L2 through L4 is compatible with our production-grade, surround-view vision perception stack,” he continued. “By further validating Helm.ai Driver in a closed-loop simulator, and combining it with our generative AI-based sensor simulation, we’re enabling safer and more scalable development of autonomous driving systems.”

Founded in 2016, Helm.ai develops artificial intelligence software for advanced driver-assistance systems (ADAS), autonomous vehicles, and robotics. The company offers full-stack, real-time AI systems, including end-to-end autonomous systems, plus development and validation tools powered by its Deep Teaching methodology and generative AI.

Redwood City, Calif.-based Helm.ai collaborates with global automakers on production-bound projects. In December, it unveiled GenSim-2, its generative AI model for creating and modifying video data for autonomous driving.

Helm.ai Driver learns in real time

Helm.ai said its new model predicts the path of a self-driving vehicle in real time using only camera-based perception, with no HD maps, lidar, or additional sensors required. It takes the output of Helm.ai’s production-grade perception stack as input, making it directly compatible with highly validated software. This modular architecture enables efficient validation and greater interpretability, said the company.

Trained on large-scale, real-world data using Helm.ai’s proprietary Deep Teaching methodology, the path-prediction model exhibits robust, human driver-like behaviors in complex urban driving scenarios, the company claimed. These include handling intersections, turns, obstacle avoidance, passing maneuvers, and responding to vehicle cut-ins. Helm.ai noted that these are emergent behaviors from end-to-end learning, not explicitly programmed or tuned into the system.

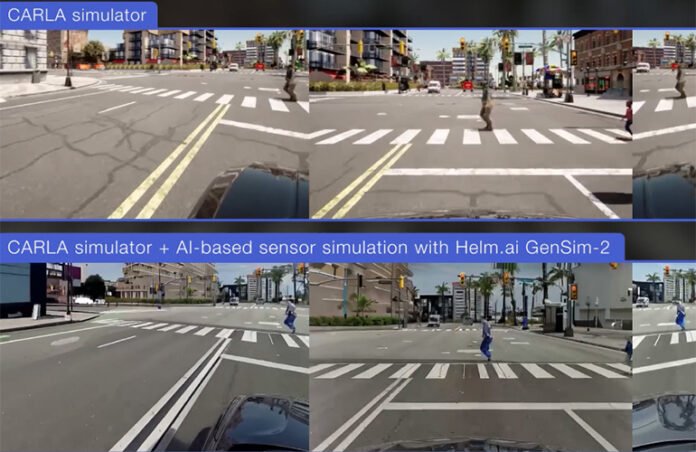

To demonstrate the model’s path-prediction capabilities in a realistic, dynamic environment, Helm.ai deployed it in a closed-loop simulation using the open-source CARLA platform (see video above). In this setting, Helm.ai Driver continuously responded to its environment, just as it would when driving in the real world.

In addition, Helm.ai said GenSim-2 re-rendered the simulated scenes to produce realistic camera outputs that closely resemble real-world visuals.

Helm.ai said its foundation models for path prediction and generative sensor simulation “are key building blocks of its AI-first approach to autonomous driving.” The company plans to continue delivering models that generalize across vehicle platforms, geographies, and driving conditions.