
Two popular approaches for customizing large language models (LLMs) for downstream tasks are fine-tuning and in-context learning (ICL). In a recent study, researchers at Google DeepMind and Stanford University explored the generalization capabilities of these two methods. They found that ICL generalizes better, although it comes at a higher computation cost during inference. They also propose a novel approach to get the best of both worlds.

The findings can help developers make crucial decisions when building LLM applications for their bespoke enterprise data.

Testing how language models learn new tricks

Fine-tuning involves taking a pre-trained LLM and further training it on a smaller, specialized dataset. This adjusts the model’s internal parameters to teach it new knowledge or skills. In-context learning (ICL), on the other hand, doesn’t change the model’s underlying parameters. Instead, it guides the LLM by providing examples of the desired task directly within the input prompt. The model then uses these examples to figure out how to handle a new, similar query.

The researchers set out to rigorously compare how well models generalize to new tasks using these two methods. They constructed “controlled synthetic datasets of factual knowledge” with complex, self-consistent structures, like imaginary family trees or hierarchies of fictional concepts.

To ensure they were testing the model’s ability to learn new information, they replaced all nouns, adjectives, and verbs with nonsense terms, avoiding any overlap with the data the LLMs might have encountered during pre-training.
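The substitution step can be pictured with a minimal sketch. It assumes the caller already knows which content words to replace (the part-of-speech tagging is not shown), and the names `build_mapping` and `anonymize` are hypothetical, not taken from the paper.

```python
import random
import re

CONSONANTS = "bdfghjklmnprstvz"
VOWELS = "aeiou"


def make_nonsense_word(rng):
    """Return a four-letter consonant-vowel-consonant-consonant token."""
    return (
        rng.choice(CONSONANTS)
        + rng.choice(VOWELS)
        + rng.choice(CONSONANTS)
        + rng.choice(CONSONANTS)
    )


def build_mapping(content_words, seed=0):
    """Assign each content word a unique nonsense replacement (hypothetical helper)."""
    rng = random.Random(seed)
    reserved = {word.lower() for word in content_words}
    used = set()
    mapping = {}
    for word in content_words:
        key = word.lower()
        if key in mapping:
            continue
        candidate = make_nonsense_word(rng)
        # Re-draw until the token is new and is not one of the real words.
        while candidate in used or candidate in reserved:
            candidate = make_nonsense_word(rng)
        used.add(candidate)
        mapping[key] = candidate
    return mapping


def anonymize(sentence, mapping):
    """Swap every mapped word in the sentence; other words stay unchanged."""
    def swap(match):
        token = match.group(0)
        return mapping.get(token.lower(), token)

    return re.sub(r"[A-Za-z]+", swap, sentence)


mapping = build_mapping(["sharks", "dolphins"])
print(anonymize("sharks are more dangerous than dolphins", mapping))
```

Because the same mapping is reused for every sentence, the relationships between facts survive even though the individual words no longer carry any meaning from pre-training. This sketch does not preserve capitalization of replaced words.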

The models were then tested on various generalization challenges. For instance, one test involved simple reversals. If a model was trained that “femp are more dangerous than glon,” could it correctly infer that “glon are less dangerous than femp”? Another test focused on simple syllogisms, a form of logical deduction. If told “All glon are yomp” and “All troff are glon,” could the model deduce that “All troff are yomp”? They also used a more complex “semantic structure benchmark” with a richer hierarchy of these made-up facts to test more nuanced understanding.
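As a rough illustration of these two test types, the sketch below builds a training statement and the held-out statement a model would need to infer. The sentence templates follow the examples quoted above; the function names are hypothetical and the paper’s actual dataset generation may differ.

```python
# Opposite comparison words, used to flip a comparison.
REVERSE = {"more": "less", "less": "more"}


def reversal(a, relation, adjective, b):
    """Return (training statement, held-out reversed statement)."""
    train = f"{a} are {relation} {adjective} than {b}"
    test = f"{b} are {REVERSE[relation]} {adjective} than {a}"
    return train, test


def syllogism(a, b, c):
    """Return (training premises, held-out conclusion) for 'All a are b, all b are c'."""
    premises = [f"All {b} are {c}", f"All {a} are {b}"]
    conclusion = f"All {a} are {c}"
    return premises, conclusion


train, test = reversal("femp", "more", "dangerous", "glon")
print(train)  # femp are more dangerous than glon
print(test)   # glon are less dangerous than femp

premises, conclusion = syllogism("troff", "glon", "yomp")
print(premises)    # ['All glon are yomp', 'All troff are glon']
print(conclusion)  # All troff are yomp
```

In both cases the model only ever sees the training side, so a correct answer on the held-out side indicates generalization rather than recall.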

“Our results are focused primarily on settings about how models generalize to deductions and reversals from fine-tuning on novel knowledge structures, with clear implications for situations when fine-tuning is used to adapt a model to company-specific and proprietary information,” Andrew Lampinen, Research Scientist at Google DeepMind and lead author of the paper, told VentureBeat.

To evaluate performance, the researchers fine-tuned Gemini 1.5 Flash on these datasets. For ICL, they fed the entire training dataset (or large subsets) as context to an instruction-tuned model before posing the test questions.
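The ICL setup can be sketched as simple prompt assembly: the training facts go into the context and the test question follows. The prompt wording and the `build_icl_prompt` helper are assumptions for illustration; the researchers’ exact prompt format is not given in this article.

```python
def build_icl_prompt(training_facts, question, max_facts=None):
    """Place the training facts (or a subset) in the context, then ask the question.

    Hypothetical helper; the wording of the prompt is an assumption.
    """
    facts = training_facts if max_facts is None else training_facts[:max_facts]
    context = "\n".join(f"- {fact}" for fact in facts)
    return f"Here are some facts:\n{context}\n\nQuestion: {question}\nAnswer:"


facts = ["All glon are yomp", "All troff are glon"]
print(build_icl_prompt(facts, "Are all troff yomp?"))
```

Every query repeats the whole context, which is why the prompt grows with the dataset rather than with the question.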

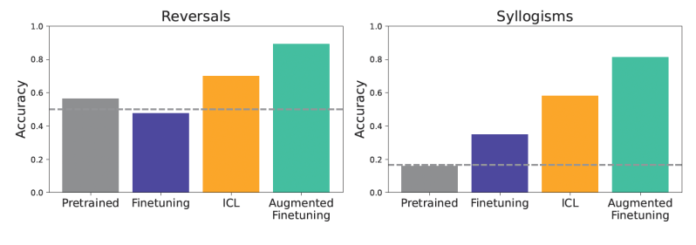

The results consistently showed that, in data-matched settings, ICL led to better generalization than standard fine-tuning. Models using ICL were generally better at tasks like reversing relationships or making logical deductions from the provided context. Pre-trained models, without fine-tuning or ICL, performed poorly, indicating the novelty of the test data.

“One of the main trade-offs to consider is that, whilst ICL doesn’t require fine-tuning (which saves the training costs), it is generally more computationally expensive with each use, since it requires providing additional context to the model,” Lampinen said. “On the other hand, ICL tends to generalize better for the datasets and models that we evaluated.”

A hybrid approach: Augmenting fine-tuning

Building on the observation that ICL excels at flexible generalization, the researchers proposed a new method to enhance fine-tuning: adding in-context inferences to fine-tuning data. The core idea is to use the LLM’s own ICL capabilities to generate more diverse and richly inferred examples, and then add these augmented examples to the dataset used for fine-tuning.

They explored two main data augmentation strategies:

A local strategy: This approach focuses on individual pieces of information. The LLM is prompted to rephrase single sentences from the training data or draw direct inferences from them, such as generating reversals.

A global strategy: The LLM is given the full training dataset as context, then prompted to generate inferences by linking a particular document or fact with the rest of the provided information, leading to a longer reasoning trace of relevant inferences.
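The two augmentation strategies described above can be sketched as follows. This is a minimal illustration, not the researchers’ pipeline: `generate` stands in for any function that sends a prompt to an LLM and returns its text, and the prompt wording and function names are assumptions.

```python
def local_augment(facts, generate):
    """Per-fact strategy: each prompt contains a single statement."""
    augmented = []
    for fact in facts:
        prompt = (
            "Rephrase the following statement and state any direct "
            f"inferences, such as its reversal:\n{fact}"
        )
        augmented.append(generate(prompt))
    return augmented


def global_augment(facts, generate):
    """Whole-dataset strategy: each prompt contains every fact as context."""
    context = "\n".join(facts)
    augmented = []
    for fact in facts:
        prompt = (
            f"Context:\n{context}\n\n"
            "Using the context above, list inferences that connect this "
            f"statement to the rest:\n{fact}"
        )
        augmented.append(generate(prompt))
    return augmented


def build_finetuning_set(facts, generate):
    """Original facts plus both kinds of generated inferences."""
    return list(facts) + local_augment(facts, generate) + global_augment(facts, generate)


def echo_model(prompt):
    """Stand-in for a real LLM call; returns a placeholder string."""
    return f"[model output for a {len(prompt)}-character prompt]"


facts = ["All glon are yomp", "All troff are glon"]
for example in build_finetuning_set(facts, echo_model):
    print(example)
```

The only difference between the two functions is how much of the dataset the model sees while generating: one statement at a time, or everything at once. The augmentation cost is paid once, before fine-tuning, rather than on every query.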

When the models were fine-tuned on these augmented datasets, the gains were significant. Augmented fine-tuning improved generalization, outperforming not only standard fine-tuning but also plain ICL.

“For example, if one of the company documents says ‘XYZ is an internal tool for analyzing data,’ our results suggest that ICL and augmented finetuning will be more effective at enabling the model to answer related questions like ‘What internal tools for data analysis exist?’” Lampinen said.

This approach offers a compelling path forward for enterprises. By investing in creating these ICL-augmented datasets, developers can build fine-tuned models that exhibit stronger generalization capabilities.

This can lead to more robust and reliable LLM applications that perform better on diverse, real-world inputs without incurring the continuous inference-time costs associated with large in-context prompts.

“Augmented fine-tuning will generally make the model fine-tuning process more expensive, because it requires an additional step of ICL to augment the data, followed by fine-tuning,” Lampinen said. “Whether that additional cost is merited by the improved generalization will depend on the specific use case. However, it is computationally cheaper than applying ICL every time the model is used, when amortized over many uses of the model.”
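The amortization argument can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a simple cost model (a one-time augmentation and fine-tuning cost, plus a fixed cost per query for each approach); the function name and all numbers are hypothetical and are not figures from the study.

```python
import math


def break_even_queries(one_time_cost, icl_cost_per_query, finetuned_cost_per_query):
    """Number of queries after which augmented fine-tuning is cheaper overall.

    Returns None when the fine-tuned model is not cheaper per query, since
    the one-time cost is then never recovered.
    """
    saving_per_query = icl_cost_per_query - finetuned_cost_per_query
    if saving_per_query <= 0:
        return None
    return math.ceil(one_time_cost / saving_per_query)


# Hypothetical costs in arbitrary units: 1000 up front, 0.5 per long
# in-context query, 0.25 per short query to the fine-tuned model.
print(break_even_queries(1000.0, 0.5, 0.25))  # 4000
```

Under these made-up numbers the up-front cost is recovered after 4,000 queries; an application expected to serve fewer queries than its break-even point would be cheaper with plain ICL.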

While Lampinen noted that further research is needed to see how the components they studied interact in different settings, he added that their findings indicate that developers may want to consider exploring augmented fine-tuning in cases where they see inadequate performance from fine-tuning alone.

“Ultimately, we hope this work will contribute to the science of understanding learning and generalization in foundation models, and the practicalities of adapting them to downstream tasks,” Lampinen said.
