
The distant horizon is always murky, the minute details obscured by sheer distance and atmospheric haze. This is why forecasting the future is so imprecise: We cannot clearly see the outlines of the shapes and events ahead of us. Instead, we take educated guesses.

The newly published AI 2027 scenario, developed by a team of AI researchers and forecasters with experience at institutions like OpenAI and the Center for AI Policy, offers a detailed two- to three-year forecast that includes specific technical milestones. Being near-term, it speaks with great clarity about our AI near future.

Informed by extensive expert feedback and scenario planning exercises, AI 2027 outlines a quarter-by-quarter progression of anticipated AI capabilities, notably multimodal models achieving advanced reasoning and autonomy. What makes this forecast particularly noteworthy is both its specificity and the credibility of its contributors, who have direct insight into current research pipelines.

The most notable prediction is that artificial general intelligence (AGI) will be achieved in 2027, and artificial superintelligence (ASI) will follow months later. AGI matches or exceeds human capabilities across virtually all cognitive tasks, from scientific research to creative endeavors, while demonstrating adaptability, common sense reasoning and self-improvement. ASI goes further, representing systems that dramatically surpass human intelligence, with the ability to solve problems we cannot even comprehend.

Like many predictions, these are based on assumptions, not the least of which is that AI models and applications will continue to progress exponentially, as they have for the last several years. As such, it is plausible, but not guaranteed, to expect exponential progress, especially as scaling of these models may now be hitting diminishing returns.

Not everyone agrees with these predictions. Ali Farhadi, the CEO of the Allen Institute for Artificial Intelligence, told The New York Times: “I’m all for projections and forecasts, but this (AI 2027) forecast doesn’t seem to be grounded in scientific evidence, or the reality of how things are evolving in AI.”

However, there are others who view this evolution as plausible. Anthropic co-founder Jack Clark wrote in his Import AI newsletter that AI 2027 is: “The best treatment yet of what ‘living in an exponential’ might look like.” He added that it is a “technically astute narrative of the next few years of AI development.” This timeline also aligns with that proposed by Anthropic CEO Dario Amodei, who has said that AI that can surpass humans in almost everything will arrive in the next two to three years. And Google DeepMind said in a new research paper that AGI could plausibly arrive by 2030.

The great acceleration: Disruption without precedent

This seems like an auspicious time. There have been similar moments in history, including the invention of the printing press and the spread of electricity. However, those advances required many years and decades to have a significant impact.

The arrival of AGI feels different, and potentially frightening, especially if it is imminent. AI 2027 describes one scenario in which, due to misalignment with human values, superintelligent AI destroys humanity. If its authors are right, the most consequential risk for humanity may now be within the same planning horizon as your next smartphone upgrade. For its part, the Google DeepMind paper notes that human extinction is a possible outcome from AGI, albeit unlikely in its authors' view.

Opinions change slowly until people are presented with overwhelming evidence. That is one takeaway from Thomas Kuhn’s singular work “The Structure of Scientific Revolutions.” Kuhn reminds us that worldviews do not shift overnight, until, suddenly, they do. And with AI, that shift may already be underway.

The future draws near

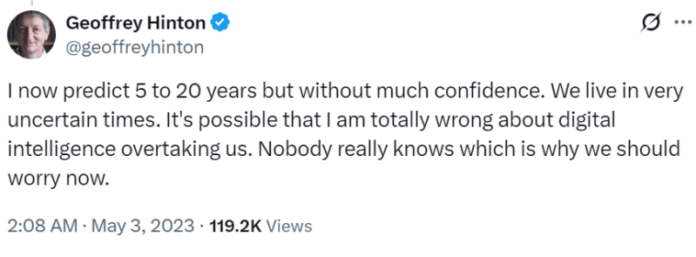

Before the appearance of large language models (LLMs) and ChatGPT, the median timeline projection for AGI was much longer than it is today. The consensus among experts and prediction markets placed the median expected arrival of AGI around the year 2058. Before 2023, Geoffrey Hinton, one of the “Godfathers of AI” and a Turing Award winner, thought AGI was “30 to 50 years or even longer away.” However, progress shown by LLMs led him to change his mind, and he said it could arrive as soon as 2028.

There are numerous implications for humanity if AGI does arrive in the next several years and is followed quickly by ASI. Writing in Fortune, Jeremy Kahn said that if AGI arrives in the next few years “it could indeed lead to large job losses, as many organizations would be tempted to automate roles.”

A two-year AGI runway offers an insufficient grace period for individuals and businesses to adapt. Industries such as customer service, content creation, programming and data analysis could face a dramatic upheaval before retraining infrastructure can scale. This pressure will only intensify if a recession occurs in this timeframe, when companies are already looking to reduce payroll costs and often supplant personnel with automation.

Cogito, Ergo… Oh?

Even if AGI does not lead to extensive job losses or species extinction, there are other serious ramifications. Ever since the Age of Reason, human existence has been grounded in a belief that we matter because we think.

This belief that thinking defines our existence has deep philosophical roots. It was René Descartes, writing in 1637, who articulated the now-famous phrase: “Je pense, donc je suis” (“I think, therefore I am”). He later translated it into Latin: “Cogito, ergo sum.” In so doing, he proposed that certainty could be found in the act of individual thought. Even if he were deceived by his senses, or misled by others, the very fact that he was thinking proved that he existed.

In this view, the self is anchored in cognition. It was a revolutionary idea at the time and gave rise to Enlightenment humanism, the scientific method and, ultimately, modern democracy and individual rights. Humans as thinkers became the central figures of the modern world.

Which raises a profound question: If machines can now think, or appear to think, and we outsource our thinking to AI, what does that mean for the modern conception of the self? A recent study reported by 404 Media explores this conundrum. It found that when people rely heavily on generative AI for work, they engage in less critical thinking which, over time, can “result in the deterioration of cognitive faculties that ought to be preserved.”

Where do we go from here?

If AGI is coming in the next few years, or soon thereafter, we must rapidly grapple with its implications not just for jobs and safety, but for who we are. And we must do so while also acknowledging its extraordinary potential to accelerate discovery, reduce suffering and extend human capability in unprecedented ways. For example, Amodei has said that “powerful AI” will enable 100 years of biological research and its benefits, including improved healthcare, to be compressed into 5 to 10 years.

The forecasts presented in AI 2027 may or may not be correct, but they are plausible and provocative. And that plausibility should be enough. As individuals with agency, and as members of companies, governments and societies, we must act now to prepare for what may be coming.

For businesses, this means investing in both technical AI safety research and organizational resilience, creating roles that integrate AI capabilities while amplifying human strengths. For governments, it requires accelerated development of regulatory frameworks that address both immediate concerns like model evaluation and longer-term existential risks. For individuals, it means embracing continuous learning focused on uniquely human skills including creativity, emotional intelligence and complex judgment, while developing healthy working relationships with AI tools that do not diminish our agency.

The time for abstract debate about distant futures has passed; concrete preparation for near-term transformation is urgently needed. Our future will not be written by algorithms alone. It will be shaped by the choices we make, and the values we uphold, starting today.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.
