Organizations running Apache Spark workloads, whether on Amazon EMR, AWS Glue, Amazon Elastic Kubernetes Service (Amazon EKS), or self-managed clusters, invest countless engineering hours in performance troubleshooting and optimization. When a critical extract, transform, and load (ETL) pipeline fails or runs slower than expected, engineers end up spending hours navigating multiple interfaces such as logs or the Spark UI, correlating metrics across different systems, and manually analyzing execution patterns to identify root causes. Although Spark History Server provides detailed telemetry data, including job execution timelines, stage-level metrics, and resource consumption patterns, accessing and interpreting this wealth of information requires deep expertise in Spark internals and navigating multiple interconnected web interface tabs.

Today, we’re announcing the open source release of Spark History Server MCP, a specialized Model Context Protocol (MCP) server that transforms this workflow by enabling AI assistants to access and analyze your existing Spark History Server data through natural language interactions. This project, developed collaboratively by AWS open source and Amazon SageMaker Data Processing, turns complex debugging sessions into conversational interactions that deliver faster, more accurate insights without requiring changes to your current Spark infrastructure. You can use this MCP server with your self-managed or AWS managed Spark History Servers to analyze Spark applications running in the cloud or in on-premises deployments.

Understanding the Spark observability challenge

Apache Spark has become the standard for large-scale data processing, powering critical ETL pipelines, real-time analytics, and machine learning (ML) workloads across thousands of organizations. Building and maintaining Spark applications is, however, still an iterative process, where developers spend significant time testing, optimizing, and troubleshooting their code. Spark application developers focused on data engineering and data integration use cases often encounter significant operational challenges for a few different reasons:

Complex connectivity and configuration options to a variety of resources with Spark – Although this makes it a popular data processing platform, it often makes it challenging to find the root cause of inefficiencies or failures when Spark configurations aren’t optimally or correctly set.

Spark’s in-memory processing model and distributed partitioning of datasets across its workers – Although good for parallelism, this often makes it difficult for users to identify inefficiencies. The result is slow application execution, or failures caused by resource exhaustion issues such as out-of-memory and disk exceptions whose root cause is hard to find.

Lazy evaluation of Spark transformations – Although lazy evaluation optimizes performance, it makes it challenging to accurately and quickly identify the application code and logic that caused the failure from the distributed logs and metrics emitted from different executors.

Spark History Server

Spark History Server provides a centralized web interface for monitoring completed Spark applications, serving comprehensive telemetry data including job execution timelines, stage-level metrics, task distribution, executor resource consumption, and SQL query execution plans. Although Spark History Server assists developers with performance debugging, code optimization, and capacity planning, it still has challenges:

Time-intensive manual workflows – Engineers spend hours navigating the Spark History Server UI, switching between multiple tabs to correlate metrics across jobs, stages, and executors. Engineers must constantly switch between the Spark UI, cluster monitoring tools, code repositories, and documentation to piece together a complete picture of application performance, which often takes days.

Expertise bottlenecks – Effective Spark debugging requires a deep understanding of execution plans, memory management, and shuffle operations. This specialized knowledge creates dependencies on senior engineers and limits team productivity.

Reactive problem-solving – Teams typically discover performance issues only after they impact production systems. Manual monitoring approaches don’t scale to proactively identify degradation patterns across hundreds of daily Spark jobs.
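To make the idea of a "degradation pattern" concrete, here is a minimal sketch in Python that flags runs taking much longer than the typical run. It assumes you have already collected per-run durations in milliseconds (for example, from the `duration` field of application attempts returned by the Spark History Server REST endpoint `/api/v1/applications`). The function name, the sample values, and the 1.5x threshold are illustrative assumptions, not part of the project.

```python
from __future__ import annotations

from statistics import median


def flag_slow_runs(durations_ms: dict[str, int], factor: float = 1.5) -> list[str]:
    """Return the IDs of runs whose duration exceeds factor x the median duration.

    durations_ms maps an application ID to its run time in milliseconds.
    The threshold factor is an arbitrary illustrative default.
    """
    if not durations_ms:
        return []
    baseline = median(durations_ms.values())
    return sorted(
        app_id for app_id, duration in durations_ms.items()
        if duration > factor * baseline
    )


# Illustrative sample data (not real measurements).
SAMPLE_DURATIONS_MS = {
    "app-1": 60_000,
    "app-2": 62_000,
    "app-3": 58_000,
    "app-4": 150_000,
}

if __name__ == "__main__":
    print(flag_slow_runs(SAMPLE_DURATIONS_MS))
```

A median baseline is used here because a single very slow run does not shift it much; a production check would likely compare runs of the same job over time instead.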

How MCP transforms Spark observability

The Model Context Protocol provides a standardized interface for AI agents to access domain-specific data sources. Unlike general-purpose AI assistants operating with limited context, MCP-enabled agents can access technical information about specific systems and provide insights based on actual operational data rather than generic recommendations.

With Spark History Server accessible through MCP, engineers no longer need to manually gather performance metrics from multiple sources and correlate them to understand application behavior. Instead, they can engage with AI agents that have direct access to all Spark execution data. These agents can analyze execution patterns, identify performance bottlenecks, and provide optimization recommendations based on actual job characteristics rather than general best practices.

Introduction to Spark History Server MCP

The Spark History Server MCP is a specialized bridge between AI agents and your existing Spark History Server infrastructure. It connects to one or more Spark History Server instances and exposes their data through standardized tools that AI agents can use to retrieve application metrics, job execution details, and performance data.

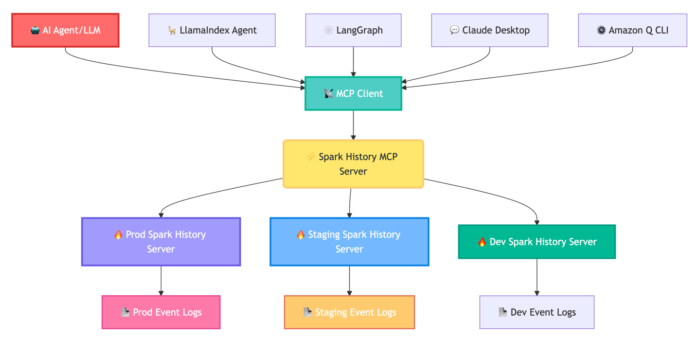

Importantly, the MCP server capabilities purely as a knowledge entry layer, enabling AI brokers akin to Amazon Q Developer CLI, Claude desktop, Strands Brokers, LlamaIndex, and LangGraph to entry and cause about your Spark knowledge. The next diagram exhibits this movement.

The Spark History Server MCP directly addresses these operational challenges by enabling AI agents to access Spark performance data programmatically. This transforms the debugging experience from manual UI navigation to conversational analysis. Instead of spending hours in the UI, you can ask, “Why did job spark-abcd fail?” and receive a root cause analysis of the failure. This lets users rely on AI agents for expert-level performance analysis and optimization recommendations, without requiring deep Spark expertise.

The MCP server provides comprehensive access to Spark telemetry across multiple granularity levels. Application-level tools retrieve execution summaries, resource utilization patterns, and success rates across job runs. Job and stage analysis tools provide execution timelines, stage dependencies, and task distribution patterns for identifying critical path bottlenecks. Task-level tools expose executor resource consumption patterns and individual operation timings for detailed optimization analysis. SQL-specific tools provide query execution plans, join strategies, and shuffle operation details for analytical workload optimization. You can review the complete set of tools available in the MCP server in the project README.
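As a rough illustration of the kind of stage-level analysis described above, the following Python sketch ranks completed stages by executor run time and notes whether they spilled. It assumes stage records shaped like those returned by the Spark History Server REST endpoint `/api/v1/applications/[app-id]/stages` (fields such as `stageId`, `status`, `executorRunTime`, `memoryBytesSpilled`, and `diskBytesSpilled`). The helper names and sample records are hypothetical, and this is not how the MCP server itself is implemented.

```python
from __future__ import annotations

from typing import Any


def stages_url(base_url: str, app_id: str) -> str:
    """Build the History Server REST URL that lists stages for one application."""
    return f"{base_url.rstrip('/')}/api/v1/applications/{app_id}/stages"


def slowest_stages(stages: list[dict[str, Any]], top_n: int = 3) -> list[dict[str, Any]]:
    """Return the top_n completed stages ordered by executor run time, longest first."""
    completed = [s for s in stages if s.get("status") == "COMPLETE"]
    ranked = sorted(completed, key=lambda s: s.get("executorRunTime", 0), reverse=True)
    return [
        {
            "stageId": s["stageId"],
            "name": s.get("name", ""),
            "executorRunTime": s.get("executorRunTime", 0),
            # Any memory or disk spill is a common hint of memory pressure.
            "spilled": s.get("memoryBytesSpilled", 0) > 0 or s.get("diskBytesSpilled", 0) > 0,
        }
        for s in ranked[:top_n]
    ]


# Illustrative sample records (made up for this sketch).
SAMPLE_STAGES = [
    {"stageId": 0, "status": "COMPLETE", "name": "read", "executorRunTime": 12000,
     "memoryBytesSpilled": 0, "diskBytesSpilled": 0},
    {"stageId": 1, "status": "FAILED", "name": "join", "executorRunTime": 90000,
     "memoryBytesSpilled": 0, "diskBytesSpilled": 0},
    {"stageId": 2, "status": "COMPLETE", "name": "aggregate", "executorRunTime": 48000,
     "memoryBytesSpilled": 1048576, "diskBytesSpilled": 524288},
]

if __name__ == "__main__":
    print(stages_url("http://localhost:18080", "spark-abcd"))
    for stage in slowest_stages(SAMPLE_STAGES, top_n=2):
        print(stage)
```

An AI agent working through the MCP server would obtain comparable data by calling the server's tools rather than the REST API directly; the sketch only shows what a single step of such an analysis might look like.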

How to use the MCP server

MCP is an open standard that enables secure connections between AI applications and data sources. This MCP server implementation supports both Streamable HTTP and STDIO protocols for maximum flexibility.

The MCP server runs as a local service within your infrastructure, either on Amazon Elastic Compute Cloud (Amazon EC2) or Amazon EKS, connecting directly to your Spark History Server instances. You maintain full control over data access, authentication, security, and scalability.

All the tools are available over both the Streamable HTTP and STDIO protocols:

Streamable HTTP – Full advanced tools for LlamaIndex, LangGraph, and programmatic integrations

STDIO mode – Core functionality for Amazon Q CLI and Claude Desktop

For deployment, it supports multiple Spark History Server instances and provides deployments with AWS Glue, Amazon EMR, and Kubernetes.
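Both transports carry the same JSON-RPC 2.0 messages defined by the MCP specification. The Python sketch below builds a `tools/call` request and frames it for the STDIO transport, where each message is a single line of JSON. The tool name `get_application` and its `app_id` argument are hypothetical placeholders; check the project README for the actual tool names and parameters.

```python
from __future__ import annotations

import json
from itertools import count
from typing import Any

# Simple source of unique JSON-RPC request IDs for this sketch.
_request_ids = count(1)


def tool_call_request(tool_name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an MCP tools/call request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def to_stdio_frame(message: dict[str, Any]) -> str:
    """Serialize a message for the STDIO transport: one JSON object per line."""
    return json.dumps(message, separators=(",", ":")) + "\n"


if __name__ == "__main__":
    # Hypothetical tool name and argument, used only for illustration.
    request = tool_call_request("get_application", {"app_id": "spark-abcd"})
    print(to_stdio_frame(request), end="")
```

Over Streamable HTTP, the same message body would be sent in an HTTP POST to the server's MCP endpoint. In practice an MCP client library or the AI agent handles this framing, along with the initialization handshake that precedes any tool call.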

Quick local setup

To set up the Spark History Server MCP locally, run the following commands in your terminal:

git clone

cd spark-history-server-mcp

# Install Task (if not already installed)

brew install go-task # macOS, see for others

# Setup and start testing

task install # Install dependencies

task start-spark-bg # Start Spark History Server with sample data

task start-mcp-bg # Start MCP Server

task start-inspector-bg # Start MCP Inspector

# Opens for interactive testing

# When done, run task stop-all

For comprehensive configuration examples and integration guides, refer to the project README.

Integration with AWS managed services

The Spark History Server MCP integrates seamlessly with AWS managed services, offering enhanced debugging capabilities for Amazon EMR and AWS Glue workloads. This integration adapts to the various Spark History Server deployments available across these AWS managed services while providing a consistent, conversational debugging experience:

AWS Glue – Users can use the Spark History Server MCP integration with a self-managed Spark History Server on an EC2 instance or one launched locally in a Docker container. Setting up the integration is straightforward. Follow the step-by-step instructions in the README to configure the MCP server with your preferred Spark History Server deployment. Using this integration, AWS Glue users can analyze AWS Glue ETL job performance regardless of their Spark History Server deployment approach.

Amazon EMR – Integration with Amazon EMR uses the service-managed Persistent UI feature for EMR on Amazon EC2. The MCP server requires only an EMR cluster Amazon Resource Name (ARN) to discover the available Persistent UI on the EMR cluster, or to automatically configure a new one with token-based authentication when it’s missing. This eliminates the need to manually configure a Spark History Server while providing secure access to detailed execution data from EMR Spark applications. Using this integration, data engineers can ask questions about their Spark workloads, such as “Can you get the job bottleneck for spark-?” The MCP server responds with a detailed analysis of execution patterns, resource utilization differences, and targeted optimization recommendations, so teams can fine-tune their Spark applications for optimal performance across AWS services.

For comprehensive configuration examples and integration details, refer to the AWS Integration Guides.
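Because the EMR integration is driven by a cluster ARN, it can help to validate the ARN before handing it to any tooling. The following Python sketch splits an EMR cluster ARN of the form `arn:PARTITION:elasticmapreduce:REGION:ACCOUNT:cluster/CLUSTER_ID` into its parts. The function and type names are hypothetical, the sample ARN is made up, and this is not the MCP server's own parsing logic.

```python
from __future__ import annotations

from typing import NamedTuple


class EmrClusterArn(NamedTuple):
    partition: str
    region: str
    account_id: str
    cluster_id: str


def parse_emr_cluster_arn(arn: str) -> EmrClusterArn:
    """Split an EMR cluster ARN into its components, raising ValueError if malformed."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "elasticmapreduce":
        raise ValueError(f"Not an EMR ARN: {arn!r}")
    resource_type, _, cluster_id = parts[5].partition("/")
    # EMR cluster IDs start with the "j-" prefix.
    if resource_type != "cluster" or not cluster_id.startswith("j-"):
        raise ValueError(f"Not an EMR cluster ARN: {arn!r}")
    return EmrClusterArn(
        partition=parts[1], region=parts[3], account_id=parts[4], cluster_id=cluster_id
    )


if __name__ == "__main__":
    # Made-up example ARN for illustration only.
    sample = "arn:aws:elasticmapreduce:us-east-1:123456789012:cluster/j-EXAMPLE12345"
    print(parse_emr_cluster_arn(sample))
```

The extracted Region and cluster ID are the values that EMR API calls typically need, which is why the ARN alone is enough to locate a cluster.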

Looking ahead: The future of AI-assisted Spark optimization

This open source release establishes the foundation for enhanced AI-powered Spark capabilities, including deeper integration with AWS Glue and Amazon EMR to simplify the debugging and optimization experience for customers using these Spark environments. The Spark History Server MCP is open source under the Apache 2.0 license. We welcome contributions including new tool extensions, integrations, documentation improvements, and deployment experiences.

Get started today

Transform your Spark monitoring and optimization workflow today by providing AI agents with intelligent access to your performance data.

Explore the GitHub repository

Review the comprehensive README for setup and integration instructions

Join discussions and submit issues for enhancements

Contribute new features and deployment patterns

Acknowledgment: A special thanks to everyone who contributed to the development and open-sourcing of the Apache Spark History Server MCP: Vaibhav Naik, Akira Ajisaka, Rich Bowen, Savio Dsouza.

About the authors

Manabu McCloskey is a Solutions Architect at Amazon Web Services. He focuses on contributing to open source application delivery tooling and works with AWS strategic customers to design and implement enterprise solutions using AWS resources and open source technologies. His interests include Kubernetes, GitOps, Serverless, and Souls Series.

Vara Bonthu is a Principal Open Source Specialist SA leading Data on EKS and AI on EKS at AWS, driving open source initiatives and helping AWS customers across diverse organizations. He specializes in open source technologies, data analytics, AI/ML, and Kubernetes, with extensive experience in development, DevOps, and architecture. Vara focuses on building highly scalable data and AI/ML solutions on Kubernetes, enabling customers to make the most of cutting-edge technology for their data-driven initiatives.

Andrew Kim is a Software Development Engineer at AWS Glue, with a deep passion for distributed systems architecture and AI-driven solutions, specializing in intelligent data integration workflows and cutting-edge feature development on Apache Spark. Andrew focuses on re-inventing and simplifying solutions to complex technical problems, and he enjoys creating web apps and producing music in his free time.

Shubham Mehta is a Senior Product Manager at AWS Analytics. He leads generative AI feature development across services such as AWS Glue, Amazon EMR, and Amazon MWAA, using AI/ML to simplify and enhance the experience of data practitioners building data applications on AWS.

Kartik Panjabi is a Software Development Manager on the AWS Glue team. His team builds generative AI features and distributed systems for data integration.

Mohit Saxena is a Senior Software Development Manager on the AWS Data Processing Team (AWS Glue and Amazon EMR). His team focuses on building distributed systems that give customers new AI/ML-driven capabilities to efficiently transform petabytes of data across data lakes on Amazon S3 and databases and data warehouses in the cloud.
