Customers new to Amazon OpenSearch Service often ask how many shards their indexes need. An index is a collection of shards, and an index's shard count can affect both indexing and search request efficiency. OpenSearch Service can take in large amounts of data, split it into smaller units called shards, and distribute those shards across a dynamically changing set of instances.

In this post, we provide some practical guidance for determining the ideal shard count for your use case.

Shards overview

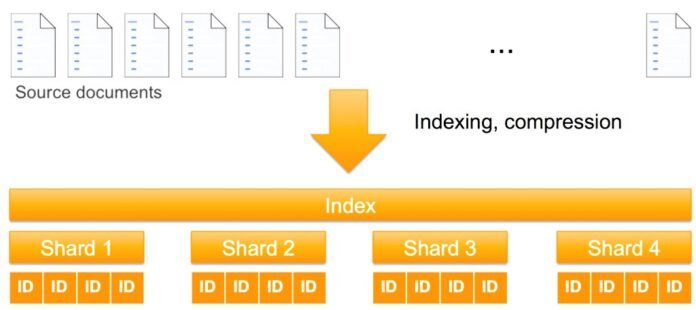

A search engine has two jobs: create an index from a set of documents, and search that index to compute the best-matching documents. If your index is small enough, a single partition on a single machine can store that index. For larger document sets, where a single machine isn't large enough to hold the index or can't compute your search results effectively, the index can be split into partitions. These partitions are called shards in OpenSearch Service. By default, each document is routed to a shard chosen by using a hash of that document's ID.
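The hash-based routing described above can be sketched in a few lines of Python. This is only an illustration: OpenSearch uses its own internal hash function, and the MD5 hash and the `route_to_shard` helper below are stand-ins chosen for this example, not the service's implementation.

```python
import hashlib

def route_to_shard(doc_id: str, num_primary_shards: int) -> int:
    """Pick a shard number from a hash of the document ID (illustrative only)."""
    # Stand-in hash: MD5 is used here only because it is stable across runs.
    digest = hashlib.md5(doc_id.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest[:4], "big")
    # The same ID always lands on the same shard for a fixed shard count.
    return hash_value % num_primary_shards

for doc_id in ("order-1001", "order-1002", "order-1003"):
    print(doc_id, "->", route_to_shard(doc_id, 3))
```

Because the shard number depends on the shard count, the same ID could map to a different shard if the count changed, which is one way to see why the primary shard count is fixed when the index is created.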

A shard is both a unit of storage and a unit of computation. OpenSearch Service distributes shards across nodes in your cluster to parallelize index storage and processing. If you add more nodes to an OpenSearch Service domain, it automatically rebalances the shards by moving them between the nodes. The following figure illustrates this process.

As storage, primary shards are distinct from one another. The document set in one shard doesn't overlap the document set in other shards. This approach makes shards independent for storage.

As computational units, shards are also distinct from one another. Each shard is an instance of an Apache Lucene index that computes results on the documents it holds. Because all the shards together make up the index, they must work together to process each query and update request for that index. To process a query, OpenSearch Service routes the query to a data node holding a primary or replica copy of each shard. Each node computes its response locally, and the shard responses are aggregated into a final response. To process a write request (a document ingestion or an update to an existing document), OpenSearch Service routes the request to the appropriate shards: primary first, then replica. Because most writes are bulk requests, all shards of an index are typically used.
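The query flow described above (each shard computes its own hits, then the responses are merged) can be sketched as follows. This is a toy model under stated assumptions: the shards are plain dictionaries, the score is a simple term count, and the `search_shard` and `search_index` helpers are hypothetical names for this example rather than anything in OpenSearch.

```python
import heapq

def search_shard(shard_docs, query_term):
    """Score the documents held by one shard; the score is a simple term count."""
    hits = []
    for doc_id, text in shard_docs.items():
        score = text.lower().split().count(query_term)
        if score > 0:
            hits.append((score, doc_id))
    return hits

def search_index(shards, query_term, size=3):
    """Send the query to every shard, then merge the per-shard hits into one top-N list."""
    all_hits = []
    for shard_docs in shards:
        all_hits.extend(search_shard(shard_docs, query_term))
    return heapq.nlargest(size, all_hits)

shards = [
    {"doc1": "shard sizing for search", "doc2": "log analytics"},
    {"doc3": "search search latency", "doc4": "vector search"},
]
print(search_index(shards, "search"))
```

Each additional shard adds one more partial result to gather and merge, which is the networking and coordination cost the sizing guidance later in this post weighs against parallelism.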

The two different types of shards

There are two kinds of shards in OpenSearch Service: primary and replica shards. In an OpenSearch index configuration, the primary shard count serves to partition data, and the replica count is the number of full copies of the primary shards. For example, if you configure your index with 5 primary shards and 1 replica, you will have a total of 10 shards: 5 primary shards and 5 replica shards.
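The arithmetic in that example generalizes to any primary and replica count. A minimal sketch, where `total_shards` is a helper name made up for this illustration:

```python
def total_shards(primary_shards: int, replicas: int) -> int:
    """Total shard copies in an index: the primaries plus one full set per replica."""
    # Each replica is one more full copy of every primary shard.
    return primary_shards * (1 + replicas)

print(total_shards(5, 1))  # 10, matching the example above
```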

The primary shard receives writes first. By default, the primary shard passes documents to the replica shards for indexing. OpenSearch Service's O-series instances use segment replication instead. By default, OpenSearch Service waits for acknowledgment from replica shards before confirming a successful write operation to the client. Primary and replica shards provide redundant data storage, improving cluster resilience against node failures. In the following example, the OpenSearch Service domain has three data nodes. There are two indexes, green (darker) and blue (lighter), each of which has three shards. The primary for each shard is outlined in red. Each shard also has a single replica, shown with no outline.

OpenSearch Service maps shards to nodes based on a number of rules. The most basic rule is that a primary shard and its replicas are never placed on the same node. If a data node fails, OpenSearch Service automatically creates another data node, re-replicates shards from surviving nodes, and redistributes them across the cluster. If primary shards fail, replica shards are promoted to primary to prevent data loss and provide continuous indexing and search operations.
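The basic placement rule and replica promotion can be illustrated with a toy model. The round-robin placement below is an assumption made for this sketch only. The real allocator applies many more rules, and `place_shards` and `promote_on_failure` are hypothetical helper names.

```python
def place_shards(num_shards: int, num_nodes: int):
    """Toy placement: one primary and one replica per shard, on different nodes."""
    if num_nodes < 2:
        raise ValueError("need at least two nodes to separate a primary from its replica")
    placement = {}
    for shard in range(num_shards):
        primary_node = shard % num_nodes
        replica_node = (shard + 1) % num_nodes  # always differs from primary_node
        placement[shard] = {"primary": primary_node, "replica": replica_node}
    return placement

def promote_on_failure(placement, failed_node):
    """If a node holding a primary fails, the surviving replica becomes the primary."""
    for copies in placement.values():
        if copies["primary"] == failed_node:
            copies["primary"], copies["replica"] = copies["replica"], None
        elif copies["replica"] == failed_node:
            copies["replica"] = None
    return placement

placement = place_shards(num_shards=3, num_nodes=3)
print(promote_on_failure(placement, failed_node=0))
```

In this model, a shard whose replica slot is `None` after a failure is still available but no longer redundant, until the copy is re-replicated onto another node.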

So how many shards? Focus on storage first

There are three types of workloads that OpenSearch users typically run: application search, log analytics, and vector database. Search workloads are read-heavy and latency sensitive. They're usually tied to an application to enhance search capability and performance. A common pattern is to index the data from relational databases to give users more filtering capabilities and provide efficient full-text search.

Log workloads are write-heavy and receive data continuously from applications and network devices. Typically, that data is put into a changing set of indexes, based on an indexing time period such as daily or monthly, depending on the use case. Instead of indexing based on time period, you can use rollover policies based on index size or document count to make sure shard sizing best practices are followed.

Vector database workloads use the OpenSearch Service k-Nearest Neighbor (k-NN) plugin to index vectors from an embedding pipeline. This enables semantic search, which measures relevance using the meaning of words rather than exactly matching the words. The embedding model from the pipeline maps multimodal data into a vector with potentially thousands of dimensions. OpenSearch Service searches across vectors to provide search results.

To determine the optimal number of shards for your workload, start with your index storage requirements. Although storage requirements can vary widely, a general guideline is to estimate index storage at 1.25 times the source data size (a 1:1.25 ratio). Also, compression algorithms default to favoring performance, but can be adjusted to reduce size. When it comes to shard sizes, consider the following based on the workload:

Search – Divide your total storage requirement by 30 GB.

If search latency is high, use a smaller shard size (as low as 10 GB), increasing the shard count and parallelism for query processing.

Increasing the shard count reduces the amount of work at each shard (they have fewer documents to process), but also increases the amount of networking for distributing the query and gathering the response. To balance these competing concerns, examine your average hit count. If your hit count is high, use smaller shards. If your hit count is low, use larger shards.

Logs – Divide the storage requirement for your desired time period by 50 GB.

If using an Index State Management (ISM) policy with rollover, consider setting the min_size parameter to 50 GB.

Increasing the shard count for logs workloads similarly improves parallelism. However, most queries for logs workloads have a small hit count, so query processing is light. Logs workloads work well with larger shard sizes, but shard smaller if your query workload is heavier.

Vector – Divide your total storage requirement by 50 GB.

Reducing shard size (as low as 10 GB) can improve search latency when your vector queries are hybrid with a heavy lexical component. Conversely, increasing shard size (as high as 75 GB) can improve latency when your queries are pure vector queries.

OpenSearch provides other optimization techniques for vector databases, including vector quantization and disk-based search.

k-NN queries behave like highly filtered search queries, with low hit counts. Therefore, larger shards tend to work well. Be prepared to shard smaller when your queries are heavier.
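The sizing guidance above can be turned into a small calculation. This is a minimal sketch: the function names are made up for this example, the target sizes are the starting points quoted above rather than hard limits, and rounding up to a whole shard is an assumption of the sketch. For logs, pass the source data size for one time period.

```python
import math

# Target shard sizes in GB, taken from the guidance above.
TARGET_SHARD_GB = {"search": 30, "logs": 50, "vector": 50}

def estimate_index_gb(source_gb: float, overhead: float = 1.25) -> float:
    """Estimate index storage from source data size using the 1:1.25 guideline."""
    return source_gb * overhead

def primary_shard_count(source_gb: float, workload: str) -> int:
    """Divide estimated index storage by the workload's target shard size, rounding up."""
    index_gb = estimate_index_gb(source_gb)
    return max(1, math.ceil(index_gb / TARGET_SHARD_GB[workload]))

print(primary_shard_count(200, "search"))  # 200 GB source -> 250 GB index -> 9 shards
print(primary_shard_count(200, "logs"))    # 250 GB index / 50 GB -> 5 shards
print(primary_shard_count(10, "vector"))   # 12.5 GB index -> 1 shard
```

Treat the result as a first estimate, then adjust the target size within the ranges given above based on observed latency and hit counts.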

Don't be afraid of using a single shard

If your index contains less data than the recommended shard size (30 GB for search and 50 GB otherwise), we recommend that you use a single primary shard. Although it's tempting to add more shards thinking it will improve performance, this approach can actually be counterproductive for smaller datasets because of the added networking. Each shard you add to an index distributes the processing of requests for that index across an additional node. Performance can decrease because distributed operations add overhead to split and combine results across nodes when a single node could handle the request on its own.

Set the shard count

When you create an OpenSearch index, you set the primary and replica counts for that index. Because you can't dynamically change the primary shard count of an existing index, you have to make this important configuration decision before indexing your first document.

You set the shard count using the OpenSearch create index API. For example (provide your OpenSearch Service domain endpoint URL and index name):

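    # Create an index with 3 primary shards and 1 replica of each primary.
    # <domain-endpoint> and <index-name> are placeholders for your own values.
    curl -XPUT "https://<domain-endpoint>/<index-name>" \
      -H 'Content-Type: application/json' \
      -d '{
        "settings": {
          "index": {
            "number_of_shards": 3,
            "number_of_replicas": 1
          }
        }
      }'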

If you have a single index workload, you only have to do this one time, when you first create your index. If you have a rolling index workload, you create a new index regularly. Use the index template API to automatically apply settings to all new indexes whose name matches the template. The following example sets the shard count for any index whose name has the prefix logs (provide your OpenSearch Service domain endpoint URL and index template name):

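    # Apply 3 primary shards and 1 replica to every new index named logs*.
    # <domain-endpoint> and <template-name> are placeholders for your own values.
    curl -XPUT "https://<domain-endpoint>/_index_template/<template-name>" \
      -H 'Content-Type: application/json' \
      -d '{
        "index_patterns": ["logs*"],
        "template": {
          "settings": {
            "index": {
              "number_of_shards": 3,
              "number_of_replicas": 1
            }
          }
        }
      }'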

Conclusion

This post outlined basic shard sizing best practices, but additional factors might influence the ideal index configuration you choose to implement for your OpenSearch Service domain.

For more information about sharding, refer to Optimize OpenSearch index shard sizes or Shard strategy. Both resources can help you fine-tune your OpenSearch Service domain to make the best use of its available compute resources.

About the authors

Tom Burns is a Senior Cloud Support Engineer at AWS and is based in the NYC area. He is a subject matter expert in Amazon OpenSearch Service and engages with customers for critical event troubleshooting and improving the supportability of the service. Outside of work, he enjoys playing with his cats, playing board games with friends, and playing competitive games online.

Ron Miller is a Solutions Architect based out of NYC, supporting transportation and logistics customers. Ron works closely with AWS's Data & Analytics specialist organization to promote and support OpenSearch. On the weekend, Ron is a shade tree mechanic and trains to complete triathlons.
