A new study appears to lend credence to allegations that OpenAI trained at least some of its AI models on copyrighted content.

OpenAI is embroiled in suits brought by authors, programmers, and other rights-holders who accuse the company of using their works (books, codebases, and so on) to develop its models without permission. OpenAI has long claimed a fair use defense, but the plaintiffs in these cases argue that there isn’t a carve-out in U.S. copyright law for training data.

The study, which was co-authored by researchers at the University of Washington, the University of Copenhagen, and Stanford, proposes a new method for identifying training data “memorized” by models behind an API, like OpenAI’s.

Models are prediction engines. Trained on a lot of data, they learn patterns; that’s how they’re able to generate essays, images, and more. Most of the outputs aren’t verbatim copies of the training data, but owing to the way models “learn,” some inevitably are. Image models have been found to regurgitate screenshots from movies they were trained on, while language models have been observed effectively plagiarizing news articles.

The study’s method relies on words that the co-authors call “high-surprisal,” that is, words that stand out as uncommon in the context of a larger body of work. For example, the word “radar” in the sentence “Jack and I sat perfectly still with the radar humming” would be considered high-surprisal because it’s statistically less likely than words such as “engine” or “radio” to appear before “humming.”
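In information theory, a word’s surprisal is the negative logarithm of its probability given the surrounding context, so the less likely a word is, the higher its surprisal. The sketch below illustrates that idea for the blank in the example sentence. The probabilities, the candidate words, and the helper names are hypothetical and chosen only for illustration; they are not taken from the study, and in practice such probabilities would come from a language model.

```python
import math

# Hypothetical probabilities for the word filling the blank in the example
# sentence. Illustrative values only; NOT from the study.
NEXT_WORD_PROBS = {
    "engine": 0.30,
    "radio": 0.25,
    "fan": 0.10,
    "radar": 0.01,
}


def surprisal_bits(prob: float) -> float:
    """Surprisal (self-information) of an event with probability `prob`, in bits."""
    if not 0.0 < prob <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(prob)


def rank_by_surprisal(probs: dict[str, float]) -> list[tuple[str, float]]:
    """Return (word, surprisal) pairs sorted from most to least surprising."""
    scored = [(word, surprisal_bits(p)) for word, p in probs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for word, bits in rank_by_surprisal(NEXT_WORD_PROBS):
        print(f"{word:>7}: {bits:.2f} bits")
```

With these toy numbers, “radar” (probability 0.01) scores about 6.6 bits, far more than “engine” or “radio,” which is what makes it a high-surprisal candidate.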

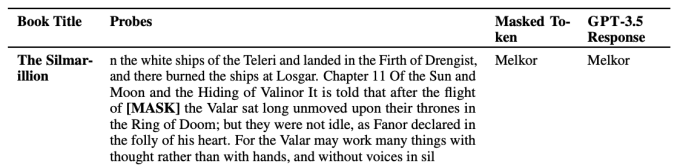

The co-authors probed several OpenAI models, including GPT-4 and GPT-3.5, for signs of memorization by removing high-surprisal words from snippets of fiction books and New York Times pieces and having the models try to “guess” which words had been masked. If the models managed to guess correctly, it’s likely they memorized the snippet during training, the co-authors concluded.

An example of having a model “guess” a high-surprisal word. Image Credits: OpenAI
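The masking probe described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the study’s implementation: the prompt wording, the exact-match scoring, the helper names, and the stub “models” are all hypothetical, and the masked word is supplied by hand rather than chosen by a surprisal score.

```python
import re
from typing import Callable

MASK = "[MASK]"


def mask_word(snippet: str, target: str) -> str:
    """Replace the first whole-word occurrence of `target` with MASK."""
    pattern = r"\b" + re.escape(target) + r"\b"
    masked, count = re.subn(pattern, MASK, snippet, count=1)
    if count == 0:
        raise ValueError(f"{target!r} not found in snippet")
    return masked


def build_prompt(masked_snippet: str) -> str:
    # Hypothetical prompt wording; not the prompt used in the study.
    return (
        f"Fill in {MASK} in the passage below with one word. "
        "Reply with that word only.\n\n" + masked_snippet
    )


def normalize(word: str) -> str:
    """Lowercase and strip surrounding whitespace and punctuation."""
    return word.strip().strip(".,!?\"'").lower()


def probe(snippet: str, target: str, query_model: Callable[[str], str]) -> bool:
    """True if the model restores the masked word exactly (a hint of memorization)."""
    prompt = build_prompt(mask_word(snippet, target))
    return normalize(query_model(prompt)) == normalize(target)


def exact_match_rate(items: list[tuple[str, str]],
                     query_model: Callable[[str], str]) -> float:
    """Fraction of (snippet, masked word) pairs the model guesses correctly."""
    if not items:
        return 0.0
    hits = sum(probe(snippet, target, query_model) for snippet, target in items)
    return hits / len(items)


if __name__ == "__main__":
    # Hypothetical snippet; the stubs stand in for a real model API call.
    items = [("The keeper wound the brass chronometer at dusk.", "chronometer")]

    def memorizing_stub(prompt: str) -> str:
        return "Chronometer."

    def guessing_stub(prompt: str) -> str:
        return "clock"

    print(exact_match_rate(items, memorizing_stub))
    print(exact_match_rate(items, guessing_stub))
```

Passing the model as a plain callable keeps the sketch independent of any particular API client; a real probe would wrap an actual model call and run over many snippets, so that a rate well above chance across a collection, rather than a single lucky guess, is what would suggest memorization.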

According to the results of the tests, GPT-4 showed signs of having memorized portions of popular fiction books, including books in a dataset of copyrighted ebook samples called BookMIA. The results also suggested that the model memorized portions of New York Times articles, albeit at a comparatively lower rate.

Abhilasha Ravichander, a doctoral student at the University of Washington and a co-author of the study, told TechCrunch that the findings shed light on the “contentious data” models might have been trained on.

“In order to have large language models that are trustworthy, we need to have models that we can probe and audit and examine scientifically,” Ravichander said. “Our work aims to provide a tool to probe large language models, but there is a real need for greater data transparency in the whole ecosystem.”

OpenAI has long advocated for looser restrictions on developing models using copyrighted data. While the company has certain content licensing deals in place and offers opt-out mechanisms that allow copyright owners to flag content they’d prefer the company not use for training purposes, it has lobbied several governments to codify “fair use” rules around AI training approaches.