
It started with the announcement of OpenAI’s o1 model in Sept. 2024, but really took off with the DeepSeek R1 release in Jan. 2025.

Now, it seems that most major AI model providers and trainers are in a new race to deliver better, faster, cheaper “reasoning” AI language models: ones that may take a little longer to respond to a human user, but ideally do so with better, more comprehensive, more thoroughly “reasoned” answers. This class of models gets there by performing “chain-of-thought,” reflecting on its own conclusions and interrogating them for veracity before responding.

ByteDance, the Chinese web media giant and parent of TikTok, is the latest to join the party with the announcement and publication of the technical paper behind Seed-Thinking-v1.5, an upcoming large language model (LLM) designed to advance reasoning performance across both science, technology, engineering and math (STEM) fields and general-purpose domains.

The model isn’t yet available for download or use, and it’s unclear what the licensing terms will be: whether it will be proprietary/closed source, open source/free for all to use and modify at will, or somewhere in between. Still, the technical paper provides some noteworthy details that are worth going over now, ahead of whenever the model is made available.

Built atop the increasingly popular Mixture-of-Experts (MoE) architecture

Like Meta’s new Llama 4 and Mistral’s Mixtral before it, Seed-Thinking-v1.5 is built using a Mixture-of-Experts (MoE) architecture.

This architecture is designed to make models more efficient. Rather than running every parameter on every input, an MoE model contains many “expert” sub-networks plus a learned router that sends each token to only a few of them, so total capacity can grow without a matching increase in per-token compute.

In this case, the MoE architecture means that Seed-Thinking-v1.5 activates only 20 billion of its 200 billion total parameters at a time.
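The routing idea can be sketched in a few lines of Python. This is a toy illustration, not ByteDance’s router: the article does not say how many experts Seed-Thinking-v1.5 has, how many are selected per token, or how its gating works, so the eight scalar “experts,” the top-2 choice and the `top_k_gate`/`moe_forward` helpers below are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def top_k_gate(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(
    x: float,
    experts: Sequence[Callable[[float], float]],
    router_logits: Sequence[float],
    k: int = 2,
) -> float:
    """Run only the k selected experts and mix their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in top_k_gate(router_logits, k))


# Hypothetical setup: 8 scalar "experts", each scaling its input by a different factor.
EXPERTS = [lambda x, s=s: s * x for s in range(1, 9)]
LOGITS = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.0]  # router scores for one token

if __name__ == "__main__":
    print(top_k_gate(LOGITS, k=2))            # experts 1 and 4, weight 0.5 each
    print(moe_forward(1.0, EXPERTS, LOGITS))  # 0.5 * 2.0 + 0.5 * 5.0
```

With 2 of 8 experts selected, only a quarter of the expert weights touch this token; the reported Seed-Thinking-v1.5 ratio (20 billion active of 200 billion total) works out to 10% of parameters per token.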

ByteDance says in its technical paper, published to GitHub, that Seed-Thinking-v1.5 prioritizes structured reasoning and thoughtful response generation.

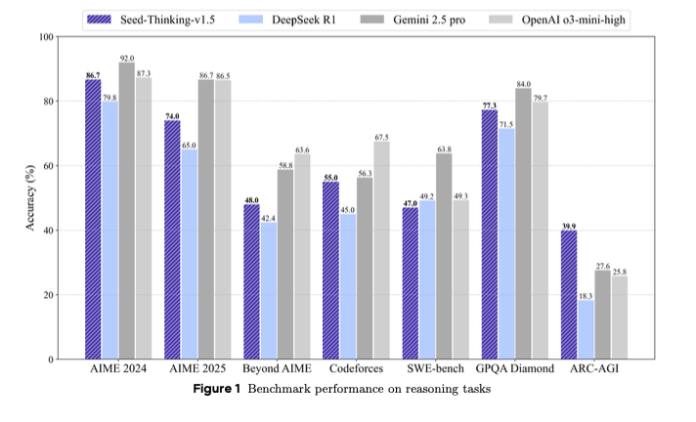

The results nearly speak for themselves, with Seed-Thinking-v1.5 outperforming DeepSeek R1 and approaching Google’s newly released Gemini 2.5 Pro and OpenAI’s o3-mini-high reasoner on many third-party benchmark evaluations. It even exceeds those two on the ARC-AGI benchmark, which measures progress toward artificial general intelligence (AGI), seen as the goal or “Holy Grail” of AI. OpenAI defines AGI as highly autonomous systems that outperform humans at most economically valuable work.

Positioned as a compact yet capable alternative to larger state-of-the-art models, Seed-Thinking-v1.5 achieves competitive benchmark results. It also introduces innovations in reinforcement learning (RL), training data curation and AI infrastructure.

Performance benchmarks and model focus

Seed-Thinking-v1.5 shows strong performance on a suite of challenging tasks, scoring 86.7% on AIME 2024, 55.0% pass@8 on Codeforces and 77.3% on the GPQA science benchmark. These results place it close to or on par with models like OpenAI’s o3-mini-high and Google’s Gemini 2.5 Pro on specific reasoning metrics.
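pass@8 means a problem counts as solved if at least one of eight sampled attempts is correct. The article does not say exactly how ByteDance computed its Codeforces figure; a common choice is the unbiased estimator from OpenAI’s HumanEval paper (Chen et al., 2021), which draws n ≥ k samples per problem, counts the c correct ones and averages the per-problem estimate. A sketch, with the per-problem counts in the example invented for illustration:

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset must contain at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical results: (samples drawn, samples correct) for three problems.
    toy_results = [(16, 0), (16, 2), (16, 9)]
    print(f"pass@8 = {benchmark_pass_at_k(toy_results, k=8):.3f}")
```

When exactly eight attempts are drawn (n = k = 8), the estimator reduces to the plain “did any of the eight succeed” check.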

On non-reasoning tasks, the model was evaluated through human preference comparisons and achieved an 8.0% higher win rate over DeepSeek R1, suggesting that its strengths generalize beyond logic- or math-heavy challenges.

To address saturation in standard benchmarks like AIME, ByteDance introduced BeyondAIME, a new, harder math benchmark with curated problems designed to resist memorization and better discriminate between models. This and the Codeforces evaluation set are expected to be publicly released to support future research.

Data strategy

Training data played a central role in the model’s development. For supervised fine-tuning (SFT), the team curated 400,000 samples, including 300,000 verifiable (STEM, logic and coding tasks) and 100,000 non-verifiable problems like creative writing and role-playing.

For RL training, data was segmented into:

Verifiable problems: 100,000 rigorously filtered STEM questions and logic puzzles with known answers, sourced from elite competitions and expert review.

Non-verifiable tasks: Human-preference datasets focused on open-ended prompts, evaluated using pairwise reward models.
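A common way to train a pairwise reward model is the Bradley-Terry objective: the model scores two responses to the same prompt, and the loss pushes the human-preferred response’s score above the other’s. The article does not say how ByteDance’s pairwise reward models are structured or trained, so the sketch below shows only this standard formulation, with scalar scores standing in for a real model’s outputs.

```python
import math


def preference_probability(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry probability that the 'chosen' response is preferred (stable sigmoid)."""
    margin = r_chosen - r_rejected
    if margin >= 0:
        return 1.0 / (1.0 + math.exp(-margin))
    e = math.exp(margin)
    return e / (1.0 + e)


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """-log sigmoid(r_chosen - r_rejected), computed without overflow."""
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Hypothetical reward-model scores for a preferred and a rejected response.
    print(pairwise_loss(1.5, 0.2))  # small loss: ranking already agrees with the human label
    print(pairwise_loss(0.2, 1.5))  # larger loss: ranking disagrees
```

Because only the score difference matters, the reward model learns a relative ranking rather than an absolute quality scale, which suits open-ended prompts that have no single correct answer.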

The STEM data leaned heavily on advanced mathematics, which accounted for over 80% of the problem set. Additional logic data included tasks like Sudoku and 24-point puzzles, with adjustable difficulty to match model progress.
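Puzzles like 24-point are useful for RL precisely because a program can grade them. The game asks for an arithmetic expression that uses each of four given numbers exactly once, with +, −, × and ÷, to reach 24. The article does not describe ByteDance’s puzzle format or checker; the sketch below, including the `verify_24` name and the choice to accept answers as Python-syntax expressions, is an assumption meant only to show what “verifiable” means here.

```python
import ast
from collections import Counter
from fractions import Fraction
from typing import Callable, Dict, List, Sequence

# Only the four arithmetic operators are allowed; anything else is rejected.
_OPS: Dict[type, Callable[[Fraction, Fraction], Fraction]] = {
    ast.Add: lambda a, b: a + b,
    ast.Sub: lambda a, b: a - b,
    ast.Mult: lambda a, b: a * b,
    ast.Div: lambda a, b: a / b,  # exact Fraction division; raises ZeroDivisionError on /0
}


def _evaluate(node: ast.AST, used: List[int]) -> Fraction:
    """Recursively evaluate an expression tree, recording every integer literal used."""
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left, used), _evaluate(node.right, used))
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    raise ValueError(f"disallowed syntax: {ast.dump(node)}")


def verify_24(numbers: Sequence[int], expression: str, target: int = 24) -> bool:
    """True iff `expression` uses exactly `numbers` (as a multiset) and equals `target`."""
    used: List[int] = []
    try:
        value = _evaluate(ast.parse(expression, mode="eval").body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return False
    return Counter(used) == Counter(numbers) and value == target


if __name__ == "__main__":
    print(verify_24([8, 3, 8, 3], "8/(3-8/3)"))  # needs an exact fractional intermediate
    print(verify_24([1, 2, 3, 4], "4*3*2"))      # rejected: the 1 is never used
```

Exact `Fraction` arithmetic matters here: a float-based checker could misgrade answers like `8/(3-8/3)`, whose intermediate value 1/3 is not exactly representable in binary floating point.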

Reinforcement learning approach

Reinforcement learning in Seed-Thinking-v1.5 is powered by custom actor-critic (VAPO) and policy-gradient (DAPO) frameworks, developed to address known instabilities in RL training. These methods reduce reward signal sparsity and improve training stability, especially in long chain-of-thought (CoT) settings.
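To give a sense of what such policy-gradient changes look like, here is a simplified sketch of two ideas from the published DAPO method: group-relative advantages (each sampled response’s reward is standardized against the other responses to the same prompt) and a “clip-higher” surrogate whose upper clip bound is looser than the lower one, with the loss averaged over all tokens rather than per sequence. The ε defaults follow the values reported in the DAPO paper (0.2 low, 0.28 high) but are assumptions here, since the article gives no Seed-Thinking-v1.5 settings; the actor-critic VAPO side, which also trains a value model, is not shown.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence


def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Standardize each response's reward within its group of samples for one prompt."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clip_higher_loss(
    logp_new: Sequence[Sequence[float]],  # logp_new[i][t]: token t of response i, current policy
    logp_old: Sequence[Sequence[float]],  # same tokens under the policy that sampled them
    rewards: Sequence[float],             # one scalar reward per response
    eps_low: float = 0.2,
    eps_high: float = 0.28,
) -> float:
    """Token-level clipped surrogate loss with an asymmetric (decoupled) clip range."""
    advantages = group_advantages(rewards)
    total, n_tokens = 0.0, 0
    for new_seq, old_seq, adv in zip(logp_new, logp_old, advantages):
        for lp_new, lp_old in zip(new_seq, old_seq):
            ratio = math.exp(lp_new - lp_old)
            clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
            total += min(ratio * adv, clipped * adv)  # pessimistic PPO-style bound
            n_tokens += 1
    return -total / n_tokens  # average over every token in the group, so long answers count fully
```

The looser upper bound lets low-probability tokens in well-rewarded responses gain probability faster than a symmetric clip would allow, which the DAPO authors motivate as a guard against entropy collapse.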

Reward models play a critical role in supervising RL outputs. ByteDance introduced two key tools:

Seed-Verifier: An LLM-based verifier, guided by human-written rules, that checks whether generated and reference answers are mathematically equivalent.

Seed-Thinking-Verifier: A step-by-step, reasoning-based judge that improves judgment consistency and resists reward hacking.

This two-tiered reward system enables nuanced evaluation of both simple and complex tasks.
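Seed-Verifier is itself an LLM, which is the point: equivalence checking has to go beyond string matching. For comparison, the sketch below is the kind of rule-based checker such a verifier improves on. It treats answers like `0.5`, `1/2` and `\boxed{1/2}` as equal by parsing them into exact fractions, and falls back to a normalized string comparison otherwise. Everything here, including the `\boxed{}` convention and the function names, is an illustrative assumption, not ByteDance’s code.

```python
from fractions import Fraction
from typing import Optional


def _strip_wrappers(answer: str) -> str:
    """Remove whitespace, surrounding dollar signs and a \\boxed{...} wrapper."""
    text = answer.strip().strip("$").replace(" ", "")
    if text.startswith("\\boxed{") and text.endswith("}"):
        text = text[len("\\boxed{"):-1]
    return text


def parse_exact(answer: str) -> Optional[Fraction]:
    """Parse '0.75', '3/4', '-2/4' or '7e-3' into an exact Fraction; None if not numeric."""
    try:
        return Fraction(_strip_wrappers(answer))
    except (ValueError, ZeroDivisionError):
        return None


def answers_equivalent(generated: str, reference: str) -> bool:
    """Rule-based equivalence: exact numeric comparison, else normalized string match."""
    g, r = parse_exact(generated), parse_exact(reference)
    if g is not None and r is not None:
        return g == r
    # Non-numeric answers (e.g. 'x = 2' vs '2', or symbolic forms) fall through to a
    # strict string match; these are the cases an LLM-based judge is meant to handle.
    return _strip_wrappers(generated).lower() == _strip_wrappers(reference).lower()


if __name__ == "__main__":
    print(answers_equivalent("$\\boxed{3/4}$", "0.75"))  # numerically equal
    print(answers_equivalent("x = 2", "2"))              # same answer, but rules miss it
```

The second case shows the limit of hand-written rules: “x = 2” and “2” mean the same thing, yet the checker rejects the match, and a rule-based system would need ever more special cases to keep up.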

Infrastructure and scaling

To support efficient large-scale training, ByteDance built a system atop its HybridFlow framework. Execution is handled by Ray clusters, and training and inference processes are co-located to reduce GPU idle time.

The Streaming Rollout System (SRS) is a notable innovation that decouples model evolution from runtime execution. It speeds up iteration by asynchronously managing partially completed generations across model versions. This architecture reportedly delivers up to 3× faster RL cycles.
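The article gives no implementation details for SRS, but the underlying idea of partial rollouts can be shown with a toy, single-threaded simulation: each policy version gets a fixed token budget per sample, finished samples go straight to training, and unfinished ones carry over and are continued by the next policy version, so a few very long chains of thought no longer stall every batch. The `Rollout` class, `token_budget` and the fixed `target_len` standing in for an end-of-sequence token are all hypothetical. A real system would run this asynchronously and would also have to account for samples produced by several policy versions, which this sketch only records.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Rollout:
    prompt_id: int
    target_len: int  # tokens this sample needs before finishing (toy stand-in for EOS)
    generated: int = 0
    policy_versions: List[int] = field(default_factory=list)  # versions that wrote its tokens


def streaming_rollout_step(
    in_flight: List[Rollout], policy_version: int, token_budget: int
) -> Tuple[List[Rollout], List[Rollout]]:
    """Advance every in-flight sample by up to `token_budget` tokens under the current
    policy; return (finished samples for the trainer, unfinished samples to carry over)."""
    finished: List[Rollout] = []
    carried: List[Rollout] = []
    for rollout in in_flight:
        step = min(token_budget, rollout.target_len - rollout.generated)
        rollout.generated += step
        rollout.policy_versions.append(policy_version)
        (finished if rollout.generated == rollout.target_len else carried).append(rollout)
    return finished, carried


if __name__ == "__main__":
    queue = [Rollout(i, n) for i, n in enumerate([3, 10, 5])]
    version = 0
    while queue:
        done, queue = streaming_rollout_step(queue, version, token_budget=4)
        for r in done:
            print(f"v{version}: prompt {r.prompt_id} done, written by versions {r.policy_versions}")
        version += 1  # the trainer would update the policy here using `done`
```

In a synchronous loop, the trainer would wait for the 10-token sample before any update; here the short samples are handed off after the first and second steps.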

Additional infrastructure techniques include:

Mixed precision (FP8) for memory savings

Expert parallelism and kernel auto-tuning for MoE efficiency

ByteCheckpoint for resilient and flexible checkpointing

AutoTuner for optimizing parallelism and memory configurations
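A back-of-the-envelope calculation shows why FP8 and expert parallelism matter at this scale. It uses the article’s 200 billion total and 20 billion active parameters and counts weights only: gradients, optimizer state, activations and any higher-precision master copies, which mixed-precision training typically also keeps, are ignored. The even 8-GPU split is a hypothetical simplification, not ByteDance’s configuration.

```python
TOTAL_PARAMS = 200e9   # total parameters, per the article
ACTIVE_PARAMS = 20e9   # parameters used per token, per the article
BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}


def weight_gb(num_params: float, bytes_per_param: int) -> float:
    """Weight storage only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def per_device_gb(num_params: float, bytes_per_param: int, num_devices: int) -> float:
    """Weight storage per device if weights are split evenly (a simplified view of expert parallelism)."""
    return weight_gb(num_params, bytes_per_param) / num_devices


if __name__ == "__main__":
    for fmt, nbytes in BYTES_PER_PARAM.items():
        print(
            f"{fmt}: all weights {weight_gb(TOTAL_PARAMS, nbytes):.0f} GB, "
            f"active per token {weight_gb(ACTIVE_PARAMS, nbytes):.0f} GB, "
            f"per GPU across 8 GPUs {per_device_gb(TOTAL_PARAMS, nbytes, 8):.0f} GB"
        )
```

Even though only 20 billion parameters are active per token, all 200 billion must be stored somewhere, which is why MoE systems shard experts across devices rather than replicating them.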

Human evaluation and real-world impact

To evaluate alignment with human-centric preferences, ByteDance conducted human testing across a range of domains, including creative writing, humanities knowledge and general conversation.

Seed-Thinking-v1.5 consistently outperformed DeepSeek R1 across sessions, reinforcing its applicability to real-world user needs.

The development team notes that reasoning models trained primarily on verifiable tasks demonstrated strong generalization to creative domains, an outcome attributed to the structure and rigor embedded in mathematical training workflows.

What it means for technical leaders, data engineers and enterprise decision-makers

For technical leads managing the lifecycle of large language models, from data curation to deployment, Seed-Thinking-v1.5 presents an opportunity to rethink how reasoning capabilities are integrated into enterprise AI stacks.

Its modular training process, which includes verifiable reasoning datasets and multi-phase reinforcement learning, particularly appeals to teams looking to scale LLM development while retaining fine-grained control.

ByteDance’s moves to introduce Seed-Verifier and Seed-Thinking-Verifier offer mechanisms for more trustworthy reward modeling, which can be critical when deploying models into customer-facing or regulated environments.

For teams operating under tight deadlines and limited bandwidth, the model’s stability under reinforcement learning, enabled by innovations like VAPO and dynamic sampling, could shorten iteration cycles and streamline fine-tuning for specific tasks.
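Dynamic sampling, as described in the DAPO paper, targets a specific waste: when every response sampled for a prompt receives the same reward (all correct or all wrong), the group-relative advantages are all zero and that prompt contributes no gradient. The remedy is to over-sample and keep only prompts with mixed outcomes until the batch is full. A minimal sketch follows; `fill_batch`, `is_informative` and the reward-sampling callback are hypothetical names, and the article does not describe Seed-Thinking-v1.5’s exact variant.

```python
from typing import Callable, Dict, Iterable, List


def is_informative(rewards: List[float]) -> bool:
    """A group yields nonzero group-relative advantages only if its rewards differ."""
    return len(set(rewards)) > 1


def fill_batch(
    prompts: Iterable[str],
    sample_group: Callable[[str], List[float]],
    batch_size: int,
) -> Dict[str, List[float]]:
    """Keep drawing prompts and sampling a group of rewards for each, until
    `batch_size` informative groups are collected or the prompts run out."""
    batch: Dict[str, List[float]] = {}
    for prompt in prompts:
        rewards = sample_group(prompt)
        if is_informative(rewards):
            batch[prompt] = rewards
            if len(batch) == batch_size:
                break
    return batch


if __name__ == "__main__":
    # Hypothetical pre-computed rewards for 4 sampled responses per prompt (1 = correct).
    toy_rewards = {
        "easy": [1.0, 1.0, 1.0, 1.0],  # all correct: filtered out
        "hard": [0.0, 0.0, 0.0, 0.0],  # all wrong: filtered out
        "mid-a": [1.0, 0.0, 1.0, 0.0],
        "mid-b": [0.0, 0.0, 1.0, 0.0],
    }
    print(fill_batch(toy_rewards, toy_rewards.__getitem__, batch_size=2))
```

The trade-off is extra sampling cost per batch in exchange for every kept prompt producing a useful gradient, which keeps the effective batch size steady as the model masters easy prompts.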

From an orchestration and deployment perspective, the model’s hybrid infrastructure approach, including the Streaming Rollout System (SRS) and support for FP8 optimization, suggests significant gains in training throughput and hardware utilization.

These features would be valuable for engineers responsible for scaling LLM operations across cloud and on-prem systems. The fact that Seed-Thinking-v1.5 was trained with mechanisms to adapt reward feedback based on runtime dynamics speaks directly to the challenges of managing heterogeneous data pipelines and maintaining consistency across domains.

For teams tasked with ensuring reliability, reproducibility and continuous integration of new tools, Seed-Thinking-v1.5’s system-level design could serve as a blueprint for building robust, multi-modal orchestration systems.

For data engineering professionals, the structured approach to training data, including rigorous filtering, augmentation and expert verification, reinforces the importance of data quality as a multiplier of model performance. This could inspire more deliberate approaches to dataset development and validation pipelines.

Future outlook

Seed-Thinking-v1.5 is the result of a collaboration within ByteDance’s Seed LLM Systems team, led by Yonghui Wu, with public representation by Haibin Lin, a long-time AI contributor.

The project also draws on earlier efforts, such as Doubao 1.5 Pro, and incorporates shared techniques in RLHF and data curation.

The team plans to continue refining reinforcement learning techniques, focusing on training efficiency and reward modeling for non-verifiable tasks. The public release of internal benchmarks such as BeyondAIME is intended to foster broader progress in reasoning-focused AI research.
