Resilience has always been a top priority for customers running mission-critical Apache Kafka applications. Amazon Managed Streaming for Apache Kafka (Amazon MSK) is deployed across multiple Availability Zones and provides resilience within an AWS Region. However, mission-critical Kafka deployments require cross-Region resilience to minimize downtime during a service impairment in a Region. With Amazon MSK Replicator, you can build multi-Region resilient streaming applications to provide business continuity, share data with partners, aggregate data from multiple clusters for analytics, and serve global clients with reduced latency. This post explains how to use MSK Replicator for cross-cluster data replication and details the failover and failback processes while keeping the same topic name across Regions.

MSK Replicator overview

Amazon MSK offers two cluster types: Provisioned and Serverless. Provisioned clusters support two broker types: Standard and Express. With the introduction of Amazon MSK Express brokers, you can now deploy MSK clusters that reduce recovery time by up to 90% while delivering consistent performance. Express brokers provide up to 3 times the throughput per broker and scale up to 20 times faster compared to Standard brokers running Kafka. MSK Replicator works with both broker types in Provisioned clusters and also with Serverless clusters.

MSK Replicator supports an identical topic name configuration, enabling seamless topic name retention during both active-active and active-passive replication. This avoids the risk of infinite replication loops commonly associated with third-party or open source replication tools. When deploying an active-passive cluster architecture for regional resilience, where one cluster handles live traffic and the other acts as a standby, an identical topic configuration simplifies the failover process. Applications can transition to the standby cluster without reconfiguration because topic names remain consistent across the source and target clusters.

To set up an active-passive deployment, you have to enable multi-VPC connectivity for the MSK cluster in the primary Region and deploy an MSK Replicator in the secondary Region. The replicator consumes data from the primary Region's MSK cluster and asynchronously replicates it to the secondary Region. You initially connect the clients to the primary cluster, but fail them over to the secondary cluster if the primary Region is impaired. When the primary Region recovers, you deploy a new MSK Replicator to replicate data back from the secondary cluster to the primary. You then stop the client applications in the secondary Region and restart them in the primary Region.

Because replication with MSK Replicator is asynchronous, there is a possibility of duplicate data in the secondary cluster. During a failover, consumers might reprocess some messages from Kafka topics. To address this, deduplication should happen on the consumer side, for example by using an idempotent downstream system such as a database.
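As a minimal illustration of consumer-side deduplication, the following sketch assumes that every record carries a unique key (for example, an event ID) and drops any record whose key it has already seen. The `dedupe_by_key` function is hypothetical and keeps its state in memory for a single run only; a production consumer would usually get the same effect from an idempotent write, such as an upsert keyed on that ID in a database.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read "<key><TAB><value>" lines on stdin and print a line
# only the first time its key appears, so replayed records are ignored.
# Assumes keys are unique per event; records with a null key would collapse.
dedupe_by_key() {
  awk -F '\t' '!seen[$1]++'
}

# Usage with the console consumer printing keys (tab is the default separator):
# /home/ec2-user/kafka/bin/kafka-console-consumer.sh \
#   --bootstrap-server="$BS_SECONDARY" --topic customer --from-beginning \
#   --consumer.config=/home/ec2-user/kafka/config/client_iam.properties \
#   --property print.key=true | dedupe_by_key
```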

In the following sections, we demonstrate how to deploy MSK Replicator in an active-passive architecture with identical topic names. We provide a step-by-step guide for failing over to the secondary Region during a primary Region impairment and failing back when the primary Region recovers. For an active-active setup, refer to Create an active-active setup using MSK Replicator.

Solution overview

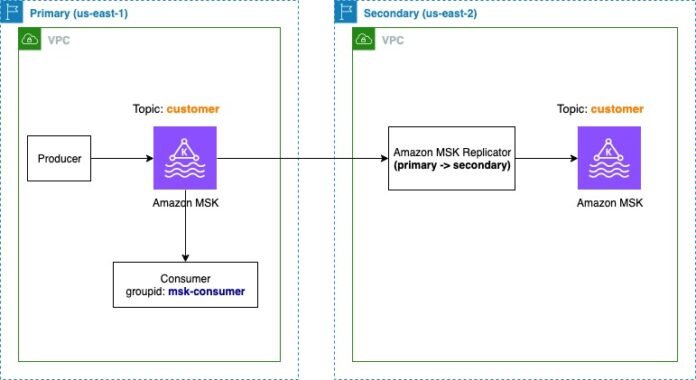

On this setup, we deploy a main MSK Provisioned cluster with Categorical brokers within the us-east-1 Area. To offer cross-Area resilience for Amazon MSK, we set up a secondary MSK cluster with Categorical brokers within the us-east-2 Area and replicate matters from the first MSK cluster to the secondary cluster utilizing MSK Replicator. This configuration gives excessive resilience inside every Area by utilizing Categorical brokers, and cross-Area resilience is achieved by an active-passive structure, with replication managed by MSK Replicator.

The next diagram illustrates the answer structure.

The primary Region MSK cluster handles client requests. If clients can no longer communicate with the MSK cluster because of a primary Region impairment, you need to fail over the clients to the secondary MSK cluster. The producer writes to the customer topic in the primary MSK cluster, and the consumer with the group ID msk-consumer reads from the same topic. As part of the active-passive setup, we configure MSK Replicator to use identical topic names, making sure that the customer topic remains consistent across both clusters without requiring changes from the clients. The entire setup is deployed within a single AWS account.

In the following sections, we describe how to set up a multi-Region resilient MSK cluster using MSK Replicator and also provide the failover and failback strategy.

Provision an MSK cluster using AWS CloudFormation

We provide AWS CloudFormation templates to provision the required resources in each Region. The templates create the virtual private cloud (VPC), subnets, and the MSK Provisioned cluster with Express brokers within the VPC, configured with AWS Identity and Access Management (IAM) authentication. They also create a Kafka client Amazon Elastic Compute Cloud (Amazon EC2) instance, where we can use the Kafka command line to create and view a Kafka topic and produce and consume messages to and from the topic.

Configure multi-VPC connectivity in the primary MSK cluster

After the clusters are deployed, you need to enable multi-VPC connectivity in the primary MSK cluster deployed in us-east-1. This allows MSK Replicator to connect to the primary MSK cluster using multi-VPC connectivity (powered by AWS PrivateLink). Multi-VPC connectivity is only required for cross-Region replication. For same-Region replication, MSK Replicator uses an IAM policy to connect to the primary MSK cluster.

MSK Replicator uses only IAM authentication to connect to both the primary and secondary MSK clusters. Therefore, although other Kafka clients can continue to use SASL/SCRAM or mTLS authentication, IAM authentication must be enabled for MSK Replicator to work.

To enable multi-VPC connectivity, complete the following steps:

On the Amazon MSK console, navigate to the MSK cluster.

On the Properties tab, under Network settings, choose Turn on multi-VPC connectivity from the Edit dropdown menu.

For Authentication type, select IAM role-based authentication.

Choose Turn on selection.

Enabling multi-VPC connectivity is a one-time setup, and it can take approximately 30–45 minutes depending on the number of brokers. After it is enabled, you need to provide the MSK cluster resource policy that allows MSK Replicator to communicate with the primary cluster.

Under Security settings, choose Edit cluster policy.

Select Include Kafka service principal.
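If you prefer to automate this step, the following is a minimal sketch of the same two changes with the AWS CLI. It only writes two local JSON files: the multi-VPC connectivity settings with IAM authentication, and a cluster resource policy for the `kafka.amazonaws.com` service principal. The `aws kafka update-connectivity` and `aws kafka put-cluster-policy` calls that would apply them are left as comments. The cluster ARN is a hypothetical placeholder, and the list of actions granted to the service principal is our assumption, so compare it with the policy the console generates when you select Include Kafka service principal.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical placeholder: the ARN of the primary cluster in us-east-1.
PRIMARY_CLUSTER_ARN="${PRIMARY_CLUSTER_ARN:-arn:aws:kafka:us-east-1:111122223333:cluster/dr-test-primary/EXAMPLE}"

# Multi-VPC connectivity with IAM authentication, the only client
# authentication that MSK Replicator uses.
cat > connectivity-info.json <<'EOF'
{
  "VpcConnectivity": {
    "ClientAuthentication": {
      "Sasl": {
        "Iam": { "Enabled": true }
      }
    }
  }
}
EOF

# Cluster resource policy for the MSK service principal. The action list is an
# assumption; compare it with the policy the console generates.
cat > cluster-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "kafka.amazonaws.com" },
      "Action": [
        "kafka:CreateVpcConnection",
        "kafka:GetBootstrapBrokers",
        "kafka:DescribeClusterV2"
      ],
      "Resource": "${PRIMARY_CLUSTER_ARN}"
    }
  ]
}
EOF

# To apply the files (requires AWS credentials), uncomment. Attach the policy
# after the connectivity update has finished.
# CURRENT_VERSION=$(aws kafka describe-cluster-v2 --region us-east-1 \
#   --cluster-arn "$PRIMARY_CLUSTER_ARN" \
#   --query 'ClusterInfo.CurrentVersion' --output text)
# aws kafka update-connectivity --region us-east-1 \
#   --cluster-arn "$PRIMARY_CLUSTER_ARN" --current-version "$CURRENT_VERSION" \
#   --connectivity-info file://connectivity-info.json
# aws kafka put-cluster-policy --region us-east-1 \
#   --cluster-arn "$PRIMARY_CLUSTER_ARN" --policy file://cluster-policy.json
```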

Now that the cluster can receive requests from MSK Replicator through PrivateLink, we need to set up the replicator.

Create an MSK Replicator

Complete the following steps to create an MSK Replicator:

In the secondary Region (us-east-2), open the Amazon MSK console.

Choose Replicators in the navigation pane.

Choose Create replicator.

Enter a name and an optional description.

In the Source cluster section, provide the following information:

For Cluster region, choose us-east-1.

For MSK cluster, enter the Amazon Resource Name (ARN) of the primary MSK cluster.

For a cross-Region setup, the primary cluster appears disabled if multi-VPC connectivity is not enabled and the cluster resource policy is not configured on the primary MSK cluster. After you choose the primary cluster, the subnets associated with it are selected automatically. Security groups are not required because access to the primary cluster is governed by the cluster resource policy.

Next, you select the target cluster. The target cluster Region defaults to the Region where the MSK Replicator is created, which in this case is us-east-2.

In the Target cluster section, provide the following information:

For MSK cluster, enter the ARN of the secondary MSK cluster. This automatically selects the cluster subnets and the security group associated with the secondary cluster.

For Security groups, choose any additional security groups.

Make sure that these security groups have outbound rules that allow traffic to your secondary cluster's security groups. Also make sure that your secondary cluster's security groups have inbound rules that accept traffic from the MSK Replicator security groups provided here.

Now let's provide the MSK Replicator settings.

In the Replicator settings section, enter the following information:

For Topics to replicate, we keep the default value, which replicates all topics from the primary to the secondary cluster.

For Replicator starting position, we choose Earliest so that we get all the events from the start of the source topics.

For Copy settings, select Keep the same topic names so that topics in the secondary cluster have the same names as in the primary cluster.

This makes sure that the MSK clients don't need to add a prefix to the topic names.

For this example, we keep the Consumer group replication setting at its default and set Target compression type to None.

MSK Replicator also automatically creates the required IAM policies.

Choose Create to create the replicator.

Deploying the replicator takes around 15–20 minutes. When the MSK Replicator is running, its status shows as Running.
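The console settings above can also be expressed as input to `aws kafka create-replicator`. The following sketch writes a `--cli-input-json` file that mirrors this walkthrough: all topics and consumer groups, an Earliest starting position, identical topic names, and no compression. Every ARN, subnet ID, and security group ID is a hypothetical placeholder, the service execution role is one you would have to create yourself (the console creates it for you), and the field names reflect our reading of the CreateReplicator API, so verify them against the Amazon MSK API reference before use. As in the console, no security group is supplied for the source cluster.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical placeholders: substitute your own values.
SOURCE_ARN="arn:aws:kafka:us-east-1:111122223333:cluster/dr-test-primary/EXAMPLE"
TARGET_ARN="arn:aws:kafka:us-east-2:111122223333:cluster/dr-test-secondary/EXAMPLE"
ROLE_ARN="arn:aws:iam::111122223333:role/msk-replicator-role"

cat > replicator-primary-to-secondary.json <<EOF
{
  "ReplicatorName": "dr-test-primary-to-secondary",
  "ServiceExecutionRoleArn": "${ROLE_ARN}",
  "KafkaClusters": [
    {
      "AmazonMskCluster": { "MskClusterArn": "${SOURCE_ARN}" },
      "VpcConfig": { "SubnetIds": ["subnet-source-1", "subnet-source-2", "subnet-source-3"] }
    },
    {
      "AmazonMskCluster": { "MskClusterArn": "${TARGET_ARN}" },
      "VpcConfig": {
        "SubnetIds": ["subnet-target-1", "subnet-target-2", "subnet-target-3"],
        "SecurityGroupIds": ["sg-target-example"]
      }
    }
  ],
  "ReplicationInfoList": [
    {
      "SourceKafkaClusterArn": "${SOURCE_ARN}",
      "TargetKafkaClusterArn": "${TARGET_ARN}",
      "TargetCompressionType": "NONE",
      "TopicReplication": {
        "TopicsToReplicate": [".*"],
        "StartingPosition": { "Type": "EARLIEST" },
        "TopicNameConfiguration": { "Type": "IDENTICAL" }
      },
      "ConsumerGroupReplication": {
        "ConsumerGroupsToReplicate": [".*"]
      }
    }
  ]
}
EOF

# Create the replicator in the target Region, then poll its state:
# aws kafka create-replicator --region us-east-2 \
#   --cli-input-json file://replicator-primary-to-secondary.json
# aws kafka describe-replicator --region us-east-2 \
#   --replicator-arn <replicator-arn> --query 'ReplicatorState' --output text
```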

Configure the MSK client for the primary cluster

Complete the following steps to configure the MSK client:

On the Amazon EC2 console, navigate to the EC2 instance in the primary Region (us-east-1) and connect to the EC2 instance dr-test-primary-KafkaClientInstance1 using Session Manager, a capability of AWS Systems Manager.

After you have logged in, you need to configure the primary MSK cluster bootstrap address to create a topic and publish data to the cluster. You can get the bootstrap address for IAM authentication on the Amazon MSK console under View client information on the cluster details page.

Configure the bootstrap address with the following code:

```bash
sudo su - ec2-user

export BS_PRIMARY=<>
```

Create the client configuration for IAM authentication to communicate with the MSK cluster:

```bash
echo -n "security.protocol=SASL_SSL
sasl.mechanism=AWS_MSK_IAM
sasl.jaas.config=software.amazon.msk.auth.iam.IAMLoginModule required;
sasl.client.callback.handler.class=software.amazon.msk.auth.iam.IAMClientCallbackHandler
" > /home/ec2-user/kafka/config/client_iam.properties
```

Create a topic and produce and consume messages

Complete the following steps to create a topic and then produce and consume messages:

Create a customer topic:

```bash
/home/ec2-user/kafka/bin/kafka-topics.sh --bootstrap-server="$BS_PRIMARY" \
  --create --replication-factor 3 --partitions 3 \
  --topic customer \
  --command-config=/home/ec2-user/kafka/config/client_iam.properties
```

Create a console producer to write to the topic:

```bash
/home/ec2-user/kafka/bin/kafka-console-producer.sh \
  --bootstrap-server="$BS_PRIMARY" --topic customer \
  --producer.config=/home/ec2-user/kafka/config/client_iam.properties
```

Produce the following sample text to the topic:

This is a customer topic

This is the 2nd message to the topic.

Press Ctrl+C to exit the console prompt.

Create a consumer with group.id msk-consumer to read all the messages from the beginning of the customer topic:

```bash
/home/ec2-user/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server="$BS_PRIMARY" --topic customer --from-beginning \
  --consumer.config=/home/ec2-user/kafka/config/client_iam.properties \
  --consumer-property group.id=msk-consumer
```

This consumes both sample messages from the topic.

Press Ctrl+C to exit the console prompt.

Configure the MSK client for the secondary MSK cluster

Go to the EC2 instance in the secondary Region (us-east-2) and follow the preceding steps to configure an MSK client. The only difference is that you use the bootstrap address of the secondary MSK cluster: set the environment variable $BS_SECONDARY to the secondary Region MSK cluster bootstrap address.

Confirm replication

After the client is configured to communicate with the secondary MSK cluster using IAM authentication, you can check whether the customer topic has been replicated. Because MSK Replicator is now running, the topic should already exist. List the topics in the cluster:

```bash
/home/ec2-user/kafka/bin/kafka-topics.sh --bootstrap-server="$BS_SECONDARY" \
  --list --command-config=/home/ec2-user/kafka/config/client_iam.properties
```

The topic name is customer, without any prefix.

By default, MSK Replicator replicates the details of all consumer groups. Because you used the default configuration, you can use the following command to verify that the consumer group ID msk-consumer has also been replicated to the secondary cluster:

```bash
/home/ec2-user/kafka/bin/kafka-consumer-groups.sh --bootstrap-server="$BS_SECONDARY" \
  --list --command-config=/home/ec2-user/kafka/config/client_iam.properties
```
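To script these two checks rather than read the listings by eye, the following sketch uses a small hypothetical `has_line` helper that succeeds only when the listing contains an exact line, so a topic named, say, `customer-archive` does not count as a match for `customer`. The commented usage lines reuse the two commands shown above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: succeed only if stdin contains a line exactly equal to $1.
# grep reads all input (no -q) so the writer never gets SIGPIPE under pipefail.
has_line() {
  grep -xF -- "$1" >/dev/null
}

# Usage on the secondary client instance:
# CFG=/home/ec2-user/kafka/config/client_iam.properties
# /home/ec2-user/kafka/bin/kafka-topics.sh --bootstrap-server="$BS_SECONDARY" \
#   --list --command-config="$CFG" | has_line customer && echo "topic replicated"
# /home/ec2-user/kafka/bin/kafka-consumer-groups.sh --bootstrap-server="$BS_SECONDARY" \
#   --list --command-config="$CFG" | has_line msk-consumer && echo "group replicated"
```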

Now that we've verified that the topic is replicated, let's look at the key metrics to monitor.

Monitor replication

Monitoring MSK Replicator is crucial to make sure that data replication keeps up with the source cluster. This reduces the risk of data loss if an unplanned failure occurs. Important MSK Replicator metrics to monitor include ReplicationLatency, MessageLag, and ReplicatorThroughput. For a detailed list, see Monitor replication.

To understand how many bytes MSK Replicator processes, monitor the ReplicatorBytesInPerSec metric. This metric indicates the average number of bytes processed by the replicator per second. Data processed by MSK Replicator consists of all data that MSK Replicator receives, which includes both the data replicated to the target cluster and the data filtered out by MSK Replicator. This metric is applicable if you use Keep same topic name in the MSK Replicator copy settings. During a failback scenario, MSK Replicator starts reading from the earliest offset and replicates records from the secondary back to the primary cluster. Depending on the retention settings, some of that data might already exist in the primary cluster. To prevent duplicates, MSK Replicator processes this data but automatically filters out the duplicate records.
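To be notified when replication falls behind, you can put a CloudWatch alarm on one of these metrics. The following sketch writes an `aws cloudwatch put-metric-alarm` input file for ReplicationLatency, assuming the metric is reported in milliseconds. The replicator name, the 60-second threshold, and the evaluation window are hypothetical values to tune for your workload, and the `ReplicatorName` dimension is an assumption: check which dimensions the metric actually carries in the CloudWatch console, because an alarm whose dimensions match no published series stays in INSUFFICIENT_DATA.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical replicator name and threshold; tune both for your workload.
REPLICATOR_NAME="dr-test-primary-to-secondary"
LATENCY_THRESHOLD_MS=60000

# Alarm when the worst per-minute latency stays above the threshold for
# 5 consecutive minutes.
cat > replication-latency-alarm.json <<EOF
{
  "AlarmName": "${REPLICATOR_NAME}-replication-latency",
  "Namespace": "AWS/Kafka",
  "MetricName": "ReplicationLatency",
  "Dimensions": [ { "Name": "ReplicatorName", "Value": "${REPLICATOR_NAME}" } ],
  "Statistic": "Maximum",
  "Period": 60,
  "EvaluationPeriods": 5,
  "Threshold": ${LATENCY_THRESHOLD_MS},
  "ComparisonOperator": "GreaterThanThreshold"
}
EOF

# Create the alarm in the Region where the replicator runs:
# aws cloudwatch put-metric-alarm --region us-east-2 \
#   --cli-input-json file://replication-latency-alarm.json
```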

Fail over clients to the secondary MSK cluster

If an unexpected event in the primary Region prevents clients from connecting to the primary MSK cluster, or clients receive unexpected produce and consume errors, this could be a sign that the primary MSK cluster is impacted. You might also notice a sudden spike in replication latency. If the latency continues to rise, it could indicate a regional impairment in Amazon MSK. To verify this, you can check the AWS Health Dashboard, although status updates might be delayed. When you identify signs of a regional impairment in Amazon MSK, you should prepare to fail over the clients to the secondary Region.

For critical workloads, we recommend not taking a dependency on control plane actions for failover. To mitigate this risk, you could implement a pilot light deployment, where essential components of the stack are kept running in a secondary Region and scaled up when the primary Region is impaired. Alternatively, for faster and smoother failover with minimal downtime, we recommend a hot standby approach. This involves pre-deploying the entire stack in a secondary Region so that, in a disaster recovery scenario, the pre-deployed clients can be quickly activated there.

Failover process

To perform the failover, you first need to stop the clients pointed to the primary MSK cluster. For the purpose of this demo, we're using the console producer and consumer, which are already stopped.

In a real failover scenario, using primary Region clients to communicate with the secondary Region MSK cluster is not recommended, because it breaches fault isolation boundaries and increases latency. To simulate the failover with the preceding setup, let's start a producer and consumer in the secondary Region (us-east-2). For this, run a console producer on the EC2 instance (dr-test-secondary-KafkaClientInstance1) in the secondary Region.

The following diagram illustrates this setup.

Complete the following steps to perform a failover:

Create a console producer using the following code:

```bash
/home/ec2-user/kafka/bin/kafka-console-producer.sh \
  --bootstrap-server="$BS_SECONDARY" --topic customer \
  --producer.config=/home/ec2-user/kafka/config/client_iam.properties
```

Produce the following sample text to the topic:

This is the third message to the topic.

This is the 4th message to the topic.

Now, let's create a console consumer. It's important to make sure that the consumer group ID is exactly the same as that of the consumer attached to the primary MSK cluster. For this, we use the group.id msk-consumer to read the messages from the customer topic. This simulates bringing up the same consumer that was attached to the primary cluster.

Create a console consumer with the following code:

```bash
/home/ec2-user/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server="$BS_SECONDARY" --topic customer --from-beginning \
  --consumer.config=/home/ec2-user/kafka/config/client_iam.properties \
  --consumer-property group.id=msk-consumer
```

Although the consumer is configured to read all the data from the earliest offset, it only consumes the last two messages produced by the console producer. This is because MSK Replicator has replicated the consumer group details, including the offsets committed by the consumer group msk-consumer. The --from-beginning flag only takes effect for partitions where the group has no committed offset, so the consumer resumes from the replicated offsets instead. Running a console consumer with the same group.id mimics the consumer being failed over to the secondary Amazon MSK cluster.
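To see the synchronized offsets before starting the consumer, you can describe the group on the secondary cluster. The sketch below adds a hypothetical `committed_offsets` helper that extracts the topic, partition, and committed offset from the `kafka-consumer-groups.sh --describe` output, assuming its usual column order (GROUP, TOPIC, PARTITION, CURRENT-OFFSET, LOG-END-OFFSET, LAG, and so on). The offsets shown are the ones MSK Replicator synchronized to the target cluster, which need not be numerically identical to the offsets on the primary.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print "<topic>-<partition> <committed offset>" for one
# consumer group from `kafka-consumer-groups.sh --describe` output on stdin.
# The header row and informational lines are skipped because field 1 differs.
committed_offsets() {
  local group="$1"
  awk -v g="$group" '$1 == g { print $2 "-" $3, $4 }'
}

# Usage on the secondary client instance, before starting the console consumer:
# /home/ec2-user/kafka/bin/kafka-consumer-groups.sh --bootstrap-server="$BS_SECONDARY" \
#   --describe --group msk-consumer \
#   --command-config=/home/ec2-user/kafka/config/client_iam.properties \
#   | committed_offsets msk-consumer
```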

Fail back clients to the primary MSK cluster

Failing back clients to the primary MSK cluster after the service in the primary Region has recovered is the common pattern in an active-passive scenario. Before we fail back clients to the primary MSK cluster, it's important to sync the primary MSK cluster with the secondary MSK cluster. For this, we deploy another MSK Replicator in the primary Region, configured to read from the earliest offset of the secondary MSK cluster and write to the primary cluster with the same topic names. This MSK Replicator copies the data from the secondary MSK cluster to the primary MSK cluster. Although it is configured to start from the earliest offset, it does not duplicate the data already present in the primary MSK cluster. It automatically filters out the existing messages and only writes back the new data produced in the secondary MSK cluster while the primary MSK cluster was down. This secondary-to-primary replication step isn't required if you don't have a business requirement to keep the data identical across both clusters.

After the MSK Replicator is up and running, monitor its MessageLag metric. This metric indicates how many messages are yet to be replicated from the secondary MSK cluster to the primary MSK cluster, and it should come down close to 0. Now stop the producers writing to the secondary MSK cluster and restart them connected to the primary MSK cluster. Let the consumers keep reading from the secondary MSK cluster until their MaxOffsetLag metric reaches 0. This makes sure that the consumers have processed all the messages in the secondary MSK cluster. By this time, the MessageLag metric should also be 0, because no producer is writing to the secondary cluster and MSK Replicator has replicated all messages from the secondary cluster to the primary cluster.

At this point, start the consumer with the same group.id in the primary Region. You can then delete the MSK Replicator created to copy messages from the secondary to the primary cluster. Make sure that the original MSK Replicator is in RUNNING status and successfully replicating messages from the primary to the secondary cluster. You can confirm this by checking that its ReplicatorThroughput metric is greater than 0.
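The drain conditions above lend themselves to a small polling loop. The following sketch defines hypothetical helpers: `total_lag` sums the LAG column of `kafka-consumer-groups.sh --describe` output for one group, and `wait_until_zero` reruns any command until it prints 0 or a retry budget runs out. Reading consumer lag with the Kafka CLI is our substitution for watching the MaxOffsetLag metric; the same `wait_until_zero` loop could wrap a CloudWatch query for MessageLag instead.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Sum the LAG column (6th field) of `kafka-consumer-groups.sh --describe`
# output for one group. Rows whose LAG is "-" (no committed offset) are skipped.
total_lag() {
  local group="$1"
  awk -v g="$group" '$1 == g && $6 ~ /^[0-9]+$/ { sum += $6 } END { print sum + 0 }'
}

# Run a command repeatedly until it prints exactly 0.
# Returns 0 once drained, 1 if max_tries attempts are used up.
wait_until_zero() {
  local max_tries="$1" sleep_secs="$2"
  shift 2
  local i value
  for ((i = 1; i <= max_tries; i++)); do
    # A failing command counts as "not drained yet" rather than aborting.
    value="$("$@")" || value="error"
    echo "attempt ${i}/${max_tries}: ${value}" >&2
    if [[ "$value" == "0" ]]; then
      return 0
    fi
    sleep "$sleep_secs"
  done
  return 1
}

# Hypothetical wrapper: total lag of msk-consumer on the secondary cluster.
# With pipefail, a failing describe call is reported as an error, not as 0.
secondary_consumer_lag() {
  /home/ec2-user/kafka/bin/kafka-consumer-groups.sh \
    --bootstrap-server="$BS_SECONDARY" --describe --group msk-consumer \
    --command-config=/home/ec2-user/kafka/config/client_iam.properties \
    2>/dev/null | total_lag msk-consumer
}
```

For example, `wait_until_zero 60 10 secondary_consumer_lag` checks roughly every 10 seconds for about 10 minutes before giving up.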

Failback process

To simulate a failback, you first need to enable multi-VPC connectivity on the secondary MSK cluster (us-east-2) and add a cluster policy for the Kafka service principal, as we did earlier for the primary cluster.

Deploy the MSK Replicator in the primary Region (us-east-1) with the source MSK cluster pointed to us-east-2 and the target cluster pointed to us-east-1. Set Replicator starting position to Earliest and Copy settings to Keep the same topic names.

The following diagram illustrates this setup.

After the MSK Replicator is in RUNNING status, let's verify that no duplicates are created while replicating the data from the secondary to the primary MSK cluster.

Run a console consumer without a group.id on the EC2 instance (dr-test-primary-KafkaClientInstance1) in the primary Region (us-east-1):

```bash
/home/ec2-user/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server="$BS_PRIMARY" --topic customer --from-beginning \
  --consumer.config=/home/ec2-user/kafka/config/client_iam.properties
```

This should show the four messages without any duplicates. Although the consumer reads from the earliest offset, MSK Replicator makes sure that duplicate data isn't replicated back from the secondary cluster to the primary cluster.

This is a customer topic

This is the 2nd message to the topic.

This is the third message to the topic.

This is the 4th message to the topic.
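Checking four messages by eye is easy; for a larger topic, a quick duplicate check helps. The following sketch assumes that message values are unique, as they are in this walkthrough, and uses the console consumer's `--timeout-ms` option so it exits after a period with no new messages. The hypothetical `find_duplicates` helper prints every value that appears more than once, so empty output means no duplicates.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print each line that occurs more than once on stdin.
find_duplicates() {
  sort | uniq -d
}

# Usage on the primary client instance (exits after 15 s without new messages):
# /home/ec2-user/kafka/bin/kafka-console-consumer.sh \
#   --bootstrap-server="$BS_PRIMARY" --topic customer --from-beginning \
#   --timeout-ms 15000 \
#   --consumer.config=/home/ec2-user/kafka/config/client_iam.properties \
#   | find_duplicates
```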

Now you can point the clients to start producing to and consuming from the primary MSK cluster.

Clean up

At this point, you can tear down the MSK Replicator deployed in the primary Region.

Conclusion

This post explored how to enhance Kafka resilience by setting up a secondary MSK cluster in another Region and synchronizing it with the primary cluster using MSK Replicator. We demonstrated how to implement an active-passive disaster recovery strategy while maintaining consistent topic names across both clusters. We provided a step-by-step guide for configuring replication with identical topic names and detailed the processes for failover and failback. Additionally, we highlighted key metrics to monitor and outlined actions to keep data replication efficient and continuous.

For more information, refer to What is Amazon MSK Replicator? For a hands-on experience, try the Amazon MSK Replicator Workshop. We encourage you to try out this feature and share your feedback with us.

About the Author

Subham Rakshit is a Senior Streaming Solutions Architect for Analytics at AWS based in the UK. He works with customers to design and build streaming architectures so they can get value from analyzing their streaming data. His two little daughters keep him occupied most of the time outside work, and he loves solving jigsaw puzzles with them. Connect with him on LinkedIn.
