A safety weak spot has been disclosed within the synthetic intelligence (AI)-powered code editor Cursor that would set off code execution when a maliciously crafted repository is opened utilizing this system.

The difficulty stems from the truth that an out-of-the-box safety setting is disabled by default, opening the door for attackers to run arbitrary code on customers’ computer systems with their privileges.

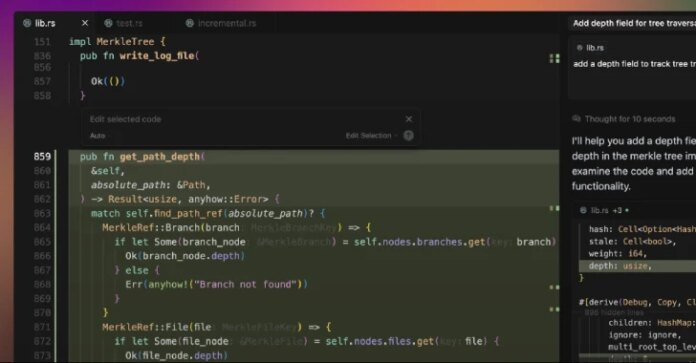

“Cursor ships with Workspace Belief disabled by default, so VS Code-style duties configured with runOptions.runOn: ‘folderOpen’ auto-execute the second a developer browses a undertaking,” Oasis Safety mentioned in an evaluation. “A malicious .vscode/duties.json turns an informal ‘open folder’ into silent code execution within the consumer’s context.”

Cursor is an AI-powered fork of Visible Studio Code, which helps a characteristic referred to as Workspace Belief to permit builders to soundly browse and edit code no matter the place it got here from or who wrote it.

With this feature disabled, an attacker could make accessible a undertaking in GitHub (or any platform) and embody a hidden “autorun” instruction that instructs the IDE to execute a process as quickly as a folder is opened, inflicting malicious code to be executed when the sufferer makes an attempt to browse the booby-trapped repository in Cursor.

“This has the potential to leak delicate credentials, modify information, or function a vector for broader system compromise, inserting Cursor customers at important danger from provide chain assaults,” Oasis Safety researcher Erez Schwartz mentioned.

To counter this menace, customers are suggested to allow Office Belief in Cursor, open untrusted repositories in a distinct code editor, and audit them earlier than opening them within the device.

The event comes as immediate injections and jailbreaks have emerged as a stealthy and systemic menace plaguing AI-powered coding and reasoning brokers like Claude Code, Cline, K2 Assumeand Windsurfingpermitting menace actors to embed malicious directions in sneaky methods to trick the techniques into performing malicious actions or leaking knowledge from software program improvement environments.

Software program provide chain safety outfit Checkmarx, in a report final week, revealed how Anthropic’s newly launched automated safety opinions in Claude Code may inadvertently expose initiatives to safety dangers, together with instructing it to disregard weak code by immediate injections, inflicting builders to push malicious or insecure code previous safety opinions.

“On this case, a rigorously written remark can persuade Claude that even plainly harmful code is totally protected,” the corporate mentioned. “The tip consequence: a developer – whether or not malicious or simply making an attempt to close Claude up – can simply trick Claude into considering a vulnerability is protected.”

One other downside is that the AI inspection course of additionally generates and executes check instances, which may result in a state of affairs the place malicious code is run towards manufacturing databases if Claude Code is not correctly sandboxed.

The AI firm, which additionally just lately launched a brand new file creation and enhancing characteristic in Claude, has warned that the characteristic carries immediate injection dangers as a result of it working in a “sandboxed computing surroundings with restricted web entry.”

Particularly, it is attainable for a nasty actor to “inconspicuously” add directions through exterior information or web sites – aka oblique immediate injection – that trick the chatbot into downloading and working untrusted code or studying delicate knowledge from a information supply linked through the Mannequin Context Protocol (MCP).

“This implies Claude may be tricked into sending info from its context (e.g., prompts, initiatives, knowledge through MCP, Google integrations) to malicious third events,” Anthropic mentioned. “To mitigate these dangers, we suggest you monitor Claude whereas utilizing the characteristic and cease it when you see it utilizing or accessing knowledge unexpectedly.”

That is not all. Late final month, the corporate additionally revealed browser-using AI fashions like Claude for Chrome can face immediate injection assaults, and that it has carried out a number of defenses to deal with the menace and cut back the assault success charge of 23.6% to 11.2%.

“New types of immediate injection assaults are additionally continuously being developed by malicious actors,” it added. “By uncovering real-world examples of unsafe conduct and new assault patterns that are not current in managed checks, we’ll train our fashions to acknowledge the assaults and account for the associated behaviors, and be certain that security classifiers will decide up something that the mannequin itself misses.”

On the similar time, these instruments have additionally been discovered vulnerable to conventional safety vulnerabilities, broadening the assault floor with potential real-world influence –

A WebSocket authentication bypass in Claude Code IDE extensions (CVE-2025-52882CVSS rating: 8.8) that would have allowed an attacker to connect with a sufferer’s unauthenticated native WebSocket server just by luring them to go to an internet site below their management, enabling distant command execution

An SQL injection vulnerability within the Postgres MCP server that would have allowed an attacker to bypass the read-only restriction and execute arbitrary SQL statements

A path traversal vulnerability in Microsoft NLWeb that would have allowed a distant attacker to learn delicate information, together with system configurations (“/and many others/passwd”) and cloud credentials (.env information), utilizing a specifically crafted URL

An incorrect authorization vulnerability in Lovable (CVE-2025-48757, CVSS rating: 9.3) that would have allowed distant unauthenticated attackers to learn or write to arbitrary database tables of generated websites

Open redirect, saved cross-site scripting (XSS), and delicate knowledge leakage vulnerabilities in Base44 that would have allowed attackers to entry the sufferer’s apps and improvement workspace, harvest API keys, inject malicious logic into user-generated purposes, and exfiltrate knowledge

A vulnerability in Ollama Desktop arising because of incomplete cross-origin controls that would have allowed an attacker to stage a drive-by assault, the place visiting a malicious web site can reconfigure the applying’s settings to intercept chats and even alter responses utilizing poisoned fashions

“As AI-driven improvement accelerates, probably the most urgent threats are sometimes not unique AI assaults however failures in classical safety controls,” Imperva mentioned. “To guard the rising ecosystem of ‘vibe coding’ platforms, safety have to be handled as a basis, not an afterthought.”