When you’re spinning up your Amazon OpenSearch Service domain, you need to figure out the storage, instance types, and instance count; decide the sharding strategies and whether to use a cluster manager; and enable zone awareness. Generally, we consider storage as a guideline for determining instance count, but not other parameters. In this post, we offer some recommendations based on T-shirt sizing for log analytics workloads.

Log analytics and streaming workload characteristics

When you use OpenSearch Service for your streaming workloads, you send data from multiple sources into OpenSearch Service. OpenSearch Service indexes your data in an index that you define.

Log data naturally follows a time series pattern, and therefore a time-based indexing strategy (daily or weekly indexes) is recommended. For efficient management of log data, you should implement time-based index patterns and set retention periods. You further define time slicing and a retention period for the data to manage its lifecycle in your domain.

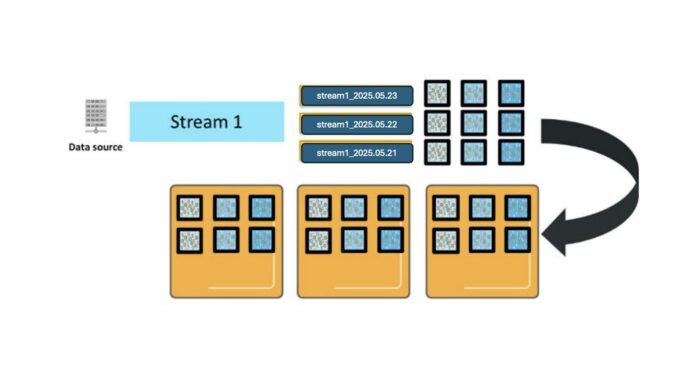

For illustration, consider that you have a data source producing a continuous stream of log data, and you’ve configured a daily rolling index and set a retention period of 3 days. As the logs arrive, OpenSearch Service creates an index per day with names like stream1_2025.05.21, stream1_2025.05.22, and so on. The prefix stream1_* is what we call an index pattern, a naming convention that helps group related indexes.
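As a minimal sketch of this naming scheme, the following Python builds the daily index names and lists the indexes that fall inside the retention period. The helper names `daily_index_name` and `retained_indexes` are hypothetical and only illustrate the convention; OpenSearch Service itself does not expose them.

```python
from datetime import date, timedelta


def daily_index_name(prefix: str, day: date) -> str:
    """Build a daily index name such as stream1_2025.05.21."""
    return f"{prefix}_{day:%Y.%m.%d}"


def retained_indexes(prefix: str, today: date, retention_days: int) -> list[str]:
    """List the daily indexes kept under the retention period, oldest first."""
    days = [today - timedelta(days=offset) for offset in range(retention_days)]
    return [daily_index_name(prefix, day) for day in reversed(days)]


names = retained_indexes("stream1", date(2025, 5, 23), retention_days=3)
print(names)
# ['stream1_2025.05.21', 'stream1_2025.05.22', 'stream1_2025.05.23']
```

The last name in the list is the active index; anything older than the first name would be eligible for deletion under a 3-day retention period.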

The diagram shows three primary shards for each daily index. These shards are deployed across three OpenSearch Service data instances, with one replica for each primary shard. (For simplicity, the diagram doesn’t show that primary and replica shards are always placed on different instances for fault tolerance.)

When OpenSearch Service processes new log entries, they’re sent to all relevant primary shards and their replicas in the active index, which in this example is only today’s index because of the daily index configuration.

There are several important characteristics of how OpenSearch Service processes your new entries:

Total shard count – Each index pattern will have D * P * (1 + R) total shards, where D represents retention in days, P represents primary shards, and R is the number of replicas. These shards are distributed across your data nodes.

Active index – Time slicing means that new log entries are only written to today’s index.

Resource utilization – When sending a _bulk request with log entries, these are distributed across all shards in the active index. In our example with three primary shards and one replica per shard, that’s a total of six shards processing new data concurrently, requiring 6 vCPUs to efficiently handle a single _bulk request.

Similarly, OpenSearch Service distributes queries across the shards for the indexes involved. If you query this index pattern across all 3 days, you’ll engage 9 shards, and need 9 vCPUs to process the request.
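The shard arithmetic above can be sketched in a few lines of Python. The function names are hypothetical, and the one-vCPU-per-engaged-shard rule is the simplification used in this post rather than a guarantee about how the service schedules work.

```python
def total_shards(retention_days: int, primaries: int, replicas: int) -> int:
    """Total shards for one index pattern: D * P * (1 + R)."""
    return retention_days * primaries * (1 + replicas)


def write_shards(primaries: int, replicas: int) -> int:
    """Shards in the active index that take part in a single _bulk request."""
    return primaries * (1 + replicas)


def query_shards(days_queried: int, primaries: int) -> int:
    """Shards engaged by a query: one copy of each primary per daily index."""
    return days_queried * primaries


# The example from the text: 3 days retained, 3 primaries, 1 replica.
print(total_shards(3, 3, 1))   # 18
print(write_shards(3, 1))      # 6
print(query_shards(3, 3))      # 9
```

Under the post's rule of thumb, the last two numbers are also the vCPUs needed to process one _bulk request and one 3-day query, respectively.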

It gets even more complicated when you add in more data streams and index patterns. For each additional data stream or index pattern, you deploy shards for each of the daily indexes and use vCPUs to process requests in proportion to the shards deployed, as shown in the preceding diagram. When you make concurrent requests to more than one index, each shard for all the indexes involved must process those requests.

Cluster capacity

As the number of index patterns and concurrent requests increases, you can quickly overwhelm the cluster’s resources. OpenSearch Service includes internal queues that buffer requests and mitigate this concurrency demand. You can monitor these queues using the _cat/thread_pool API, which shows queue depths and helps you understand when your cluster is approaching capacity limits.
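As an illustration, the following Python sketch flags thread pools that show queue pressure. It assumes rows shaped like the JSON returned by a request such as `GET _cat/thread_pool/write,search?format=json&h=node_name,name,active,queue,rejected`, where every value arrives as a string. The sample rows, the `busy_thread_pools` helper, and the `queue_warn` threshold are all hypothetical; pick a threshold that suits your own workload.

```python
def busy_thread_pools(rows: list[dict], queue_warn: int = 1) -> list[tuple]:
    """Return (node, pool, queue, rejected) for pools that show pressure.

    A pool is flagged when its queue depth reaches queue_warn or when it
    has rejected any requests.
    """
    flagged = []
    for row in rows:
        queue = int(row["queue"])        # _cat JSON values are strings
        rejected = int(row["rejected"])
        if queue >= queue_warn or rejected > 0:
            flagged.append((row["node_name"], row["name"], queue, rejected))
    return flagged


# Hypothetical sample data in the shape of the _cat/thread_pool JSON output.
sample = [
    {"node_name": "node-1", "name": "write", "active": "2", "queue": "0", "rejected": "0"},
    {"node_name": "node-2", "name": "write", "active": "4", "queue": "37", "rejected": "0"},
    {"node_name": "node-2", "name": "search", "active": "1", "queue": "0", "rejected": "12"},
]

for node, pool, queue, rejected in busy_thread_pools(sample):
    print(f"{node} {pool}: queue={queue} rejected={rejected}")
```

Running a check like this on a schedule gives a simple trend of queue depth per node, which is the signal the rest of this section relies on.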

Another complicating dimension is that the time to process your updates and queries depends on the contents of the updates and queries. As requests come in, the queues are filling at the rate you’re sending them. They’re draining at a rate that’s governed by the available vCPUs, the time they take on each request, and the processing time for that request. You can interleave more requests if those requests clear in a millisecond than if they clear in a second. You can use the _nodes/stats OpenSearch API to monitor average load on your CPUs. For more information about the query phases, refer to “A query, or There and Back Again” on the OpenSearch blog.
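To make the fill and drain rates concrete, here is a deliberately simplified Python model. It assumes each request fans out to a fixed number of shard tasks and that each task occupies one vCPU for a fixed time; real requests vary widely, so treat the numbers as an upper bound for intuition only. Both function names are hypothetical.

```python
def max_requests_per_second(vcpus: int, shards_per_request: int,
                            ms_per_shard_task: int) -> float:
    """Upper bound on the request rate the vCPUs can drain.

    Simplified model: every request creates shards_per_request tasks and
    each task holds one vCPU for ms_per_shard_task milliseconds.
    """
    return vcpus * 1000 / (shards_per_request * ms_per_shard_task)


def queue_growth_per_second(arrival_rate: float, drain_rate: float) -> float:
    """Requests added to the queue each second when arrivals exceed the drain rate."""
    return max(0.0, arrival_rate - drain_rate)


# 6 vCPUs serving _bulk requests that touch 6 shards each.
fast = max_requests_per_second(6, 6, ms_per_shard_task=1)
slow = max_requests_per_second(6, 6, ms_per_shard_task=1000)
print(fast, slow)                         # 1000.0 1.0
print(queue_growth_per_second(5, slow))   # 4.0
```

With millisecond tasks, the same six vCPUs absorb about a thousand requests per second; with one-second tasks, sending just five requests per second already grows the queue by four requests every second.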

If you see the queue depths increasing, you’re moving into a “warning” area, where the cluster is handling load. But if you continue, you can start to exceed the available queues and must scale to add more CPUs. If you start to see load increasing, which is correlated with queue depth increasing, you’re also in a “warning” area and should consider scaling.

Recommendations

For sizing a domain, consider the following steps:

Determine the storage required – Total storage = (daily source data in bytes × 1.45) × (number_of_replicas + 1) × number of days retained. This accounts for the additional 45% overhead on daily source data, broken down as follows:

10% for larger index size than source data.

5% for operating system overhead (reserved by Linux for system recovery and disk defragmentation protection).

20% for OpenSearch reserved space per instance (segment merges, logs, and internal operations).

10% for additional storage buffer (minimizes impact of node failure and Availability Zone outages).
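The storage formula can be sketched in Python as follows. The overhead percentages come from the list above; the `total_storage` function name and the sample inputs (200 GB per day, 14 days, one replica) are hypothetical. The calculation works in whole percentages to avoid floating-point rounding surprises.

```python
# Overhead on daily source data, in percent, as listed above.
OVERHEAD_PERCENT = {
    "index larger than source": 10,
    "operating system": 5,
    "OpenSearch reserved space": 20,
    "additional storage buffer": 10,
}


def total_storage(daily_source: float, replicas: int, retention_days: int) -> float:
    """Total storage required, in the same unit as daily_source."""
    factor_percent = 100 + sum(OVERHEAD_PERCENT.values())  # 145, i.e. x1.45
    return daily_source * factor_percent * (replicas + 1) * retention_days / 100


# Hypothetical workload: 200 GB/day, 1 replica, 14 days retained.
print(total_storage(200, replicas=1, retention_days=14))  # 8120.0 (GB)
```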

Define the shard count – Approximate number of primary shards = storage size required per index / desired shard size. Round up to the nearest multiple of your data node count to maintain even distribution. For more detailed guidance on shard sizing and distribution strategies, refer to “Amazon OpenSearch Service 101: How many shards do I need”. For log analytics workloads, consider the following:

Recommended shard size: 30–50 GB

Optimal target: 50 GB per shard
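A minimal Python sketch of this step follows. The `primary_shard_count` name and the sample index sizes are hypothetical, and how you estimate the storage size per index is left to you as an input.

```python
import math


def primary_shard_count(index_size_gb: float, shard_size_gb: float = 50,
                        data_nodes: int = 1) -> int:
    """Primary shards for one index, rounded up to a multiple of data_nodes."""
    shards = math.ceil(index_size_gb / shard_size_gb)
    # Round up so every data node holds the same number of primaries.
    return math.ceil(shards / data_nodes) * data_nodes


print(primary_shard_count(125, shard_size_gb=50, data_nodes=3))   # 3
print(primary_shard_count(1250, shard_size_gb=50, data_nodes=6))  # 30
```

In the second call, 1,250 GB at 50 GB per shard needs 25 primaries, which rounds up to 30 so that each of the six data nodes holds five.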

Calculate CPU requirements – Recommended ratio is 1.25 vCPU:1 Shard for lower data volumes. Higher ratios are recommended for larger volumes. Target utilization is 60% average, 80% maximum.
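As a rough sketch, the ratio and utilization targets can be expressed in Python as shown below. We assume here that the ratio applies to active shards (the shards of the index currently being written), which the step does not state explicitly. The function names are hypothetical.

```python
import math


def required_vcpus(active_shards: int, vcpu_per_shard: float = 1.25) -> int:
    """vCPUs suggested for the active shards, rounded up to a whole vCPU."""
    return math.ceil(active_shards * vcpu_per_shard)


def within_cpu_targets(avg_percent: float, max_percent: float,
                       avg_target: float = 60, max_target: float = 80) -> bool:
    """True when observed CPU utilization stays inside both targets."""
    return avg_percent <= avg_target and max_percent <= max_target


print(required_vcpus(6))            # 8
print(required_vcpus(60))           # 75
print(within_cpu_targets(55, 78))   # True
print(within_cpu_targets(55, 92))   # False
```

For larger volumes, pass a higher `vcpu_per_shard` value, because 1.25 is the figure recommended for lower data volumes.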

Choose the right instance type – Consider the following based on your nodes:

Let’s look at an example for domain sizing. The initial requirements are as follows:

Daily log volume: 3 TB

Retention period: 3 months (90 days)

Replica count: 1

We make the following instance calculation.
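As a rough sketch only, the formulas from the preceding steps can be applied to these requirements in Python. Several inputs are our assumptions rather than figures from this post: 1 TB is treated as 1,000 GB, each daily index is assumed to be about 10% larger than its source data, and the 1.25 vCPU per shard ratio is applied to active shards even though higher ratios are recommended for larger volumes, so the vCPU figure is best read as a lower bound.

```python
import math

DAILY_SOURCE_GB = 3000        # 3 TB per day, assuming 1 TB = 1000 GB
RETENTION_DAYS = 90
REPLICAS = 1
SHARD_SIZE_GB = 50            # optimal target from the shard sizing step
STORAGE_FACTOR_PERCENT = 145  # source data plus 45% overhead
INDEX_FACTOR_PERCENT = 110    # assumption: index is ~10% larger than source

# Step 1: total storage across all retained days and replicas.
total_storage_gb = (DAILY_SOURCE_GB * STORAGE_FACTOR_PERCENT
                    * (REPLICAS + 1) * RETENTION_DAYS / 100)

# Step 2: primary shards for one daily index (before rounding to node count).
index_size_gb = DAILY_SOURCE_GB * INDEX_FACTOR_PERCENT / 100
primaries_per_day = math.ceil(index_size_gb / SHARD_SIZE_GB)

# Shards in today's index, and D * P * (1 + R) shards overall.
active_shards = primaries_per_day * (1 + REPLICAS)
total_shard_count = RETENTION_DAYS * active_shards

# Step 3: vCPUs at 1.25 per active shard (a lower bound at this volume).
min_vcpus = math.ceil(active_shards * 1.25)

print(f"total storage: {total_storage_gb / 1000:.0f} TB")  # 783 TB
print(f"primaries per daily index: {primaries_per_day}")   # 66
print(f"active shards: {active_shards}")                   # 132
print(f"total shards: {total_shard_count}")                # 11880
print(f"vCPUs (lower bound): {min_vcpus}")                 # 165
```

Choosing the instance type and count from these figures still depends on the storage and vCPUs each instance provides, which this sketch does not model.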

The following table recommends instances, amount of source data, storage needed for 7 days of retention, and active shards based on the preceding guidelines.

| T-Shirt Size | Data (Per Day) | Storage Needed (with 7 Days Retention) | Active Shards | Data Nodes | Primary Nodes |
| --- | --- | --- | --- | --- | --- |
| XSmall | 10 GB | 175 GB | 2 @ 50 GB | 3 * r7g.large.search | 3 * m7g.large.search |
| Small | 100 GB | 1.75 TB | 6 @ 50 GB | 3 * r7g.xlarge.search | 3 * m7g.large.search |
| Medium | 500 GB | 8.75 TB | 30 @ 50 GB | 6 * r7g.2xlarge.search | 3 * m7g.large.search |
| Large | 1 TB | 17.5 TB | 60 @ 50 GB | 6 * r7g.4xlarge.search | 3 * m7g.large.search |
| XLarge | 10 TB | 175 TB | 600 @ 50 GB | 30 * i4g.8xlarge | 3 * m7g.2xlarge.search |
| XXL | 80 TB | 1.4 PB | 2400 @ 50 GB | 87 * i4g.16xlarge | 3 * m7g.4xlarge.search |

As with all sizing recommendations, these guidelines represent a starting point and are based on assumptions. Your workload will differ, and so your actual needs will differ from these recommendations. Make sure to deploy, monitor, and adjust your configuration as needed.

For T-shirt sizing the workloads, an extra-small use case encompasses 10 GB or less of data per day from a single data stream to a single index pattern. A small use case falls between 10–100 GB per day of data, a medium use case between 100–500 GB of data, and so on. The default instance count per domain is 80 for most instance families. Refer to “Amazon OpenSearch Service quotas” for details.
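The size bands can be turned into a small lookup, sketched here in Python. The upper limits follow the daily data volumes in the table above, with 1 TB treated as 1,000 GB (our assumption). The `tshirt_size` helper is hypothetical.

```python
# (size name, upper limit of daily data in GB), smallest first.
TSHIRT_LIMITS_GB = [
    ("XSmall", 10),
    ("Small", 100),
    ("Medium", 500),
    ("Large", 1_000),
    ("XLarge", 10_000),
    ("XXL", 80_000),
]


def tshirt_size(daily_gb: float) -> str:
    """Return the smallest T-shirt size whose daily limit covers daily_gb."""
    for name, upper_gb in TSHIRT_LIMITS_GB:
        if daily_gb <= upper_gb:
            return name
    raise ValueError("daily volume exceeds the largest size in the table")


print(tshirt_size(10))     # XSmall
print(tshirt_size(250))    # Medium
print(tshirt_size(3_000))  # XLarge
```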

Additionally, consider the following best practices:

Conclusion

This post provided comprehensive guidelines for sizing your OpenSearch Service domain for log analytics workloads, covering several critical aspects. These recommendations serve as a solid starting point, but each workload has unique characteristics. For optimal performance, consider implementing additional optimizations like data tiering and storage tiers. Evaluate cost-saving options such as reserved instances, and scale your deployment based on actual performance metrics and queue depths. By following these guidelines and actively monitoring your deployment, you can build a well-performing OpenSearch Service domain that meets your log analytics needs while maintaining efficiency and cost-effectiveness.

About the authors

Harsh Bansal

Harsh is an Analytics and AI Solutions Architect at Amazon Web Services. Bansal collaborates closely with clients, assisting in their migration to cloud platforms and optimizing cluster setups to enhance performance and reduce costs. Before joining AWS, Bansal supported clients in leveraging OpenSearch and Elasticsearch for diverse search and log analytics requirements.

Aditya Challa

Aditya is a Senior Solutions Architect at Amazon Web Services. Aditya loves helping customers through their AWS journeys because he knows that journeys are always better when there’s company. He’s a big fan of travel, history, engineering marvels, and learning something new every day.

Raaga ng

Raaga is a Solutions Architect at Amazon Web Services. Raaga is a technologist with over 5 years of experience specializing in Analytics. Raaga is passionate about helping AWS customers navigate their journey to the cloud.