
Groq, the artificial intelligence inference startup, is making an aggressive play to challenge established cloud providers like Amazon Web Services and Google with two major announcements that could reshape how developers access high-performance AI models.

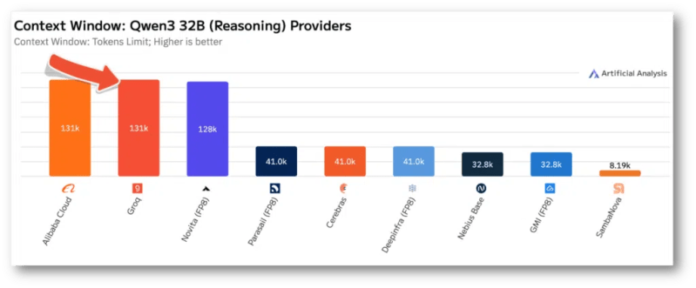

The company announced Monday that it now supports Alibaba’s Qwen3 32B language model with its full 131,000-token context window, a technical capability it claims no other fast inference provider can match. Simultaneously, Groq became an official inference provider on Hugging Face’s platform, potentially exposing its technology to millions of developers worldwide.

The move is Groq’s boldest attempt yet to carve out market share in the rapidly expanding AI inference market, where services like AWS Bedrock, Google Vertex AI and Microsoft Azure have dominated by offering convenient access to leading language models.

“The Hugging Face integration extends the Groq ecosystem providing developers choice and further reduces barriers to entry in adopting Groq’s fast and efficient AI inference,” a Groq spokesperson told VentureBeat. “Groq is the only inference provider to enable the full 131K context window, allowing developers to build applications at scale.”

How Groq’s 131k context window claims stack up against AI inference competitors

Groq’s assertion about context windows, the amount of text an AI model can process at once, strikes at a core limitation that has plagued practical AI applications. Most inference providers struggle to maintain speed and cost-effectiveness when handling large context windows, which are essential for tasks like analyzing entire documents or maintaining long conversations.

Independent benchmarking firm Artificial Analysis measured Groq’s Qwen3 32B deployment running at approximately 535 tokens per second, a speed that would allow real-time processing of lengthy documents or complex reasoning tasks. The company is pricing the service at $0.29 per million input tokens and $0.59 per million output tokens, rates that undercut many established providers.
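To put those figures in concrete terms, here is a minimal back-of-the-envelope sketch in Python. The prices and the 535 tokens-per-second figure come from the paragraph above. The function name, the example token counts, and the decision to treat the measured speed as an output generation rate are assumptions made purely for illustration, and the estimate ignores prompt processing time, network latency and any other fees.

```python
# Rates quoted in the article for Groq's Qwen3 32B deployment.
INPUT_USD_PER_MILLION = 0.29
OUTPUT_USD_PER_MILLION = 0.59

# Assumption: the measured ~535 tokens/second is treated as the output generation rate.
OUTPUT_TOKENS_PER_SECOND = 535


def estimate_request(input_tokens: int, output_tokens: int) -> tuple[float, float]:
    """Return (cost in USD, generation time in seconds) for one request.

    Hypothetical helper for illustration only; not part of any Groq or Hugging Face SDK.
    """
    cost = (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000
    seconds = output_tokens / OUTPUT_TOKENS_PER_SECOND
    return cost, seconds


# Example: a long document that fills most of the 131,000-token window,
# followed by a 2,000-token answer (both token counts are made up).
cost, seconds = estimate_request(input_tokens=120_000, output_tokens=2_000)
print(f"Estimated cost: ${cost:.4f}, generation time: {seconds:.1f}s")
```

Under these assumptions, a request that nearly fills the context window costs a few cents, which is the kind of arithmetic behind the claim that the rates undercut many established providers.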

Groq and Alibaba Cloud are the only providers supporting Qwen3 32B’s full 131,000-token context window, according to independent benchmarks from Artificial Analysis. Most competitors offer significantly smaller limits. (Credit: Groq)

“Groq offers a fully integrated stack, delivering inference compute that’s built for scale, which means we’re able to continue to improve inference costs while also ensuring performance that developers need to build real AI solutions,” the spokesperson explained when asked about the economic viability of supporting massive context windows.

The technical advantage stems from Groq’s custom Language Processing Unit (LPU) architecture, designed specifically for AI inference rather than the general-purpose graphics processing units (GPUs) that most competitors rely on. This specialized hardware approach allows Groq to handle memory-intensive operations like large context windows more efficiently.

Why Groq’s Hugging Face integration could unlock millions of new AI developers

The integration with Hugging Face represents perhaps the more significant long-term strategic move. Hugging Face has become the de facto platform for open-source AI development, hosting hundreds of thousands of models and serving millions of developers monthly. By becoming an official inference provider, Groq gains access to this vast developer ecosystem with streamlined billing and unified access.

Developers can now select Groq as a provider directly within the Hugging Face Playground or API, with usage billed to their Hugging Face accounts. The integration supports a range of popular models including Meta’s Llama series, Google’s Gemma models, and the newly added Qwen3 32B.

“This collaboration between Hugging Face and Groq is a significant step forward in making high-performance AI inference more accessible and efficient,” according to a joint statement.

The partnership could dramatically increase Groq’s user base and transaction volume, but it also raises questions about the company’s ability to maintain performance at scale.

Can Groq’s infrastructure compete with AWS Bedrock and Google Vertex AI at scale

When pressed about infrastructure expansion plans to handle potentially significant new traffic from Hugging Face, the Groq spokesperson revealed the company’s current global footprint: “At present, Groq’s global infrastructure includes data center locations throughout the US, Canada and the Middle East, which are serving over 20M tokens per second.”

The company plans continued international expansion, though specific details were not provided. This global scaling effort will be crucial as Groq faces increasing pressure from well-funded competitors with deeper infrastructure resources.

Amazon’s Bedrock service, for instance, leverages AWS’s massive global cloud infrastructure, while Google’s Vertex AI benefits from the search giant’s worldwide data center network. Microsoft’s Azure OpenAI service has similarly deep infrastructure backing.

However, Groq’s spokesperson expressed confidence in the company’s differentiated approach: “As an industry, we’re just starting to see the beginning of the real demand for inference compute. Even if Groq were to deploy double the planned amount of infrastructure this year, there still wouldn’t be enough capacity to meet the demand today.”

How aggressive AI inference pricing could impact Groq’s business model

The AI inference market has been characterized by aggressive pricing and razor-thin margins as providers compete for market share. Groq’s competitive pricing raises questions about long-term profitability, particularly given the capital-intensive nature of specialized hardware development and deployment.

“As we see more and new AI solutions come to market and be adopted, inference demand will continue to grow at an exponential rate,” the spokesperson said when asked about the path to profitability. “Our ultimate goal is to scale to meet that demand, leveraging our infrastructure to drive the cost of inference compute as low as possible and enabling the future AI economy.”

This strategy of betting on massive volume growth to achieve profitability despite low margins mirrors approaches taken by other infrastructure providers, though success is far from guaranteed.

What enterprise AI adoption means for the $154 billion inference market

The announcements come as the AI inference market experiences explosive growth. Research firm Grand View Research estimates the global AI inference chip market will reach $154.9 billion by 2030, driven by increasing deployment of AI applications across industries.

For enterprise decision-makers, Groq’s moves represent both opportunity and risk. The company’s performance claims, if validated at scale, could significantly reduce costs for AI-heavy applications. However, relying on a smaller provider also introduces potential supply chain and continuity risks compared to established cloud giants.

The technical capability to handle full context windows could prove particularly valuable for enterprise applications involving document analysis, legal research, or complex reasoning tasks where maintaining context across lengthy interactions is crucial.

Groq’s dual announcement represents a calculated gamble that specialized hardware and aggressive pricing can overcome the infrastructure advantages of tech giants. Whether this strategy succeeds will likely depend on the company’s ability to maintain performance advantages while scaling globally, a challenge that has proven difficult for many infrastructure startups.

For now, developers gain another high-performance option in an increasingly competitive market, while enterprises watch to see whether Groq’s technical promises translate into reliable, production-grade service at scale.
