For most people, the face of AI is a chat window. You type a prompt, the AI responds, and the cycle repeats. This conversational model, popularized by tools like ChatGPT, has made AI approachable and flexible. But as soon as your needs become more complex, the cracks start to show.

Chat excels at simple tasks. But when you want to plan a trip, manage a project, or collaborate with others, you find yourself spelling out every detail, reexplaining your intent, and nudging the AI toward what you actually want. The system doesn’t remember your preferences or context unless you keep reminding it. If your prompt is vague, the answer is generic. If you forget a detail, you’re forced to start over. This endless loop is exhausting and inefficient, especially when you’re working on something nuanced or ongoing.

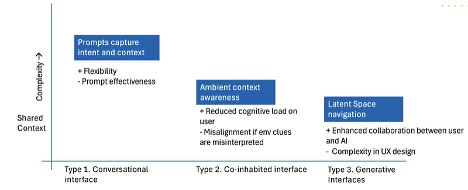

The thing is, what most of us are dealing with right now are really just “Type 1” interfaces: conversational ones. They’re flexible, sure, but they suffer from what we call “prompt effectiveness fatigue.” When planning a complex project or working on something that requires maintaining context across multiple sessions, you have to explain your goals, constraints, and preferences over and over again. It’s functional, but it’s also exhausting.

This got us thinking: What if we could move beyond Type 1? What if interfaces could remember? What if they could think?

The Three Types of Interfaces We’re Actually Building

Here’s what I’ve noticed in my experiments with different AI tools: We’re actually seeing three distinct types of AI interfaces emerge, each with a different approach to handling complexity and shared context.

Type 1: Conversational Interfaces

This is where most of us live right now: ChatGPT, enterprise search systems using RAG, basically anything that requires you to capture your intent and context fresh in every prompt. The flexibility is great, but the cognitive load is brutal. Every conversation starts from zero.

We tested this recently with a complex data analysis project. Each time we returned to the conversation, we had to reestablish the context: what dataset we were working with, what visualizations were needed, what we’d already tried. By the third session, we were spending more time explaining than working.

Type 2: Coinhabited Interfaces

This is where things get interesting. GitHub Copilot, Microsoft 365 copilots, and smaller language models embedded in specific workflows all have ambient context awareness. When we’re using GitHub Copilot, it doesn’t just respond to our prompts. It watches what we’re doing. It understands the codebase we’re working in, the patterns we tend to use, the libraries we prefer. That ambient context awareness means we don’t have to reexplain the basics every time, which reduces the cognitive overload significantly. But here’s the catch: When these tools misinterpret environmental cues, the misalignment can be jarring.

Type 3: Generative Interfaces

This is where we’re headed, and it’s both exciting and terrifying. Type 3 interfaces don’t just respond to your prompts or watch your actions; they actually reshape themselves based on what they learn about your needs. Early prototypes are already adjusting page layouts in response to click streams and dwell time, rewriting CSS between interactions to maximize readability and engagement. The result feels less like navigating an app and more like having a thoughtful personal assistant who learns your work patterns and discreetly prepares the right tools for each task.

Consider how tools like Vercel’s v0 handle this challenge. When you type “create a dashboard with user analytics,” the system processes this through multiple AI models simultaneously: a language model interprets the intent, a design model generates the layout, and a code model produces the React components. The key promise is contextual specificity: a dashboard that surfaces only the metrics relevant to this analyst, or an ecommerce flow that highlights the next best action for this buyer.

The Friction

Here’s a concrete example from my own experience. We were helping a client build a business intelligence dashboard, and we went through all three types of interfaces in the process. Here are the points of friction we encountered:

Type 1 friction: When using this type of interface to generate the initial dashboard mockups, every time we came back to refine the design, we had to reexplain the business context, the user personas, and the key metrics we were tracking. The flexibility was there, but the cognitive overhead was enormous.

Type 2 context: When we moved to implementation, GitHub Copilot understood the codebase context automatically. It suggested appropriate component patterns, knew which libraries we were using, and even caught some styling inconsistencies. But when it misread the environmental cues (like suggesting a chart type that didn’t match our data structure), the misalignment was more jarring than starting fresh.

Type 3 adaptation: The most interesting moment came when we experimented with a generative UI system that could adapt the dashboard layout based on user behavior. Instead of just responding to our prompts, it observed how different users interacted with the dashboard and gradually reshaped the interface to surface the most relevant information first.

Why Type 2 Feels Like the Sweet Spot (for Now)

After working with all three types, we keep coming back to why Type 2 interfaces feel so natural when they work well. Take modern car interfaces: they understand the context of your drive, your preferences, your typical routes. The reduced cognitive load is immediately noticeable. You don’t have to think about how to interact with the system; it just works.

But Type 2 systems also reveal a fundamental tension. The more they assume about your context, the more jarring it is when they get it wrong. There’s something to be said for the predictability of Type 1 systems, even if they’re more demanding.

The key insight from Type 2 systems is that ambient context awareness can dramatically reduce cognitive load, but only if the environmental cues are interpreted correctly. When they’re not, the misalignment can be worse than starting from scratch.

The Trust and Control Paradox

Here’s something I’ve been wrestling with: The more helpful an AI interface becomes, the more it asks us to give up control. It’s a weird psychological dance.

My experience with coding assistants illustrates this perfectly. When an assistant works, it’s magical. When it doesn’t, it’s deeply unsettling. The suggestions look so plausible that we find ourselves trusting them more than we should. That’s the Type 2 trap: Ambient context awareness can make wrong suggestions feel more authoritative than they actually are.

Now consider Type 3 interfaces, where the system doesn’t just suggest code but actively reshapes the entire development environment based on what it learns about your working style. The collaboration potential is enormous, but so is the trust challenge.

We think the answer lies in what we call “progressive disclosure of intelligence.” Instead of hiding how the system works, Type 3 interfaces need to help users understand not just what the interface is doing but why it is doing it. The complexity in UX design isn’t just about making things work; it’s about making the AI’s reasoning transparent enough that humans can stay in the loop.
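One way to picture this idea is an adaptation that always carries its own explanation, revealed in layers as the user asks for more. The Python sketch below is only an illustration under assumptions: the `ExplainedAdaptation` class, its fields, and the three disclosure levels are hypothetical names chosen for this example, not part of any system described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplainedAdaptation:
    """A UI change bundled with its rationale (hypothetical schema)."""
    what: str             # the change the user sees
    why: str              # one-sentence reason, shown on request
    evidence: tuple = ()  # observations behind the reason, shown last

    def disclose(self, level: int) -> str:
        """Return more detail per level: 0 = what, 1 = + why, 2 = + evidence."""
        lines = [self.what]
        if level >= 1:
            lines.append(f"Why: {self.why}")
        if level >= 2:
            lines.extend(f"Evidence: {item}" for item in self.evidence)
        return "\n".join(lines)


change = ExplainedAdaptation(
    what="Moved the sales dashboard to the top of your home screen.",
    why="You open it first on most Tuesday mornings.",
    evidence=("Opened first on 4 of the last 4 Tuesdays",),
)
print(change.disclose(0))  # default view: just the change
print(change.disclose(2))  # expanded view: change, reason, and evidence
```

The design choice worth noting is that the reason and the evidence are stored alongside the change itself, so the interface never has to reconstruct an explanation after the fact.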

How Generative Interfaces Learn

Generative interfaces need what we think of as “sense organs”: ways to understand what’s happening that go beyond explicit commands. This is fundamentally observational learning: the process by which systems acquire new behaviors by watching and interpreting the actions of others. Think of watching a skilled craftsperson at work. At first, you notice the broad strokes: which tools they reach for, how they position their materials, the rhythm of their movements. Over time, you begin to pick up subtler cues.

We’ve been experimenting with a generative UI system that observes user behavior. Let me tell you about Sarah, a data analyst who uses our business intelligence platform daily. The system noticed that every Tuesday morning, she immediately navigates to the sales dashboard, exports three specific reports, and then spends most of her time in the visualization builder creating charts for the weekly team meeting.

After observing this pattern for several weeks, the system began to anticipate her needs. On Tuesday mornings, it automatically surfaces the sales dashboard, prepares the reports she typically needs, and even suggests chart templates based on the current week’s data trends.
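To make the Tuesday-morning pattern concrete, here is a minimal Python sketch of how a recurring habit might be detected from an event log. It is an illustration under assumptions, not the system described above: the `Event` schema, the `habitual_actions` function, and the `min_weeks` and `min_share` thresholds are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """One observed UI action (hypothetical schema)."""
    user: str
    action: str
    timestamp: datetime


def habitual_actions(events, user, weekday, min_weeks=3, min_share=0.75):
    """Return actions `user` performed in at least `min_share` of the
    observed weeks on the given weekday (0 = Monday, 1 = Tuesday, ...)."""
    weeks_seen = set()
    weeks_by_action = defaultdict(set)
    for e in events:
        if e.user != user or e.timestamp.weekday() != weekday:
            continue
        week = tuple(e.timestamp.isocalendar()[:2])  # (ISO year, ISO week)
        weeks_seen.add(week)
        weeks_by_action[e.action].add(week)
    if len(weeks_seen) < min_weeks:
        return []  # too little evidence to call anything a habit
    return sorted(
        action
        for action, weeks in weeks_by_action.items()
        if len(weeks) / len(weeks_seen) >= min_share
    )


# Four consecutive Tuesdays in January 2024 (made-up sample data).
tuesdays = [datetime(2024, 1, day, 9, 0) for day in (2, 9, 16, 23)]
events = [Event("sarah", "open_sales_dashboard", t) for t in tuesdays]
events += [Event("sarah", "export_reports", t) for t in tuesdays[:3]]
events.append(Event("sarah", "open_settings", tuesdays[0]))

print(habitual_actions(events, "sarah", weekday=1))
```

Requiring a minimum number of observed weeks before acting is the code-level version of “after observing this pattern for several weeks”: the sketch stays silent until it has enough evidence.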

The system also noticed that Sarah struggles with certain visualizations: she often tries multiple chart types before settling on one or spends extra time adjusting colors and formatting. Over time, it learned to surface the chart types and styling options that work best for her specific use cases.

This creates a feedback loop. The system watches, learns, and adapts, then observes how users respond to those adaptations. Successful changes get reinforced and refined. Changes that don’t work get abandoned in favor of better alternatives.
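The loop can be sketched as a running score per adaptation that rises when users accept a change and falls when they undo it. The Python below is a simplified illustration, assuming a plain exponential moving average; the `AdaptationTracker` class, the adaptation names, and the numeric thresholds are hypothetical and not taken from the system described here.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptationTracker:
    """Scores UI adaptations by how users respond (hypothetical design)."""
    learning_rate: float = 0.3  # how strongly one response moves the score
    drop_below: float = 0.2     # scores under this are abandoned
    scores: dict = field(default_factory=dict)

    def propose(self, adaptation, initial_score=0.5):
        """Start tracking an adaptation at a neutral score."""
        self.scores.setdefault(adaptation, initial_score)

    def record(self, adaptation, accepted):
        """Move the score toward 1.0 if accepted, toward 0.0 if rejected."""
        if adaptation not in self.scores:
            return  # already abandoned or never proposed
        target = 1.0 if accepted else 0.0
        old = self.scores[adaptation]
        new = old + self.learning_rate * (target - old)
        if new < self.drop_below:
            del self.scores[adaptation]  # abandon a change that is not working
        else:
            self.scores[adaptation] = new

    def active(self):
        """Adaptations still in use, strongest first."""
        return sorted(self.scores, key=self.scores.get, reverse=True)


tracker = AdaptationTracker()
tracker.propose("pin_sales_dashboard")
tracker.propose("auto_dark_mode")
for _ in range(2):
    tracker.record("pin_sales_dashboard", accepted=True)
for _ in range(3):
    tracker.record("auto_dark_mode", accepted=False)
print(tracker.active())
```

With these particular settings, a change needs three consecutive rejections from a neutral start before it is dropped, which keeps a single accidental undo from erasing something the user generally likes.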

What We’re Actually Building

Organizations experimenting with generative UI patterns are already seeing meaningful improvements across diverse use cases. A dev-tool startup we know discovered it could dramatically reduce onboarding time by allowing an LLM to automatically generate IDE panels that match each repository’s specific build scripts. An ecommerce site reported higher conversion rates after implementing real-time layout adaptation that intelligently nudges buyers toward their next best action.

The technology is moving fast. Edge-side inference will push generation latency below perceptual thresholds, enabling seamless on-device adaptation. Cross-app metaobservation will allow UIs to learn from patterns that span multiple products and platforms. And regulators are already drafting disclosure rules that treat every generated component as a deliverable requiring comprehensive provenance logs.

But here’s what we keep coming back to: The most successful implementations we’ve seen focus on augmenting human decision making, not replacing it. The best generative interfaces don’t just adapt; they explain their adaptations in ways that help users understand and trust the system.

The Road Ahead

We’re at the threshold of something genuinely new in software. Generative UI isn’t just a technical upgrade; it’s a fundamental change in how we interact with technology. Interfaces are becoming living artifacts: perceptive, adaptive, and capable of acting on our behalf.

But as I’ve learned from my experiments, the real challenge isn’t technical. It’s human. How do we build systems that adapt to our needs without losing our agency? How do we maintain trust when the interface itself is constantly evolving?

The answer, we think, lies in treating generative interfaces as collaborative partners rather than invisible servants. The most successful implementations I’ve encountered make their reasoning transparent, their adaptations explainable, and their intelligence humble.

Done right, tomorrow’s screens won’t merely respond to our commands; they’ll understand our intentions, learn from our behaviors, and quietly reshape themselves to help us accomplish what we’re really trying to do. The key is ensuring that in teaching our interfaces to think, we don’t forget how to think for ourselves.
